How Well Do Undergraduate Research Programs Promote Engagement and Success of Students?

Abstract

Assessment of undergraduate research (UR) programs using participant surveys has produced a wealth of information about design, implementation, and perceived benefits of UR programs. However, measurement of student participation university wide, and the potential contribution of research experience to student success, also require the study of extrinsic measures. In this essay, institutional data on student credit-hour generation and grade point average (GPA) from the University of Georgia are used to approach these questions. Institutional data provide a measure of annual enrollment in UR classes in diverse disciplines. This operational definition allows accurate and retrospective analysis, but does not measure all modes of engagement in UR. Cumulative GPA is proposed as a quantitative extrinsic measure of student success. Initial results show that extended participation in research for more than a single semester is correlated with an increase in GPA, even after using SAT to control for the initial ability level of the students. While the authors acknowledge that correlation does not prove causality, continued efforts to measure the impact of UR programs on student outcomes using GPA or an alternate extrinsic measure are needed for development of evidence-based programmatic recommendations.

INTRODUCTION

More than a decade ago, the Boyer Commission Report on Reinventing Undergraduate Education (Boyer Commission on Educating Undergraduates in the Research University, 1998) provided a challenge to faculty and administrators at American research universities to infuse undergraduate education with inquiry-driven learning opportunities. The report found research universities failed to provide learning experiences for undergraduate students that instill the basic skills of critical thinking, clear writing, and coherent speaking, and challenged the research universities to utilize the expertise in their graduate and research programs in order to strengthen the undergraduate experience. The first of the recommendations of the Boyer report to “Make Research-based Learning the Standard” stimulated efforts to promote and to evaluate undergraduate education. One such effort is Bio2010: Transforming Undergraduate Education for Future Research Biologists (National Research Council, 2003), which proposed a redesign of the undergraduate curriculum with emphasis on multidisciplinary courses, inquiry-based learning, and participation in undergraduate research (UR). Many universities have increased their UR efforts in recent years, transforming the practice from “a cottage industry to a movement” (Blanton, 2008). Recent works summarize many key findings that have emerged from case studies and surveys and qualitative studies to determine best practices for management of UR programs and whether participation in UR is beneficial for students (Taraban and Blanton, 2008; Lopatto, 2009). Lopatto's cross-institutional study of the benefits of UR, the Summer Undergraduate Research Experience (SURE), included more than 100 institutions and 3000 students and supports the hypothesis that UR promotes gains in skills, self-confidence, pathways to science careers, and active learning (Lopatto, 2004, 2007, 2009). 
Gains in skills, scientific understanding, self-confidence, and commitment to science and research have been reported in numerous studies, including our own (Holt and Kleiber, 2001; Bauer and Bennett, 2003; Seymour et al., 2004; Russell, 2008; Trosset et al., 2008). Participation in research activities was also found to increase retention in science and likelihood of matriculation to graduate school of minority students and women as compared with peers who did not engage in UR (Nagda et al., 1998; Gregerman, 1999; Hathaway et al., 2002; Lopatto, 2004; Bauer and Bennett, 2008; Campbell and Skoog, 2008). Other studies report that faculty also note benefits and satisfaction in working with undergraduate researchers (Chopin, 2002; Zydney et al., 2002; Russell et al., 2007). Further qualitative studies contribute a personal dimension to the survey data through narratives of the students themselves to demonstrate the power of UR (Henne et al., 2008). A case study of a robust UR office provides key insight from the perspective of program administration (Locks and Gregerman, 2008).

These studies, based largely on a combination of participant surveys and qualitative research methods, have produced a wealth of information about design, implementation, and benefits of UR programs, but do not answer all questions about UR. It is important to seek quantitative external measures that may be used collectively to measure the impact of UR experiences on student achievement. Further studies that contribute to the literature about UR in all disciplines and not only the sciences are needed. In this article, we use the Center for Undergraduate Research at the University of Georgia as a model to examine two general questions about UR that are not adequately addressed by survey data but that can be approached using data from institutional research. First, we measure the increase in undergraduate participation in research for the first 10 yr after formation of a university-wide UR program. Second, we explore the use of the grade point average (GPA) as an external measure of the impact of UR on student success.

THE IMPACT OF CURO ON STUDENT ENGAGEMENT

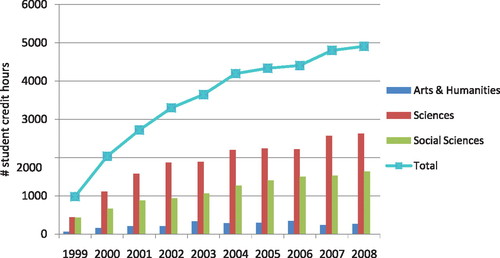

The Center for Undergraduate Research Opportunity (CURO) at the University of Georgia is a unit housed in the honors program, but serving both honors and nonhonors students in all schools and colleges within the university. The primary programs and activities of CURO are highlighted by: a Web-based directory of research mentors; an apprentice program to recruit, engage, and promote development of diverse students during their first 2 yr at the university; Gateway Research Seminars; a summer fellowship program; and an annual university-wide CURO symposium with awards for best student research papers in each discipline and mentoring awards for faculty. These program components have not all been present since inception, but have been added through efforts to institutionalize initiatives that were originally funded by grants from FIPSE (the original CURO program), Howard Hughes Medical Institute (the CURO apprentice program), and the National Science Foundation (the promising scholars program). Efforts to enhance engagement of students in UR not only in science, but across a broad array of disciplines, have been a major focus of CURO since its inception. Measurement of the success of CURO in promoting student engagement is complex in the context of a large university with diverse schools, colleges, departments, and curricula, and different metrics may be expected to provide diverse measures of the engagement. In this essay, we focus on intensive research experiences, and use a definition of UR that invokes the traditional one-faculty-mentor-to-one-student relationship focused on a directed-research project. We have taken advantage of a course nomenclature that is nearly uniform across departments, schools, and colleges to probe institutional data that examine student enrollment in credit-based courses that are specifically designated with a UR call number.
Since some departments group all students taking directed research with multiple professors in a single course per semester, the student credit hours generated provide the most accurate measure of student participation. According to this metric, student engagement in research has increased since the formation of the CURO program by 500% to a level of ∼5000 student credit hours per year in 2008 (Figure 1). An enhancement of 500% university wide in 10 yr is a truly impressive increase! By comparison, the total undergraduate credit hours increased by only 5% in the same period, so the increase in research participation cannot be attributed to a major change in enrollment. The annual credit-hour data show that ∼1400 students take an intensive directed-research course each year at the University of Georgia, corresponding to ∼5% of the undergraduate students at the university.

Figure 1. Student credit hours in directed-research courses at University of Georgia from 1999–2008. Student credit hours in UR courses across all disciplines and honors shown as a total sum, or sorted by discipline. “Sciences” includes the biological and physical sciences. “Social sciences” includes the conventional social sciences as well as business, law, and journalism. Arts and humanities were combined into a single category since they were about equal and together comprise ∼5% of all of the UR hours.

The impact of CURO on engagement of students in research experiences can also be assessed by measuring “capstone” activities, such as presentation at symposia and completion of honors theses. We have tracked research presentations at the UR symposium, authorship of an honors thesis, or attainment of the CURO scholar distinction, which is a permanent transcript notation for students who complete two directed-research courses, present at the UR symposium, and submit an honors thesis (Table 1). We note that while the measures are valid for comparison between years, they are not inclusive, since some departments and colleges maintain their own UR symposia and theses and do not participate in the CURO symposium. Data from the last 10 yr show that participation in the UR symposium has roughly tripled since the first year (68 presenters in 2000) to a level of ∼200 students per year presenting research results at the symposium in 2009. The number of undergraduate theses tripled during the emergence of CURO, and nearly doubled again in the middle of the current decade. Students earning the CURO scholar distinction are, as expected, a subset of students with thesis and CURO presentation, and the number of CURO scholars has grown significantly since the introduction of this recognition in 2002. Thus, student presentations have clearly increased, but the increases are not as large as the increases in student credit hours. Since dissemination is one of the four key elements of UR and has been identified as a key element of the overall experience in surveys of participants (Hakim, 1998; Lopatto, 2009), the results show that we have significant potential to improve by increasing the fraction of students participating in research who present results at the symposium.

| Year | Number of students presenting at CURO symposium<sup>a</sup> | Number of students submitting honors thesis | CURO scholar distinction<sup>b</sup> |
|---|---|---|---|
| 1997 | — | 19 | — |
| 1998 | — | 23 | — |
| 1999 | — | 46 | — |
| 2000 | 68 | 63 | — |
| 2001 | 67 | 68 | — |
| 2002 | 138 | 69 | 6 |
| 2003 | 138 | 106 | 9 |
| 2004 | 115 | 89 | 12 |
| 2005 | 159 | 90 | 16 |
| 2006 | 143 | 104 | 35 |
| 2007 | 191 | 108 | 35 |
| 2008 | 211 | 67 | 35 |
| 2009 | 197 | 69 | 35 |
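
Growth figures such as those quoted for the symposium and for honors theses can be stated either as a fold change or as a percent increase, and the two are easy to conflate. The following is a minimal sketch that recomputes both from the Table 1 counts; the helper names `fold_change` and `percent_increase` and the choice of start and end years are illustrative assumptions, not part of the original analysis.

```python
# Counts copied from Table 1; the choice of start and end years is an assumption.
symposium = {2000: 68, 2009: 197}          # students presenting at the CURO symposium
theses = {1997: 19, 2000: 63, 2007: 108}   # students submitting an honors thesis


def fold_change(start, end):
    """Ratio of the final count to the initial count."""
    return end / start


def percent_increase(start, end):
    """Increase relative to the initial count, in percent."""
    return (end - start) / start * 100.0


symposium_fold = fold_change(symposium[2000], symposium[2009])
symposium_pct = percent_increase(symposium[2000], symposium[2009])
thesis_fold_early = fold_change(theses[1997], theses[2000])
thesis_fold_late = fold_change(theses[2000], theses[2007])

print(f"Symposium 2000-2009: {symposium_fold:.2f}x ({symposium_pct:.0f}% increase)")
print(f"Theses 1997-2000: {thesis_fold_early:.2f}x")
print(f"Theses 2000-2007: {thesis_fold_late:.2f}x")
```

A fold change of about 3 corresponds to a percent increase of about 200%, so stating which convention is used avoids ambiguity when comparing growth across measures.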

Analysis of student participation in UR courses at the University of Georgia in diverse disciplines over 10 yr shows that engagement is most extensive in the sciences followed by the social sciences and then the arts and humanities (Figure 1). The largest and most rapid gains in the sciences occurred in the first 3 yr of operation of CURO, followed by more modest, intermittent increases in subsequent years. These results are in agreement with other studies that report the highest level of participation in the biological and physical sciences (Hu et al., 2007). The social sciences have maintained a slow but steady rate of growth since the inception of CURO, with no tendency to plateau. The arts and humanities have lower levels of student participation, with small but recurring increases in the first 4 yr of CURO, and no substantial growth in the last 5 yr. Since increases in UR participation could be affected by changes in the total number of students and their distribution across disciplines over the last 10 yr, we evaluated the effects of these potential variables on our findings. The results show that: 1) total undergraduate enrollment has increased by ∼7.5% during the study period; 2) science students are fairly constant at 25% of all undergraduates, but account for 50–55% of CURO hours; 3) social science students represent 56% of the total, but account for 33% of CURO hours. We conclude that, in general, the increase in CURO hours is not accounted for by an increase in students or a shift of students by major or discipline.
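
One way to read the enrollment and credit-hour shares above is as a ratio of a discipline's share of CURO hours to its share of undergraduates. The sketch below computes that ratio from the percentages quoted in the text; the term "representation ratio" and the function name are illustrative assumptions rather than a metric used in the original analysis.

```python
# Shares quoted in the text: (fraction of undergraduates, fraction of CURO hours).
# The science range of 50-55% of hours is entered as a low and a high case.
shares = {
    "sciences (low)": (0.25, 0.50),
    "sciences (high)": (0.25, 0.55),
    "social sciences": (0.56, 0.33),
}


def representation_ratio(student_share, hours_share):
    """Share of CURO hours divided by share of students (hypothetical metric).

    Values above 1 mean a discipline generates more research credit hours
    than its enrollment alone would predict; values below 1 mean fewer.
    """
    return hours_share / student_share


ratios = {name: representation_ratio(*pair) for name, pair in shares.items()}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f}")
```

Under these figures the sciences generate roughly twice the research credit hours expected from enrollment alone, while the social sciences generate somewhat more than half.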

The higher levels of participation and strong growth in the sciences likely reflect both the long-standing tradition of some scientists to involve undergraduates in their research, and a community environment with postdoctoral students, doctoral students, and laboratory technicians to share some of the tasks of mentoring. The lower levels of participation but steady rate of growth in social sciences suggest that there is still untapped capacity for mentoring of undergraduates in the scholarship of these disciplines, and that significant additional increases are likely in future years. Traditionally, the work of scholars in the humanities and arts is solitary and the number of undergraduates any one faculty member can mentor is smaller. The data do not show continual gains in undergraduate participation in the arts and humanities. Additional studies of the differences in the rates of participation and growth in these different disciplines would help explain the findings and aid in the development of strategies to optimize participation in the diverse areas of scholarship within the university. This is a fertile area for future research.

It is important to note that the precise definition used to gauge participation in UR will affect the outcome. For example, the Council on Undergraduate Research (CUR) defines research as “an inquiry or investigation, conducted by an undergraduate student that makes an original intellectual or creative contribution to the discipline” (CUR, 2010). This definition is quite broad, and reflects the intellectual content of the scholarly inquiry, rather than the structure or format of the experience. Thus, the CUR definition would include students who volunteer, work for pay, or register for a variety of types of research-based courses, including individual directed-research project courses. In contrast, the current analysis used a narrower definition that included directed-research project courses in the traditional one-faculty-member-to-one-student model, and participation rates are understandably lower. Notably, a recent study that surveyed UR participation at the University of California, Berkeley, found that students doing research in a UR course comprise only 10–14% of all undergraduate students (Berkes, 2008). While we cannot assume that the Berkeley and University of Georgia models are exactly the same, this figure suggests that institutions that use the narrower definition would find that actual participation levels are significantly higher if a broader definition is used. Although we chose to measure only individual research classes, we note that undergraduate classes with larger enrollments are being developed on many campuses, including our own. Shaffer et al. (2010) reported that large classrooms can be used effectively for engaging students in authentic research and that large classes can provide some of the same benefits as the traditional individual research classes. The more specific measure of individual directed-research project courses almost certainly underestimates all undergraduate participation in research.
However, the advantage of this metric is that the activity and level of engagement are better defined, and the institutional data on individual student research courses provide a stable database for multi-year studies in the entire university or within specific disciplines, as shown in the findings previously discussed. Development of a metric for assessing the amount of student engagement in research that can be used for measurement across disciplines and between institutions over time is an ongoing challenge for scholars of UR.

DOES ENGAGEMENT IN RESEARCH PROMOTE STUDENT SUCCESS?

Benefits that accrue to our students are reflected consistently in student-reported assessments and surveys (Taraban and Blanton, 2008; Lopatto, 2009). Extrinsic measures have the potential to provide both a quantitative independent test and significant extension of the findings from these prior studies. As a starting point, we used the cumulative student GPA at graduation as a measure of the effect of participation in CURO, since it is extrinsic, quantifiable, and accessible for all past and present students through institutional records. For this analysis, we selected the cohort of baccalaureate students graduating from the University of Georgia in 2005, corresponding to the midpoint of this study. The GPAs of students taking zero, one, two, or three or more research courses were calculated for all students, and for males and females separately. The results show that students involved in UR (all students taking one or more directed-research courses) had a significantly higher GPA compared with students who did not participate in UR for all students, as well as for males and females separately (Table 2). It seemed possible to explain these results by postulating that higher achieving students both take research courses and have a higher GPA. Such an explanation would be consistent with our findings but would not support the interpretation that engagement in research is a factor contributing to higher scholastic performance. To test this alternative explanation, the SAT score for each student was used as a covariate to account for the effect of academic ability on GPA. Even after correction of the GPAs for student aptitude, the results show statistically significant increases in cumulative GPA for students who enrolled in one or more UR courses for all students, as well as for both males and females, as compared with students who did not engage in research (Table 2).
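
The covariate adjustment described above can be illustrated with a minimal sketch. The essay does not specify its statistical software or the exact model, so everything here is an assumption: a linear model GPA = b0 + b1·(took UR) + b2·(SAT − mean SAT) fitted by ordinary least squares, eight invented student records, and the hypothetical helper names `solve`, `fit_ancova`, and `group_mean`. In the invented data, UR students also have higher SAT scores, so the raw GPA gap overstates the gap that remains at equal SAT.

```python
# Hypothetical records: (took_ur, sat, gpa). All values are invented for illustration.
students = [
    (0, 1000, 2.8), (0, 1100, 2.9), (0, 1200, 3.0), (0, 1300, 3.1),
    (1, 1200, 3.1), (1, 1300, 3.2), (1, 1400, 3.3), (1, 1500, 3.4),
]


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def fit_ancova(records):
    """Fit gpa = b0 + b1*took_ur + b2*(sat - mean_sat) via the normal equations."""
    mean_sat = sum(sat for _, sat, _ in records) / len(records)
    rows = [[1.0, float(ur), sat - mean_sat] for ur, sat, _ in records]
    y = [gpa for _, _, gpa in records]
    k = 3
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve(xtx, xty)


def group_mean(records, ur_flag):
    """Unadjusted mean GPA for one group."""
    values = [gpa for ur, _, gpa in records if ur == ur_flag]
    return sum(values) / len(values)


b0, b1, b2 = fit_ancova(students)
raw_gap = group_mean(students, 1) - group_mean(students, 0)
adjusted_gap = b1  # GPA difference between groups at the same SAT score

print(f"Raw GPA gap: {raw_gap:.3f}")
print(f"SAT-adjusted GPA gap: {adjusted_gap:.3f}")
print(f"Adjusted means at mean SAT: non-UR {b0:.3f}, UR {b0 + b1:.3f}")
```

Because SAT is centered on its overall mean, `b0` and `b0 + b1` are the adjusted group means evaluated at the mean SAT, which is the sense in which a table can report GPA "controlled for SAT".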

All students

| Number of UR courses | Mean cumulative GPA<sup>a</sup> (no control for SAT) | N | SE | Mean cumulative GPA<sup>b</sup> (control for SAT) | N | SE |
|---|---|---|---|---|---|---|
| 0 | 3.185 | 5031 | 0.007 | 3.220 | 4126 | 0.007 |
| 1 or more | 3.382 | 629 | 0.020 | 3.322 | 543 | 0.019 |

Female

| Number of UR courses | Mean cumulative GPA<sup>c</sup> (no control for SAT) | N | SE | Mean cumulative GPA<sup>d</sup> (control for SAT) | N | SE |
|---|---|---|---|---|---|---|
| 0 | 3.277 | 2895 | 0.008 | 3.303 | 2398 | 0.008 |
| 1 or more | 3.496 | 321 | 0.003 | 3.392 | 283 | 0.025 |

Male

| Number of UR courses | Mean cumulative GPA<sup>e</sup> (no control for SAT) | N | SE | Mean cumulative GPA<sup>f</sup> (control for SAT) | N | SE |
|---|---|---|---|---|---|---|
| 0 | 3.061 | 2096 | 0.011 | 3.106 | 1714 | 0.011 |
| 1 or more | 3.262 | 304 | 0.031 | 3.234 | 258 | 0.028 |

To determine whether the duration of engagement in UR has an impact on student outcomes, we examined GPA and GPA controlled for SAT for all students and males and females taking zero, one, two, or three or more UR courses. As shown in Table 3, students who completed more UR courses earned higher GPAs. This increase in GPA based on number of courses was found for all students as well as for males and females separately, and for both the uncorrected and corrected GPAs (Table 3). All pairwise combinations of GPAs were tested for significance to assess the impact of the duration of participation in UR (Table 4). Interestingly, 16 out of 18 pairwise comparisons of the uncorrected GPAs showed statistical significance (Table 4). The GPA of female students taking one UR course did not show a significant difference from those taking two courses, and the GPA of males taking two courses was not significantly different from those taking three or more. For the GPAs controlled for SAT, the pairwise comparisons were significant in 13 out of 18 cases (Table 4). It is noteworthy that nonsignificant comparisons differed by a single UR course (e.g., zero vs. one course, one vs. two courses, or two vs. three or more courses). It is also notable that the comparisons of corrected GPA of students taking zero vs. one research course were nonsignificant for all students and males and females (Table 4).

All students

| Number of UR courses | Mean cumulative GPA<sup>a</sup> (no control for SAT) | N | SE | Mean cumulative GPA<sup>b</sup> (control for SAT) | N | SE |
|---|---|---|---|---|---|---|
| 0 | 3.185 | 5031 | 0.007 | 3.220 | 4126 | 0.007 |
| 1 | 3.296 | 410 | 0.025 | 3.259 | 342 | 0.024 |
| 2 | 3.494 | 155 | 0.038 | 3.393 | 142 | 0.037 |
| 3 or more | 3.662 | 64 | 0.045 | 3.532 | 59 | 0.057 |

Female

| Number of UR courses | Mean cumulative GPA<sup>c</sup> (no control for SAT) | N | SE | Mean cumulative GPA<sup>d</sup> (control for SAT) | N | SE |
|---|---|---|---|---|---|---|
| 0 | 3.276 | 2895 | 0.008 | 3.303 | 2398 | 0.008 |
| 1 | 3.421 | 193 | 0.029 | 3.344 | 164 | 0.032 |
| 2 | 3.552 | 89 | 0.043 | 3.414 | 83 | 0.045 |
| 3 or more | 3.741 | 39 | 0.043 | 3.572 | 36 | 0.068 |

Male

| Number of UR courses | Mean cumulative GPA<sup>e</sup> (no control for SAT) | N | SE | Mean cumulative GPA<sup>f</sup> (control for SAT) | N | SE |
|---|---|---|---|---|---|---|
| 0 | 3.060 | 2096 | 0.011 | 3.106 | 1714 | 0.011 |
| 1 | 3.182 | 213 | 0.037 | 3.172 | 176 | 0.034 |
| 2 | 3.416 | 66 | 0.067 | 3.341 | 59 | 0.059 |
| 3 or more | 3.538 | 25 | 0.088 | 3.435 | 23 | 0.094 |

All students (ANOVA, no control for SAT<sup>a</sup>; ANCOVA, control for SAT<sup>b</sup>)

| Number of UR courses | Statistic | ANOVA: vs. 1 | ANOVA: vs. 2 | ANOVA: vs. 3 or more | ANCOVA: vs. 1 | ANCOVA: vs. 2 | ANCOVA: vs. 3 or more |
|---|---|---|---|---|---|---|---|
| 0 | Mean diff | −0.111 | −0.309 | −0.477 | −0.038 | −0.173 | −0.312 |
|  | SE | 0.025 | 0.039 | 0.045 | 0.025 | 0.038 | 0.058 |
|  | Sig | 0.000 | 0.000 | 0.000 | 0.122 | 0.000 | 0.000 |
| 1 | Mean diff |  | −0.198 | −0.366 |  | −0.134 | −0.274 |
|  | SE |  | 0.045 | 0.051 |  | 0.044 | 0.062 |
|  | Sig |  | 0.000 | 0.000 |  | 0.002 | 0.000 |
| 2 | Mean diff |  |  | −0.167 |  |  | −0.140 |
|  | SE |  |  | 0.059 |  |  | 0.068 |
|  | Sig |  |  | 0.030 |  |  | 0.040 |

Female (ANOVA, no control for SAT<sup>c</sup>; ANCOVA, control for SAT<sup>d</sup>)

| Number of UR courses | Statistic | ANOVA: vs. 1 | ANOVA: vs. 2 | ANOVA: vs. 3 or more | ANCOVA: vs. 1 | ANCOVA: vs. 2 | ANCOVA: vs. 3 or more |
|---|---|---|---|---|---|---|---|
| 0 | Mean diff | −0.145 | −0.276 | −0.465 | −0.041 | −0.111 | −0.270 |
|  | SE | 0.030 | 0.044 | 0.044 | 0.033 | 0.046 | 0.068 |
|  | Sig | 0.000 | 0.000 | 0.000 | 0.210 | 0.015 | 0.000 |
| 1 | Mean diff |  | −0.131 | −0.320 |  | −0.070 | −0.228 |
|  | SE |  | 0.052 | 0.052 |  | 0.540 | 0.074 |
|  | Sig |  | 0.072 | 0.000 |  | 0.199 | 0.002 |
| 2 | Mean diff |  |  | −0.189 |  |  | −0.159 |
|  | SE |  |  | 0.061 |  |  | 0.080 |
|  | Sig |  |  | 0.015 |  |  | 0.049 |

Male (ANOVA, no control for SAT<sup>e</sup>; ANCOVA, control for SAT<sup>f</sup>)

| Number of UR courses | Statistic | ANOVA: vs. 1 | ANOVA: vs. 2 | ANOVA: vs. 3 or more | ANCOVA: vs. 1 | ANCOVA: vs. 2 | ANCOVA: vs. 3 or more |
|---|---|---|---|---|---|---|---|
| 0 | Mean diff | −0.122 | −0.356 | −0.478 | −0.066 | −0.235 | −0.330 |
|  | SE | 0.038 | 0.068 | 0.089 | 0.036 | 0.060 | 0.095 |
|  | Sig | 0.011 | 0.000 | 0.000 | 0.063 | 0.000 | 0.001 |
| 1 | Mean diff |  | −0.234 | −0.356 |  | −0.169 | −0.263 |
|  | SE |  | 0.077 | 0.096 |  | 0.068 | 0.100 |
|  | Sig |  | 0.018 | 0.005 |  | 0.013 | 0.008 |
| 2 | Mean diff |  |  | −0.122 |  |  | −0.094 |
|  | SE |  |  | 0.111 |  |  | 0.111 |
|  | Sig |  |  | 0.860 |  |  | 0.393 |
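
The counts of significant pairwise comparisons quoted in the text can be tallied directly from the Sig values in Table 4. The sketch below does so under one assumption that the essay does not state explicitly: a significance threshold of 0.05 (`ALPHA`). The dictionary layout and function names are illustrative only.

```python
ALPHA = 0.05  # assumed cutoff; the essay does not state the threshold explicitly

# Order of comparisons within each list of Sig values from Table 4.
PAIRS = ["0 vs 1", "0 vs 2", "0 vs 3+", "1 vs 2", "1 vs 3+", "2 vs 3+"]

# Sig values with no control for SAT (ANOVA).
anova = {
    "all": [0.000, 0.000, 0.000, 0.000, 0.000, 0.030],
    "female": [0.000, 0.000, 0.000, 0.072, 0.000, 0.015],
    "male": [0.011, 0.000, 0.000, 0.018, 0.005, 0.860],
}

# Sig values with SAT as a covariate (ANCOVA).
ancova = {
    "all": [0.122, 0.000, 0.000, 0.002, 0.000, 0.040],
    "female": [0.210, 0.015, 0.000, 0.199, 0.002, 0.049],
    "male": [0.063, 0.000, 0.001, 0.013, 0.008, 0.393],
}


def count_significant(table, alpha=ALPHA):
    """Number of pairwise comparisons with Sig below alpha."""
    return sum(p < alpha for values in table.values() for p in values)


def nonsignificant_pairs(table, alpha=ALPHA):
    """List of (group, comparison) entries with Sig at or above alpha."""
    return [
        (group, PAIRS[i])
        for group, values in table.items()
        for i, p in enumerate(values)
        if p >= alpha
    ]


print("Uncorrected:", count_significant(anova), "of 18 significant")
print("SAT-controlled:", count_significant(ancova), "of 18 significant")
print("Nonsignificant after SAT control:", nonsignificant_pairs(ancova))
```

With this cutoff the tallies match the 16 of 18 and 13 of 18 reported in the text, and every nonsignificant comparison involves groups that differ by a single UR course.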

These results confirm and extend prior findings showing that the student-reported GPA of students engaged in research was higher than that of students who did not engage in research (Russell, 2008). Because correlation does not prove causality, additional study is warranted in other groups and larger data sets and for students from different disciplines. There may be other factors that can explain these findings. However, taken at face value, these results indicate that engagement in UR does promote student success, that taking multiple UR courses is better than taking just one, and that taking one course may not be sufficient to have a meaningful effect.

SUMMARY AND PERSPECTIVE

Our investigation of the impact of CURO at the University of Georgia documents clearly that this university-wide center has promoted student engagement and dramatically increased the amount of UR activity as measured by student credit hours, presentations at UR symposia, and theses (Figure 1; Table 1). These gains have been most apparent and continue to accrue in the sciences and social sciences. The differences in student participation across disciplines are striking, and these results provide a foundation for efforts to seek to understand why these differences exist, and the types of interventions that might provide wider student engagement in research in all areas of scholarship in the research university. Further, our analyses of the number of students participating in UR help us to appreciate the multiple definitions that can be used to identify students who participate in inquiry-based learning. Indeed, the percentage of students engaged in UR depends on the breadth of definition and measures used to assess participation. Moreover, the percentage of students presenting at the UR symposia and writing theses continues to lag well behind students enrolling in research courses. Since these capstone activities are ranked highly by students in assessment surveys (Lopatto, 2009), efforts to enhance participation in these capstone projects should be made with specific assessment of the effects of these interventions.

Our analysis of the impact of undergraduate student research on student success revealed a positive effect of research on student performance as measured by the GPA (Tables 2–4). Although our findings provide direct evidence for the value-added dimensions of UR participation, these findings are limited due to the nature of the extrinsic measure and current definition of student success (GPA). Because the sample of students and courses included in this analysis comes from one institution only, we make no attempt to generalize our findings beyond this single institution. We also acknowledge the limitations of GPA as an outcome measure for UR participation. In addition, correlation does not prove causality. However, the combination of additional research-based skills and cognitive enhancements that students report as a result of participating in UR courses (Lopatto, 2009) should be measurable with an external indicator, and does appear to be reflected in a student's higher GPA. The finding that students in our sample group taking multiple research courses show larger gains is particularly significant with regard to program management. Specifically, the results with GPA controlled for student ability as assessed by SAT show that a single UR course does not result in a statistically significant increase in GPA. This finding, if extended and replicated, would support the development of multi-semester curricula, and/or the implementation of additional incentives for students for continued engagement and capstone experiences in research in the form of transcript notation or travel funds, both of which have been used successfully by CURO. Further study is planned to include additional measures of cognitive and/or academic change as well as student perceptions of their UR experience.

Additional incentives for faculty would also enhance opportunities for a larger fraction of students to engage in research, and for more of those students to participate for multiple semesters. Inevitably, capacity in the form of faculty time will limit growth, and it is likely not possible to require that all students at the university engage in a directed-research course for multiple semesters in this traditional format. Yet, students and mentors who find sustained engagement to be mutually beneficial should be strongly encouraged to work together long term, as these relationships appear to have the most positive impacts on research productivity and on the success of individual students. Faculty members are generally appreciative of retaining undergraduates after they have been trained and have attained initial success in the research environment. Sustained engagement appears to create a win–win arrangement.

Further research should examine factors that influence persistence in UR after the initial semester of engagement. In addition, a study of the impact of engagement in the first 2 yr as compared with the second 2 yr of undergraduate study would be enlightening. While the Boyer Commission's challenge to provide every undergraduate with a research experience is admirable, the practicality of such a goal requires more investigation regarding affordances and barriers to a sustained research experience that includes dissemination. Some students will opt to become engaged at a very intense level, while others may opt to engage in less-intensive activities, including all categories in the definition proposed by CUR. Future studies should examine the outcomes and impact on students of these UR activities that provide different levels of engagement.

ACKNOWLEDGMENTS

We thank Ning Wang of the University of Georgia Office of Institutional Research for assistance with retrieval and analysis of institutional data.