Routes to Research for Novice Undergraduate Neuroscientists

Abstract

Undergraduate students may be attracted to science and retained in science by engaging in laboratory research. Experience as an apprentice in a scientist's laboratory can be effective in this regard, but the pool of willing scientists is sometimes limited and sustained contact between students and faculty is sometimes minimal. We report outcomes from two different models of a summer neuroscience research program: an Apprenticeship Model (AM) in which individual students joined established research laboratories, and a Collaborative Learning Model (CLM) in which teams of students worked through a guided curriculum and then conducted independent experimentation. Assessed outcomes included attitudes toward science, attitudes toward neuroscience, confidence with neuroscience concepts, and confidence with science skills, measured via pre-, mid-, and postprogram surveys. Both models elevated attitudes toward neuroscience, confidence with neuroscience concepts, and confidence with science skills, but neither model altered attitudes toward science. Consistent with the CLM design emphasizing independent experimentation, only CLM participants reported elevated ability to design experiments. The present data comprise the first of five yearly analyses on this cohort of participants; long-term follow-up will determine whether the two program models are equally effective routes to research or other science-related careers for novice undergraduate neuroscientists.

INTRODUCTION

The lack of effective “pipelines” and “pathways” from early science and math education to successful science- and math-related careers is well recognized in the U.S. education system (American Electronics Association [AeA], 2005; Teachers College, 2005). At the undergraduate level, only 7% of U.S. degrees are awarded in the natural sciences (National Research Council [NRC], 2003a), and 50% of undergraduates defect from science majors (Seymour, 1995a,b; Seymour and Hewitt, 1997). This concern is most alarming among under-represented minority and female students where the defection rate is proportionately greater (Seymour, 1995b). At the graduate level, only 17% of U.S. advanced degrees are awarded in science, math, or engineering, compared with >30% in other industrialized nations (Snyder, 2003). Moreover, despite recognition that diversity among science and math professionals maintains a competitive edge in the global economy (Wenzel, 2004; Committee for Economic Development [CED], 2006) and enhances some group dynamics (Nemeth, 1985; Milem, 2003), diversity among U.S. science and engineering graduate students remains low, with African Americans at 7%, Hispanics at 6%, and American Indians/Alaskan Natives at <1% (Thurgood, 2004), which is less than one-half of their representation in the U.S. population (Population Division, 2004). Inadequate science and math education reflects and predicts low science literacy across the nation. Declines in science literacy jeopardize scientific advancement, future economic growth, and national security (National Science Board [NSB], 2004; AeA, 2005; Teachers College, 2005; CED, 2006).

In response to such concerns, the U.S. CED and other blue-ribbon panels recommend that educators increase student interest in science by demonstrating the wonder of discovery while helping students master a rigorous science curriculum (NRC, 1996, 2003b; NSB, 2004; CED, 2006). The Center for Behavioral Neuroscience (CBN), an Atlanta-based National Science Foundation Science and Technology Center (NSF STC), aims to recruit and train the next generation of neuroscientists through active education and research programs with special emphasis on increasing under-represented minority and female representation (CBN, 1999). Behavioral neuroscience, a discipline that focuses on the neurobiology of behavior, including how the environment and experience influence the nervous system, is an attractive scientific discipline for recruitment of undergraduates, due to its high relevance in society and its interdisciplinary nature (Moreno, 1999; Cameron and Chudler, 2003). The CBN is also in a favorable position to recruit under-represented minorities; five of eight participating institutions are historically black colleges and universities (HBCUs; Clark Atlanta University, Morehouse College, Morehouse School of Medicine, Morris Brown College, and Spelman College). In addition, other institutions in the center, Georgia State University, Georgia Institute of Technology, and Emory, are national leaders in minority education. Georgia State University has graduated the most African Americans among U.S. institutions other than HBCUs (Meredith, 2002). Georgia Institute of Technology awards the most math and engineering advanced degrees to African Americans (Meredith, 2002); and Emory's 2005 entering freshman class included 31% minorities. Indeed, Table 1 shows high participation by under-represented minority students in past programs sponsored by the CBN. 
These science education and training programs are part of an extensive network of local science education outreach and research programs in Atlanta (Weinburgh et al., 1996; Resources for Involving Scientists in Education, 1998; Carruth et al., 2003, 2004, 2005; Demetrikopoulos et al., 2004; Frantz et al., 2005; Holtzclaw et al., 2005; Totah et al., 2004, 2005; Zardetto-Smith et al., 2004).

| Program | Academic level | Yearly schedule | Sample year | % Under-represented minority applicants (n) | % Under-represented minority participants (n) |
|---|---|---|---|---|---|
| Brains Rule! Neuroscience Exposition | Middle school | 2 d in March | 2004 | NA | ∼99 (180) |
| Brain Camp | Middle school | 1 wk in summer | 2004 | NA | 100 (30) |
| Institute on Neuroscience | High school | 8 wk in summer | 2004 | >33 (81) | 50 (10) |
| Seminar series | Early undergraduate | 16 wk in fall | 2001 | NA | 79 (24) |
| Research internship | Early undergraduate | 11 wk in spring | 2002 | NA | 91 (20) |
| Research apprenticeship | Early undergraduate | 10 wk in summer | 2002 | 64 (102) | 84 (56) |

One strategy to introduce students to the processes of scientific discovery and research is to involve them as apprentices in research laboratories (Barab and Hay, 2001; Avila, 2003; NRC, 2003a; Hofstein and Lunetta, 2004; Wenzel, 2004). Despite the widespread support for involvement of students in research in the form of federal grant programs, national research organizations, and discipline-specific programs (Faculty for Undergraduate Neuroscience, 1991; Council on Undergraduate Research [CUR], 2002; NSF, 2005; National Conferences on Undergraduate Research, 2006), there remains a need for careful investigation of methods for engaging students in laboratory research as well as the effectiveness of research experience at recruiting and retaining students in science- and math-related fields (Alberts, 2005; DeHaan, 2005). In an extensive review, Seymour et al. (2004) analyzed the potential benefits and expected outcomes of many undergraduate research programs and identified several studies that reached justifiable conclusions that undergraduate research can maintain positive attitudes toward science, elevate scientific thinking, and increase research skills. For example, students demonstrated broadened and matured views on the nature of science after a year-long research program (Ryder et al., 1999), and they reported improved efficacy with research skills in another program (Kardash, 2000). In an ethnographic analysis, 91% of 1227 evaluative statements from students in summer research programs described specific program benefits; 58% of the benefits referred to personal–professional growth in confidence, independence, and responsibility in the laboratory, and 57% of the benefits referenced “thinking and working like a scientist” (categories were not mutually exclusive and total >100%; Seymour et al., 2004). 
Moreover, in a survey of students on 41 college campuses, 20 reported learning gains were associated with research experiences, including enhanced understanding of the research process, how to approach scientific problems, and an ability to work independently (Lopatto, 2004).

To extend this line of research, we designed a 10-wk summer research program for undergraduate students and incorporated both quantitative and qualitative assessment instruments. The primary focus of the present report is a quantitative analysis of responses on pre-, mid-, and postprogram surveys, which probed student attitudes toward science, attitudes toward neuroscience, confidence with neuroscience concepts, and confidence with general science skills.

A specific challenge to effective undergraduate research experience may be recruitment of scientists willing to mentor novice undergraduates in summer programs. Even in Atlanta's rich research environment, traditional apprenticeship programs with low student:mentor ratios limit the number of undergraduate students who can participate. Smaller academic communities may have an even more limited pool of available mentors. Given the importance of the specific nature of the research experience for novice scientists (Lawton, 1997; Brooks and Brooks, 1999; NRC, 2000; Barab and Hay, 2001; Lederman et al., 2002; Hofstein and Lunetta, 2004), we asked whether a collaborative, inquiry-based, student-driven research opportunity for undergraduates may be as successful as, if not more successful than, a one-on-one research apprenticeship.

In the present investigation, we compared outcomes from two different program models: 1) a traditional Apprenticeship Model (AM) in which individual students joined established research laboratories in basic science departments to work under individual faculty mentors; or 2) a distinctive Collaborative Learning Model (CLM) in which students worked together in small, student-driven research teams under the guidance of faculty, postdoctoral fellow, and graduate student mentors to design and conduct original experiments, according to a defined but flexible curriculum. We tested the hypothesis that both models for a summer research program in behavioral neuroscience would positively affect attitudes toward science, attitudes toward neuroscience, student confidence with neuroscience concepts, and student confidence with general science skills.

Although research experience typically is not initiated until late in undergraduate careers, early participation in research may attract and retain undergraduates in science before they defect to other majors. Even at the high school level, research experience may provide students with “insider interpretations” of what science is and how scientific inquiry is accomplished (Bell et al., 2003; Totah et al., 2004, 2005; Elmesky and Tobin, 2005), leading to higher retention in science. Moreover, early participation in research may be especially important for recruitment of minorities and females into the sciences (Tapia, 2000; NRC, 2003a; Lopatto, 2004; Elmesky and Tobin, 2005). Therefore, we biased admittance into our program to favor freshman and sophomore undergraduates, under-represented minority students, and females. Further recognizing that student retention may depend on successful, identifiable role models and on enculturation into the profession by effective mentors (Dewey, 1925; Vygotsky, 1980; Milem, 2003; NRC, 2003a,b; Wenzel, 2004), we recruited a diverse group of faculty members and advanced trainees (e.g., postdocs and graduate students) as research mentors and instructors for both program models.

Finally, to provide an opportunity for sustained interaction between students and faculty members, a “Best Practices in Science Education Conference” was held 2 mo after the close of the summer program; all undergraduate students were invited back to meet with science educators and science education researchers. The long-term impact of the summer program and fall follow-up conference will be analyzed for 4 yr through annual surveys.

RESEARCH QUESTIONS

Does a 10-wk undergraduate summer research experience positively affect attitudes toward science, attitudes toward neuroscience, student confidence with neuroscience concepts, and student confidence with general science skills?

Do program outcomes vary between a traditional AM and a CLM?

Do program outcomes vary with gender and ethnicity?

Do long-term program outcomes vary by program model, gender, ethnicity, or attendance at the follow-up conference?

PARTICIPANTS

Student participants were chosen from a pool of 155 applicants recruited through local “recruitment fairs” as well as a national Web site for all NSF STC summer programs. Applicants were initially categorized by the following demographic characteristics: gender, ethnicity, academic year, home institution (metro-Atlanta, out of state), research experience (yes, no), course preparation (biology, psychology, neuroscience, and so on), and grade point average (GPA). To favor admittance of female, under-represented minority, freshman and sophomore students with little research experience but adequate background knowledge from relevant course work, the characteristics were ranked on a weighted scale. Applicants from metro-Atlanta institutions were given priority to increase likelihood of participation in the long-term study over the ensuing 4 yr. A committee then reviewed applications, and 45 applicants were invited to participate; 42 from this group attended, including 40 undergraduate and two postbaccalaureate students. Demographics are given in Table 2.

| Demographics | Summer program (n = 42) | Fall conference (n = 21) |
|---|---|---|
| Gender | Female, 32 (76); male, 10 (24) | Female, 17 (81); male, 4 (19) |
| Under-represented minority | Overall, 19 (45); African American or Black, 17 (40); Hispanic or Latino, 2 (5) | Overall, 9 (43); African American or Black, 7 (33); Hispanic or Latino, 2 (10) |
| Nonminority | Overall, 23 (55); White, 13 (31); Asian American, 10 (24) | Overall, 12 (57); White, 6 (29); Asian American, 6 (29) |
| Academic underclass | Overall, 19 (45); freshman, 9 (21); sophomore, 10 (24) | Overall, 9 (43); freshman, 2 (10); sophomore, 7 (33) |
| Academic upperclass | Overall, 23 (55); junior, 15 (36); senior, 6 (14) | Overall, 12 (57); junior, 8 (38); senior, 4 (19) |
| Location of home institution | Metro-area/in-state, 31 (72); out-of-state, 11 (28) | Metro-area/in-state, 14 (66); out-of-state, 7 (34) |
| Program model | CLM, 11 (28); AM, 31 (72) | CLM, 6 (29); AM, 15 (71) |

For the AM, faculty mentors were recruited to provide research projects in their own neuroscience laboratories. For the CLM, faculty, postdocs, and graduate students were recruited to play a variety of mentoring roles in a single laboratory facility, including direct instruction, teaching, coaching, and advising. The resultant group of 37 mentors for AM and CLM combined (30 faculty, two postdocs, four graduate students, and one middle school teacher on internship) was 43.2% female and 10.8% under-represented minority.

After program acceptance, participants were further divided into AM or CLM groups based on several factors: 1) application statements of interest indicating preference for one model or another; 2) preprogram survey statements of preference in response to a specific question, and 3) verbal statements of preference during the initial two weeks of the program. We sacrificed random assignment to program models in order to maintain participant satisfaction and maximize likelihood of meeting individual goals (and we recognize that interactions between selection of program model and history of the participant may have influenced outcomes). For participants in the traditional AM, participant-mentor matches were facilitated in several ways: 1) Program administrators suggested matches based on statements of interest. 2) Participants read summaries of research, visited Web sites, and consulted publications to determine shared interests. 3) Mentor meetings were facilitated to confirm or deny “fit.” By the end of week 2, participants and AM mentors were matched. Only one participant requested and was granted reassignment, in this case from the AM to the CLM in week 3 of the program.

For the fall follow-up conference, although all summer program participants were invited to return, only 21 (50%) attended. Their demographic profile was nonetheless highly representative of the overall summer program population, indicating that no single subgroup of summer program participants was predisposed to attend the conference (Table 2).

PROCEDURE

Summer Research Program Structure

The 10-wk summer research program was entitled Behavioral Research Advancements in Neuroscience (BRAIN). It consisted of 2 wk of classroom instruction in basic neuroscience, shared by all participants, followed by 8 wk of neuroscience laboratory research in either an AM or CLM group. The first week of classroom instruction addressed cellular and molecular neuroscience and the second week addressed systems and behavioral neuroscience (∼9 am–5 pm, Monday–Friday). In accordance with national best practices in teaching science (NRC, 1996, 2000), the curriculum was designed to combine activities, lectures, and hands-on mini-experiments on a daily basis. For example, lectures on brain anatomy were preceded by guided sheep brain dissections and followed by an assignment to create the brain of a real or imaginary creature using modeling clay to explore which brain structures mediate specific behaviors (Demetrikopoulos et al., 2006). During the subsequent 8 wk, all participants were expected to work 35 h/wk in their laboratory settings, and to submit weekly time sheets signed by research mentors. To increase student awareness of and preparation for science-related careers (Fischer and Zigmond, 2004) and to support national efforts toward ethical scientific conduct (CUR, 2002; NRC, 2003a; Wenzel, 2004; Society for Neuroscience, 2006), all participants also attended weekly 4-h professional development workshops on topics including diversity in science career opportunities, graduate school preparation, stress management, science writing, how to develop effective poster presentations, and scientific ethics. The program culminated in preparation of a written report (in the form of a mini-research proposal or a journal article) as well as preparation of a research poster to be presented and judged at a closing research symposium. On successful completion of requirements early, mid, and late in the program, each participant received a $3000 stipend in $1000 installments.

Participants in the traditional AM (n = 31) joined in new or ongoing research projects in 27 different laboratories at five research institutions and the local zoo. Program administrators exerted no influence over the nature of the research experience. Based on submission of weekly time sheets signed by mentors, participants fulfilled the expectation to conduct research activities for 35 h/wk, but daily schedules were designed individually by participants and mentors as they deemed best fit for the diverse research paradigms, laboratories, and institutions that comprised the apprenticeship experiences. Participants could choose a format for their final written reports (mini-proposal or journal article), and posters were prepared for the symposium. (Resultant poster titles are listed in Supplemental Material 1.)

Participants in the CLM (n = 11) all convened in a single dedicated laboratory (with neighboring seminar rooms and computer laboratory) to engage in various research techniques using an invertebrate animal model (red swamp crayfish, Procambarus clarkii). This species was chosen due to the extensive body of literature available on its cellular and molecular mechanisms of behavior, the relative simplicity of its nervous system, ease of care, and low level Institutional Animal Care and Use Committee oversight. In total, 10 “instructors” were deployed over 8 wk for the CLM (three faculty, two postdocs, four graduate students, and one middle school teacher), with at least three people present at any given time. They led demonstrations and experiments that required participants to use the following techniques: observation of animal behavior, anatomical dissection, histological staining, electrophysiological recording (intracellular and extracellular), RNA extraction from nervous tissue, quantitative polymerase chain reaction (PCR), cDNA synthesis, and gene cloning. During the first 5 wk in the CLM, daily activities generally consisted of 1- to 2-h introductions to new material (via lecture, demonstration, and discussion related to assigned readings), review of protocols, and then initiation of experimentation in self-selected teams of two to three participants with assistance from instructors. Although all research teams used similar research techniques in a given week, their specific research questions were based on individual team interests. For example, Supplemental Material 2 contains a sample weekly experiment guide used by all teams, but the primers designed by individual teams were based on each team's unique research question. During the last 3 wk in the CLM, each team researched, designed, and conducted its own pilot research investigation on a unique topic chosen by team members. 
Instructors and mentors reviewed ideas, read proposed protocols, provided guidance, and assisted with data collection. Weekly seminars facilitated comprehension of peer-reviewed journal articles on crayfish neurobehavioral research. The program culminated with submission of a mini-research proposal based on collected pilot data, and each team prepared a poster for the symposium. (Resultant poster titles are listed in Supplemental Material 2.)

Program Assessment

All data collection was conducted with approval from the Georgia State University Institutional Review Board. Before arrival at the program location, participants were required to complete an online consent form followed by an electronic survey (preprogram survey; Survey Monkey Inc.). Participants then completed two more electronic surveys: a midprogram survey after completion of the 2-wk introductory neuroscience curriculum and a postprogram survey after the closing research symposium. Surveys generally are valid and reliable measures of attitudes, behavior, and values of undergraduate students (Hinton, 1993). Four different inventories were included on all surveys for comparison over the course of the program. Survey completion was tracked by e-mail address and required for receipt of stipends. Identifiers were removed before data analysis to guarantee anonymity. Demographic information was requested on each survey for correlation of gender and ethnicity with responses.

The first two inventories probed participants' attitudes toward science and neuroscience, using formats modified from the Attitudes Toward Science Inventory for science attitudes (Weinburgh and Englehard, 1994; Kaelin, 2004) and from the Student Assessment of Learning Gains (Seymour, 1997; Seymour et al., 2006) for neuroscience attitudes. Positive attitudes have long been recognized as important indicators of enhanced learning experiences (Dewey, 1925; Vygotsky, 1980); positive attitudes toward science increase the likelihood that students will engage in scientific pursuits (for review, see Koballa and Crawley, 1992). Thus, we were interested in student perceptions of their science-related experiences as well as their interest in, appreciation for, and comfort with science and neuroscience. The inventory instructions were as follows: “Mark the answer that best shows how much you agree or disagree with each statement.” This statement was followed by the statements listed in Table 3. A Likert-type scale of six possible responses included “NA” and then ranged from “strongly disagree” to “strongly agree.”

| Science attitudes | Neuroscience attitudes |
|---|---|
| Science is something that I enjoy very much. | I understand the main concepts of neuroscience. |
| I do not do very well in science. | I understand the relationship between concepts in the field of neuroscience. |
| Doing science labs or hands-on activities is fun. | I understand how concepts in neuroscience relate to ideas in other science classes. |
| I feel comfortable in a science class. | I appreciate neuroscience. |
| Science is helpful in understanding today's world. | I can think through a problem or argument in neuroscience. |
| I usually understand what we are talking about in science classes. | I am confident in my ability to do neuroscience. |
| I feel tense when someone talks to me about science. | I feel comfortable with the complex ideas in neuroscience. |
| I often think, “I cannot do this,” when a science assignment seems hard. | I am enthusiastic about studying neuroscience. |
| I would like a job that doesn’t use any science. | I enjoy teaching neuroscience to others. |
| I enjoy talking to other people about science. | |
| It scares me to have to take a science class. | |
| I have a good feeling toward science. | |
| Science is one of my favorite subjects. | |

A third inventory probed participants' perceptions of their own understanding of neuroscience-related concepts, as modified from a Student Assessment of Learning Gains (SALG) instrument (Seymour, 1997; Seymour et al., 2006). According to the SALG developers, students give clear indications regarding what they themselves gain from a learning environment, so the SALG focuses on student perceptions of their own understanding. In another study, student ratings of their own skills matched surprisingly well with mentor ratings of the same skills (Kardash, 2000), supporting the validity of students' perceptions. However, the SALG is intended to assess the effects of specific teaching techniques or classroom activities on student learning, whereas we were interested in preexisting perceptions of understanding before the program and subsequent changes in those perceptions over the course of the program, without attention to specific teaching techniques or activities. So, we probed initial understanding by asking the question, “How well do you think you understand each of the following concepts?” This question was followed by the concepts listed in Table 4. A Likert-type scale of six responses included “NA” and then ranged from “not at all” to “a great deal.” On the midprogram survey, the question was phrased as follows: “You have completed the Orientation Curriculum in neuroscience. Now how well do you think that you understand each of the following concepts?” On the postprogram survey, the question was phrased as follows: “You have completed the Orientation Curriculum in neuroscience and have had the opportunity to apply it to your summer research experience. Now how well do you think that you understand each of the following concepts?”

| Neuroscience concepts | Science skills |
|---|---|
| Anatomy and function of a neuron | Solve problems |
| Anatomy and function of the brain | Write papers |
| The process of neurotransmission | Design laboratory experiments<sup>a</sup> |
| Gene transcription and translation | Develop descriptions, explanations, and predictions based on scientific evidence<sup>a</sup> |
| Neural plasticity | Critically review journal articles |
| Learning and memory | Work effectively with others |
| Pharmacology | Give oral presentations |
| Psychopathology | Give poster presentations<sup>a</sup> |
| Sensory receptor function | Solve problems as a team with other students or colleagues |
| Classical conditioning | Generate testable hypotheses |
| Operant conditioning | Conduct laboratory experiments<sup>a</sup> |
| How an action potential is generated | Think critically and logically about the relationship between evidence and explanations for that evidence |
| Comparisons and contrasts between brains from humans versus other animals | Identify questions that can be answered by scientific inquiry |
| Habituation and sensitization | Talk about scientific procedures and explanations with others |
| Spinal cord anatomy | |
| How animals move | |
| How animals sense stimuli | |
| How animals regulate internal states such as temperature, thirst, and hunger | |
| How mental disorders arise and how they are treated | |
| How drugs affect the nervous system | |

The last inventory probed participants' own perceptions of their abilities to carry out science-related activities. Again, we were interested in preexisting perceptions before the program and subsequent changes over the course of the program. Thus, we made the following request on the pre-, mid-, and postprogram surveys: “Mark the answer that best describes the degree to which you think you can do the part of science listed.” This statement was followed by the task list in Table 4. A Likert-type scale of five responses ranged from “definitely no” to “definitely yes.”

As a means of formative program evaluation, additional survey components addressing activities, lectures, workshops, program administration, and instructors were added to the mid- and postprogram surveys using a learning gains format. Resultant data will shape future program structure.

Follow-up Long-Term Assessment

To evaluate long-term effects of undergraduate summer research on academic and career progress, we will maintain contact with the members of this cohort of undergraduate students and implement annual follow-up surveys. Our first effort to maintain contact consisted of inviting participants back for a fall conference and providing a $200 conference stipend. In future years, students will earn an annual $100 survey stipend by completing online electronic surveys similar to the summer program surveys and by sending academic transcripts and standardized test scores (Graduate Record Examinations, Medical College Admission Test, Dental Admission Test, Law School Admission Test, and so on).

Data Analysis

Data analysis took two general forms. First, responses on individual survey items were assigned a score from 1 to 5, with 1 designated for “strongly disagree,” “not at all,” or “definitely no,” depending on the inventory (science attitudes, neuroscience attitudes, neuroscience concepts, or science skills). When appropriate on the attitudes inventory, scores were inverted so that affinity or positive attitude toward science always received the highest score. To confirm that each inventory demonstrated internal reliability and that individual items could be combined into a composite score, Cronbach's α was calculated on the preprogram survey responses and required to reach 0.7 or greater. (Raw reliability coefficients are reported.) Scores were then summed to calculate a total for each inventory. An “NA” response was not scored, and any blank or “NA” response resulted in dropping that individual from the summed data calculation for that survey (pre-, mid-, or postprogram). If a participant score was dropped from an individual survey, then it was also dropped from the repeated measures analysis. Separately for each inventory, a repeated measures analysis of variance (ANOVA) was conducted with time as the repeated measure (pre-, mid-, postprogram) and three between-subjects factors (gender, ethnicity [nonminority vs. under-represented minority], program model). (Nonminority was defined as Asian American or White, whereas under-represented minority was defined as African American or Black, Latino or Hispanic, or American Indian/Alaskan Native.) In all cases, the Greenhouse–Geisser epsilon correction for possible violation of sphericity was at least 0.7, and univariate analyses are reported. Effect size was estimated using the complete η², and scores are reported for significant main effects or interactions. SPSS software (SPSS, Chicago, IL) was used for analyses.
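The scoring rules in this paragraph can be illustrated with a short Python sketch. It is not the authors' SPSS procedure. The function names, the choice of which item is reverse-scored, and the example responses are all hypothetical. The sketch assumes responses are already coded 1 to 5, with `None` or `"NA"` marking unscored items, inverts negatively worded items as `6 - score`, and computes raw Cronbach's α from the standard formula based on item variances and the variance of the summed score.

```python
from statistics import variance


def score_item(response, reverse=False):
    """Return a 1-5 score, or None for a blank or "NA" response."""
    if response is None or response == "NA":
        return None
    # Invert negatively worded items so a positive attitude scores highest.
    return 6 - response if reverse else response


def score_row(responses, reverse_items=frozenset()):
    """Score every item for one participant on one survey."""
    return [score_item(r, i in reverse_items) for i, r in enumerate(responses)]


def composite_score(responses, reverse_items=frozenset()):
    """Sum the item scores; any blank or "NA" item drops the participant (None)."""
    scores = score_row(responses, reverse_items)
    if any(s is None for s in scores):
        return None
    return sum(scores)


def cronbach_alpha(score_rows):
    """Raw Cronbach's alpha for complete rows of item scores (one row per participant)."""
    k = len(score_rows[0])
    item_vars = [variance([row[i] for row in score_rows]) for i in range(k)]
    total_var = variance([sum(row) for row in score_rows])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical preprogram responses from five participants on a three-item
# inventory; item index 1 is assumed to be negatively worded.
raw = [
    [5, 1, 4],
    [4, 2, 4],
    [2, 4, 3],
    [3, "NA", 5],  # dropped from the summed data because of the "NA"
    [3, 3, 2],
]
reverse = {1}

composites = [composite_score(r, reverse) for r in raw]
complete = [score_row(r, reverse) for r in raw if composite_score(r, reverse) is not None]
alpha = cronbach_alpha(complete)

print(composites)
print(round(alpha, 2), "meets 0.7 criterion:", alpha >= 0.7)
```

In this sketch a participant with any unscored item receives `None` for that survey, which mirrors the rule that such a participant is dropped from both the summed score and the repeated measures analysis.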

In the second mode of analysis, targeted individual questions were analyzed based on theoretical differences between program models. Specifically, the items indicated with superscript letter a in Table 4 were analyzed for the proportion of respondents answering “definitely yes,” the greatest confidence in their ability to carry out the part of science listed. Separate Fisher Exact Tests for Significant Differences in Proportions were conducted: first, across surveys for analysis of total population changes over the course of the program, ignoring program model; second, within program model across surveys for analysis of specific program model changes over the course of the program; and third, across program models within survey (pre-, mid-, or postsurvey).
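As an illustration of this second mode of analysis, the sketch below implements a two-sided Fisher exact test for a 2 × 2 table and applies it to the “design laboratory experiments” counts from Table 6. It assumes that the pre- and postprogram responses are treated as independent samples and uses the common rule of summing all tables no more probable than the observed one; the reported analyses were run in SPSS, so this sketch may not reproduce the exact procedure or p values. The function name is hypothetical.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact p value for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table with the same
    margins that is no more probable than the observed table.
    """
    row1, row2, col1 = a + b, c + d, a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        return comb(row1, x) * comb(row2, col1 - x) / denom

    p_observed = prob(a)
    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    # Small relative tolerance guards against floating-point ties.
    return sum(
        prob(x)
        for x in range(lowest, highest + 1)
        if prob(x) <= p_observed * (1 + 1e-9)
    )


# Counts of "definitely yes" vs. other responses, from Table 6
# CLM: 1 of 11 preprogram, 9 of 11 postprogram
p_clm = fisher_exact_two_sided(1, 10, 9, 2)
# Both models combined: 7 of 42 preprogram, 23 of 40 postprogram
p_all = fisher_exact_two_sided(7, 35, 23, 17)

print(f"CLM pre vs. post: p = {p_clm:.4f}")
print(f"All participants pre vs. post: p = {p_all:.5f}")
```

Under these assumptions, both comparisons fall below the thresholds marked in Table 6 (p < 0.05 for the CLM and p < 0.001 for all participants).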

RESULTS

Attitudes toward Science

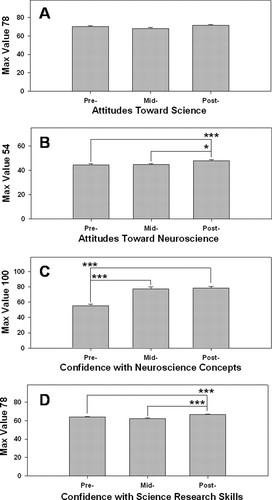

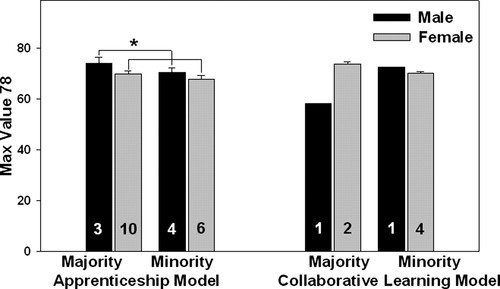

Reliability estimates for the 13 items on the attitudes toward science inventory revealed α = 0.83. Results from pre-, mid-, and postprogram inventories of attitudes toward science were summed to a composite score and subjected to repeated measures ANOVA with time as within-subjects factor and gender, ethnicity, and program as between-subjects factors (Table 5). Average results across all participants demonstrated no main effect of time (Figure 1A), but the three-way interaction between ethnicity × gender × program was significant [F(1,23) = 9.31; p = 0.006] and accounted for 16% of the variability not explained by other factors (η2 = 0.16). A follow-up two-way ethnicity × gender ANOVA on participants in the AM revealed a significant main effect of gender [F(1,19) = 4.50; p = 0.047; η2 = 0.17], such that males showed more positive attitudes toward science than females (Figure 2). Within the CLM, significant main effects of gender [F(1,4) = 22.71; p = 0.009; η2 = 0.27], ethnicity [F(1,4) = 15.3; p = 0.017; η2 = 0.18], and a gender × ethnicity interaction [F(1,4) = 43.59; p = 0.003; η2 = 0.51] revealed that females in the nonminority ethnicity group showed more positive attitudes than males, but no such gender difference existed among under-represented minority students. However, only one male student participated in each of the CLM groups, so no significance is marked on Figure 2. No other follow-up tests were significant.

| | Attitudes toward science | | | | Attitudes toward neuroscience | | | | Confidence with neuroscience concepts | | | | Confidence with science skills | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | SS | df | F | p | SS | df | F | p | SS | df | F | p | SS | df | F | p |
| Time | 100.94 | 2 | 1.80 | 0.18 | 146.90 | 2 | 4.14 | 0.022a | 5390.00 | 2 | 25.71 | <0.001a | 187.22 | 2 | 6.86 | 0.0020a |
| Time × ethnicity | 111.99 | 2 | 1.99 | 0.15 | 41.50 | 2 | 1.17 | 0.32 | 82.59 | 2 | 0.39 | 0.68 | 26.34 | 2 | 0.97 | 0.39 |
| Time × gender | 33.51 | 2 | 0.60 | 0.56 | 10.75 | 2 | 0.30 | 0.74 | 36.92 | 2 | 0.178 | 0.84 | 11.21 | 2 | 0.41 | 0.67 |
| Time × program | 1.45 | 2 | 0.026 | 0.98 | 9.046 | 2 | 0.26 | 0.78 | 266.56 | 2 | 1.27 | 0.30 | 54.85 | 2 | 2.01 | 0.14 |
| Time × ethnicity × gender | 134.46 | 2 | 2.39 | 0.10 | 216.98 | 2 | 6.11 | 0.0040a | 741.65 | 2 | 3.54 | 0.043a | 79.84 | 2 | 2.93 | 0.062 |
| Time × ethnicity × program | 23.42 | 2 | 0.42 | 0.66 | 0.016 | 2 | 0.00 | 1.00 | 56.89 | 2 | 0.27 | 0.76 | 24.50 | 2 | 0.90 | 0.41 |
| Time × gender × program | 58.30 | 2 | 1.038 | 0.36 | 15.16 | 2 | 0.43 | 0.66 | 235.61 | 2 | 1.12 | 0.34 | 30.83 | 2 | 1.13 | 0.33 |
| Time × ethnicity × gender × program | 3.43 | 2 | 0.061 | 0.94 | 0.75 | 2 | 0.021 | 0.98 | 243.61 | 2 | 1.16 | 0.33 | 2.92 | 2 | 0.11 | 0.90 |
| Error | 1292.09 | 46 | | | 923.11 | 52 | | | 2935.24 | 28 | | | 736.46 | 54 | | |
| Ethnicity | 18.76 | 1 | 0.54 | 0.47 | 48.661 | 1 | 0.99 | 0.33 | 4.51 | 1 | 0.025 | 0.88 | 4.26 | 1 | 0.25 | 0.62 |
| Gender | 28.14 | 1 | 0.81 | 0.38 | 109.49 | 1 | 2.22 | 0.15 | 486.26 | 1 | 2.71 | 0.12 | 10.66 | 1 | 0.62 | 0.44 |
| Program | 46.60 | 1 | 1.34 | 0.26 | 50.13 | 1 | 1.02 | 0.32 | 0.002 | 1 | <0.001 | 1.00 | 0.74 | 1 | 0.043 | 0.84 |
| Ethnicity × gender | 220.20 | 1 | 6.31 | 0.019a | 89.77 | 1 | 1.82 | 0.19 | 452.48 | 1 | 2.52 | 0.14 | 9.66 | 1 | 0.57 | 0.46 |
| Ethnicity × program | 229.33 | 1 | 6.57 | 0.017a | 33.39 | 1 | 0.68 | 0.42 | 103.65 | 1 | 0.58 | 0.46 | 72.95 | 1 | 4.27 | 0.049a |
| Gender × program | 339.66 | 1 | 9.73 | 0.005a | 104.11 | 1 | 2.12 | 0.16 | 88.38 | 1 | 0.49 | 0.49 | 42.30 | 1 | 2.47 | 0.13 |
| Ethnicity × gender × program | 324.87 | 1 | 9.31 | 0.006a | 42.34 | 1 | 0.86 | 0.36 | 664.46 | 1 | 3.70 | 0.075 | 40.29 | 1 | 2.36 | 0.14 |
| Error | 802.74 | 23 | | | 1279.7 | 26 | | | 2513.4 | 14 | | | 461.69 | 27 | | |

Figure 1. Summer program responses on inventories probing attitudes toward science (A; n = 31), attitudes toward neuroscience (B; n = 34), confidence with neuroscience concepts (C; n = 22), and confidence with science research skills (D; n = 35), on pre-, mid-, and postprogram surveys. See Tables 3 and 4 for survey statements. Significant results from follow-up t tests after significant main effects of time indicated (∗p < 0.05, ∗∗∗p < 0.001).

Figure 2. Responses on attitudes toward science inventory by gender, ethnicity, and model groups. Significant main effect of gender indicated (∗p < 0.05) and respondents per group shown in column. Significance not indicated for CLM due to n = 1 for male groups.
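The F ratios and effect sizes reported in the text can be cross-checked against the sums of squares in Table 5. The sketch below assumes that “complete η2” means the classical ratio of the effect sum of squares to the total sum of squares (all effects plus error) within the same within- or between-subjects section of the table; the text does not define the statistic, so this reading is an assumption, although it reproduces the reported values. The function and variable names are hypothetical.

```python
def f_ratio(ss_effect, df_effect, ss_error, df_error):
    """F = (SS_effect / df_effect) / (SS_error / df_error)."""
    return (ss_effect / df_effect) / (ss_error / df_error)


def eta_squared(ss_effect, all_ss):
    """Classical eta squared: SS_effect divided by the total SS of the section."""
    return ss_effect / sum(all_ss)


# Between-subjects sums of squares, attitudes toward science (Table 5)
science_between = {
    "ethnicity": 18.76,
    "gender": 28.14,
    "program": 46.60,
    "ethnicity x gender": 220.20,
    "ethnicity x program": 229.33,
    "gender x program": 339.66,
    "ethnicity x gender x program": 324.87,
    "error": 802.74,
}
f_three_way = f_ratio(324.87, 1, 802.74, 23)
eta_three_way = eta_squared(324.87, science_between.values())

# Within-subjects sums of squares, confidence with neuroscience concepts (Table 5)
concepts_within = [5390.00, 82.59, 36.92, 266.56, 741.65,
                   56.89, 235.61, 243.61, 2935.24]  # last entry is the error term
f_time = f_ratio(5390.00, 2, 2935.24, 28)
eta_time = eta_squared(5390.00, concepts_within)

print(f"Ethnicity x gender x program: F = {f_three_way:.2f}, eta2 = {eta_three_way:.2f}")
print(f"Time (neuroscience concepts): F = {f_time:.2f}, eta2 = {eta_time:.2f}")
```

Under this assumption the sketch recovers F(1,23) = 9.31 with η2 = 0.16 for the three-way interaction on attitudes toward science, and F(2,28) = 25.71 with η2 = 0.54 for the main effect of time on confidence with neuroscience concepts.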

Attitudes toward Neuroscience

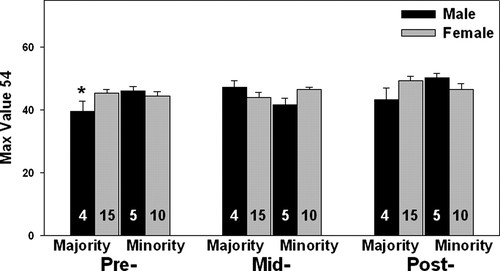

Reliability estimates for the nine items on the attitudes toward neuroscience inventory revealed α = 0.89. Results from inventories of attitudes toward neuroscience were summed to a composite score and subjected to repeated measures ANOVA with time as within-subjects factor and gender, ethnicity, and program as between-subjects factors (Table 5). Average results across all participants demonstrated a significant main effect of time [F(2,52) = 4.14; p = 0.022; Figure 1B] accounting for 11% of the variability in scores not explained by other factors (η2 = 0.11), and follow-up t tests revealed that postprogram survey attitudes were significantly elevated above both pre- and midprogram attitudes. Also, a significant three-way time × ethnicity × gender interaction [F(2,52) = 6.11; p = 0.0040] accounted for 16% of the variability not explained by other factors (η2 = 0.16) and led to follow-up two-way ethnicity × gender ANOVAs at each time point (pre-, mid-, and postprogram). A significant ethnicity × gender interaction was revealed preprogram [F(1,30) = 4.77; p = 0.037; η2 = 0.12] and postprogram [F(1,30) = 4.63; p = 0.040; η2 = 0.13], but a midprogram trend toward interaction did not reach significance [F(1,30) = 3.72; p = 0.063; η2 = 0.11]. Main effects of ethnicity and gender were not significant on any of these two-way ANOVAs. An independent samples t test between male and female participants in the nonminority ethnicity group showed significantly lower attitudes toward neuroscience among nonminority males on the preprogram survey, but no other t tests were significant (Figure 3).

Figure 3. Responses on attitudes toward neuroscience inventory by gender, ethnicity, and time. Significant effect of gender among nonminority participants on the preprogram survey indicated (∗p < 0.05) and respondents per group shown in column.

Confidence with Neuroscience Concepts

Reliability estimates for the 20 items on the neuroscience concepts inventory revealed α = 0.91. Results from the neuroscience concepts inventory were summed to generate a composite score and subjected to repeated measures ANOVA with time as within-subjects factor and gender, ethnicity, and program as between-subjects factors (Table 5). Average results across all participants demonstrated a significant main effect of time [F(2,28) = 25.71; p < 0.001; Figure 1C] that accounted for 54% of the variability not explained by other factors (η2 = 0.54). Follow-up t tests revealed that both mid- and postprogram survey confidence levels were significantly elevated above preprogram confidence. Also, a significant three-way time × ethnicity × gender interaction [F(2,28) = 3.54; p = 0.043; η2 = 0.07] led to follow-up two-way ANOVAs on ethnicity × gender at each time point as well as ethnicity × time for each gender and gender × time for each ethnicity group. However, none of these two-way ANOVAs revealed significant interactions and individual group data are not reported. Nonetheless, significant main effects of time on the ethnicity × time two-way ANOVAs for male [F(2,10) = 14.25; p = 0.001; η2 = 0.61] and female [F(2,26) = 17.44; p < 0.0001; η2 = 0.57] participants confirmed the overall significant elevation in confidence with neuroscience concepts over the course of the program, as did significant main effects of time on the gender × time two-way ANOVAs for nonminority [F(2,28) = 24.93; p < 0.0001; η2 = 0.59] and under-represented minority participants [F(2,8) = 9.64; p = 0.007; η2 = 0.66]. The gender × time interaction among nonminority participants demonstrated a trend toward significance [F(2,28) = 3.25; p = 0.054; η2 = 0.08] and suggested that on the pre- and postprogram survey but not midprogram survey, nonminority females were more confident with neuroscience concepts than their male counterparts. Another trend toward significant ethnicity × time interaction was demonstrated among male participants only [F(2,10) = 3.88; p = 0.057; η2 = 0.17] and suggested that nonminority males were more confident than under-represented minority males on the mid- but not pre- or postprogram surveys.

Confidence with Science Skills

Reliability estimates for the 14 items on the science skills inventory revealed α = 0.76. Results from pre-, mid-, and postprogram inventories of confidence with science skills were summed to a composite score and subjected to repeated measures ANOVA with time as within-subjects factor and gender, ethnicity, and program as between-subjects factors (Table 5). Average results across all participants demonstrated a significant main effect of time [F(2,54) = 6.86; p = 0.0020; Figure 1D] accounting for 16% of the variability not explained by other factors (η2 = 0.16). Follow-up t tests revealed that postprogram survey confidence was greater than pre- and midprogram confidence levels. Also, the two-way interaction of ethnicity × program reached statistical significance [F(1,27) = 4.27; p = 0.049; η2 = 0.12], but follow-up independent samples t tests did not reveal significant differences between individual groups.

Participants' abilities to design laboratory experiments were hypothesized to be different between the AM and CLM because the skill was explicitly taught in the CLM, especially in the last 3 wk, but not necessarily in the AM. Therefore, the proportion of participants responding “definitely yes” was compared between time points (pre-, mid-, and postprogram surveys) and between models (Table 6). The pre- versus postprogram difference was significant for the CLM (p < 0.05) but not the AM. Moreover, when data were averaged across program models, more participants responded “definitely yes” postprogram than preprogram (p < 0.001). Three other individual statements were evaluated similarly but did not show significant differences (Table 6).

| | Pre-: CLM | Pre-: AM | Mid-: CLM | Mid-: AM | Post-: CLM | Post-: AM |
|---|---|---|---|---|---|---|
| Conduct laboratory experiments | 9/11(82) | 25/31(81) | 7/10(70) | 22/30(73) | 9/11(82) | 23/29(79) |
| (CLM and AM combined) | 34/42(81) | | 29/40(73) | | 32/40(80) | |
| Give poster presentations | 6/11(55) | 16/31(52) | 6/10(60) | 8/30(27) | 9/11(82) | 26/29(90) |
| (CLM and AM combined) | 24/42(57) | | 14/40(35) | | 35/40(88) | |
| Develop descriptions, … | 6/11(55) | 18/31(58) | 3/10(30) | 14/30(47) | 9/10(90) | 19/29(66) |
| (CLM and AM combined) | 24/42(57) | | 17/40(43) | | 28/39(72) | |
| Design laboratory experiments | 1/11(9)* | 6/31(19) | 2/10(20) | 9/29(31) | 9/11(82)* | 14/29(48) |
| (CLM and AM combined) | 7/42(17)*** | | 11/39(28) | | 23/40(58)*** | |

DISCUSSION

Outcomes from this 10-wk summer neuroscience research program indicated that a traditional AM and a CLM were equally successful in elevating attitudes toward neuroscience, confidence with neuroscience concepts, and confidence with science research skills, but neither model changed student attitudes toward science over the course of the program. The application review process generated the intended diverse participant population for the program (Table 2), and a lack of robust differences between ethnicity, gender, or program model groups suggests that participants with diverse backgrounds and different program experiences made similar gains. A follow-up science education conference was attended by an equally diverse population of undergraduate participants (Table 2), supporting the conclusion that the summer program reinforced interest in science and education among all subgroups. The present data comprise the first of five yearly analyses on this cohort of participants.

Although the Attitudes Toward Science inventory did not show significant gains over the course of the program (Figure 1), the average score of 70.26 out of 78.00 on the preprogram survey indicates a pre-existing affinity toward science among these undergraduates, leaving little room for improvement. This raises the question of how best to recruit students for science education research projects. Students who self-identify an interest in science and proactively apply for summer research experience may be most likely to participate fully in program activities, but they may not provide an ideal population to demonstrate attitude gains in a science education research project. However, random recruitment of undergraduate students to programs in which they have no pre-existing interest seems unlikely to succeed by measures of elevated interest or skill.

The lower affinity toward science reported by nonminority females (Figure 2) reinforces the oft-stated importance of involving girls effectively in laboratory apprenticeships. In contrast, attitudes toward neuroscience did show significant elevation in the entire participant group, by the time of the postprogram survey but not the midprogram survey. This suggests that the introductory curriculum alone was not sufficient to change attitudes toward neuroscience and that the research component of the program was the major contributor. Some students commented that they gained appreciation for neuroscience, despite the fact that they learned they did not want to pursue research. For example, “My goal was just to find out if research was for me. I found out that it was not, but I appreciate the field.” We predict that information aimed to increase awareness of nonresearch careers in science will be especially beneficial for students like these who do not want to pursue research. Lower attitudes toward neuroscience among nonminority males compared with nonminority females at the start of the program quickly dissolved, due to elevation of attitudes among nonminority males after the introductory curriculum. Given that positive attitudes are thought to predict better academic performance and higher involvement in school-related activities (Dewey, 1925; Vygotsky, 1980; Koballa and Crawley, 1992; Weinburgh and Englehard, 1994), the overall elevation in attitudes toward neuroscience predicts increased retention in neuroscience or related fields for most participants of both genders and all ethnic groups but particularly for the nonminority males who showed lower initial attitudes.

Confidence with neuroscience concepts increased significantly between the pre- and midprogram surveys but did not elevate further by the postprogram survey. Thus, the introductory curriculum may have been largely responsible for students' perceptions of their learning gains. However, an important limitation of the present results is the lack of an objective test of content knowledge. Insofar as student estimates of learning gains with neuroscience concepts parallel their estimates of gains in science process skills, a previously reported match between student and mentor estimates of science process skills suggests that students “know how much they know” (Kardash, 2000). Nonetheless, future programs could examine content knowledge before and after the program for a more objective analysis of learning.

Confidence with science skills increased significantly between the mid- and postprogram surveys, thereby indicating that the laboratory research portion of the program elevated student perceptions of their abilities to conduct science. Moreover, the finding that participants in the CLM reported significant gains in their abilities to design lab experiments, whereas apprenticeship participants did not (Table 6), supports our hypothesis that the CLM was at least as effective as the AM, if not more effective. Generating scientific questions was emphasized in the CLM, because participants were specifically requested to study background literature, design their own experiments, and then conduct preliminary data collection and analysis. In contrast, we suspect that many apprentices carried out predetermined or ongoing experiments, as is common in short-term apprenticeships (Barab and Hay, 2001).

Contrary to predictions, however, there was no difference across program models in perceived ability to “develop descriptions, explanations, and predictions based on scientific evidence.” Although this was another pedagogical emphasis in the CLM and we hypothesized it would be better developed among CLM compared with AM participants, we made no request of mentors in the AM to emphasize or de-emphasize it. Therefore, it may have been imparted equally to most participants.

Retention and success in advanced degree programs or careers in science have been related to science skills and research experience (Nnadozie et al., 2001; Hathaway et al., 2002; NRC, 2003b, 2005), so the overall elevation in perceived ability to conduct science that resulted from this program, with no compelling difference across ethnicity, gender, or program model, suggests that both program models increased the likelihood of retention and success in science for participants from varied backgrounds. As noted above with regard to neuroscience concept knowledge, future programs would benefit from additional objective assessments of science process skills that were not included in the present effort (e.g., laboratory practical examinations).

A limitation of note in this study is that not only did participants self-select for interest in science but also they had the opportunity to choose their preferred program model. We may not have observed significant program model effects because students chose the model that fit their own learning styles appropriately. All participants may have made similar learning gains as a result. This assertion is supported by open-ended responses on the preprogram survey, indicating that students had ideas regarding which model would be most effective for them. For example, one CLM participant stated “I would rather work with a small research team because I will be involved in all parts of the research from the beginning to the end. It will be interesting and fun to create my own project and view its outcome.” Along these lines, students had preconceived notions regarding the experience they expected from the program, and these notions supported our choice to take student preference into account when assigning program models. Nonetheless, future programs may need to sacrifice student expectations to gain random assignment and more nearly equal-sized groups in the two models.

Another limitation is that 10 mentors served in the CLM for 11 student participants, despite our goal to investigate whether a higher student:mentor ratio in a CLM compared with an AM is equally beneficial for students. This programmatic concern does not detract from our overall conclusion for at least two reasons. First, only three CLM mentors were present on a typical day. Second, the majority of mentors were postdocs and graduate students. Thus, a single lead faculty mentor could hire or recruit several postdocs or graduate students to run a CLM research experience and thereby increase the number of undergraduate students who can benefit from the input of a single faculty member.

The conclusion that this program effectively maintained interest in science and elevated interest and confidence in neuroscience and research skills is supported by qualitative responses on the postprogram survey. Participants were requested to “list your goals for summer 2005 and describe whether or not the BRAIN program helped you reach those goals.” Of the 41 responses, 36 were categorized as indicating that the program had been helpful in reaching individual goals. In the following example, several typical goals are stated in a single response: “–To gain hands-on experience in doing research work. –Learn how to design an experiment to answer specific questions and hypotheses on a topic. –Develop good connections with people in research positions that could help me with future research experiences. –Gain a better understanding of biology, neuroscience. During my time in the lab and with the BRAIN [program] orientation, I have accomplished and exceeded all my goals.” The lack of robust differences between program models suggests that a collaborative learning-type experience in a single, dedicated laboratory that is led mainly by postdoc and graduate student mentors can provide benefits to undergraduates that are equal to a research apprenticeship in an individual faculty mentor's laboratory. Outcomes were similar across models, with the exception of designing laboratory experiments, a difference that favored the CLM.

Of final note, the present report does not address the long-term outcomes from undergraduate research experience, which are important for determining the ultimate value of summer research programs. However, the present data set comprises only the first of five yearly assessments. Future surveys will probe attitudes toward science, attitudes toward neuroscience, and academic or career progress. Completed surveys accompanied by academic transcripts and standardized test scores will enable us to determine whether this cohort of students maintains interest in science or neuroscience and chooses science-related academic or career paths. Resultant data will determine whether AMs or CLMs specifically, and summer undergraduate research programs generally, serve as effective routes to research careers or other science careers for novice undergraduate neuroscientists.

ACKNOWLEDGMENTS

We thank Dr. N. R. Hanna for help with program design and J. Pecore for advice on data analysis and interpretation. We also thank the CBN Undergraduate Education Committee for reviewing program applications, all summer program mentors for facilitating research experiences for undergraduates, and Ericka Reid and Adah Douglas-Cheatham for program administration. This research project was supported by NSF STC Grant 002865-GSU through the University of California, Davis (to K.J.F.). The summer undergraduate research program and follow-up analyses are also supported by NSF through the CBN Grant IBN-9876754.