Infusing Quantitative Literacy into Introductory Biology

Abstract

Biology of the twenty-first century is an increasingly quantitative science. Undergraduate biology education therefore needs to provide opportunities for students to develop fluency in the tools and language of quantitative disciplines. Quantitative literacy (QL) is important for future scientists as well as for citizens, who need to interpret numeric information and data-based claims regarding nearly every aspect of daily life. To address the need for QL in biology education, we incorporated quantitative concepts throughout a semester-long introductory biology course at a large research university. Early in the course, we assessed the quantitative skills that students bring to the introductory biology classroom and found that students had difficulties in performing simple calculations, representing data graphically, and articulating data-driven arguments. In response to students' learning needs, we infused the course with quantitative concepts aligned with the existing course content and learning objectives. The effectiveness of this approach is demonstrated by significant improvement in the quality of students' graphical representations of biological data. Infusing QL in introductory biology presents challenges. Our study, however, supports the conclusion that it is feasible in the context of an existing course, consistent with the goals of college biology education, and promotes students' development of important quantitative skills.

INTRODUCTION

Recently, scientists have directed much attention to revising undergraduate biology curricula in ways that better reflect the tools and practices of science (National Research Council [NRC], 2003; American Association for the Advancement of Science, 2009). Introductory biology courses traditionally focus on delivery of specific content, with little attention to promoting the practice of quantitative skills used by scientists within those content domains. Of the thousands of undergraduate students who crowd large-enrollment introductory biology courses, only a small fraction will pursue a career in the biological or medical sciences. These future biologists and physicians need to develop fluency in the quantitative tools and language used in interdisciplinary science (Gross, 2000; Association of American Medical Colleges [AAMC] and Howard Hughes Medical Institute, 2009; NRC, 2009; Labov et al., 2010). They will likely need to apply sophisticated integrative and quantitative approaches to generate research questions, analyze and interpret evidence, develop models, and generate testable predictions (NRC, 2003, 2009). At the same time, all introductory biology students are citizens and members of society. Nonscientists and scientists alike are confronted daily with scientific and pseudoscientific claims based on quantitative measures regarding their health, environment, education, and more. All need the tools to interpret numeric information and the ability to apply those tools in making reasoned decisions (Tritelli, 2004).

Quantitative literacy (QL), also known as numeracy, is a “habit of mind,” the skill of using simple mathematical thinking to make sense of numerical information (National Council on Education and the Disciplines, 2001). Although mathematics is often abstract, QL is contextualized: it refers to the ability to interpret data and to reason with numbers within “real-world” situations (Steen, 2004). The Association of American Colleges and Universities (AACU) included QL among the few key outcomes that all students, regardless of field of study, should achieve during their college education (AACU, 2005). Institutions such as James Madison University and Michigan State University include quantitative reasoning as one of several general learning goals for all their students (complete lists are available at www.jmu.edu/gened/cluster3.shtml and http://undergrad.msu.edu/outcomes.html, respectively). Through institution-wide assessment programs, these universities are measuring the scientific and quantitative reasoning skills of all students at the beginning and the end of their liberal learning curriculum (Barry et al., 2007; Ebert-May et al., 2009). Such initiatives promise to yield data that will inform the broader academic community about the level of QL among our undergraduates as they enter and leave college.

Although some colleges have established programs and courses devoted to teaching and learning quantitative reasoning, most universities do not have an explicit plan for helping students gain QL skills during their undergraduate studies. QL is not a discipline in and of itself; therefore, it is unclear who is responsible for teaching QL. In addition, standards for QL assessment are not yet clearly defined at the institutional or curricular level. Translating broad institutional learning goals into specific learning objectives in the context of individual courses is critical for identifying what kind of evidence demonstrates QL achievement (Ebert-May et al., 2010).

Making QL a Goal of Biology Courses

Currently, most undergraduate biology students take several semesters of required courses in mathematics, physics, and chemistry in addition to the high school mathematics courses needed for admission to college. This background, however, is not an accurate predictor of students' ability to reason quantitatively about biology (Bialek and Botstein, 2004; Hoy, 2004). Because QL is the ability to apply mathematics in a specific context or discipline, the biology curriculum is one natural “place” where students should practice applying quantitative thinking about biological problems. Accordingly, advocates for the reform of introductory biology suggest developing new interdisciplinary courses that integrate the traditionally quantitative disciplines with biology (Bialek and Botstein, 2004). Others argue that quantitative concepts should be incorporated into existing biology courses throughout the entire curriculum, including general introductory biology courses (NRC, 2003; Gross, 2004; Hodgson et al., 2005; Yuan, 2005). In either case, attending to the quantitative reasoning needs of undergraduates is an issue requiring commitment and coordination at the institutional as well as course level (Hoy, 2004).

Motivated by the widespread consensus that QL is an important learning outcome for all students, we sought to embed quantitative thinking within an introductory-level biology course. In this article, we illustrate how a team of instructors infused quantitative concepts into the existing framework of a large-enrollment Introductory Biology course for science majors. Specifically, we describe the following:

The learner-centered instructional design we used to support the inclusion of QL concepts in our introductory biology course.

Our approach to rapidly assess students' QL abilities.

Evidence that students significantly improved in their ability to graphically represent quantitative data throughout the course.

We focused our intervention on basic quantitative skills that biologists routinely apply in their practice, which include representing and interpreting data, and articulating data-based arguments. The construction and evaluation of scientific arguments is particularly well suited for applying quantitative reasoning (Lutsky, 2008). We adopted, for this purpose, a simplified view of arguments, derived from the classic model proposed by Toulmin (1958): an argument is the statement of a “claim” supported by “evidence,” where the reasoning or justification leading from evidence to claim is referred to as “warrant” (Toulmin et al., 1984; Booth et al., 2008; Osborne, 2010). Students in our course often worked on problems presenting biological data (evidence) and were asked to articulate conclusions (claims) based on such evidence, and to provide appropriate reasoning (warrants) in support of their claims.

METHODS

Course Description

We conducted this study at a research university during one semester of a large-enrollment Introductory Biology course for science majors. The course is part of a two-semester introductory biology sequence and focuses on principles of genetics, evolution, and ecology. This course is currently the subject of a comprehensive reform aimed at implementing evidence-based, learner-centered instructional practices (Handelsman et al., 2004; Smith et al., 2005; Handelsman et al., 2006). There are no mathematics pre- or corequisites for this course beyond the requirements for entrance to the university (3 yr of high school math, including 2 yr of algebra and 1 yr of geometry).

Study Population and Research Context

Approximately 80% of the students enrolled in the course were in their first or second year of college (48 and 32%, respectively). Life science majors (e.g., zoology, plant biology, biochemistry) and prehealth or preveterinary students made up 60% of the course population (Table 1 and Supplemental Material). For this study, we analyzed and reported data from students in one course section who completed pre- and postinstruction assessment of QL skills (n = 175).

Table 1. Students' majors and tracks

| Major/track | No. of students (%) |
|---|---|
| Life sciences | 63 (35) |
| Science, other (e.g., chemistry, etc.) | 23 (13) |
| Mathematics | 7 (4) |
| Engineering | 9 (5) |
| Prehealth track | 26 (14) |
| Preveterinary track | 19 (11) |
| Social sciences | 20 (11) |
| Humanities | 9 (5) |
| Undecided | 4 (2) |
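As a quick consistency check on Table 1, the sketch below recomputes each percentage from the counts, along with the 60% figure quoted in the text (life sciences plus the prehealth and preveterinary tracks). It assumes only that the counts are transcribed correctly. Note that the counts sum to 180 rather than to the 175 students with paired assessments, and the reported percentages match when computed against 180 (for example, 63/175 would round to 36% rather than 35%).

```python
# Counts of students by major/track, copied from Table 1.
counts = {
    "Life sciences": 63,
    "Science, other": 23,
    "Mathematics": 7,
    "Engineering": 9,
    "Prehealth track": 26,
    "Preveterinary track": 19,
    "Social sciences": 20,
    "Humanities": 9,
    "Undecided": 4,
}

total = sum(counts.values())  # the table's own total


def percent(n: int, denominator: int) -> int:
    """Percentage rounded to a whole number, as reported in Table 1."""
    return round(100 * n / denominator)


percentages = {group: percent(n, total) for group, n in counts.items()}

# Life science majors plus prehealth and preveterinary students.
life_and_health = (
    counts["Life sciences"] + counts["Prehealth track"] + counts["Preveterinary track"]
)

print(total)
print(percentages)
print(percent(life_and_health, total))
```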

This study was conducted in the context of a broader initiative aimed at reforming the introductory biology curriculum. The research was reviewed and classified as exempt by the university's Institutional Review Board.

Instructional Design

Instructors responsible for three of the course sections (150–190 students per section) met weekly to collaborate on all aspects of course design. Weekly meetings focused on constructing common learning objectives and creating learning activities and assessments used in all three sections of the course. We designed all class meetings to engage students through active, inquiry-based pedagogy. At the beginning of the course, we discussed with students the broad course goals, which included learning about the nature of science and understanding how scientific knowledge is built. To achieve these goals, students actively engaged in the activities of scientists, such as collaborative problem solving, creating and interpreting conceptual models, and articulating and evaluating scientific arguments.

Our strategy for infusing quantitative thinking into the normal course of instruction was iterative assessment of students' QL skills, followed by feedback. We designed and administered formative and summative assessments, at the beginning of the course and then repeatedly throughout the semester, that incorporated quantitative problems complementing and supporting learning of the biology concepts in the course. These assessments allowed us to rapidly determine whether students were fluent in QL skills directly relevant to biology. Based on the assessment outcomes, we tailored instruction in all three sections to provide students with feedback and, where necessary, further practice.

Throughout the semester, we articulated specific QL objectives (Table 2) that complemented the existing course content and learning objectives. Rather than developing stand-alone QL modules, we designed instructional modules, homework, and quiz and exam items that incorporated QL objectives. In the course, students encountered multiple opportunities (Table 3) to apply quantitative thinking in the context of problems about genetics, evolutionary biology, and ecology. All classroom activities and assessments were followed by instructor feedback.

Table 2. QL objectives: “Students should be able to …” [table contents not available]

Exemplars of QL-infused Instruction

In the first week of the course, we implemented a module, the “termite activity,” that addressed the nature of science and incorporated several quantitative aspects. In class, students observed termites following the trace of an ink pen on a sheet of paper. Working in collaborative groups, students observed a small number of termites and the termites' responses to different inks. Students quickly observed that termites follow the ink traces from certain pens while ignoring others. We asked students how a scientist would start from this simple observation to generate evidence to build a scientific claim regarding the termites' behavior (e.g., “what would you need to do to demonstrate that termites prefer ink A to ink B?”). Students worked to develop testable hypotheses about the termites' ink preferences and designed simple experiments to collect quantifiable data about this behavior. To do so, students needed to devise a reproducible method for gathering quantitative data about the termites' ink preferences; conduct an experiment; and record, analyze, and interpret the data.
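The activity description does not say how the termite data were analyzed. As one possible illustration, here is a minimal Python sketch of an exact two-sided binomial test of the null hypothesis “no preference between two inks.” It assumes each trial records which of two ink traces (hypothetical “ink A” and “ink B”) a single termite followed; the 20 trials and their outcomes are invented for the example, and a binomial test is one reasonable choice among several, not necessarily what students used.

```python
from math import comb


def binomial_two_sided_p(k: int, n: int, p: float = 0.5) -> float:
    """Exact two-sided binomial test.

    Sums the probabilities of every outcome that is no more likely than the
    observed count k under the null hypothesis (success probability p).
    """
    probs = [comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(n + 1)]
    observed = probs[k]
    # A small relative tolerance keeps floating-point ties from being dropped.
    return min(1.0, sum(q for q in probs if q <= observed * (1 + 1e-7)))


# Hypothetical record of 20 single-termite trials: which ink trace was followed.
trials = ["A"] * 16 + ["B"] * 4
k = trials.count("A")

p_value = binomial_two_sided_p(k, len(trials))
print(f"{k}/{len(trials)} trials followed ink A; two-sided p = {p_value:.4f}")
```

With these invented counts (16 of 20 trials following ink A), the two-sided p-value is about 0.012, so the “no preference” hypothesis would be rejected at the conventional 0.05 level; a 10/20 split gives p = 1.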

Instruction throughout the semester followed this pattern. Although it is beyond the scope of this article to describe each activity in detail, we direct the reader to Ebert-May et al. (2010) and to an example of a teaching and learning module that we implemented in the course (a case study on the evolution of antibiotic-resistant bacteria; http://serc.carleton.edu/42411).

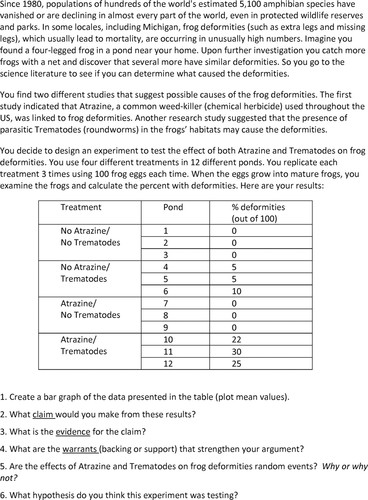

After the termite activity, we assessed students' learning about the nature of science on the first in-class quiz, which included the Frog problem (Figure 1), designed to assess both students' understanding of the nature of science and QL skills. The Frog problem presented students with an experimental scenario and a data set. Students were asked to calculate means (objective [Obj.] 1a), represent the data graphically (Obj. 1b), draw conclusions based on the evidence (Obj. 4a), justify their claim (Obj. 4b), and deduce from the experimental setup what hypothesis that experiment was testing (Obj. 3).
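The calculation asked for in Obj. 1a can be illustrated with hypothetical numbers (not the data in Figure 1). The sketch assumes four treatments with three replicates each, where each value is the percentage of frogs with deformities in one replicate; the treatment names and all values are invented. It contrasts the treatment means, which belong on the bar graph, with the replicate sums that some students plotted instead.

```python
from statistics import mean

# Hypothetical replicate data (NOT the values in Figure 1): percentage of
# frogs with deformities in each of three replicates per treatment.
replicates = {
    "Control": [0, 0, 0],
    "Atrazine only": [0, 0, 0],
    "Trematodes only": [20, 25, 30],
    "Atrazine plus trematodes": [40, 45, 50],
}

# Obj. 1a: one mean per treatment; these are the values a bar graph should show.
treatment_means = {t: mean(values) for t, values in replicates.items()}

# A common error: plotting the sum of the replicates instead of the mean.
treatment_sums = {t: sum(values) for t, values in replicates.items()}

for t in replicates:
    print(f"{t:26s} mean = {treatment_means[t]:5.1f}%   sum = {treatment_sums[t]}")
```

Plotted as a bar graph, each treatment would get one bar at its mean, with a y-axis label naming both the dependent variable and its unit, e.g., “Frogs with deformities (%).”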

Figure 1. The Frog problem, adapted from an original problem (http://first2.plantbiology.msu.edu/resources/inquiry_activities/frog_activity.htm). This problem was developed by D. L. and D.E.M., based on the work of Kiesecker (2002), and includes text quoted from Miller (2002).

In the context of the unit on evolution, we taught about Hardy–Weinberg equilibrium by using a classroom simulation that required students to calculate allele and genotype frequencies (Obj. 1a) and to make predictions based on observed and calculated data (Obj. 3a). Within the ecology unit, students investigated the impact of invasive species on aquatic ecosystems by exploring the case of sea lampreys in the Great Lakes (www.glfc.org/lampcon.php). Students generated a graph of population growth (Obj. 1b); developed a null hypothesis (Obj. 3a); interpreted a chi-squared value (Obj. 2a); and articulated a complete scientific argument, including a claim (Obj. 4a) and warrant (Obj. 4b).
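The classroom simulation itself is not described here in detail. A computer analog, offered only as a hypothetical sketch, draws two alleles at random from a gene pool for each offspring (the random-mating assumption) and then compares observed genotype frequencies with the Hardy–Weinberg expectations p², 2pq, and q². The starting allele frequency (0.7), the number of offspring, and the random seed are arbitrary assumptions.

```python
import random
from collections import Counter


def simulate_random_mating(p: float, n_offspring: int, seed: int = 1) -> Counter:
    """Draw two alleles independently from a gene pool in which allele A has
    frequency p (random mating) and count the offspring genotypes."""
    rng = random.Random(seed)
    genotypes = Counter()
    for _ in range(n_offspring):
        # sorted() puts "A" before "a", so heterozygotes are always "Aa".
        pair = sorted(rng.choices("Aa", weights=[p, 1 - p], k=2))
        genotypes["".join(pair)] += 1
    return genotypes


def allele_frequency(genotypes: Counter) -> float:
    """Frequency of allele A: two copies per AA, one per Aa."""
    n = sum(genotypes.values())
    return (2 * genotypes["AA"] + genotypes["Aa"]) / (2 * n)


p = 0.7  # hypothetical starting frequency of allele A
offspring = simulate_random_mating(p, n_offspring=1000)
n = sum(offspring.values())

observed = {g: offspring[g] / n for g in ("AA", "Aa", "aa")}
expected = {"AA": p**2, "Aa": 2 * p * (1 - p), "aa": (1 - p) ** 2}

print(f"allele A frequency in offspring: {allele_frequency(offspring):.3f}")
for g in ("AA", "Aa", "aa"):
    print(f"{g}: observed {observed[g]:.3f}, expected {expected[g]:.3f}")
```

With 1,000 offspring, observed frequencies typically land within a few percentage points of the expectations; rerunning with other seeds or much smaller samples is one way to show how sampling alone produces deviations from equilibrium.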

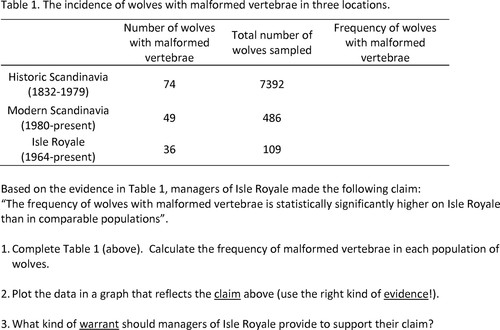

The final exam was structured around the case of the moose and wolves of Isle Royale, Michigan (www.isleroyalewolf.org/wolfhome/home.html). Students answered questions on genetics, evolution, and ecology within the context of the Isle Royale ecosystem, with particular emphasis on the moose and wolves. One item on the exam (Wolf problem; Figure 2) presented students with a data set and asked them to calculate frequency values (Obj. 1a), represent the frequency data in a graph (Obj. 1b), and predict what kind of warrant would be necessary to support a claim based on those data (Obj. 4b).

Figure 2. The Wolf problem. The data that guided design of this problem are publicly available through the “Wolves and Moose of Isle Royale” website (www.isleroyalewolf.org/overview/overview/wolf%20bones.html).

Analysis of Students' QL Skills

For this research, we focus on analysis of the Frog and Wolf problems. For both problems, we asked students to perform a simple calculation and to create a graph from the resulting data. We assigned a score of 1 or 0 (correct, or incorrect or missing) to each of the following elements, common to both graphs:

Graphing the calculated data (means for the Frog problem; frequencies for the Wolf problem)

Appropriately labeling the y-axis

Appropriately labeling the x-axis

Using an appropriate type of graph for the data (a bar graph, in both cases)

Each graph received a composite score, the sum of all four elements. For example, a score of 4 means a student graphed the calculated data using a bar graph and labeled both axes correctly. Scores of ≤3 indicate an error in one or more areas. We compared students' scores at the beginning (Frog problem) and at the end of the course (Wolf problem) by using a paired sample Wilcoxon signed rank test. Statistical analysis was conducted in the R statistical environment (R Development Core Team, 2009).
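The scoring and the paired comparison described above can be sketched in plain Python. Assumptions: the rubric keys are hypothetical labels for the four elements, and the scores are invented. Because composite scores of 0 to 4 produce many ties and zero differences, the sketch uses the large-sample form of the Wilcoxon signed-rank test (zero differences dropped, average ranks for ties, tie and continuity corrections). This is the form R's wilcox.test falls back to when ties are present, but the sketch is an illustration, not a substitute for the R analysis reported here.

```python
from math import erfc, sqrt

# The four 0/1 rubric elements; these key names are hypothetical labels.
RUBRIC = ("graphed_calculated_data", "y_axis_label", "x_axis_label", "bar_graph")


def composite_score(graph: dict) -> int:
    """Composite graph score (0-4): one point per rubric element present."""
    return sum(1 for element in RUBRIC if graph.get(element, False))


def average_ranks(values: list) -> list:
    """Rank values 1..n, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        i = j + 1
    return ranks


def wilcoxon_signed_rank(pre: list, post: list) -> tuple:
    """Paired Wilcoxon signed-rank test using the normal approximation with
    tie and continuity corrections. Returns (V, two-sided p-value)."""
    diffs = [b - a for a, b in zip(pre, post) if b != a]  # drop zero differences
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    abs_diffs = [abs(d) for d in diffs]
    ranks = average_ranks(abs_diffs)
    v = sum(r for r, d in zip(ranks, diffs) if d > 0)  # sum of positive ranks
    mean_v = n * (n + 1) / 4
    tie_sizes = [abs_diffs.count(x) for x in set(abs_diffs)]
    var_v = n * (n + 1) * (2 * n + 1) / 24 - sum(t**3 - t for t in tie_sizes) / 48
    correction = 0.5 if v > mean_v else (-0.5 if v < mean_v else 0.0)
    z = (v - mean_v - correction) / sqrt(var_v)
    return v, erfc(abs(z) / sqrt(2))


# Hypothetical composite scores for eight students (pre = Frog, post = Wolf).
pre = [2, 3, 1, 2, 4, 3, 2, 1]
post = [4, 4, 3, 4, 4, 2, 4, 3]
v, p_value = wilcoxon_signed_rank(pre, post)
print(f"V = {v}, two-sided p = {p_value:.3f}")
```

For these invented scores, one student is unchanged and is dropped, six improve, and one declines, giving V = 26.5 and a two-sided p of roughly 0.035.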

We also developed simple rubrics for coding the claims and warrants students generated as part of these problems:

The Frog problem asked students to formulate a claim based on the given evidence and to provide appropriate reasoning (warrant) to support the claim. We assigned a score of 1 or 0 for the presence or absence of each of the following elements in students' claims:

Student stated that atrazine alone has no effect.

Student stated that trematodes alone have an effect.

Student stated that the combined effect of trematodes and atrazine is greater than that of trematodes alone.

Each claim therefore received a score between 0 and 3; a score of 3 indicates a complete and correct claim. Students' warrants were analyzed for explicit reference to elements of experimental design. We scored a warrant as correct if it mentioned at least one of the following elements of the experimental setup:

Large number of frog eggs used

Number of replicates (three for each treatment)

Use of the appropriate experimental controls

The Wolf problem provided quantitative evidence and a claim, and asked students what kind of warrant would support that claim. Students' warrants were scored as correct if they explicitly stated that a statistical test of significance (such as the chi-squared test) should be performed on the data to support the claim.

Based on patterns we observed in students' warrants, we also identified elements that characterized incorrect reasoning. In this study, we focused on two kinds of “incorrect reasoning”: (a) the student restated the claim, and (b) the student restated the evidence, pointing either to the raw data or to the graph. We scored students' warrants for the presence or absence of these elements.
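Once a rater has coded each rubric element, the claim and warrant rubrics reduce to simple counting rules. The sketch below, with hypothetical field names and three invented coded answers, shows those rules: the claim score is the number of correct statements present (0 to 3), and a Frog-problem warrant counts as correct if it cites at least one element of experimental design. Deciding whether an answer contains each element remains a human judgment; the code only aggregates the codes.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class FrogCodes:
    """Rubric codes a human rater assigned to one student's Frog answer.
    Field names are hypothetical labels for the rubric elements above."""
    atrazine_alone_no_effect: bool
    trematodes_alone_effect: bool
    combined_exceeds_trematodes: bool
    cites_many_eggs: bool
    cites_replicates: bool
    cites_controls: bool


def claim_score(c: FrogCodes) -> int:
    """Claim score 0-3: one point per correct statement present."""
    return sum([c.atrazine_alone_no_effect, c.trematodes_alone_effect,
                c.combined_exceeds_trematodes])


def warrant_correct(c: FrogCodes) -> bool:
    """A warrant counts as correct if it cites at least one design element."""
    return c.cites_many_eggs or c.cites_replicates or c.cites_controls


# Three hypothetical coded answers.
answers = [
    FrogCodes(True, True, True, False, True, False),
    FrogCodes(False, True, True, False, False, False),
    FrogCodes(False, True, False, True, False, True),
]

score_counts = Counter(claim_score(a) for a in answers)
share_correct_warrants = sum(warrant_correct(a) for a in answers) / len(answers)
print(dict(score_counts), f"{share_correct_warrants:.0%}")
```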

RESULTS

What QL Skills Do Introductory Biology Students Bring to the Course?

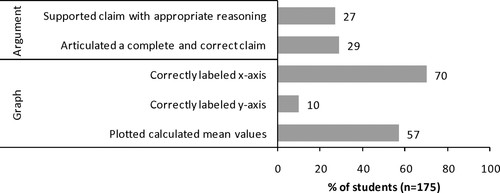

Analysis of student responses on the Frog problem revealed that, at the beginning of the course, students had difficulties with representing data on a graph, properly labeling the graph axes, and formulating complete and correct arguments (Figure 3).

Figure 3. QL skills demonstrated by students at the beginning of the course (assessed through the Frog problem).

Graphing Data. Only 57% of students correctly calculated and represented the data as means on a graph; 33% of students plotted the individual data points rather than the means, and ∼9% plotted the sum of the data. An appropriate label for the y-axis in this problem included the dependent variable (frogs with deformities) and the units (percentage). Only 10% correctly labeled the y-axis; most students were inaccurate in their labeling, and the majority (∼67%) labeled the y-axis only with the word “percent” or the % symbol.

Articulating Data-based Claims. A complete and correct claim on the Frog problem included three statements, one for each of the experimental treatments (see rubric). Twenty-nine percent of students formulated a claim that included the results of all three treatments. Approximately two-thirds of the students, however, wrote a claim that was incomplete. Most students neglected to include in their claim that exposure to atrazine alone had no effect (no frog deformities observed). Reference to this particular piece of evidence was missing in ∼55% of all students' answers (Table 4).

Table 3. Number of course components addressing each QL objective

| QL objective | Classroom activities | Homework items | Quiz and exam items | Total |
|---|---|---|---|---|
| 1a | 8 | 3 | 5 | 16 |
| 1b | 4 | 5 | 2 | 11 |
| 2a | 0 | 1 | 0 | 1 |
| 2b | 21 | 13 | 5 | 39 |
| 3a | 5 | 1 | 0 | 6 |
| 3b | 2 | 0 | 1 | 3 |
| 4a | 4 | 10 | 4 | 18 |
| 4b | 4 | 8 | 7 | 19 |

Do Students' Graphing Skills Improve in the Course?

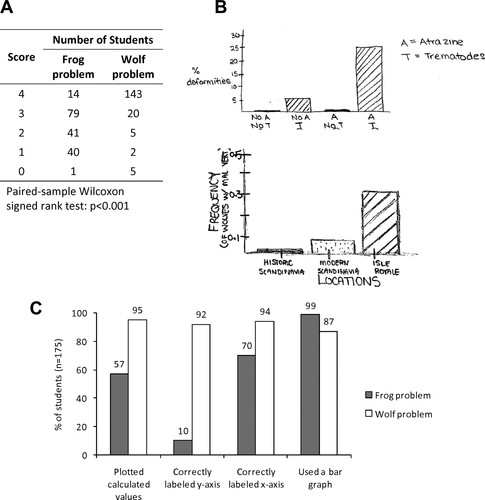

Analysis of the Wolf problem provided evidence of students' progress in their ability to graphically represent numerical data. We applied the rubric described in Methods to assign each student graph a score between 0 and 4, where 4 indicated a complete and correct graph. Statistical comparison of individual students' scores at the beginning and end of the semester indicated that, after instruction, students were significantly better at representing data graphically (Figure 4A). For example, a very high proportion of students labeled their graphs appropriately: 92% correctly labeled the y-axis and 95% correctly labeled the x-axis (Figure 4, B and C). This suggests that students learned the need to frame graphs so that readers can understand them, e.g., by labeling them in ways that describe the data (Booth et al., 2008).

Figure 4. Pre- and postinstruction change in the quality of student-generated graphs; “pre” refers to the Frog problem on quiz 1, and “post” refers to the Wolf problem on the final exam. (A) Scores attributed to students' graphs significantly improved after instruction. (B) Examples of student graphs that earned a score of 4 on the Frog problem (top) and the Wolf problem (bottom). (C) Change in the percentage of students who demonstrated specific graphing skills.

The only category in which students scored lower overall on the Wolf problem was choosing an appropriate type of graph. However, on the Frog problem, we explicitly asked students to create a bar graph; 99% did so, demonstrating that they knew how. On the Wolf problem, we gave no instructions on what type of graph to use, and 87% of students appropriately chose a bar graph to represent their data (Figure 4C). Students in the other two course sections, which received the same instruction, performed very similarly on the Frog and Wolf problems. Data on the distribution of majors and on graphing skills across the three course sections are available as Supplemental Material.

How Do Students Reason to Support Data-driven Claims?

Early in the semester, we discussed and practiced how scientists design experiments to test hypotheses by including appropriate controls, changing one variable at a time, performing replicates, and using large sample sizes. The Frog problem immediately followed instruction on the nature of science and experimental design; therefore, we looked for explicit reference to elements of experimental design in students' warrants (e.g., “I can make the claim above […] because I designed an experiment that had all the appropriate controls, I repeated each trial three times, I used a large number of frog eggs each time”). Analysis of the warrants (Table 5) revealed that 27% of students provided appropriate reasoning to support their claim by citing one or more elements of the experimental design. Specifically, 15% cited the large number of frog eggs used, 23% cited the number of replicates for each treatment, and 4% cited the use of appropriate controls in the experimental setup.

Table 4. Scores of students' claims on the Frog problem

| Score | No. of students | % | Claim components | | |
|---|---|---|---|---|---|
| | | | Atrazine only | Trematodes only | Atrazine plus trematodes |
| 3 | 51 | 29 | ✓ | ✓ | ✓ |
| 2 | 56 | 20 | ✓ | ✓ | |
| 9 | ✓ | ✓ | |||
| 3 | ✓ | ✓ | |||
| 1 | 53 | 23 | ✓ | ||
| 5 | ✓ | ||||
| 3 | ✓ | ||||
| 0 | 15 | 9 | |||

Table 5. Characteristics of students' warrants on the Frog and Wolf problems

| In their warrant, students | Frog problem (%) | Wolf problem (%) |
|---|---|---|
| Provided appropriate reasoning | 27 | 30 |
| Restated the claim | 14 | 3 |
| Restated the evidence | 51 | 46 |

At the time of quiz 1, we had not yet discussed the use of statistics to interpret quantitative evidence; therefore, we did not expect students to include mention of statistical analyses in their warrants. Later in the semester, students practiced applying simple statistical tests, both in the classroom and in the laboratory. On the Wolf problem, we provided the claim that frequencies of wolves with malformed vertebrae were “statistically significantly different” among the three populations sampled. Only 30% of students, however, appropriately reasoned that a statistical test of significance was necessary to justify this specific claim.
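The warrant the Wolf problem called for can be made concrete with a chi-squared test of homogeneity on a populations-by-outcome table. The sketch below uses invented counts for three populations (not the Isle Royale data) and pure Python. It relies on the fact that for 2 degrees of freedom, which a 3 × 2 table has, the chi-squared upper-tail probability is exactly exp(-x/2). All expected counts in this example are above 5, the usual rule of thumb for the approximation.

```python
from math import exp


def chi_square_homogeneity(table: list) -> tuple:
    """Pearson chi-squared statistic and degrees of freedom for an r x c
    table of counts (rows = populations, columns = outcome categories)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df


# Hypothetical counts per population: [malformed vertebrae, normal vertebrae].
wolves = [
    [12, 28],  # population 1
    [3, 37],   # population 2
    [5, 45],   # population 3
]

stat, df = chi_square_homogeneity(wolves)
assert df == 2
# For df = 2 the chi-squared upper-tail probability is exactly exp(-x / 2);
# other df values need a general routine (e.g., scipy.stats.chi2.sf).
p_value = exp(-stat / 2)
print(f"chi-squared = {stat:.2f}, df = {df}, p = {p_value:.4f}")
```

With these invented counts the statistic is about 9.59 (df = 2, p ≈ 0.008), which exceeds the 0.05 critical value of 5.99. That comparison, not the raw frequencies alone, is what would warrant the claim that the populations differ significantly.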

Although the Frog and Wolf problems required different kinds of reasoning, we identified some fallacies in students' warrants that were common to both problems (Table 5). Some students (14% on the Frog problem and 3% on the Wolf problem), rather than providing any reasoning, simply restated the claim. Far more students (51 and 46%, respectively) restated the evidence, pointing either to the raw data or to the graph (e.g., “as evident from the data” or “as the graph clearly shows”).

DISCUSSION

Early in an Introductory Biology course, and then repeatedly throughout the semester, we assessed students' QL abilities and determined that students had deficiencies in key QL skills. The assessment evidence guided us to develop instruction tailored to the students' needs and abilities. We did not change the course structure, content, or learning objectives; rather, we embedded in our instruction QL elements that aligned with the existing course learning goals. We view a learner-centered instructional approach as the primary vehicle for infusing QL into our course. This means that instructors were flexible and open to modifying instruction based on student feedback from assessments (e.g., Just-in-Time Teaching [JiTT]; references to Novak's papers on JiTT are listed at http://serc.carleton.edu/introgeo/justintime/references.html).

Furthermore, this approach required creativity to craft quantitative problems and data-based classroom activities for students to work on in the context of biology. Finally, implementing this kind of instruction required frequent assessments of multiple kinds (Smith and Tanner, 2010). We achieved quick turnaround times by using clearly defined rubrics to score students' work; in some cases, homework and in-class work that served as practice were scored only qualitatively. By the end of the term, our students had made significant gains in some QL abilities, most clearly in representing data graphically. Below, we discuss some of the challenges we encountered in teaching and learning QL in introductory biology.

Learning QL in Introductory Biology

Students came to our Introductory Biology course with a wide variety of mathematical abilities and backgrounds, acquired in high school (National Council of Teachers of Mathematics, 2000) and sometimes in college mathematics courses. We could not assume that they were prepared to use quantitative reasoning in the context of biology. The evidence we gathered confirms that students needed additional practice to become fluent with basic quantitative tools and language. Weaving QL through the fabric of the undergraduate Introductory Biology course gave students the opportunity to practice applying quantitative skills while learning biology.

In our course, students improved in some quantitative skills, such as performing simple calculations and representing quantitative data in graphical form (Figure 4). However, other QL areas, such as the construction of data-based scientific arguments, proved more difficult. Creating a scientific argument from claim to warrant is a high-level cognitive task and is atypical of introductory biology courses, which tend to focus on factual recall and conceptual comprehension as the primary modes of learning (Momsen et al., 2010).

With respect to the task of constructing scientific arguments, formulating appropriate warrants proved especially challenging for students (Table 5). Although the nature of evidence and claims is relatively straightforward (e.g., “what data do you have?” and “what conclusion can you make based on these data?”), the nature of warrants is less well defined. By definition, a warrant is the reasoning connecting evidence to claim (Toulmin et al., 1984; Booth et al., 2008). The kind of reasoning required to justify a claim varies with the context of the argument and is often specific to a community of practice (Toulmin et al., 1984). Students' warrants (Table 5) suggest a pervasive conception that “numbers speak for themselves”: students often failed to show the line of reasoning that justifies using the data as the basis for the claim. This observation should not be surprising. The ability to explain how a given set of numbers can lead to a claim, that is, learning to argue like a biologist, is a highly sophisticated skill that requires practice and feedback over time. Student-centered, inquiry-based learning environments are well suited to supporting the development of this skill through frequent formative assessments that provide students with practice and feedback across multiple contexts.

Teaching QL in Introductory Biology

We identified three major challenges that introductory biology instructors may perceive as obstacles to incorporating QL in their courses.

1. My Course Is Already Packed with Content. How Am I Going to Fit in QL?

Our approach was to begin with clearly defined course objectives and incorporate additional QL objectives (Table 2) that contribute to understanding of key biology concepts. We did not make room to “teach math” but instead created opportunities for students to use the quantitative skills they already had in the context of biological problems. Frequent assessment coupled with feedback proved an effective strategy to support student learning. We found that QL infusion does not distract from content but rather greatly supports teaching and learning about difficult topics, such as the nature of science and the construction of scientific knowledge.

2. How Do I Choose What Quantitative Concepts to Include in My Course?

The list of all quantitative concepts that are relevant to biology (NRC, 2003) is long and may discourage a well-intentioned instructor from even considering incorporating quantitative concepts in their Introductory Biology course. However, the table of contents in biology textbooks is long as well, and every instructor needs to choose course content and objectives. These course content and objectives can guide instructors to select the QL skills and concepts that are most appropriate for understanding specific biology concepts. For example, our evolution unit includes teaching and learning about the Hardy–Weinberg equilibrium theory. One of our objectives is that students are able to apply the Hardy–Weinberg theory to determine whether a population is evolving, based on change in allele frequencies over time. To test this theory in a case study, students must 1) calculate and understand the meaning of frequencies; 2) know how to plug numerical values into a formula and perform simple calculations; 3) distinguish between expected and observed results; and 4) know when a statistical test of significance is necessary to reject a “no-change” or null hypothesis model.
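The four steps listed above can be traced in a short Python sketch. It assumes a single locus with two alleles, hypothetical genotype counts, and one common classroom form of the test: a chi-squared goodness-of-fit comparison of one generation's genotype counts with Hardy–Weinberg expectations. The course's own case study may have been framed differently, for example by comparing allele frequencies across generations.

```python
from math import erfc, sqrt


def hardy_weinberg_test(n_AA: int, n_Aa: int, n_aa: int) -> tuple:
    """Chi-squared goodness-of-fit test of genotype counts against
    Hardy-Weinberg expectations (assumes both alleles are present)."""
    n = n_AA + n_Aa + n_aa
    # Step 1: allele frequencies from genotype counts.
    p = (2 * n_AA + n_Aa) / (2 * n)
    q = 1 - p
    # Step 2: plug p and q into p^2 + 2pq + q^2 to get expected counts.
    expected = [p * p * n, 2 * p * q * n, q * q * n]
    observed = [n_AA, n_Aa, n_aa]
    # Step 3: compare observed with expected results.
    stat = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    # Step 4: df = 3 genotype classes - 1 - 1 estimated allele frequency = 1.
    # For df = 1 the upper-tail probability is erfc(sqrt(stat / 2)).
    p_value = erfc(sqrt(stat / 2))
    return p, expected, stat, p_value


# Hypothetical genotype counts for one sampled generation.
p, expected, stat, p_value = hardy_weinberg_test(60, 20, 20)
print(f"p = {p:.2f}, expected = {[round(e, 1) for e in expected]}")
print(f"chi-squared = {stat:.2f}, p-value = {p_value:.2g}")
```

For the invented counts (60 AA, 20 Aa, 20 aa), the allele frequency is p = 0.7, the expected counts are 49, 42, and 9, and the statistic (about 27.4) far exceeds the df = 1 critical value of 3.84, so the equilibrium (null) model would be rejected. Counts of exactly 49, 42, and 9 give a statistic of 0 and a p-value of 1.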

3. How Do I Know That My Students Are Developing QL Skills?

Formulating appropriate measurable learning objectives (such as those in Table 2), and assessing their achievement, is in our experience the most straightforward way of answering this question. Resources such as the QuIRK project website (http://serc.carleton.edu/quirk/About_QuIRK.html) offer a valuable “blueprint” of generic QL objectives that can be tailored to specific instructional contexts. Frequent assessments aligned with the objectives inform instructors of students' progress. Immediate formative feedback informs students about their own learning and helps them address difficulties. Clearly, the kind of assessment that promotes achievement of QL goals requires students to think independently and to show their work. If we truly want our assessments to reveal what learners are thinking, we need to move away from multiple-choice tests, where guessing often occurs, toward “alternative” forms of testing in which students must demonstrate their abilities and make their thinking explicit (Smith and Tanner, 2010).

CONCLUSIONS AND PERSPECTIVES

Informal feedback from students in our course confirmed that introductory biology students, as reported previously (Spall et al., 2003), do not see biology as a quantitative science. However, there is rapidly growing consensus in the scientific community that quantitative abilities are critical for future biologists and for independently thinking citizens. Our study indicates that incorporating QL in a large-enrollment introductory biology course through an active-learning pedagogy is immediately feasible, is consistent with the broader undergraduate introductory biology learning goals, and enables students to develop important quantitative skills without requiring additional resources or formal course restructuring. However, the approach we describe represents a small-scale intervention, targeting a single course within a much broader curriculum. Ideally, we should infuse QL across the entire curriculum (Gross, 2004) to ensure that students acquire a broad range of quantitative tools, gain extensive practice with them, and develop the belief that quantitative thinking is an intrinsic component of the construction of scientific knowledge. To advance in this direction, the biology education community may greatly benefit from a national dialog aimed at developing a consensus on what specific QL skills biology students need and how to build them into the college curriculum. This is not a challenge unique to biology education. The geoscience education community, for example, has recently initiated a similar discussion on how to teach college geoscience in ways that develop students' quantitative abilities (Manduca et al., 2008). The convergence of different scientific disciplines on a common overarching theme (QL) holds tremendous potential for a broader dialog aimed at improving undergraduate science education.

ACKNOWLEDGMENTS

This research was supported in part by National Science Foundation (NSF) grant DUE-0736928 (to T. L.). Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the NSF.