Scientific Teaching Targeting Faculty from Diverse Institutions

Abstract

We offered four annual professional development workshops called STAR (for Scientific Teaching, Assessment, and Resources) modeled after the National Academies Summer Institute (SI) on Undergraduate Education in Biology. In contrast to the SI focus on training faculty from research universities, STAR's target was faculty from community colleges, 2-yr campuses, and public and private research universities. Because of the importance of community colleges and 2-yr institutions as entries to higher education, we wanted to determine whether the SI model can be successfully extended to this broader range of institutions. We surveyed the four cohorts; 47 STAR alumni responded to the online survey. The responses were separated into two groups based on the Carnegie undergraduate instructional program categories: faculty from seven associate's and associate's-dominant institutions (23) and faculty from nine institutions with primarily 4-yr degree programs (24). Both groups expressed the opinion that STAR had a positive impact on teaching, student learning, and engagement. The two groups reported using techniques of formative assessment and active learning with similar frequency. The mix of faculty from diverse institutions was viewed as enhancing the workshop experience. The present analysis indicates that the SI model for training faculty in scientific teaching can successfully be extended to a broad range of higher education institutions.

INTRODUCTION

A number of studies and reports highlight the failure of the traditional lecture classroom to successfully train undergraduate biology students (e.g., the National Research Council report BIO2010, 2003; Lujan and DiCarlo, 2006; Woodin et al., 2009, 2010; Brewer and Smith, 2011). Faculty members in biology departments typically have little or no training in what makes effective educators and thus often resort to a lecture-style teaching method, in part because that is what they experienced. This often results in an emphasis on content and fact. As a result, many students fail to gain an understanding of some of the most basic concepts of science and have little training in the skills necessary to be an effective scientist, such as critical thinking and problem solving (DiCarlo, 2006; Lujan and DiCarlo, 2006). Additionally, some students with an interest in science lose their interest due to the style of teaching in many science courses (Tobias, 1990). To adequately train students and keep them engaged, it is important that instruction be based on evidence from the literature and from the students about the validity of the teaching methods (Handelsman et al., 2007).

Calls have been made for reforms that will implement effective, research-validated teaching techniques (National Research Council, 2003; Handelsman et al., 2004; Holdren, 2009; Woodin et al., 2009, 2010; Brewer and Smith, 2011). One common recommendation repeated in reviews on undergraduate science, technology, engineering, and mathematics (STEM) education reform is to provide training for university faculty and postdoctoral researchers in the latest research on cognitive science and effective teaching (e.g., DeHaan, 2005; Anderson et al., 2011). Several programs have been implemented to help provide training for future and current university STEM faculty, including programs that focus on training graduate students and postdoctoral researchers (Miller et al., 2009) and university faculty (Lundmark, 2002; Henderson, 2008; Pfund et al., 2009).

The National Research Council's (NRC) Board on Life Sciences, in cooperation with the NRC's Center for Education, began the National Academies Summer Institute (SI) on Undergraduate Education in Biology as a means of training faculty from research universities in the methodology of scientifically based teaching and active learning (Pfund et al., 2009; Handelsman et al., 2004). From 2004 to 2008, cohorts from 64 research institutions participated in the SI (Pfund et al., 2009). The SI is an intense, weeklong institute that trains research-university educators in the methods of scientific teaching. Scientific teaching, defined as “active learning strategies to engage students in the process of science” (Handelsman et al., 2004), relies on instructional and assessment strategies that have been proven successful through rigorous research. Within the framework of educational constructivism (Ausubel, 2000), scientific teaching focuses on three main themes: active learning, assessment, and diversity. As part of the SI application process, participants agree to implement the techniques learned at the SI in their classes and to help disseminate the principles of scientific teaching to colleagues.

In a survey, participants of the SI have reported an overwhelmingly positive impact on their teaching effectiveness and willingness to implement scientific teaching practices (Pfund et al., 2009). Two years after participating in the SI, participants reported increased use of active-learning strategies, formative assessment, and more ways of assessing student knowledge than before they attended (Pfund et al., 2009). In addition, participants reported undertaking efforts to reform teaching; the STAR professional development workshop described here is one such effort resulting from participation in the SI.

Between 2007 and 2011, we conducted four annual workshops called STAR (for Scientific Teaching, Assessment, and Resources) mini-institutes modeled on the SI, targeting biology faculty at 2- and 4-yr educational institutions primarily from across the state of Louisiana. Our general approach followed that of the SI, but differed in that our target audience was faculty from a broader spectrum of educational institutions, including community colleges, 2-yr campuses, and public and private research universities. The mini-institutes were held just prior to the beginning of the spring semester. To accommodate the schedules of the participants, we compressed the workshop into 2½ d. Participation from institutions with limited resources for faculty development was encouraged by covering the costs of travel, lodging, and meals for the participants.

A particular focus of our effort was to include faculty from community colleges and other 2-yr institutions due to the importance of these institutions as entries for students into higher education and as a potential source of students transferring to baccalaureate institutions (Baum et al., 2011). In the Fall of 2012, community college students constituted 45% of the national undergraduate population and 45% of first-time freshmen (American Association of Community Colleges [AACC], 2013a). Half of the students who receive a baccalaureate degree attend community college at some point in their studies (AACC, 2013b).

The challenges facing community college STEM educators are rooted in the multiple missions community colleges are tasked to accomplish, the diversity of the student population they serve, and the often inadequate preparation of students for pursuing a STEM career (Labov, 2012; National Research Council and National Academy of Engineering, 2012). Given this large population of community college students, it is important that faculty members at community colleges, as well as their colleagues at baccalaureate institutions, are trained in validated techniques of scientific teaching and active learning. In a recent report, the Center for Community College Student Engagement (2010) highlighted strengthening classroom engagement, expanding professional development focused on student engagement, and focusing institutional policies on creating the conditions for learning as key strategies for improving community college student success. These strategies are the same as those advocated in scientific teaching.

The STAR mini-institutes were a way of disseminating the practices promoted at the SI. The mini-institute described and modeled scientific teaching and the data supporting it; presented background information on assessment, on how to align instruction with assessment, and on the diversity of learning styles and students; and discussed how to document teaching for annual reports and promotion and tenure portfolios. Our goal was to introduce these teaching practices to faculty teaching undergraduate biology at institutions representative of the broad spectrum of higher educational institutions in the state of Louisiana. We made some modifications in the program to target our specific audience.

We wanted to compare the perceptions of the STAR participants with the outcomes reported by Pfund et al. (2009) in their survey of participants in the SI. To do this, we used questions from the Pfund et al. (2009) survey as part of our survey. We surveyed participants to determine their views of the impact of STAR on their teaching practices and on their students. Among the topics we addressed in the survey were: 1) the role of STAR in improving participants’ knowledge and implementation of scientific teaching practices; 2) the degree to which participants were able to implement the practices; and 3) which practices the participants found most useful and used most frequently.

In addition, we wanted to determine whether there were differences between faculty at associate's and associate's-dominant institutions and faculty at institutions with primarily 4-yr degree programs in the perceived implementation and outcomes in utilizing scientific teaching in the classroom. Our overarching question was, “Can the National Academies Summer Institute model be successfully extended to a broad range of higher education institutions from 2-yr institutions to research universities?”

METHODS

General Description of STAR and Recruitment

Four annual STAR workshops were held in early January from 2007 through 2011 on the campus of Louisiana State University (LSU). The workshops were designed to follow the approach of the National Academies SI on Undergraduate Education in Biology held in Madison, Wisconsin (Pfund et al., 2009). The STAR workshop consisted of presentations on scientific teaching, active learning, assessment, and diversity (as in Pfund et al., 2009). Unique to STAR were presentations on the construction of syllabi and teaching plans and documenting teaching performance (Supplemental Table S1). For comparison with the SI agenda, see the supplemental material for Pfund et al. (2009).

Faculty members teaching biology or related disciplines from Louisiana institutions of higher education, both 2- and 4-yr institutions, were eligible to apply to STAR. Participants were recruited via emails sent to department chairs and faculty known by the organizers, along with information on STAR and directions for applying. A website was set up with information and application forms (http://star.lsu.edu). In 2011, emails were also sent to biology faculty from institutions in Texas. Overall, 31 institutions in Louisiana and two in Texas were contacted. To ensure that a diversity of institutions was represented at each mini-institute, we set a goal of having three to five teams from community colleges and seven to 10 teams from 4-yr institutions.

Acceptance to STAR was competitive, with teams judged on the following criteria: strength of the team, number of students impacted, level of institutional commitment, and institutional type. Team strength was evaluated based on applicants’ teaching credentials, involvement in professional development activities, and evidence of commitment to educational reform. The number of students impacted was judged by estimated course enrollments in the two succeeding semesters. Evidence of institutional commitment may have included additional supply support, reduced teaching load in the first semester after STAR, support for additional assessment, course assistance, and so forth. Applications from teams of two to three faculty and/or administrators from each institution were accepted between August and November of each year. The teams were notified of their acceptance by December.

STAR Design Elements

Prior to arrival at STAR, participants were assigned to groups of four to six individuals based on their teaching interests. Examples of groups from the 2011 STAR included cell biology/gene expression, ecology/environmental science, physiology/anatomy, and biochemistry. Group topics varied from year to year depending on the research/teaching interests of the participants. At STAR, each group developed a teachable unit on its subject area, which included elements of scientific teaching, active learning, assessment, and diversity, during the group-work periods. Each of the groups was led by a mentor who had participated in the National Academies SI. The one exception was a mentor who is dean of the Division of Science, Technology, Engineering and Math at Baton Rouge Community College. On the last day of the workshop, each group gave a 15–20 min presentation on its teachable unit. The materials developed by the groups were posted on the STAR LSU Community Moodle site, making them available to all of the participants. The participants committed to implementing at least part of their groups’ teachable units, although this might not always be possible, because of an individual's teaching assignments. At the conclusion of each STAR mini-institute, the participants developed written action plans for the upcoming semester describing which of the scientific teaching/active-learning strategies they would employ.

On arrival at STAR, participants received a copy of the book Scientific Teaching (Handelsman et al., 2007) and a binder with an agenda, instructions, and readings. A typical schedule for STAR is presented in Supplemental Table S1. The STAR workshops started each day at 8:30 am and, on days 1 and 2, lasted until 5:00 pm; the final day ended at 2:00 pm to allow the participants time to travel home. The typical schedule for days 1 and 2 consisted of presentations of scientific teaching concepts. The topics covered, learning goals, and example activities in the presentations on teaching are listed in Table S2.

An attempt was made to incorporate active-learning and formative assessment activities to enable participants to learn by doing. The active-learning model was especially emphasized during the introduction to scientific teaching, active learning, and assessment presentations.

The presentations were followed by teachable unit development in the afternoon on days 1 and 2. All groups reconvened at 4:30 pm on days 1 and 2 to report on the progress of their teachable units. Day 3 began with groups finishing their teachable units and then delivering their presentations to all of the participants and invited observers.

Assessment of the Perceived Instructional Change of the STAR Mini-Institute

To assess the impact of STAR, we conducted two participant surveys: one pencil-and-paper survey immediately after the completion of each workshop and, in the Fall of 2011, an online survey (see STAR questionnaire in the Supplemental Material) of all attendees from the four STAR mini-institutes. The immediate post-STAR survey asked participants to complete action plans listing the active-learning and assessment techniques they would use in their classes. We conducted the follow-up online survey of STAR participants using the online survey tool Survey Monkey (www.surveymonkey.com). The survey questions focused on four areas: 1) participant demographics; 2) participant perception of design elements of STAR; 3) participant perception of gains in teaching skills, student engagement, and student performance; and 4) implementation of scientific teaching techniques. The questions on design elements provided feedback from participants on the effectiveness of the implementation of STAR. The questions on gains were used to gauge participants’ confidence in teaching skills and their perception of student engagement and performance. The questions dealing with teaching skills were replicated from Pfund et al. (2009). The questions on implementation were used to determine which active-learning and assessment techniques participants used and how often they used them. These questions were also replicated from Pfund et al. (2009).

Response frequencies between faculty from associate's versus bachelor's degree–granting institutions were compared for questions asking about design elements of STAR and implementation of scientific teaching techniques. Fisher's exact test (R version 2.15.3 [R Core Team, 2013]) was used to determine whether there were significant differences between the two groups. Between-group differences in responses to questions about gains in teaching skills, student engagement, and student performance were analyzed with the Wilcoxon rank-sum test (R version 2.15.3 [R Core Team, 2013]).
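As an illustration of the rank-based comparison described above, the following is a minimal Python sketch of a two-sided Wilcoxon rank-sum test using the normal approximation with tie and continuity corrections, which is the usual fallback when Likert responses contain ties. The analyses reported here were run in R; the function name `rank_sum_test` and the two response lists are hypothetical and are not data from the survey.

```python
from math import erfc, sqrt


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test via the normal approximation.

    Returns (W, p), where W is the rank sum of x minus its minimum value.
    Ties receive midranks; the variance is tie-corrected and a continuity
    correction of 0.5 is applied.
    """
    pooled = sorted(x + y)
    n1, n2, n = len(x), len(y), len(x) + len(y)
    midrank = {}
    tie_term = 0
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j] == pooled[i]:
            j += 1
        t = j - i                               # size of this tie group
        midrank[pooled[i]] = (i + 1 + j) / 2    # average of ranks i+1 .. j
        tie_term += t ** 3 - t
        i = j
    w = sum(midrank[v] for v in x) - n1 * (n1 + 1) / 2
    mean = n1 * n2 / 2
    var = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var == 0:                                # every response identical
        return w, 1.0
    diff = w - mean
    correction = 0.5 if diff > 0 else (-0.5 if diff < 0 else 0.0)
    z = (diff - correction) / sqrt(var)
    return w, erfc(abs(z) / sqrt(2))            # two-sided normal tail


# Hypothetical 1-5 Likert responses (1 = no gain ... 5 = very large gain).
bachelors = [3, 4, 3, 2, 5, 3, 4, 3]
associates = [4, 3, 4, 2, 4, 5, 3, 3]
w_stat, p_value = rank_sum_test(bachelors, associates)
print(f"W = {w_stat}, P = {p_value:.3f}")
```

With so few distinct response values, ties are unavoidable, which is why the sketch uses the corrected normal approximation rather than an exact permutation distribution.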

Programmatic Comparison between STAR and the SI

For the purpose of comparing the effectiveness of the programmatic approaches used by the SI and STAR, we asked questions regarding which active-learning approaches and assessment techniques were implemented and how often (see STAR questionnaire in the Supplemental Material, Implementation of Scientific Teaching Techniques, question 3). This was done to determine whether a faculty development workshop designed for 4-yr research institutions was transferable to one that encompassed a broader mix of institutions, including 2- and 4-yr institutions. For the STAR survey, respondents were asked whether they used a given technique “once a semester,” “once a month,” “once a week,” or “more than once a week” (see STAR questionnaire in the Supplemental Material, Implementation of Scientific Teaching techniques, question 3). For the SI survey, the respondents were asked whether they used a given technique “never,” “once a semester,” “multiple times a semester,” “monthly,” or “weekly” (see Supplemental Figures S2 and S3 in Pfund et al., 2009). The percentage of respondents from STAR who reported using a specific active-learning or assessment technique at least “once a month” was compared with the percentage of SI participants who reported using these techniques at least “multiple times a semester.” While this was not precisely equivalent, we felt it provided a valid way of determining broad differences in perceived implementation by participants in the two programs. For comparisons among STAR participants from 4-yr institutions, STAR participants from associate's institutions, and SI participants, the number of respondents in each category was compared in a Pearson's chi-square test using R version 2.15.3 (R Core Team, 2013). In some instances, a Fisher's exact test was also used, if counts in cells were <5, but the outcomes of the analyses in these cases were not changed, so we only report the results from the Pearson's chi-square test.
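The dichotomization and three-group comparison described above can be sketched as follows. This is a simplified Python illustration, not the R analysis itself: the set and function names are hypothetical, the counts in `table` are invented for demonstration, and the closed-form p-value holds only because a 3 × 2 table has 2 degrees of freedom.

```python
from math import exp

# Response labels counted as "frequent" use on each survey, per the text.
STAR_FREQUENT = {"once a month", "once a week", "more than once a week"}
SI_FREQUENT = {"multiple times a semester", "monthly", "weekly"}


def count_frequent(responses, frequent_labels):
    """Return (frequent, not_frequent) counts for one group of responses."""
    frequent = sum(1 for r in responses if r in frequent_labels)
    return frequent, len(responses) - frequent


def pearson_chi_square_df2(table):
    """Pearson's chi-square statistic and p-value for a table with df = 2."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    if (len(row_totals) - 1) * (len(col_totals) - 1) != 2:
        raise ValueError("the closed-form p-value used here requires df = 2")
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    # For 2 degrees of freedom the chi-square upper tail is exp(-x / 2).
    return stat, exp(-stat / 2)


# Hypothetical counts: [frequent, not frequent] for STAR baccalaureate,
# STAR associate's, and SI respondents. These are NOT the survey data.
table = [[15, 8], [17, 6], [60, 20]]
stat, p_value = pearson_chi_square_df2(table)
print(f"chi-square = {stat:.2f}, P = {p_value:.3f}")
```

Collapsing both response scales to two categories before testing is what makes the two surveys comparable at all, at the cost of treating "once a month" and "multiple times a semester" as equivalent thresholds.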

The STAR mini-institute proposal received approval from the LSU Institutional Review Board (LSU IRB# E3630).

RESULTS

Participation

Eighty faculty members and one postdoctoral research fellow from 16 institutions participated in the STAR mini-institutes (Table 1). Thirty-six of the faculty members were from institutions in the Carnegie undergraduate instructional categories “associate's” or “associate's-dominant” (http://classifications.carnegiefoundation.org). The number of students taught per year by STAR participants ranged from 100 to 1000; the number of courses or sections taught annually by these faculty members ranged from one to 20. Several institutions sent teams to STAR multiple times.

| Carnegie undergraduate instructional category | Number of institutions | Number of male participants | Number of female participants |
|---|---|---|---|
| Associate's | 6 | 12 | 18 |
| Associate's-dominant | 1 | 3 | 3 |
| Balanced arts and sciences/professions, some graduate coexistence | 3 | 4 | 6 |
| Balanced arts and sciences/professions, high graduate coexistence | 2 | 18 | 6 |
| Arts and sciences plus professions, high graduate coexistence | 1 | 1 | 3 |
| Professions plus arts and sciences, some graduate coexistence | 3 | 4 | 3 |
| Total | 16 | 42 | 39 |

The participants from the four mini-institutes were contacted by email in the Fall of 2011 and asked to respond to an online questionnaire on their participation in STAR, the impact it had on their teaching practices, and the perceived impact on their students. A total of 47 completed the survey for a response rate of 58%. Of the respondents, 23 were from institutions categorized as associate's or associate's-dominant institutions.

Design Elements

Based on the feedback from the participants, email announcements and contact with the department heads, who then disseminated the information, were the two most effective ways of advertising the mini-institute. Almost a third of the participants from both instructional categories were made aware of STAR by colleagues. The department heads disseminated information on STAR to 11 of the respondents from associate's institutions and four of those from baccalaureate institutions. Email announcements were the source of first information about STAR for 13 respondents from baccalaureate institutions and six from associate's institutions.

For participants who were not local, the role of financial support defraying the costs of participating was significant. Approximately 74% of these individuals said they could not have participated without a stipend covering travel, lodging, and per diem. Twelve of 14 respondents from baccalaureate institutions and 10 of 15 respondents from associate's institutions who required lodging to attend STAR reported they could not have attended without the stipend. There was no difference between the two groups (Fisher's exact test: P > 0.05).
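The comparison above can be reproduced from the reported counts. The sketch below is a standard-library Python illustration of a two-sided Fisher's exact test, assuming the common definition in which every table no more probable than the observed one is summed; the function name is hypothetical, and the counts of respondents who could have attended without the stipend (2 and 5) are obtained by subtraction from the totals given in the text.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test p-value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2 = a + b, c + d
    col1 = a + c
    total = comb(row1 + row2, col1)

    def weight(k):
        # Number of tables with k in the top-left cell, margins held fixed.
        return comb(row1, k) * comb(row2, col1 - k)

    observed = weight(a)
    low = max(0, col1 - row2)
    high = min(row1, col1)
    # Sum every table that is no more probable than the observed one.
    extreme = sum(weight(k) for k in range(low, high + 1) if weight(k) <= observed)
    return extreme / total


# Rows: baccalaureate, associate's.
# Columns: could not attend without the stipend, could attend without it.
p_value = fisher_exact_two_sided(12, 2, 10, 5)
print(f"P = {p_value:.3f}")
```

Working with integer table counts rather than floating-point probabilities keeps the "no more probable than observed" comparison exact.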

As part of the mini-institute, participants, working in groups, developed teaching materials using the concepts covered in STAR. The participants committed to implementing at least part of their groups’ teachable units, although this might not always be possible due to an individual's teaching assignments. When surveyed, 64% of the respondents implemented at least part of their groups’ teachable units, and 28% implemented materials from other groups. At the conclusion of each STAR mini-institute, participants developed action plans for the upcoming semester in which they described the scientific teaching/active-learning strategies they would employ. Ninety-four percent of the respondents reported that they successfully implemented at least part of these plans.

The majority of respondents (92%) found it valuable to have colleagues who also completed the workshop and interacted with them in implementing changes to their teaching. Forty-nine percent found it of value to interact with STAR alumni from other institutions. STAR participants came from a broad cross-section of higher education institutions (Table 1). Three respondents felt that this diversity hindered the overall dynamics and effectiveness of the mini-institute. However, 63% felt this diversity enhanced or greatly enhanced the experience, and 26% felt it had no effect. Eighty-five percent of the participants from associate's or associate's-dominant institutions found that the diversity enhanced or greatly enhanced the dynamics of the workshop.

Instructional Change

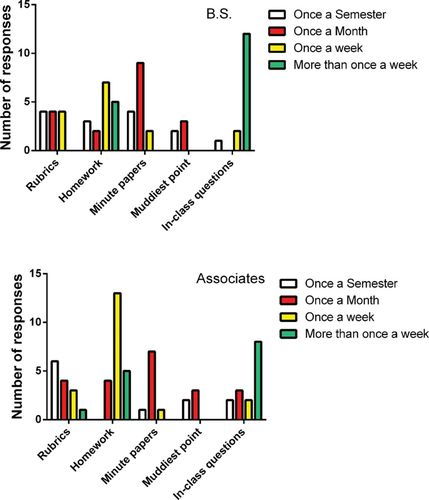

At the conclusion of each STAR mini-institute, participants reported their plans for implementing assessment tools in the next semester they taught (Table 2). The participants most frequently planned to use minute papers and in-class quizzing. None of the 81 respondents planned to use rubrics. In the 2011 survey of all STAR attendees, participants were asked to report which techniques of formative assessment presented at STAR were utilized in their classes and with what frequency (Figure 1). Among the assessment techniques implemented by the 47 respondents were the use of scoring rubrics (26 of the 47), homework assignments (39), minute papers (24), “muddiest point” papers (10), and in-class questions (30) using a student-response system (clickers). Of the faculty members using clickers, 80% used them at least once a week. Homework was assigned at least weekly by 77% of the respondents, and 79% used in-class minute papers on at least a monthly basis.

Figure 1. Faculty members were asked to report the frequency with which they used various assessment tools. The top panel illustrates the responses from faculty at bachelor's-degree institutions; the bottom panel shows the responses from faculty at associate's/associate's-dominant institutions. The choices were scoring rubrics, homework assignments, in-class minute papers, in-class muddiest point papers, and in-class questions using a student-response system (clickers).

| Year | 2007 | 2009 | 2010 | 2011 | Total |
|---|---|---|---|---|---|
| Assessment tools | | | | | |
| Rubrics | 0 | 0 | 0 | 0 | 0 |
| Homework | 5 | 0 | 0 | 1 | 6 |
| Minute papers | 1 | 8 | 11 | 3 | 23 |
| Muddiest point papers | 2 | 2 | 1 | 1 | 6 |
| In-class questions | 8 | 7 | 16 | 4 | 35 |
| Active-learning techniques | | | | | |
| Student group discussion | 2 | 3 | 16 | 4 | 25 |
| Cooperative learning | 0 | 0 | 0 | 0 | 0 |
| Problem-based learning | 0 | 0 | 0 | 1 | 1 |
| Case studies | 0 | 0 | 0 | 1 | 1 |
| Think–pair–share | 6 | 10 | 6 | 2 | 24 |
| Concept maps | 0 | 0 | 1 | 0 | 1 |

The only difference in use of assessment techniques between the associate's/associate's-dominant faculty and the bachelor's-degree faculty was in the use of homework. Associate's faculty members were more likely to assign homework once a month or more frequently (Fisher's exact test: P = 0.0044). There were no statistical differences between the two groups in the other assessment techniques (Fisher's exact test: P > 0.05). For both groups, homework and in-class quizzes were the most popular assessment tools.

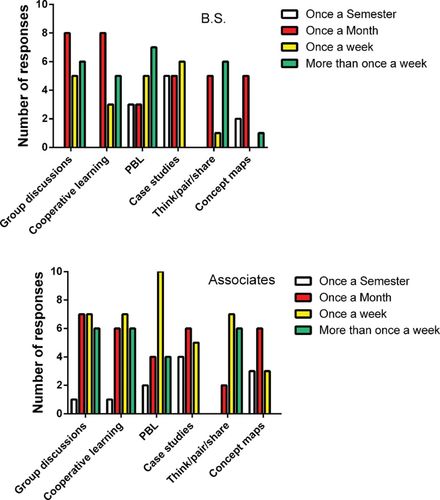

At the conclusion of each STAR, participants were asked which active-learning techniques they planned to employ in the next semester they taught. The most frequently reported plan was to implement student group discussions and think–pair–share activities (Table 2). In the 2011 survey of all STAR attendees, the participants were queried about which active-learning activities they utilized in their classes and with what frequency. Respondents reported using group discussions at least once (40 of the 47), cooperative learning (46), problem-based learning (38), case studies (31), think–pair–share (46), and concept maps (20) during the semester (Figure 2). All of these approaches were reported to be used with similar frequency by the respondents in the two groups of faculty (Fisher's exact test: P > 0.05). The most popular active-learning technique was student group discussions. Concept mapping was used by the fewest respondents.

Figure 2. Faculty members were polled as to how often they used active-learning techniques in their classes: student group discussions, cooperative learning, problem-based learning (PBL), case studies, think–pair–share, and concept maps. The top panel illustrates the responses from faculty at bachelor's-degree institutions; the bottom panel is the responses from faculty at associate's/associate's-dominant institutions.

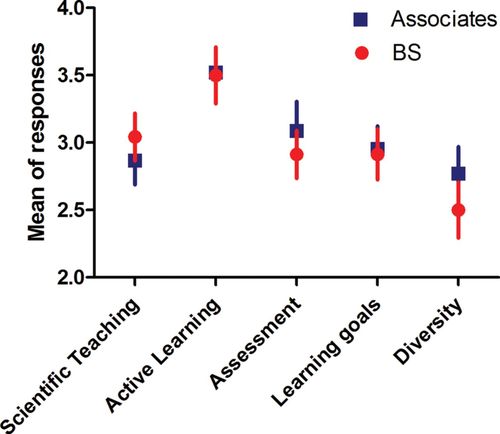

The respondents were asked to assess their gains in teaching skills in the areas of scientific teaching, active-learning exercises, means of assessment, developing learning goals, and coping with diversity in their classrooms as a result of their participation in STAR (Figure 3). For all of these categories, a moderate gain was the most common response. The largest gains were for active learning, with 47% of the participants reporting large to very large gains. On average, the gains were similar between the two groups of faculty. For all of the categories, the 95% confidence limits of the means of the responses were greater than 2.0. This suggests that the respondents perceived that they received benefit in all these categories from participating in STAR.

Figure 3. Self-reported gains in teaching skills using techniques presented at STAR. Participants reported their gains in using scientific teaching, active learning, techniques of formative and summative assessment, developing learning goals, and meeting the demands of a diverse student population. A Likert scale was used: 1, no gain; 2, small gain; 3, moderate gain; 4, large gain; and 5, very large gain. Means and SDs are shown for responses from faculty at bachelor's-degree institutions (circles) and associate's/associate's-dominant institutions (squares).

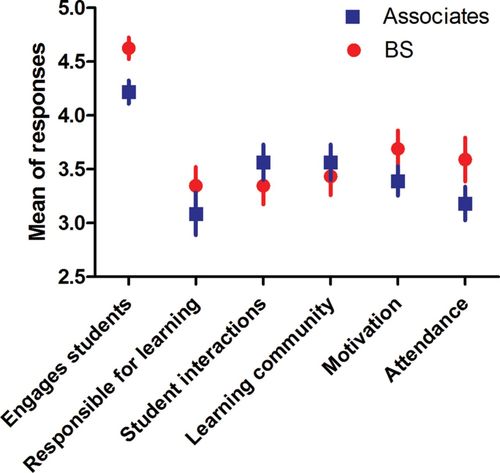

Participants reported that implementing the methods covered at STAR had a positive impact on student engagement and learning (Figure 4). Ninety-seven percent said students were more engaged in class. Forty-one percent of the respondents reported that class attendance had increased. One aspect of student behavior did not improve: there was no perceived increase in students taking responsibility for their learning (the 95% confidence limits were not significantly different from 3.0, the neutral response). The perceptions reported by the faculty from associate's/associate's-dominant and bachelor's-degree institutions closely mirrored each other (Figure 4).

Figure 4. Faculty-reported measures of student engagement in classes taught using scientific teaching and active-learning techniques. Faculty members were asked whether they agreed with statements about whether scientific teaching and active learning engaged their students, whether students became more responsible for their own learning, whether there was more of a student learning community, whether students interacted with one another more outside of class, whether student motivation improved, and whether attendance was increased. A Likert scale was used: 1, strongly disagree; 2, disagree; 3, neutral; 4, agree; and 5, strongly agree. Means and SDs are shown for responses from faculty at bachelor's-degree institutions (circles) and at associate's/associate's-dominant institutions (squares).

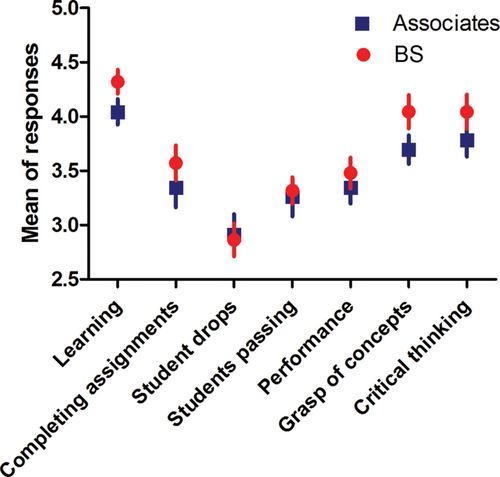

Faculty members were asked about their perceptions of whether applying the lessons of STAR resulted in improvements in measures of student performance (Figure 5). There appears to be no perceived effect on the number of students dropping or passing courses. For all other measures reported, there was a perceived positive impact (95% confidence limits greater than 3.0, the neutral response). Perceived student learning, overall performance, grasp of concepts, and critical thinking/problem solving all were positively impacted. The perceptions reported by the faculty from associate's/associate's-dominant and bachelor's-degree institutions again closely mirrored each other (Figure 5).

Figure 5. Faculty-reported measures of student performance. Faculty members were polled on improvements in student learning, whether fewer students dropped the course, whether more students passed the course, whether overall performance of the students improved, and whether students showed an improved grasp of concepts and an improvement in critical thinking and problem-solving skills. A Likert scale was used: 1, strongly disagree; 2, disagree; 3, neutral; 4, agree; and 5, strongly agree. Means and SDs are shown for responses from faculty at bachelor's-degree institutions (circles) and at associate's/associate's-dominant institutions (squares).

Ninety-seven percent of the respondents reported that STAR had a positive or very positive impact on their teaching, and almost the same number (94%) reported that their participation in STAR led to a perceived positive or very positive impact on student engagement and learning. In this area, there were no differences between the two groups of faculty in their evaluations.

Cross-Program Comparison

Pfund et al. (2009; see their Figure S1B) surveyed SI participants before and immediately after the SI and 1 yr later about the participants’ levels of skill and knowledge of scientific teaching, active learning, assessment, learning goals, and diversity. We questioned STAR participants a year or more after their participation about their gains in knowledge of these topics (Figure 3). The SI participants showed significant self-reported gains after participation in the SI (see Figure S1B in Pfund et al., 2009). STAR participants likewise reported that they had increased their skill in and knowledge of these topics (Figure 3). The questions asked of the SI and STAR participants were not identical, but respondents from both programs clearly reported a gain in skills and knowledge.

Pfund et al. (2009) asked SI participants how frequently they used certain active-learning techniques in their classrooms. For five of the 10 questions common to the two surveys, the proportions of STAR and SI respondents who used a technique more than once in a semester were similar (Table 3). The groups differed in how often they communicated course goals and objectives to students: 91% of the SI respondents did this multiple times during the semester, compared with 48% of STAR participants, although 98% of STAR respondents did this at least once a semester. The responses to questions about open dialogue and debate among students and the use of cooperative learning, problem-based learning, concept maps, and case studies were comparable across the two STAR groups and the SI participants (Table 3). Fewer STAR alumni than SI alumni reported using think–pair–share and scoring rubrics more than once a semester (Table 3). More STAR alumni made use of minute papers, and STAR participants from 2-yr institutions assigned homework more frequently (Table 3).

| Technique | STAR: baccalaureate (n = 23) | STAR: associate's (n = 23) | SI | Pearson's chi-square test |
|---|---|---|---|---|
| Cooperative learning | 70% | 83% | 83% (n = 66) | χ² = 2.14, P = 0.34 |
| Problem-based learning | 65% | 78% | 73% (n = 66) | χ² = 0.99, P = 0.61 |
| Case studies | 48% | 48% | 55% (n = 66) | χ² = 0.49, P = 0.78 |
| Homework | 61% | 96% | 82% (n = 68) | χ² = 9.22, P = 0.01 |
| Think–pair–share | 52% | 65% | 79% (n = 68) | χ² = 6.68, P = 0.04 |
| Concept maps | 26% | 39% | 28% (n = 68) | χ² = 1.22, P = 0.54 |
| Scoring rubrics | 35% | 35% | 65% (n = 68) | χ² = 9.85, P = 0.007 |
| Minute papers | 48% | 35% | 20% (n = 68) | χ² = 6.68, P = 0.04 |
| Communicate course goals and objectives to students | 43% | 52% | 91% (n = 67) | χ² = 26.6, P = 0.00002 |
| Open dialogue and debate among students | 83% | 87% | 85% (n = 67) | χ² = 0.17, P = 0.92 |
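
As an illustration of the test reported in the last column, the sketch below computes Pearson's chi-square for a single technique across the three groups. The respondent counts are an assumption: they are reconstructed from the rounded percentages and group sizes in the table (for think–pair–share, 12 of 23, 15 of 23, and 54 of 68), and the function names are hypothetical. With three groups and a yes/no response there are 2 degrees of freedom, for which the P value reduces to exp(−χ²/2).

```python
import math


def pearson_chi_square(yes_counts, totals):
    """Pearson's chi-square statistic for a (groups x yes/no) table.

    yes_counts[i] is the number of respondents in group i who used the
    technique more than once a semester; totals[i] is the group size.
    Returns (statistic, degrees_of_freedom).
    """
    grand_total = sum(totals)
    total_yes = sum(yes_counts)
    total_no = grand_total - total_yes
    statistic = 0.0
    for yes, n in zip(yes_counts, totals):
        no = n - yes
        expected_yes = n * total_yes / grand_total
        expected_no = n * total_no / grand_total
        statistic += (yes - expected_yes) ** 2 / expected_yes
        statistic += (no - expected_no) ** 2 / expected_no
    df = len(totals) - 1  # (rows - 1) * (columns - 1), with 2 columns
    return statistic, df


def p_value_df2(statistic):
    """Upper-tail probability of a chi-square variable with exactly 2 df."""
    return math.exp(-statistic / 2.0)


# Think-pair-share row; counts reconstructed from rounded percentages (assumption)
yes_counts = [12, 15, 54]   # STAR baccalaureate, STAR associate's, SI
totals = [23, 23, 68]
statistic, df = pearson_chi_square(yes_counts, totals)
p_value = p_value_df2(statistic)
print(f"chi2 = {statistic:.2f}, df = {df}, P = {p_value:.2f}")
# prints: chi2 = 6.68, df = 2, P = 0.04
```

Because the inputs are back-calculated from rounded percentages, other rows may differ from the published statistics in the second decimal place.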

While the STAR mini-institute was modeled after the SI, it was not replicated exactly. Several sessions and the group work and presentations were faithful to the SI model, but other sessions in STAR were changed to account for the different faculty population and the shortened time frame (see Table S2 in Pfund et al., 2009; also see online Supplemental Material Table 1B of this paper). The sessions in which STAR closely followed the SI framework covered the “three pillars” of scientific teaching: assessment, active learning, and diversity (Handelsman et al., 2007). Of these three sessions, the diversity session differed most between the SI and STAR. The diversity session at the SI focused on bias and prejudice and how these affect student learning (Pfund et al., 2009), while at STAR it focused on differences in students at 2- and 4-yr institutions and learning styles. Sessions unique to STAR included teachable unit development, syllabus and teaching plans, and evaluation of teaching, although some of their content was covered as part of several sessions at the SI. For example, the syllabus and teaching plan session at STAR included discussion of using Bloom's taxonomy to develop and evaluate learning goals. Sessions unique to the SI (as reported in Pfund et al., 2009) included scientific teaching, framework for a teachable unit, how people learn, dissemination, and institutionalization, but, again, there was overlap between SI and STAR sessions.

DISCUSSION

The STAR mini-institute was developed in response to the call by the SI to become one of the agents of change and promote improvements in undergraduate biology education. STAR was modeled after the annual SI held at the University of Wisconsin–Madison for faculty from research universities (Pfund et al., 2009). In contrast to the SI, STAR targeted faculty at institutions of higher education representing a broader spectrum of schools, from community colleges and 2-yr institutions to public and private research universities (Table 1). To assess the efficacy of the SI model for training faculty from this broader diversity of institutions, which differ in mission, student preparation, and resources, we pooled our STAR survey respondents into two groups based on the Carnegie undergraduate instructional program categories: faculty from associate's and associate's-dominant institutions (23 respondents from seven institutions) and faculty from institutions with primarily 4-yr degree programs (23 faculty members and 1 postdoctoral fellow from nine institutions).

The survey responses from the two groups of STAR faculty were strikingly similar. The STAR mini-institutes were perceived to have a positive impact on measures of student engagement (Figure 4) and performance (Figure 5). There was, however, no perceived effect on the number of students passing or dropping courses. This similarity of responses from the two groups of faculty suggests that the SI program, which was designed for faculty from 4-yr research institutions, is transferable to faculty from 2-yr institutions.

For most of the participants, having representatives from diverse institutions at STAR had a positive effect on the workshop dynamics. Faculty members from associate's/associate's-dominant institutions, in particular, felt the diversity of faculty had a positive effect. In addition, having colleagues from their own institution who participated in STAR was beneficial in implementing the techniques covered at STAR.

About 64% of the respondents were able to implement at least some of their teachable units in their classes. This may, among other things, reflect teaching assignments, because 94% of the respondents were able to implement at least part of the action plan they developed at the end of STAR. Both groups of faculty utilized assessment tools with similar frequency (Figure 1). Homework and in-class quizzes were the most popular assessment tools; muddiest point papers were the least used by both groups. The only difference in use of assessment tools between the associate's/associate's-dominant faculty and the bachelor's-degree faculty was in the use of homework. Faculty members from associate's/associate's-dominant institutions were more likely to assign homework once a month or more. Faculty members from both groups chose to use active-learning tools with similar frequency. The most popular active-learning technique was student group discussions. Concept mapping was used by the fewest respondents. In comparing the action plans that the participants formulated at the conclusion of their participation in STAR (Table 2) with the activities reported in the 2011 survey of all STAR participants (Figure 1), the participants, as a group, did implement the plans to use minute papers and in-class questions. It is interesting to note that participants apparently broadened their exploration of different assessment tools over and above what they had planned. For instance, none of the respondents initially planned to use scoring rubrics as an assessment tool, while 26 of the 2011 survey respondents used rubrics at least once a semester. Similarly, the participants as a group broadened the active-learning techniques that they used in their classrooms (Figure 2) compared with their initial plans (Table 2).

The differences in the use of these techniques by SI and STAR alumni (Table 3) may reflect different emphases placed on these techniques or the longer time frame of SI, which permits more opportunity for practice. STAR runs approximately half as long as the SI. Also, on the basis of experience, we advised STAR participants to introduce change in their teaching gradually, in amounts that could be managed comfortably. SI alumni used scoring rubrics and think–pair–share exercises more frequently (Table 3). STAR participants initially did not plan to use scoring rubrics (Table 2), but 35% of both STAR groups incorporated these into their classrooms. SI alumni communicated course goals and objectives to students more often than did the STAR participants (Table 3), although 98% of STAR participants did this at least once a semester. STAR participants made more frequent use of minute papers than SI participants (Table 3). Both of these differences between STAR and SI participants may reflect different emphases on these techniques during the two workshops. STAR faculty from 2-yr institutions assigned homework more frequently than the STAR baccalaureate or SI faculty (Table 3). These differences may reflect smaller class sizes in 2-yr institutions, different student populations, or different categories of courses (e.g., introductory vs. upper level). Despite these differences in the frequency of using some techniques of assessment and active learning among the different groups, for the most part, the self-reported use of these techniques was remarkably similar (Table 3). Although the emphases and length of time in the STAR and SI workshops may have differed, the focus of both workshops was on the “three pillars” of scientific teaching, and this appears to have impacted the participants’ approach to teaching in similar ways. The more streamlined STAR mini-institute appears to be an effective mechanism for communicating scientific teaching to faculty with limited time to engage in professional development activities.

Several questions in our survey concerned how best to publicize and recruit faculty to professional development workshops such as STAR. We recruited faculty to STAR through email solicitations to department heads, who were asked to disseminate information to their faculty, and through direct emails to colleagues. Almost a third of the participants were made aware of STAR through colleagues. In an attempt to be accessible to all the educational institutions from which we recruited, STAR paid travel and lodging costs and provided a per diem to faculty traveling to LSU. For participants who were not from the local area, support that defrayed the costs of participating was significant, perhaps due to limited institutional resources for travel to professional development opportunities. Approximately 74% of these individuals reported they could not have participated without a stipend covering travel, lodging, and per diem. There was no difference between faculty from different undergraduate instructional categories in the importance of the stipend. In targeting faculty for professional development programs, it is apparent that attention needs to be given to overcoming institutional resource limitations. The SI uses a somewhat different model, requiring a financial commitment from the sponsoring campus, with the institution covering the travel expenses of its participants (Pfund et al., 2009). The SI model assumes that institutions that have made a financial commitment are more likely to support their faculty members’ efforts at reforming teaching. The STAR and SI models target different kinds of institutions, and both models have their place.

We recognize that the STAR alumni who responded to our survey are a self-selected group, and those who responded were likely to be receptive and enthusiastic about having participated in STAR. The data reported in this study reflect the respondents' perceptions of the impact of STAR on their teaching practices. These faculty members believe that they have changed their teaching practices to more student-centered approaches, but we must regard these conclusions with caution. As reviewed in Kane et al. (2002), after professional development programs, faculty members often overestimate the degree to which they implement student-centered learning practices in the classroom. In a study examining the effects of professional development programs for university faculty emphasizing the use of student-centered teaching practices, Ebert-May et al. (2011) compared the results of participant surveys with videotaped observations of classroom practices evaluated using the Reformed Teaching Observation Protocol (RTOP; Sawada et al., 2002). The comparison demonstrated that the instructors’ perceptions of their use of student-centered approaches did not entirely match the observational evaluation (Ebert-May et al., 2011). Although participants may have an inflated view of their adoption of learner-centered teaching, this does not entirely undermine the value of professional development workshops in training faculty to use these practices.

In determining the efficacy of the professional development programs, Ebert-May et al. (2011) compared average RTOP scores with previously determined categories defining levels of reformed teaching from Sawada et al. (2002). In this analysis, participants with an RTOP score of 45 or lower were considered to have a teacher-centered classroom environment, and those with a score of 46 or higher a learner-centered classroom environment. Ebert-May et al. (2011) did not collect observations of teaching practice prior to the professional development workshops. It is possible that although faculty members had not achieved a learner-centered environment (i.e., they did not achieve an RTOP score of 46 or higher), they may have made significant progress toward it. As part of our present study, we are analyzing videotaped classroom observations of STAR participants both prior to and after attending STAR (Ales et al., 2010). These data are currently being collected and analyzed and will allow us to examine another facet of the efficacy of STAR.

Direct observation of teaching practices is optimal for evaluating the efficacy of professional development programs. Participant surveys, however, are commonly used and, in this case, allowed for the comparison between STAR and the SI. With the recent emphasis on faculty development, such cross-program comparisons will allow organizers of faculty development programs to identify and implement best practices for their target audiences.

For professional development to have a lasting and large impact in reforming STEM teaching at universities, it needs to be an ongoing process; however, short, intense workshops such as the SI (Pfund et al., 2009) and STAR have value in introducing and training faculty in scientific teaching. Application of what was learned at these professional development workshops clearly results in a perceived positive impact on student engagement and performance. Teams from participating institutions can help one another sustain and improve the application of newly acquired teaching techniques. Even if this reflects only an incremental increase in the effectiveness of STEM teaching, it is a needed first step toward the goal of improving undergraduate science education.

A major focus of STAR was to make training in scientific teaching available to biology faculty from a broad spectrum of higher education institutions, including public 2-yr institutions. Handelsman et al. (2004) stressed the importance of research universities taking the lead in reforming undergraduate science education. However, faculty from research universities are not the only ones who can benefit from instruction on scientific teaching. The importance of expanding the reform movement to community colleges cannot be overstated. While the largest percentage of college students enrolled in STEM fields attend public 4-yr institutions (41%), the next highest percentage (31%) are enrolled in public 2-yr institutions (President's Council of Advisors on Science and Technology, 2012). The students enrolled in 2-yr institutions are often not as equipped to succeed as their peers in 4-yr institutions and thus stand to gain more from reforming teaching practices. For example, only 28% of students seeking associate's degrees at community colleges attain the degree within 3 yr, and only 45% attain it within 6 yr (Center for Community College Student Engagement, 2010). To help improve these success rates for community college students, the Center for Community College Student Engagement (2010) has advocated the same teaching reforms (i.e., active learning, assessment, diversity) described by Handelsman et al. (2004). The STAR mini-institute has made an effort to disseminate these teaching practices to faculty at community colleges.

On the basis of our experience and feedback from STAR participants, we believe future faculty development workshops can benefit from the following recommendations. First, providing some type of stipend to help defray the cost of attending such workshops is extremely important to faculty from institutions that do not have resources to dedicate to professional development. This kind of support can help increase the pool of potential participants. Second, reaching out to a diversity of institutions ranging from 2-yr colleges to research universities can help expose the faculty members from all of these institutions to the variety of issues faced in their classrooms and help generate more ideas for improving learning outcomes for diverse student populations. The mix of faculty from 2- and 4-yr institutions may also enhance the dynamics of the workshop. Third, workshops need to present a wide variety of active-learning and assessment techniques. Immediately after STAR, most participants planned to implement only a few of the techniques presented, but it was evident in the follow-up surveys that, as a group, they tried more of the techniques than originally planned. We believe that exposure to these techniques was a springboard for the participants to investigate more innovations in their teaching. While the impact of STAR on student outcomes remains to be investigated, the present study suggests that STAR has had a positive influence in changing the instruction methods of participants to more active approaches. The present analysis indicates that the SI model for training faculty in scientific teaching can successfully be extended to a broad range of higher education institutions, from community colleges to research universities.

ACKNOWLEDGMENTS

This work was supported by National Science Foundation grants DUE 0736927 and DUE 0737050, the LSU College of Science, and the LSU Department of Biological Sciences. We thank Dr. Michelle Withers, Leigh Anne Howell, and Sandra Ditusa for their valuable assistance with this project. We particularly thank Drs. Christine Pfund and Jo Handelsman for providing us with the data from Pfund et al. (2009) to compare with our results.