Student Perceived and Determined Knowledge of Biology Concepts in an Upper-Level Biology Course

Abstract

Students who lack metacognitive skills can struggle with the learning process. To be effective learners, students should recognize what they know and what they do not know. This study examines the relationship between students’ perception of their knowledge and their determined knowledge in an upper-level biology course, using a pre/posttest approach. Significant differences between students’ perception of their knowledge and their determined knowledge existed at both the beginning (pretest) and end (posttest) of the course. Alignment between student perception and determined knowledge was significantly more accurate on the posttest than on the pretest. Students whose determined knowledge was in the upper quartile had significantly better alignment between their perception and determined knowledge on both the pre- and posttest than students in the lower quartile. However, upper- and lower-quartile students did not differ in how they perceived their knowledge. On the posttest, alignment of perception and determined knowledge differed significantly between males and females, with females being more accurate in their perception of knowledge. This study provides evidence of discrepancies between what students perceive they know and what they actually know.

INTRODUCTION

Currently, attention is being given to thinking about thinking, or metacognition, and the essential role it plays in the process of learning (National Research Council [NRC], 2000; Tanner, 2012). Although different explanations of metacognition exist (Schraw and Moshman, 1995; Pintrich, 2002; Donovan and Bransford, 2005; Crowe et al., 2008), all have developed from the description established by Flavell (1979) as “cognition about cognitive phenomena” or “cognition about cognition” (Flavell et al., 2002). Metacognition “includes skills that enable learners to understand and monitor their cognitive processes” (Schraw et al., 2006, p. 112). Metacognition allows learners to manage and evaluate their cognitive skills (Schraw, 1998) and can have important implications during the learning process.

Learning in science can be complex because of the emphasis placed on integrating, organizing, synthesizing, and analyzing content (American Association for the Advancement of Science, 2011). To understand and develop new knowledge, one must utilize prior knowledge (Herman et al., 1992; Tobias and Everson, 1996). Students cannot be effective learners if they cannot distinguish what they know from what they do not. College students in particular need to be able to assess their knowledge, especially in courses that emphasize large volumes of new knowledge. Students who are able to accurately monitor their knowledge can focus their efforts on studying content not yet understood (Tobias and Everson, 1996). However, students who cannot make accurate judgments may not devote effort to studying (Ehrlinger et al., 2008) or may devote effort to studying content already understood while neglecting content that is not (Tobias and Everson, 1996). Content coverage has increased over time in biology courses (Lord, 1998), which could have particularly important implications for learning. Awareness of what one knows is a characteristic of self-regulated learners, who should be able to accurately monitor and evaluate their learning (Zimmerman, 1990). All of these learning elements are components of metacognition.

Students who lack accurate perception of their knowledge or ability can suffer from what has been referred to as a “dual burden,” in that not only do they draw incorrect conclusions but their inability to monitor or evaluate their knowledge means they are unable to recognize their faults (Kruger and Dunning, 1999). It is difficult to comprehend how it would be possible for students to understand new content if they cannot make accurate judgments about their current knowledge (Tobias and Everson, 2002). If a student's perception of his or her skills or knowledge is superior to his or her actual skills, it may have a negative effect on self-efficacy, leading to discouragement (Grant et al., 2009) when the misalignment is discovered. The inaccuracy can also lead to students drawing incorrect conclusions. For instance, some students who perform poorly on an exam may conclude the exam was unfair (Willingham, 2003/2004), instead of concluding their knowledge was not sufficient to perform well. The implications for metacognition in learning environments are substantial, but instructors often do not invest time in determining students’ knowledge or lack of knowledge (Tanner and Allen, 2005).

Studies focusing on undergraduate student metacognition, perception, judgment of knowledge, feeling of knowing, and prediction of performance often have included the completion of contrived tasks in laboratory settings (Glucksberg and McCloskey, 1981; Koriat, 1993; Hargittai and Shafer, 2006). Recently, research has begun to investigate metacognition in college courses. In a study by Grant et al. (2009), students were required to demonstrate their skills using three computer applications, after which their performances were compared with their perceptions of their skills. The results showed a discrepancy between student perception and actual performance for two of the three applications, with students perceiving their skills to be higher than their performances indicated. Knowledge surveys have been used in courses to assess student understanding of their learning in chemistry (Bell and Volckmann, 2011) and biology (Bowers et al., 2005). In these studies, students were asked to indicate their confidence in answering questions related to course content, and their confidence was then compared with performance on other assessments or with their final grades. Bell and Volckmann (2011) found high correlations between students’ indicated confidence on the knowledge survey and knowledge determined by exam scores. However, Bowers et al. (2005) found that, although student confidence on the knowledge survey increased over the semester, confidence was not related to final grades or to correct responses on exam questions. These contradictory results raise concern about the effectiveness of evaluating the relationship between student perception of knowledge and actual knowledge by comparing knowledge surveys with exams.

The discrepancy between perceived and actual knowledge or performance may not affect all students equally. In a study conducted by Ehrlinger et al. (2008), top-performing students in an introductory psychology course were more accurate in predicting their performance than lower-performing students, who significantly overestimated their performance. Hacker et al. (2000) observed a similar trend: top-performing students in an introductory psychology course were significantly more accurate in predicting their exam performance than lower-performing students. Ehrlinger et al. (2008) explain that students who perform poorly may overestimate their abilities because they lack the skills necessary to make accurate judgments. Studies have also found gender-related differences in perception of skills or knowledge. When examining student performance on a science reasoning quiz, Ehrlinger and Dunning (2003) found that, although men and women performed equally, women significantly underestimated their scientific ability compared with men.

A limited body of research exists that has examined students’ perception of knowledge compared with their actual knowledge in an undergraduate course, and even less research has examined this phenomenon in biology. This study is distinct in that it links students’ perception of their knowledge to their determined knowledge about specific biological concepts and terms in an upper-level biology course by using a pre/posttest approach. The research questions investigated in this study include:

What is the relationship between students’ perception of their knowledge of biological concepts and their determined knowledge?

Are students who are more knowledgeable about biological concepts and terms better at perceiving their knowledge?

Does alignment between perception and knowledge improve through the course?

What is the relationship between gender and students’ perceived and determined knowledge?

If students have an accurate perception of their knowledge about biology concepts and terms, there would be no difference between perception and determined knowledge. It was expected that students with a better understanding of biology concepts and terms would be more accurate in evaluating their understanding. It was expected that the alignment between student perception and determined knowledge would increase in accuracy from the beginning to end of the course as students gained a deeper understanding about the complexity of concepts and terms.

METHODS

Research Context

This study took place at North Dakota State University, a large land-grant research university. The course was a three-credit, upper-level elective biology course that met three times weekly and covered physiological mechanisms underlying life history trade-offs and constraints in an ecological and evolutionary context. The course emphasized building upon previous course work to understand complex concepts and connections across biology. Two of three main objectives for this course, as outlined in the syllabus, were to become familiar with terminology and concepts in physiological ecology and to understand physiological adaptation and evolution by natural selection. The only prerequisite for this course was a semester of introductory “organismal” biology lecture and laboratory. Instruction was primarily teacher centered and lecture based and was intermixed with student discussions. Students were primarily evaluated using portfolio-based assessments that accounted for 90% of students’ final grades.

Data Collection

Data on students’ perceived and determined knowledge were collected through the completion of a pre- and posttest. The pre- and posttest were designed by the instructor and were completed by students as an ungraded in-class activity at the beginning and end of the course, respectively. The pre- and posttest were identical and were composed of open-ended questions designed to elicit student knowledge of biological concepts and terms (e.g., acclimation, genotype, symmorphosis, evolution) that aligned with important concepts in the course. The pre- and posttest contained three components, two of which were analyzed for this study. First, students were asked to indicate their knowledge level of ∼24 biological concepts and terms as: concepts I know, concepts I sort of know, concepts I’ve heard of but don't know, and concepts I’ve never heard of. Through this designation, students indicated how they perceived their knowledge of each biology concept and term. The second component analyzed for this study prompted students to demonstrate their knowledge or understanding by providing a written response for each of the concepts and terms; a response could include a definition, example, or synonym. The third component of the pre- and posttest had students group or connect concepts and was not analyzed as part of this study. For more information about the pre- and posttest, see the Supplemental Material. Only undergraduates were included in this study, and all 40 enrolled undergraduates agreed to participate and to allow access to their course work. Seniors accounted for 60% of the participants, 85% were zoology majors, and 27 were male. The entering grade point average (GPA) for students was 3.0 ± 0.612.

Data Analysis

The level of students’ indicated perceived knowledge was converted into an ordinal scale (concepts I know = 4, concepts I sort of know = 3, etc.). Student written responses from the pre- and posttest were analyzed to determine knowledge of biological concepts and terms by using a rubric designed by Ziegler and Montplaisir (2012) that was developed to code student knowledge of concepts from written responses (Table 1). If a student's response fit multiple areas of the rubric, it was coded into the category with which it most closely aligned. This rubric was converted into an ordinal scale for analysis (advanced = 4, intermediate = 3, etc.). Instances in which students did not designate a concept at a particular knowledge level or did not provide a written response were coded as nonresponses and scored as zero.
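The coding step above can be sketched in Python. This is a minimal illustration, not the authors' procedure: the label strings are paraphrased from the instrument, and the values 2 and 1 for the lower categories are an assumption about what "etc." covers. Only the rule that missing designations or written responses are nonresponses scored 0 comes directly from the text.

```python
# Sketch of the ordinal coding described above. Label strings and the
# values 2 and 1 are assumptions inferred from "etc." in the text.
PERCEPTION_SCALE = {
    "concepts I know": 4,
    "concepts I sort of know": 3,
    "concepts I've heard of but don't know": 2,
    "concepts I've never heard of": 1,
}

RUBRIC_SCALE = {
    "advanced": 4,
    "intermediate": 3,
    "novice": 2,
    "naive": 1,
}


def ordinal_score(label, scale):
    """Return the ordinal value of one response; a missing or blank response is a nonresponse scored 0."""
    if label is None or not label.strip():
        return 0
    return scale[label]


def total_score(labels, scale):
    """Sum the ordinal values across all concepts for one student."""
    return sum(ordinal_score(label, scale) for label in labels)


if __name__ == "__main__":
    # Hypothetical student: knows one concept, sort of knows another, skips a third.
    responses = ["concepts I know", "concepts I sort of know", None]
    print(total_score(responses, PERCEPTION_SCALE))  # 7
```

Scoring nonresponses as 0 places them below the lowest coded level, so a blank answer always lowers a student's total.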

Table 1. Rubric used to code student written responses (Ziegler and Montplaisir, 2012)

| Coding rubric | Coding explanation | Pretest osmosis example | Posttest adaptation example |
|---|---|---|---|
| Nonresponse | — | — | — |
| Naive | Response is incorrect or is too vague to determine whether it is correct. | “Moving from less dense to more” | “Using an existing trait to serve a different purpose” |
| Novice | Response contains both incorrect and correct statements and may be incomplete. | “Diffusion of oxygen from high to low concentration” | “An evolutionary trait of an species that has been selected for and increases fitness” |
| Intermediate | Response is correct but is not complete. | “Diffusion of H2O” | “A heritable trait that is advantageous for a particular environment or situation” |
| Advanced | Response is correct and complete. | “Diffusion of water through [a] cell membrane” | “Its an evolutionary process which enables an organism to survive in a particular environment” |

For the purposes of analysis, two researchers independent from the course individually coded student responses from the pretest. The researchers discussed any disagreement in scores until each was resolved. Then one researcher continued to code student responses from both the pre- and posttest. After the initial coding, one researcher completed a reiteration of the coding process to determine level of agreement. The intrarater reliability for the pre- and posttest was 0.915 and 0.921, respectively.
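The reliability coefficients above (0.915 and 0.921) are reported without naming the statistic. As a hedged illustration only, the sketch below computes two common agreement measures between a first and a repeated coding pass of the same responses: simple percent agreement, and Cohen's kappa, which discounts agreement expected by chance. Either may differ from the coefficient the authors actually used.

```python
from collections import Counter


def percent_agreement(first_pass, second_pass):
    """Share of responses that received the same code in both passes."""
    if len(first_pass) != len(second_pass) or not first_pass:
        raise ValueError("both passes must code the same, non-empty set of responses")
    matches = sum(a == b for a, b in zip(first_pass, second_pass))
    return matches / len(first_pass)


def cohens_kappa(first_pass, second_pass):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(first_pass)
    observed = percent_agreement(first_pass, second_pass)
    counts_1 = Counter(first_pass)
    counts_2 = Counter(second_pass)
    # Chance agreement: probability that both passes independently pick the same code.
    expected = sum(counts_1[code] * counts_2[code] for code in counts_1) / (n * n)
    if expected == 1:
        return 1.0  # both passes used one identical code throughout
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # Hypothetical rubric codes (0 = nonresponse ... 4 = advanced) for six responses.
    first = [4, 3, 0, 2, 1, 4]
    second = [4, 3, 0, 2, 2, 4]
    print(round(percent_agreement(first, second), 3))  # 0.833
    print(round(cohens_kappa(first, second), 3))  # 0.786
```

Kappa is lower than raw agreement because some matches would occur by chance given how often each code is used.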

Student performance on the pre- and posttest was matched in order to determine gain in performance. Gain was calculated with the following equation outlined by Kohlmyer et al. (2009), g = (F − I)/(total − I), where F represents the student posttest score, I represents the student pretest score, and “total” is the total possible score on the assessment. Only students who completed both components on the pre- and posttest were included in the analysis of gain (n = 30). Analysis was conducted to compare upper-quartile and lower-quartile student perceived and determined knowledge. There was no significant difference between the entering GPA of upper- and lower-quartile students.
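A minimal sketch of the gain calculation g = (F − I)/(total − I), applied only to students matched across both tests. The student IDs and the total possible score in the example are hypothetical; the article does not state the maximum score.

```python
def normalized_gain(pretest, posttest, total):
    """Gain g = (F - I) / (total - I): the fraction of the possible improvement achieved."""
    if pretest >= total:
        raise ValueError("gain is undefined when the pretest score is already at the maximum")
    return (posttest - pretest) / (total - pretest)


def matched_gains(pre_scores, post_scores, total):
    """Compute gain only for students who appear on both the pretest and posttest."""
    matched_ids = pre_scores.keys() & post_scores.keys()
    return {
        student: normalized_gain(pre_scores[student], post_scores[student], total)
        for student in sorted(matched_ids)
    }


if __name__ == "__main__":
    pre = {"s01": 20, "s02": 50, "s03": 35}  # s03 has no posttest and is excluded
    post = {"s01": 60, "s02": 80}
    print(matched_gains(pre, post, total=100))  # {'s01': 0.5, 's02': 0.6}
```

Dividing by the room left for improvement (total − I) lets students with different starting scores be compared; a student whose score dropped would receive a negative g, which this sketch does not clip.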

RESULTS

Students’ Perception of Knowledge and Determined Knowledge

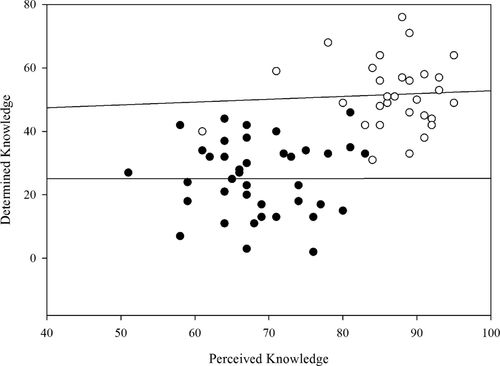

The mean score on the pretest for student perception (mean = 68.65, SD = 7.2131) was significantly higher than for determined knowledge (mean = 25.15, SD = 11.5925; t(68) = 10.36, p < 0.0001). There was no relationship between perceived and determined knowledge on the pretest (F(1, 38) = 0.000, p = 0.9954, r² = 9.036 × 10⁻⁷; Figure 1). On the pretest, 39.7% of student responses indicated that students knew the concepts; however, when their knowledge was determined, only 8.3% of responses were coded as advanced and 12.8% as intermediate. Of the responses for perceived knowledge, 2.2% were coded as nonresponses, whereas 53.0% of the responses for determined knowledge were coded as nonresponses.

Figure 1. Relationship between perceived and determined knowledge total scores for the pretest (F(1, 38) = 0.000, p = 0.9954, r² = 9.036 × 10⁻⁷) and posttest (F(1, 28) = 0.0953, p = 0.7599, r² = 0.003391). Dark circles represent the relationship between student perceived knowledge and determined knowledge on the pretest, and the open circles show the relationship on the posttest. The solid lines represent the regression for the pre- and posttest.
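For a regression with a single predictor, the F statistic reported alongside r² follows directly from it: F(1, n − 2) = r²(n − 2)/(1 − r²). The sketch below computes r² from paired totals and converts it to F. Plugging in the posttest values from Figure 1 (r² = 0.003391, with n = 30 implied by the F(1, 28) degrees of freedom) gives F ≈ 0.0953, matching the reported value. The function names are illustrative, not from any statistics package.

```python
def r_squared(x, y):
    """Coefficient of determination for a simple linear regression of y on x."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need at least three paired observations")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    if sxx == 0 or syy == 0:
        raise ValueError("r squared is undefined when either variable is constant")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy * sxy / (sxx * syy)


def f_from_r_squared(r2, n):
    """F statistic with (1, n - 2) degrees of freedom for a one-predictor regression."""
    return r2 * (n - 2) / (1 - r2)


if __name__ == "__main__":
    # Posttest values from Figure 1: r2 = 0.003391, n = 30.
    print(round(f_from_r_squared(0.003391, 30), 4))  # 0.0953
```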

Mean student scores for perception and determined knowledge also differed significantly on the posttest (t(70) = 9.8145, p < 0.0001). On average, student perceived knowledge (mean = 81.0938, SD = 22.3323) was higher than determined knowledge (mean = 51.9688, SD = 11.4314). Students overestimated their knowledge: 75.9% of student responses indicated that students knew the concepts, while only 24.1% of the responses were determined to be advanced and 24.6% intermediate. Of the responses for perceived knowledge, 4.2% were coded as nonresponses, whereas 19.1% of the responses for determined knowledge were coded as nonresponses on the posttest.

By matching each student's pre- and posttest, gain was calculated for perceived knowledge (0.635 ± 0.272, n = 30) and determined knowledge (0.387 ± 0.152, n = 30). Student perception of knowledge differed significantly from pre- to posttest (t(65) = 20.1502, p < 0.0001), as did determined knowledge (t(52) = 14.4269, p < 0.0001).

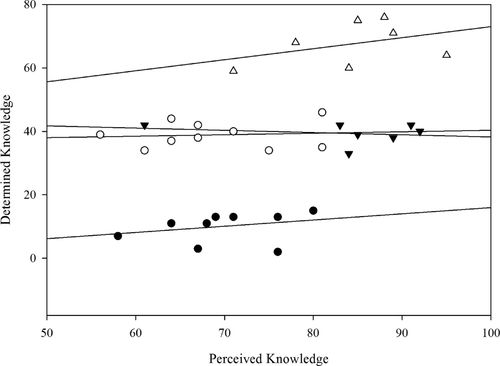

Student Knowledge and Ability to Perceive Knowledge

On the pretest, alignment between perception and determined knowledge differed significantly between students who performed in the upper quartile for determined knowledge and students in the lower quartile (χ²(7, n = 479) = 101.237, p < 0.0001; Figure 2). However, lower-quartile students did not perceive their knowledge any differently than upper-quartile students, either on the pretest (χ²(4, n = 479) = 4.735, p = 0.3156) or on the posttest (χ²(4, n = 336) = 1.526, p = 0.8220). Students in the upper quartile also demonstrated significantly better alignment between perception and determined knowledge than students in the lower quartile on the posttest (χ²(7, n = 479) = 74.163, p < 0.0001).

Figure 2. Relationship between total perceived and determined knowledge scores in upper- and lower-quartile students on the pre- and posttest. The dark circles represent the relationship between lower-quartile students’ perceived and determined knowledge on the pretest (n = 9, r² = 0.7619), and the open circles represent the relationship between upper-quartile students’ perceived and determined knowledge on the pretest (n = 9, r² = 0.0086). The filled and open triangles represent the relationship between perceived and determined knowledge on the posttest for lower-quartile (n = 7, r² = 0.0513) and upper-quartile (n = 7, r² = 0.1579) students, respectively. The solid lines represent the regression for each relationship.
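The article does not state the rule used to assign students to the upper and lower quartiles, so the cutoffs below are an assumption for illustration. This sketch uses the quartile cut points returned by Python's `statistics.quantiles` (default "exclusive" method) and keeps students at or below Q1 and at or above Q3; other rules, or ties at a cut point, would change the group sizes.

```python
from statistics import quantiles


def quartile_groups(scores):
    """Return (lower, upper) sets of student IDs by determined-knowledge score.

    Students scoring at or below Q1 form the lower group; at or above Q3, the upper group.
    Ties at a cut point put every tied student in that group, so group sizes can vary.
    """
    q1, _median, q3 = quantiles(scores.values(), n=4)
    lower = {student for student, score in scores.items() if score <= q1}
    upper = {student for student, score in scores.items() if score >= q3}
    return lower, upper


if __name__ == "__main__":
    # Hypothetical determined-knowledge totals for eight students.
    totals = {f"s{i}": 10 * i for i in range(1, 9)}
    lower, upper = quartile_groups(totals)
    print(sorted(lower), sorted(upper))  # ['s1', 's2'] ['s7', 's8']
```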

Alignment between Student Perception and Knowledge over the Course

Students’ perception of their knowledge differed significantly between the pre- and posttest (χ²(4, n = 1728) = 353.998, p < 0.0001). For instance, 39.7% of student responses on the pretest indicated that students knew a concept or term, compared with 75.9% on the posttest. Students’ determined knowledge also differed significantly between the pre- and posttest (χ²(4, n = 1727) = 250.870, p < 0.0001), with substantially more responses coded at higher knowledge levels on the posttest than on the pretest. The largest shift occurred in the nonresponse category: 53% of determined-knowledge responses were coded as nonresponses on the pretest, compared with 19.1% on the posttest.
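The pre/post comparisons above cross-tabulate responses by test and by coded level. With five categories (nonresponse plus four levels) and two tests, the degrees of freedom are (2 − 1)(5 − 1) = 4, as in the reported χ²(4, …) values. A minimal, dependency-free sketch of the Pearson statistic follows; it returns only the statistic and degrees of freedom, and a p-value could then be obtained from the chi-square distribution (for example with SciPy's `scipy.stats.chi2.sf`). Dropping categories that no one used is a choice made for this sketch, not something the article describes.

```python
def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table of counts.

    Rows are groups (e.g., pretest vs. posttest); columns are response categories.
    Categories with no responses in any group are dropped before testing.
    """
    column_totals = [sum(column) for column in zip(*table)]
    used = [j for j, total in enumerate(column_totals) if total > 0]
    table = [[row[j] for j in used] for row in table]
    column_totals = [column_totals[j] for j in used]
    row_totals = [sum(row) for row in table]
    if 0 in row_totals:
        raise ValueError("every group needs at least one response")
    grand_total = sum(row_totals)

    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if group and category were independent.
            expected = row_totals[i] * column_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected
    degrees_of_freedom = (len(table) - 1) * (len(used) - 1)
    return statistic, degrees_of_freedom


if __name__ == "__main__":
    # Hypothetical counts: two groups, three categories, one of them unused.
    counts = [[10, 0, 20], [20, 0, 10]]
    statistic, df = chi_square_independence(counts)
    print(round(statistic, 3), df)  # 6.667 1
```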

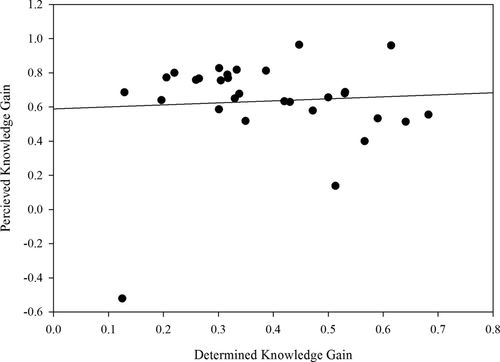

Student alignment between perception and determined knowledge was significantly better on the posttest than the pretest (F(1, 38) = 8.5258, p = 0.0059, r² = 0.1833), with students having more accurate perceptions of their knowledge on the posttest compared with the pretest. A relationship also exists between perceived knowledge gain and determined knowledge gain (t(28) = 5.04, p < 0.0001; Figure 3).

Figure 3. Relationship between perceived knowledge gain and determined knowledge gain. The solid line represents the regression (r² = 0.004).
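The article does not spell out how alignment was scored. One plausible reading, assumed here for illustration only, is the per-concept difference between the perceived and determined ordinal scores: 0 means perception matched the coded response, positive values mean overestimation, and negative values mean underestimation. On 0 to 4 scales this yields nine possible values (−4 to +4), which would be consistent with the 8 degrees of freedom reported for the two-group gender comparison, though that is an inference. Distributions like these can be cross-tabulated by group and compared with a chi-square test of independence.

```python
from collections import Counter


def alignment_distribution(perceived, determined):
    """Count per-concept differences (perceived - determined) on the ordinal scales.

    0 = aligned, positive = overestimated, negative = underestimated.
    This alignment definition is an assumption for illustration.
    """
    if len(perceived) != len(determined):
        raise ValueError("each concept needs both a perceived and a determined score")
    return Counter(p - d for p, d in zip(perceived, determined))


def share_aligned(perceived, determined):
    """Fraction of concepts where perceived and determined scores are equal."""
    distribution = alignment_distribution(perceived, determined)
    total = sum(distribution.values())
    if total == 0:
        raise ValueError("no concepts to compare")
    return distribution[0] / total


if __name__ == "__main__":
    # Hypothetical scores for one student on four concepts.
    perceived = [4, 4, 3, 2]
    determined = [4, 1, 3, 0]
    print(sorted(alignment_distribution(perceived, determined).items()))  # [(0, 2), (2, 1), (3, 1)]
    print(share_aligned(perceived, determined))  # 0.5
```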

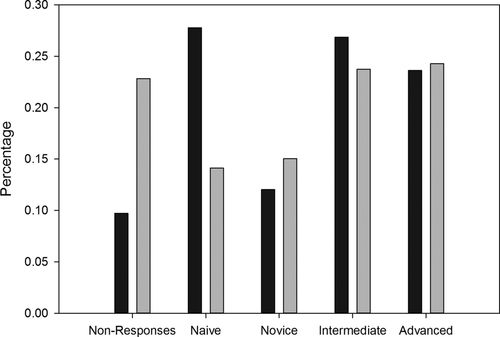

Gender

There was no difference between males and females on the pretest; however, the distribution of determined knowledge differed significantly between males and females on the posttest (χ²(4, n = 768) = 31.646, p < 0.0001). On the posttest, females had a lower perception of their knowledge but higher determined knowledge than males (Figure 4). One of the most substantial differences was that males had a higher proportion of nonresponses for determined knowledge on the posttest (16.41%) than females (2.73%). Alignment of perceived and determined knowledge on the posttest also differed significantly between males and females (χ²(8, n = 960) = 37.924, p < 0.0001), with females being more accurate in their perception of knowledge than males.

Figure 4. The distribution of male (n = 23) and female (n = 9) determined knowledge on the posttest. Determined knowledge on the posttest differed significantly between genders (χ²(4, n = 768) = 31.646, p < 0.0001). The dark gray bars represent females, and the light gray bars represent males.

DISCUSSION

The main aim of this study was to investigate whether students are able to accurately perceive their knowledge about biology concepts and terms. Both student knowledge and the accuracy of students’ perceptions improved from pre- to posttest, suggesting that students’ ability to monitor or evaluate their knowledge improved over the duration of the course. Even so, significant differences between students’ perceived and determined knowledge remained at the end of the course. These discrepancies suggest that students in this study may still be novice-like in their thinking about biology concepts and terms. Experts should be able to monitor and regulate their understanding (NRC, 2000) and to differentiate what they know from what they do not (Pintrich, 2002). Such alignment was not found on the pretest, and although student knowledge improved significantly by the posttest, misalignment persisted. Although students gained in both perceived and determined knowledge, the gain was higher for perceived than for determined knowledge. Differences in student thinking and in the accuracy of alignment can have important implications for learning, including characterizing the relationship among what content students know, what content they accurately recognize knowing, and how that accuracy may be developed.

In this study, there was misalignment between student perceived and determined knowledge of biology concepts and terms. This misalignment could be attributed to students mistaking familiarity with concepts and terms for knowledge (Willingham, 2003/2004). However, the pre- and posttest gave students an option that should have teased out this distinction: students could indicate that they had heard of a concept but did not know it. Discrepancies between perceived and determined knowledge could also be attributed to students initially not fully grasping the complexity of concepts and terms. Students may not be aware that concepts are more complex if they have not been introduced to that complexity. This explanation is supported by Grant et al. (2009), who examined student perception and knowledge of computer applications. In that study, misalignment may have been observed because students had a simplistic view of the functions of the applications. If a student has not been exposed to the complexity of a concept, then it is reasonable to assume that the student would not be able to recognize that he or she does not have a deep understanding of that concept. Based on this explanation, alignment between perception and determined knowledge would be expected to improve from the beginning to the end of the semester as students gain a deeper understanding of concepts and their complexity, which is what was found in this study.

The volume of nonresponses is troublesome. Even though the course focused on students learning biological concepts and terms, a high number of nonresponses occurred on both the pre- and posttest. In this study, a number of students indicated familiarity with or knowledge of concepts and terms but then did not provide a written response to support the level indicated. This pattern was more pronounced on the pretest than on the posttest. In the “tip of the tongue” phenomenon, a person is unable to retrieve certain knowledge while being aware of possessing it; this is similar to the feeling of knowing, in which a person believes knowledge can be retrieved even if not at that moment (Miner and Reder, 1994). The high number of nonresponses on the pretest may be explained by a feeling of knowing or a tip-of-the-tongue sensation.

It is possible to forget a piece of information that you can recall now and to recall a piece of information now that you may later forget (Flavell, 1979). Students may recognize or know a term but be unable to demonstrate that recognition or knowledge at the specific time an assessment is given. Because the pre- and posttest in this study required students to construct their own responses, students could rely only on knowledge they could retrieve, not on knowledge triggered by recognizing an answer. Outcomes may differ for a multiple-choice pre- and postassessment, because the answer choices could trigger student memory. Answer choices give a student the opportunity to respond correctly without necessarily understanding the concept, as it is typically easier to recognize an answer from a list of possibilities than to construct a response.

Performance and Perception

This study shows that students whose performance was in the upper quartile for determined knowledge on the pre- and posttest had more accurate judgments about their knowledge as seen through significantly better alignment of their perception and determined knowledge. Our findings are supported by a previous study investigating student judgment of learning and monitoring accuracy in an educational psychology course. Cao and Nietfeld (2005) found the ability to make accurate judgments about learning is related to higher performance. It was surprising that in the study presented here there was no difference between upper-quartile and lower-quartile students’ perception of their knowledge. This suggests that even though there were significant differences in determined knowledge between the upper-quartile and lower-quartile students, the groups did not perceive their knowledge to be any different.

Two explanations could account for this finding: lower-quartile students may have overestimated their knowledge, or upper-quartile students may have underestimated theirs. Kruger and Dunning (1999) suggest that these explanations could operate jointly, because, in a study investigating a variety of metacognitive tasks, individuals deemed incompetent overestimated their performance while competent individuals underestimated theirs. The results presented here appear to support the hypothesis that lower-quartile students overestimated their knowledge, rather than that upper-quartile students substantially underestimated theirs: alignment between perceived and determined knowledge was significantly more accurate for upper-quartile students.

Gender

Results from this study show that, although males and females had similar perceived and determined knowledge at the beginning of the course, a gap emerged on the posttest. Little research has examined how the relationship between perceived and determined knowledge relates to gender. Brewe et al. (2010) found that a gap between males and females in understanding of physics concepts increased over the duration of a physics course that utilized modeling instruction. Conversely, when examining final course grades and learning gains in an introductory biology course, Lauer et al. (2013) found that no achievement gap existed between men and women. Ehrlinger and Dunning (2003) examined performance on a science quiz and found that men and women performed equally, but women underestimated their scientific reasoning ability compared with men. These differences may not be due to females underestimating their knowledge; as results from Lundberg et al. (1994) indicate, males may instead overestimate theirs. Gender studies in science, technology, engineering, and mathematics (STEM) fields are widespread. The relationship found in this study adds to previous findings on gender in STEM disciplines, yet the relationship between gender and knowledge perception is still unclear.

Limitations

Although there is debate about the validity and reliability of self-reported student data, especially in institutional research (Herzog and Bowman, 2011), the instruments and design used in this study minimize some of these concerns and provide important insights into student thinking. On the instruments implemented in this study, students not only indicated their perception of knowledge for concepts and terms but also demonstrated that knowledge on the same instrument at a single point in time. Comparing measures collected at different times, or asking students to judge their knowledge over time, may be a less accurate way to compare student perception and knowledge. As Bowman (2010) explains, when students complete assessments at the same time they estimate their skills, they do so with reasonable accuracy.

Information concerning students’ perceived and determined knowledge was collected in a written format, which usually minimizes social desirability bias (Gonyea, 2005). Nevertheless, students may have been reluctant to provide accurate information about their perceived knowledge, introducing some social desirability bias. The pre- and posttest were administered as part of the course, and students may have been apprehensive about indicating a novice level of knowledge for certain concepts and terms, knowing the instructor would examine the tests. This study also investigated upper-level students, the majority of whom were biology and zoology majors. We would presume that this population would have a deeper understanding of their own biological knowledge than introductory or nonmajor students, who are often the population explored in similar studies investigating student confidence in performance.

Because of the high volume of nonresponses, the effective sample size was reduced, both for the matched pre/posttest analyses and when evaluating individual responses, so the magnitude of the results could be overestimated. An additional limitation is that the reason a student did not provide a response for a particular concept or term can only be speculated. It is not possible to determine whether nonresponses were due to students not having enough time to complete the pre- and posttest or due to lack of knowledge. However, the instructor was interested in student knowledge, and it can be presumed that a reasonable amount of time was given for completion. The course in which this study occurred was primarily lecture based and teacher centered. It is possible that the lack of alignment observed was due to the course not implementing or modeling instruction that aims to facilitate metacognitive behavior. However, the type of assessment implemented in this course, portfolio-based assessment, could have influenced students’ ability to monitor their learning, as portfolios are expected to be reflective (Lynch and Shaw, 2005) and allow for self-assessment throughout the learning process, which should correlate with students developing more self-knowledge (Pintrich, 2002). Through this type of assessment, students were evaluated on the thoroughness of understanding evidenced by their ability to make connections among concepts across the semester, on whether they revisited reflections, and on their ability to identify misconceptions and preconceptions, all of which align with components of metacognitive skills.

CONCLUSIONS

The dilemma concerning the shortfalls in student understanding in science has been highlighted as an important issue (Perkins, 1993). If students are unable to monitor or evaluate their learning by being able to differentiate between what they do and do not know, they cannot engage in metacognitive activities (Tobias and Everson, 2002). The accuracy of monitoring one's self-knowledge is critical in the learning process, because it is doubtful students will put forth effort to learn content if they believe they already have an understanding of the content (Pintrich, 2002). Engaging students’ metacognitive skills is essential when promoting student understanding of concepts or connections between concepts (Tanner and Allen, 2005); however, this study suggests that student metacognitive skills may also need to be developed.

As far as we are aware, no study has investigated student perception of knowledge and determined knowledge concerning biology concepts in an upper-level biology course. This study suggests that there should be concern not only over student knowledge of biology but also over students’ perception of that knowledge. The results from this study provide evidence that students struggle with monitoring or regulating their cognition, as observed through the misalignment between student perceived and determined knowledge. Overall, students’ perception of their knowledge was not well aligned with what they actually knew. What has led students to not have an accurate perception of their knowledge remains unclear. Additional research is necessary to determine how to increase alignment or how to improve students’ ability to more accurately perceive their knowledge.

ACKNOWLEDGMENTS

The research received approval from the local institutional review board (IRB protocol SM10164). This research was supported in part by National Science Foundation HRD 0811239 and by the Department of Biological Sciences and the Graduate School at North Dakota State University. We thank the instructor and students who willingly allowed us access to their materials through participation in this research project.