Want to Improve Undergraduate Thesis Writing? Engage Students and Their Faculty Readers in Scientific Peer Review

Abstract

One of the best opportunities that undergraduates have to learn to write like a scientist is to write a thesis after participating in faculty-mentored undergraduate research. But developing writing skills doesn't happen automatically, and there are significant challenges associated with offering writing courses and with individualized mentoring. We present a hybrid model in which students have the structural support of a course plus the personalized benefits of working one-on-one with faculty. To optimize these one-on-one interactions, the course uses BioTAP, the Biology Thesis Assessment Protocol, to structure engagement in scientific peer review. By assessing theses written by students who took this course and comparable students who did not, we found that our approach not only improved student writing but also helped faculty members across the department—not only those teaching the course—to work more effectively and efficiently with student writers. Students who enrolled in this course were more likely to earn highest honors than students who only worked one-on-one with faculty. Further, students in the course scored significantly better on all higher-order writing and critical-thinking skills assessed.

INTRODUCTION

Science progresses through writing, from early emails brainstorming about ideas; to drafts of manuscripts, feedback from coauthors, and posters and other presentations; to the culmination of all these efforts, the peer-reviewed publication. Given how critical it is for scientists to have strong writing skills, it seems paradoxical that the teaching of writing is not central to science education. Certainly, some science instructors teach writing in their courses, but many bemoan the poor writing skills of college graduates (Labianca and Reeves, 1985; Moore, 1994; Berthouex, 1996; Jerde and Taper, 2004; Joshi, 2007).

One of the best opportunities that undergraduates have to learn to write like a scientist is to write a major research paper or thesis after participating in a faculty-mentored undergraduate research experience. These research experiences are known to help students develop critical-thinking skills and research methods (Lopatto, 2003; Seymour et al., 2004; Hunter et al., 2007); the skills that students are least likely to develop, however, are writing skills (Kardash, 2000; Lopatto, 2004). This is unfortunate because an undergraduate thesis is perhaps the first authentic writing experience that science students have and is, therefore, one of the best opportunities to learn scientific writing. Too often, science is taught as a collection of facts instead of as a way of thinking (Songer and Linn, 1991; Linn and Hsi, 2000), and canned laboratories and writing assignments in which students simply summarize what they have learned perpetuate this myth. Students who write theses, on the other hand, are engaged in critical and scientific ways of thinking; they ask scientific questions, synthesize literature, select appropriate methods, evaluate data, and interpret results. For some students, this is the first time they have something to contribute to an ongoing scientific conversation and, as a result, they are highly invested in their writing and are particularly receptive to writing instruction.

To develop as writers, students must understand both the conventions of scientific writing and what their readers expect (Gopen and Swan, 1990). The first is fairly straightforward to teach, and there are many books describing the structure of scientific papers and what information belongs in each section (e.g., Day and Gastel, 2006; Pechenik, 2006). The second issue is more challenging. Strong writers are able to communicate complex ideas clearly because they anticipate which details their audience needs—and which they don't—at particular locations in the text. Strong writers are also able to consider the different ways that language can be interpreted by readers, and they try to reduce ambiguity with precise words and explicit reasoning. Typically, faculty assume that students will just figure out what readers expect by reading scientific articles (Joshi, 2007). This approach may work for some students who read many well-written articles, but a more direct and expedient way to develop this skill is to engage in the iterative nature of drafting, soliciting feedback, and revising.

There are two common models for how to work with undergraduate thesis writers. One model is for research supervisors to work one-on-one with students. The advantage of this approach is the personalized attention that students receive. A disadvantage of this approach is the possibility that overzealous faculty might take over with extensive editing, in some cases rewriting students’ work. Although the final draft may be better, the student did not make the writing choices and therefore may not have developed much as a writer. Another disadvantage of this model is the possibility that overextended faculty might focus their mentoring on the science and neglect the writing altogether.

Another model for how to work with undergraduate thesis writers is to offer a course to support student writers. The advantages of writing courses are that instructors explicitly teach the conventions of scientific writing, and the structured nature of a course helps students stay on track. Unfortunately, some science departments might have a difficult time staffing such a course given that scientists are not often well versed in writing pedagogy, and many assume that teaching such a course requires an unmanageable time commitment.

In this article, we present a hybrid model that combines the structured nature of a course with the personalized attention of working one-on-one with faculty members. Instead of a traditional course in which the instructor provides most of the feedback on students’ writing, in this course students get the majority of their feedback from faculty not teaching the course and from their classmates. To optimize these interactions, the course offers structured opportunities to engage in the scientific peer-review process. Students learn to actively solicit and work with feedback from multiple readers, and their readers learn to respond to student writing efficiently and effectively.

Given the significant challenges associated with adding a writing course into an already packed curriculum, the payoffs need to be substantial to justify the effort by both faculty and students. To determine the effectiveness of this approach, we compared the quality of theses written by students enrolled in this course versus theses by students who simply worked one-on-one with faculty members. We assessed theses for both the quality of writing as well as the development of critical-thinking skills. In this article, we present details of the course and results from our study, and we describe how our approach benefits both students and faculty.

METHODS

Undergraduate Theses in Biology

All undergraduates undertaking a biology thesis at our university work with a faculty research supervisor, someone who mentors the student on his or her research project through an independent study or an internship. In addition to mentoring about science, research supervisors are charged with providing feedback and guidance to students in the preparation of their theses, particularly in addressing the significance of the research question, accuracy of the literature review, appropriateness of the methods, and the interpretation of results. All thesis writers are also assigned a faculty reader, another faculty member in the biology department who is not in the lab in which the student is conducting research. The primary role of the faculty reader is to offer feedback about the writing, particularly in addressing overall clarity and whether or not the thesis clearly demonstrates the student's in-depth understanding of the goals and context of the study. Even with the support of research supervisors and faculty readers, some students still struggle to understand how to write a coherent scientific paper. Additionally, some faculty lack the time or expertise to provide sufficient guidance for novice science writers (Reynolds et al., 2009). Therefore, we undertook the development, implementation, and evaluation of a course to support student writers.

The Course

Writing in Biology is a writing-intensive, full-credit, one-semester course that meets once a week for 2.5 h. The course is designed for undergraduates working on a thesis or major research paper and is recommended, but not required, for all biology honors students. The primary objectives of the course are for students to learn the conventions of scientific writing, how to construct a narrative from their research, and how to anticipate and address readers’ expectations through soliciting and responding to feedback (see Supplemental Material A for course syllabus and schedule).

This course is certainly not the first to teach science writing or to have guidelines for thesis writers (e.g., Hand et al., 2004; Yalvac et al., 2007; Kayfetz and Almeroth, 2008; Yeoman and Zamorski, 2008). What makes this course unique is that one of its primary goals is to teach students to work more efficiently and effectively with their research supervisors and faculty readers. Given that our department has up to 70 thesis writers per year, we needed an effective approach to mentoring students through the writing process without overburdening the instructor of this course.

The guiding philosophy of the course is that by teaching students to engage effectively in scientific peer review—the same process of self-regulation and evaluation used by professional scientists to improve quality and uphold standards—they will have an authentic learning experience that they are more likely to transfer beyond the context of the course. This approach is also ideal for research supervisors and faculty readers because 1) they are already familiar with the approach, 2) scientific peer-review (when done well) models best practices in teaching writing, and 3) this approach requires less effort than the traditional approach of editing student writing. By the end of the semester, students will understand both the basic standards of scientific writing and the specific expectations for an undergraduate thesis. They will also be able to solicit high-quality feedback from faculty and their peers, respond to feedback in thoughtful and deliberate ways when revising, and produce high-quality written work. Grades in the course are based not only on the quality of the final drafts but also on how well students engaged in the scientific peer-review process.

To achieve these learning outcomes, the course has several interrelated components. The course instructor teaches students to engage in scientific peer review using BioTAP, the Biology Thesis Assessment Protocol (Reynolds et al., 2009). BioTAP is a tool that guides and supports students and their faculty members through the thesis-writing process; it includes both a rubric that articulates expectations for the thesis and a guide to the drafting–feedback–revision process that is modeled after professional scientific peer review. BioTAP is primarily a teaching tool that promotes the development of writing and critical-thinking skills by teaching students how to evaluate and respond to feedback during the revision process. BioTAP also facilitates meaningful communication between faculty members and students by offering guidelines for better methods of giving feedback on drafts. Finally, BioTAP is an assessment tool that makes evaluations of student work more consistent between faculty members and helps departments assess the overall quality of student work.

At the beginning of the semester, BioTAP serves as an extensive “guide to authors”; its detailed rubric describes the requirements for honors theses and the department's standards. The rubric includes mid- and lower-order issues such as organization and proper writing mechanics but emphasizes higher-order writing and critical-thinking skills such as how to construct an argument for the significance of the students’ research within the context of the scientific literature. Students learn how to use BioTAP as a guide for evaluating writing by using it to assess short excerpts of student writing. These assessments are discussed in class so students can calibrate their assessments against those of the course instructor and fellow students (the calibration exercise could be implemented using the technology of Calibrated Peer Review [see Table 1 and Reynolds and Moskovitz, 2008] if class size were large enough to warrant it). Once students develop proficiency using the rubric, they have opportunities to practice using it to evaluate their peers’ writing; peer reviews are worth 20% of the course grade and are graded on the justification students give for their assessments.

Table 1. BioTAP rubric questions that assess writing and critical-thinking skills

| BioTAP question |
|---|
| 1. Is the writing appropriate for the target audience? |
| 2. Does the thesis make a compelling argument for the significance of the student's research within the context of the current literature? |
| 3. Does the thesis clearly articulate the student's research goals? |
| 4. Does the thesis skillfully interpret the results? |
| 5. Is there a compelling discussion of the implications of findings? |
| 6. Is the thesis clearly organized? |
| 7. Is the thesis free of writing errors? |
| 8. Are the citations presented consistently and professionally throughout the text and in the list of works cited? |
| 9. Are the tables and figures clear, effective, and informative? |

Once students have written initial drafts, they learn how to solicit the types of feedback that would be most helpful to them given where they are in the writing process. Instead of passively submitting a draft to a faculty member or peer and asking “let me know what you think,” students are required to reflect on the strengths and weaknesses of particular drafts and to ask readers direct, focused questions that get at the struggles they identify in their own work. Certainly, students will not be aware of all their struggles, but this active approach to soliciting feedback tends to produce high-quality comments that students can use toward revision.

Through the use of BioTAP's guidelines, reviewers learn to respond to, but not edit, students’ writing (see Supplemental Material B for detailed guidelines). Rather than fixing students’ writing, reviewers are prompted to ask questions and make comments such as “When I read this I thought…”, “I expected you to say… but instead you said…”, and “I am confused here. What message are you trying to convey?” Reviewers are encouraged to ask about what is written rather than try to guess what the writer intended.

After students have received feedback, they need practice making writing choices. To make this process explicit, students create a table in which the first column contains comments from all reviewers of a particular draft. The second column describes the writing choices the student made, and the third column states where in the text the change was made, if any change was needed. These tables highlight any contradictions between reviewers’ comments and make students’ choices visible so the course instructor can help them decide whether their choice was appropriate and effective. These tables also help give students a voice in what is often an unequal power relationship between themselves and faculty members.

Certainly there is some overlap between the roles of the faculty research supervisors, faculty readers, and the course instructor, but this overlap is essential given that the course is not required. Furthermore, feedback from multiple readers is essential to the development of strong writers, an assumption we test explicitly in this study.

Assessment Methods

This study compares the quality of undergraduate honors theses written by students who worked one-on-one with faculty members versus students who also enrolled in this course. We assessed honors theses written by 190 biology majors who graduated from Duke University between 2005 and 2008. To be eligible for the honors program, students must have a minimum grade point average (GPA) of 3.0 within the biology major and must complete a thesis. Students disqualified from the honors program, due to low GPAs or not completing the thesis, were not included in this study. Of the 190 students in this study, 47 enrolled in the course.

To assess the quality of theses written by students in the two groups, we used BioTAP's rubric (Reynolds et al., 2009). The rubric contains 13 questions assessing writing, critical thinking, and scientific accuracy. For this study, we focused only on the nine questions that assess writing and critical-thinking skills (Table 1); we did not assess scientific accuracy because the theses in this study were based on research conducted in many subdisciplines of biology and our raters were not experts in all the subdisciplines. BioTAP questions 1–5 are higher-order writing and critical-thinking skills, dealing with issues such as audience, evidence, and argumentation. Questions 6–9 are mid- to lower-order writing concerns, dealing with issues such as the structure of scientific writing, writing errors, formatting, citations, and design.

Each question was scored on a scale from 1 to 5. A score of 1 indicated that the thesis did not meet the department's minimum acceptable standards for that question. A score of 3 indicated that the minimum standards were met, and a score of 5 indicated that the standards of excellence were mastered. A score of 2 or 4 was assigned if a thesis contained sections that fit into more than one category. Given that questions 6–9 dealt with mid- to lower-order writing issues, they were weighted half as much as questions 1–5. Therefore, the maximum possible score a thesis could receive was 35 points. A thesis was eligible for highest honors if it scored 34–35 points, high honors if it scored 27–33 points, and honors if it scored <27 points.
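The weighting and cutoffs described above can be sketched in a few lines. This is a minimal illustration, not part of BioTAP: the function names are hypothetical, and the treatment of half-point totals between 33 and 34 is an assumption based on the "score >33" cutoff used in the analysis.

```python
# Illustrative sketch of the scoring scheme; names are assumptions, not part of BioTAP.

HIGHER_ORDER = range(1, 6)  # questions 1-5, full weight
LOWER_ORDER = range(6, 10)  # questions 6-9, half weight


def total_score(scores):
    """Weighted total for a dict mapping question number (1-9) to a score of 1-5."""
    if set(scores) != set(range(1, 10)):
        raise ValueError("expected scores for questions 1 through 9")
    if any(not 1 <= s <= 5 for s in scores.values()):
        raise ValueError("each score must be between 1 and 5")
    higher = sum(scores[q] for q in HIGHER_ORDER)
    lower = 0.5 * sum(scores[q] for q in LOWER_ORDER)
    return higher + lower


def honors_level(total):
    """Map a weighted total to an honors level.

    Assumption: half-point totals between 33 and 34 count as highest honors,
    following the "score >33" cutoff used in the analysis.
    """
    if total > 33:
        return "highest honors"
    if total >= 27:
        return "high honors"
    return "honors"


all_fives = {q: 5 for q in range(1, 10)}
all_threes = {q: 3 for q in range(1, 10)}
print(total_score(all_fives), honors_level(total_score(all_fives)))    # 35.0 highest honors
print(total_score(all_threes), honors_level(total_score(all_threes)))  # 21.0 honors
```

A thesis that only meets the minimum standard on every question (all 3s) totals 21 points, which shows how far above the minimum a thesis must score to reach high honors.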

We hired nine biology graduate students and postdoctoral fellows to read and rate theses. Each rater completed more than 8 h of training in the use of the BioTAP rubric. This training included a workshop in which raters examined student writing that illustrated inadequate, adequate, and excellent examples of each of the nine writing issues described in the rubric. Each rater then read and assessed several sample theses that were not part of our assessment. After the individual assessments were completed, all raters discussed their responses and, as a group, calibrated their scores.

Each de-identified thesis was read by two raters, and each rater individually scored the thesis. Then, raters who read the same thesis discussed how they rated it and looked for any discrepancies in their assessments. This process resulted in ongoing recalibration occurring after every two to three theses read. Individual scores were never changed, but an additional “consensus score” was given to each thesis based on the two raters’ discussion. Consensus scores are not an average, but rather a score agreed upon by both raters as a result of rereading and discussing relevant sections of the thesis.

As reported previously (Reynolds et al., 2009), the interrater reliability of the BioTAP rubric was .72 (p < 0.01), calculated using the Pearson correlation coefficient for total scores. The joint probability of agreement for BioTAP questions 1–9 ranged from 76% to 90%, with kappa values from .41 to .67 (all p < 0.01). Taken as a whole, these results indicate moderate to strong agreement between raters using the BioTAP rubric.
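For readers unfamiliar with these agreement statistics, the following sketch shows how the joint probability of agreement and a kappa value can be computed for one rubric question. It assumes the unweighted Cohen's kappa for two raters, which the text does not specify, and the ratings are hypothetical values invented for illustration, not data from this study.

```python
from collections import Counter


def joint_agreement(rater_a, rater_b):
    """Proportion of theses on which the two raters gave the same score."""
    return sum(a == b for a, b in zip(rater_a, rater_b)) / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa: agreement corrected for chance agreement."""
    n = len(rater_a)
    observed = joint_agreement(rater_a, rater_b)
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: product of each rater's marginal proportions per category.
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when both raters use a single category")
    return (observed - expected) / (1 - expected)


# Hypothetical scores (1-5) from two raters on ten theses for one question.
rater_1 = [5, 3, 4, 5, 3, 2, 4, 5, 3, 4]
rater_2 = [5, 3, 4, 4, 3, 2, 4, 5, 3, 3]

agreement = joint_agreement(rater_1, rater_2)
kappa = cohens_kappa(rater_1, rater_2)
print(f"agreement = {agreement:.2f}, kappa = {kappa:.2f}")
```

Kappa is lower than raw agreement because it discounts the matches that two raters would produce by chance given how often each uses each score.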

Comparability of the Course and No-Course Groups

Because the course is not required and students self-selected into each of the two groups, it was necessary to determine whether the two groups were comparable. We hypothesized that there would be no difference between the two groups based on gender or ethnicity. To test this hypothesis, we conducted two-by-two chi-square analyses for the two groups and each of the following variables: gender; African Americans; Asians, Asian Americans, and Pacific Islanders; Caucasians; Hispanics; or other ethnicity (as specified by the student). If differences were found between the two groups, we then tested the hypothesis that gender and ethnicity do not affect thesis quality. This was done with three-by-two chi-square analyses for the three honors levels (honors, high honors, and highest honors) and any demographic variable that was found to be different between the two groups. For subgroups with small sample sizes, the Fisher exact test was used.
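As an illustration of the two-by-two analyses, the sketch below applies a Pearson chi-square test and a two-sided Fisher exact test to the Hispanic enrollment counts reported in Table 2 (7 of 47 students in the course, 6 of 143 not in the course). The chi-square is computed without a continuity correction; that choice is an inference from the fact that it reproduces the reported statistic, not something stated in the text. Function names are hypothetical.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]].

    Returns (chi2, p) with p taken from the chi-square distribution with 1 df.
    """
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p = math.erfc(math.sqrt(chi2 / 2))  # survival function for 1 df
    return chi2, p


def fisher_exact_2x2(a, b, c, d):
    """Two-sided Fisher exact p-value for the table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of all tables with the same margins
    that are no more probable than the observed table.
    """
    row1, col1, n = a + b, a + c, a + b + c + d

    def prob(k):
        return math.comb(row1, k) * math.comb(n - row1, col1 - k) / math.comb(n, col1)

    low = max(0, col1 - (n - row1))
    high = min(row1, col1)
    p_observed = prob(a)
    return sum(p for p in map(prob, range(low, high + 1)) if p <= p_observed * (1 + 1e-9))


# Rows: Hispanic, not Hispanic. Columns: course, no course (counts from Table 2).
chi2, p_chi2 = chi_square_2x2(7, 6, 40, 137)
p_fisher = fisher_exact_2x2(7, 6, 40, 137)
print(f"chi2 = {chi2:.2f}, p = {p_chi2:.3f}, Fisher p = {p_fisher:.3f}")
```

The Fisher test is the safer choice here because one expected cell count (Hispanic students in the course) is below 5, where the chi-square approximation becomes unreliable.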

We also hypothesized that there were no significant differences between the two groups based on prior academic history. To test this hypothesis, we used a t test to evaluate group comparability on SAT verbal and math scores and grades in a first-year writing course (the only prior writing course required of all our students). All academic and demographic data were obtained through the university registrar; SAT scores were available for only 177 of the 190 students in this study, and grades were available for only 188 students. Institutional Review Board approval was obtained for this research.

Analysis of Data

To determine whether there were any differences in the quality of theses written by students enrolled in the course (n = 47) versus those who were not (n = 143), we compared the consensus scores for each group. To assess overall quality, we compared the distributions of the frequencies of theses earning honors (score <27), high honors (score 27–33), and highest honors (score >33) between the two groups using a three-by-two chi-square analysis.

To determine whether there were differences in student mastery of specific writing and critical-thinking skills between groups, we compared the distributions of mastery of standards (score = 5) and nonmastery of standards (score <5) for BioTAP questions 1–9 using two-by-two chi-square analyses.

RESULTS

Overall Quality of Honors Theses

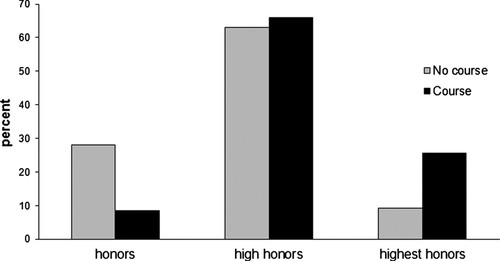

The distribution of the frequencies of theses earning honors, high honors, or highest honors differed significantly for students who enrolled in this course (n = 47) and those who did not (n = 143) (χ2 = 13.1, df = 2, p < 0.001). Of the students who took the course, 26% earned highest honors and only 9% earned honors; conversely, of those who did not take the course, only 9% earned highest honors and 28% earned honors (Figure 1). Although the majority of students in both groups (63–66%) wrote well enough to earn high honors, taking the course shifts the distribution toward highest honors.

Figure 1. Taking the course significantly increases the likelihood of earning highest honors and decreases the likelihood of earning honors. The distributions of the frequencies of theses earning honors, high honors, and highest honors for students who took the course (n = 47) and those who did not (n = 143) differed significantly (χ2 = 13.1, df = 2, p < 0.001). Consensus scores reported.
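The reported chi-square statistic can be checked with a short calculation. The counts below are not reported directly; they are reconstructed from the rounded percentages in the text (26%, 66%, and 9% of 47 students; 9%, 63%, and 28% of 143 students) and should be read as an assumption. The function name is hypothetical.

```python
def chi_square(table):
    """Pearson chi-square statistic and degrees of freedom for an r-by-c table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, df


# Rows: highest honors, high honors, honors. Columns: course, no course.
# Counts are reconstructed from rounded percentages (an assumption).
honors_by_group = [
    [12, 13],
    [31, 90],
    [4, 40],
]

chi2, df = chi_square(honors_by_group)
print(f"chi2 = {chi2:.1f}, df = {df}")  # chi2 = 13.1, df = 2
```

Most of the statistic comes from the highest honors and honors rows; the high honors row contributes almost nothing, consistent with the similar proportions of high honors in both groups.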

Quality of Critical-Thinking and Writing Skills

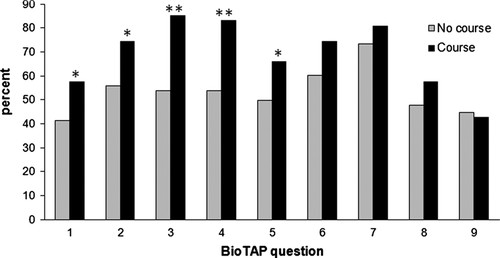

Students who took the course received significantly higher scores than those not taking the course on all the BioTAP questions that address higher-order writing and critical-thinking skills (p < 0.05 for questions 1, 2, and 5, and p < 0.01 for questions 3 and 4; Figure 2). There were no significant differences between the two groups for BioTAP questions 6–9, which deal with mid- to lower-order writing skills.

Figure 2. Taking the course significantly improves students’ scores on higher-order writing and critical-thinking skills. The percentage of theses in which the standards of excellence were met was significantly higher for questions 1, 2, and 5 (*p < 0.05) and for questions 3 and 4 (**p < 0.01) for students who enrolled in the course (n = 47) vs. those who did not (n = 143). Two-by-two χ2 analyses were performed for each BioTAP question, comparing mastery of the standards of excellence (score = 5) vs. nonmastery of these standards (score < 5, only mastery data shown) for theses in each group. Questions 1–5 are higher-order writing and critical-thinking issues, whereas questions 6–9 represent mid- to lower-order writing issues (Table 1). Consensus scores reported.

Comparability of Groups

Although there was no difference between the two groups in the relative percentage of African Americans, Caucasians, Asians (including Asian Americans and Pacific Islanders), or “other” group, Hispanics were significantly more likely to enroll in the course than non-Hispanics (p = 0.01; Table 2). However, the distribution of Hispanic students’ writing scores was not significantly different from the rest of the student population (p = 0.11; Table 3). We did not detect a significant difference in gender composition between the two groups (p = 0.06; Table 2), but gender does appear to have an effect on overall performance (p = 0.02; Table 3). More men than expected performed in the lowest honors category, but there were no differences between the percentage of women and men performing in the highest honors category.

Table 2. Demographic composition of the course and no-course groups

| | Course (n) | No course (n) | χ2 | p |
|---|---|---|---|---|
| African American | 13% (6) | 18% (26) | | |
| Not African American | 87% (41) | 82% (117) | 0.74 | 0.39 |
| Asian, Asian American, and Pacific Islander | 13% (6) | 25% (36) | | |
| Not Asian, Asian American, or Pacific Islander | 87% (41) | 75% (107) | 3.16 | 0.08 |
| Caucasian | 53% (25) | 43% (61) | | |
| Not Caucasian | 47% (22) | 57% (82) | 1.58 | 0.21 |
| Hispanic | 15% (7) | 4% (6) | | |
| Not Hispanic | 85% (40) | 96% (137) | 6.35 | 0.01 |
| Other (as denoted by student) | 6% (3) | 10% (14) | | |
| Not other | 94% (44) | 90% (129) | 0.50 | 0.48 |
| Female | 72% (34) | 57% (81) | | |
| Male | 28% (13) | 43% (62) | 3.65 | 0.06 |

Table 3. Honors level by demographic group

| | Asian, Asian American, and Pacific Islander (n) | Not Asian, Asian American, or Pacific Islander (n) | χ2 | p |
|---|---|---|---|---|
| Highest honors | 10% (4) | 14% (21) | | |
| High honors | 74% (31) | 61% (90) | | |
| Honors | 17% (7) | 25% (37) | 2.390 | 0.30 |

| | Hispanic (n) | Not Hispanic (n) | p* |
|---|---|---|---|
| Highest honors | 30% (4) | 12% (21) | |
| High honors | 62% (8) | 64% (113) | |
| Honors | 8% (1) | 24% (43) | 0.1124 |

| | Female (n) | Male (n) | χ2 | p |
|---|---|---|---|---|
| Highest honors | 13% (15) | 13% (10) | | |
| High honors | 70% (81) | 53% (40) | | |
| Honors | 17% (19) | 33% (25) | 7.628 | 0.02 |

No significant differences were detected between the two groups with regard to prior academic performance (Table 4). Because the difference in SAT math scores approached statistical significance (p = 0.06), we conducted further analyses. An analysis of variance of SAT math scores by level of honors found no significant differences (highest honors: n = 24, mean = 738; high honors: n = 111, mean = 743; honors: n = 42, mean = 735; F = 0.37, df = 2, p = 0.69), and SAT math scores were not significantly associated with the writing consensus score (Spearman rank correlation coefficient: r = .03, p = 0.70).

Table 4. Prior academic performance of the course and no-course groups

| | Course: mean (n) | Course: SD | No course: mean (n) | No course: SD | t value | df | p |
|---|---|---|---|---|---|---|---|
| Grade in first-year writing | 3.77 (46) | 0.31 | 3.72 (142) | 0.37 | −0.86 | 186 | 0.39 |
| SAT verbal | 710 (46) | 51.40 | 725 (131) | 59.06 | 1.55 | 175 | 0.12 |
| SAT math | 726 (46) | 60.37 | 745 (131) | 56.41 | 1.87 | 175 | 0.06 |
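The t values in Table 4 can be approximated from the summary statistics alone. The sketch below assumes a pooled-variance (Student) t test, which is consistent with the reported degrees of freedom (n1 + n2 − 2) but is not stated explicitly. Because the published means and SDs are rounded, the result matches the tabled value only approximately. The function name is hypothetical.

```python
import math


def pooled_t(mean_1, sd_1, n_1, mean_2, sd_2, n_2):
    """Two-sample t statistic with pooled variance, computed from summary statistics.

    Returns (t, df), where t is based on mean_1 - mean_2.
    """
    df = n_1 + n_2 - 2
    pooled_variance = ((n_1 - 1) * sd_1 ** 2 + (n_2 - 1) * sd_2 ** 2) / df
    standard_error = math.sqrt(pooled_variance * (1 / n_1 + 1 / n_2))
    return (mean_1 - mean_2) / standard_error, df


# SAT verbal from Table 4, entered as no course minus course to match the table's sign.
t, df = pooled_t(725, 59.06, 131, 710, 51.40, 46)
print(f"t = {t:.2f}, df = {df}")  # close to the tabled t = 1.55, df = 175
```

The small gap between the computed and tabled values reflects rounding of the group means to whole SAT points.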

DISCUSSION

Given the considerable constraints associated with science faculty teaching writing courses, we developed a hybrid course in which students have the structural support of a course plus the personalized benefits of working one-on-one with faculty. In our model, the course instructor teaches the conventions of scientific writing and how to anticipate reader expectations but also structures students’ engagement in the scientific peer-review process. Armed with this knowledge, and using BioTAP as a guide, students then get the majority of the feedback on their writing from research supervisors, faculty readers, and their peers. Additionally, the course instructor was able to teach a large number of students each semester because she was not the only person giving feedback to student writers. This approach not only improved student writing but also helped faculty members across the department—not only those teaching the course—to work more effectively and efficiently with student writers (see Reynolds et al., 2009, for a description of how we obtained faculty buy-in).

Our study found that students who enrolled in this course were more likely to earn highest honors than students who only worked one-on-one with their research supervisors and faculty readers. We also found that students in the course scored significantly better on all higher-order writing and critical-thinking skills assessed. Although we were not able to ascertain directly which aspects of the course contributed most to these improvements, we suspect that it was a combination of effects. First, students noted on course evaluations that a primary benefit of the course was working through examples of student writing in class so that they would better understand the conventions of scientific writing, what readers expect from scientific papers, and the expectations for honors theses. Second, we assume that students who took the course had a better understanding of how to use BioTAP when interacting with faculty members. Third, we suspect that the course provided a safety net for students whose research supervisors or faculty readers lacked the time or expertise to provide them with sufficient guidance on their writing. Finally, the course structure certainly helped students stay on track, not only with drafting sections of their theses but also with soliciting timely feedback.

Although there were no significant differences in gender composition or prior academic abilities between the two groups of students in this study, and ethnicity had no significant effect on writing scores, we did find that a disproportionate number of Hispanics opted to take the course. Given the concern for recruiting and retaining minorities in the sciences, we wondered what factors might influence this choice. We currently do not know the reasons, but we plan to survey students in future courses to try to understand this issue more fully.

The next phase of our research will compare the quality of theses written by students who used BioTAP to engage with their faculty supervisors and readers but who did not take the course versus theses written by students who took the course structured around BioTAP. Given the benefits that we detected for students who engaged in scientific peer review with their research supervisors and faculty readers, our department now makes this tool available to all students who are writing a thesis, and the overwhelming majority of our faculty members use BioTAP to assess theses.

ACKNOWLEDGMENTS

We thank Jennifer Hill and Matt Serra in Duke University's Office of Assessment for their assistance with collecting academic and demographic data and for running many of the statistical tests for us. Additional thanks go to our raters: Arielle Cooley, Whitney Jones, Laura Simmons Kovacs, Tami McDonald, Cary Moskovitz, Kathryn Perez, Andrew Procter, Amy Sayle, and Robin Smith. J.A.R. thanks the American Society for Microbiology's Biology Scholars Program (National Science Foundation Award 0715777) for helping her develop her research ideas. Funding for this project was provided through the Duke Endowment.