Improved Student Learning through a Faculty Learning Community: How Faculty Collaboration Transformed a Large-Enrollment Course from Lecture to Student Centered

Abstract

Undergraduate introductory biology courses are changing based on our growing understanding of how students learn and rapid scientific advancement in the biological sciences. At Iowa State University, faculty instructors are transforming a second-semester large-enrollment introductory biology course to include active learning within the lecture setting. To support this change, we set up a faculty learning community (FLC) in which instructors develop new pedagogies, adapt active-learning strategies to large courses, discuss challenges and progress, critique and revise classroom interventions, and share materials. We present data on how the collaborative work of the FLC led to increased implementation of active-learning strategies and a concurrent improvement in student learning. Interestingly, student learning gains correlate with the percentage of classroom time spent in active-learning modes. Furthermore, student attitudes toward learning biology are weakly positively correlated with these learning gains. At our institution, the FLC framework serves as an agent of iterative emergent change, resulting in the creation of a more student-centered course that better supports learning.

INTRODUCTION

Introductory undergraduate science courses may be the first and sometimes only exposure to the scientific process for our students. For students who continue on to careers in science, introductory courses need to foster lifelong learning skills, including the ability to gather, evaluate, and apply information. Equally important, students who do not major in a scientific field need these same skills to gain a fundamental understanding of how science impacts society (American Association for the Advancement of Science [AAAS], 2011). Therefore, it is critical that introductory courses accurately represent the nature of science and its importance to society (National Research Council [NRC], 2003; AAAS, 2011; Henderson et al., 2011). The traditional didactic lecture alone is not well suited to student exploration of scientific concepts or the development of these critical-thinking skills (Haak et al., 2011). Further, students who are uninspired by the representation of science in their introductory courses may elect to pursue other career options (NRC, 2003; AAAS, 2011). An additional challenge for instructors in undergraduate biology courses is the need to adapt to a rapidly growing and dynamic body of scientific knowledge (NRC, 2003).

Recent advances in educational research demonstrate that undergraduate biology education is better implemented through active, student-centered learning strategies, as opposed to traditional instructor-centered pedagogies (AAAS, 2011; Singer et al., 2012; Freeman et al., 2014). Active-learning strategies require students to engage with concepts and then provide students with feedback on their learning process (Freeman et al., 2014). Such strategies have been shown to enhance interest, conceptual understanding, and science process skills (Prince, 2004; Armbruster et al., 2009; Freeman et al., 2011, 2014; Haak et al., 2011).

Implementation of these evidence-based pedagogies in large-enrollment courses is an open challenge for faculty members. Altering the structure of a large course requires changes wherein course goals, teaching strategies, and assessments must evolve simultaneously. The capital spent on these ventures is instructor time, which is constrained by a multitude of other faculty commitments. Consequently, departments search for methods of change that are both time efficient and show strong promise for success (Sirum et al., 2009; Addis et al., 2013). The challenges are magnified in large-enrollment, multisection, and multi-instructor courses and compounded by the need for consistency between sections (Ueckert et al., 2011). Further, courses need to continuously adapt to the changing field of biology and practices in teaching (NRC, 2003). Hence, it is of interest to evaluate the impact of course change that fosters collaboration while maintaining individual faculty autonomy.

At Iowa State University (ISU), our approach to this challenge draws on the literature of emergent change (Henderson et al., 2011) and uses faculty learning communities (FLCs) in which instructors collaborate as an instructional team (Sirum et al., 2009; Addis et al., 2013). FLCs capitalize on faculty autonomy, enthusiasm, and ability to react to student needs. Because the FLC involves a minimal time commitment (1 h every 2 wk), the process is sustainable. This model of emergent change allows instructors to coordinate and pool resources, while still allowing individual instructors the freedom to define their own teaching (Sirum et al., 2009; Addis et al., 2013). Importantly, this model supports ongoing, iterative change. The faculty in the FLC are able to continuously adapt courses to further improve student learning outcomes and to reflect rapidly changing fields. Overall, FLCs have been successful in the past within multiple courses at ISU, with faculty participants reporting the use of active-learning strategies in their courses more often than faculty who did not participate in an FLC (Addis et al., 2013). However, it is unclear whether the self-reported increase in use of active-learning strategies among FLC members translates to measurable changes in course structure and student learning.

To investigate how an FLC can impact student learning, we studied the outcomes of a faculty group working to transform an introductory biology course at ISU. Collectively, the FLC members worked to integrate more active-learning strategies into the Principles of Biology II course, the second in the introductory course series taken by students in several life sciences majors. The main goal of the study was to determine how course changes, as implemented by instructors within an FLC in this course, impact student learning and attitudes. This work extends prior results that come from course interventions with controlled implementations and less faculty flexibility (Burrowes, 2003; Walker et al., 2008; Armbruster et al., 2009; Freeman et al., 2011). Here, we report how integrating assessment of student outcomes with an FLC can increase both faculty engagement with active-learning strategies and student learning.

METHODS

Course Description

Principles of Biology II is the second semester of introductory biology at ISU, covering cell and molecular biology and plant and animal physiology. This course serves more than 1600 students annually (generally first- and second-year students) and is divided into five face-to-face sections in stadium-seated classrooms and two online sections. During this study, course sections ranged from ∼140 to 290 students. This course is required for biology and genetics majors and for many other life sciences majors, including horticulture and kinesiology. Overall, more than 80 distinct majors are represented in the course each year, including majors outside the life sciences and other science, technology, engineering, and mathematics fields. Throughout this study, sections were generally team-taught by two faculty members. Graduate teaching assistants were available for grading during the Spring and Fall semesters of 2013. Students in all sections completed five to six multiple-choice exams throughout the semester, including a cumulative final exam. Exams counted for more than 50% of the final grade for all sections. Enrollment is historically higher during Spring semesters, as Principles of Biology II is the second course in the introductory biology sequence. Additionally, biology majors (including general biology, genetics, and microbiology) comprise a greater proportion of enrolled students in the Spring (Table 1). In 2010, individual Principles of Biology II instructors were beginning to reexamine the course learning objectives. While some instructors were experimenting with clickers in the classroom, Principles of Biology II was predominantly a traditional large-enrollment lecture course. In 2011, the instructors formed the Principles of Biology II FLC, giving structure, momentum, and common purpose to course reform efforts.

| Semester | Section | Instructor team | Total enrollment (n) | Study participant count (n) | % Biology major | % Life sciences major |
|---|---|---|---|---|---|---|
| Fall 2012 traditional | — | 1 | 184 | 47 | 0 | 72.9 |
| Spring 2013 online | — | 2 | 138 | 88 | 6.4 | 62.9 |
| Fall 2012 reform | — | 3 | 193 | 62 | 18.8 | 81.2 |
| Spring 2013 reform | a | 4 | 173 | 96 | 31.3 | 82.0 |
| Spring 2013 reform | b | 5 | 277 | 114 | 22.8 | 81.5 |
| Spring 2013 reform | c | 6 | 286 | 140 | 30.4 | 88.4 |
| Fall 2013 reform | a | 3 | 190 | 58 | 9.4 | 74.4 |
| Fall 2013 reform | b | 7 | 255 | 72 | 8.3 | 75.0 |

The FLC

The Principles of Biology II FLC members worked together to 1) redesign course objectives to reflect a greater emphasis on central biological concepts and skills; 2) incorporate more student-centered learning to meet defined learning goals; 3) investigate, evaluate, and adapt specific active-learning strategies and activities for their particular classroom settings; and 4) administer and interpret assessments of student learning to inform future course changes. Active-learning strategies included clicker questions, group discussions, and group problem solving. Starting in the Fall of 2012, all 10 Principles of Biology II instructors participated in the FLC, including one instructor who elected to keep a traditional lecture format, which provided a comparison group for this study. From 2012 through 2013, 10 instructors taught Principles of Biology II, with one new instructor joining the course in the Fall of 2013. Eight out of the 10 original FLC members continued teaching Principles of Biology II and participating in the FLC throughout the three semesters of the study.

During biweekly meetings, the FLC discussed education research literature, pedagogical resources, and personal experiences implementing active-learning strategies. Through these discussions, faculty instructors began to create a reformed student-centered course, shifting focus from memorizing facts to building skills and engaging students in the real-world applications of biology. A typical FLC meeting would include one instructor presenting an activity used in the course and a reflection on how well the activity worked and how students performed on the activity. Follow-up discussion revolved around suggestions for improved implementation (e.g., encouraging more students to share out with the entire class) and troubleshooting. Resources for the active-learning material were shared with the entire FLC so that other instructors could make use of them in their sections. As a result, many activities were used across multiple course sections. Members of the FLC did not commit to using active-learning strategies for a specific amount of time within each section; rather, instructors chose the activities and techniques with which they were comfortable and confident.

Postdoctoral scholars supported the Principles of Biology II FLC by presenting current education research literature, providing resources for generating activities, participating in discussions during meetings, and conducting research into student learning. The postdoctoral scholars also presented preliminary findings from data on student learning and attitudes in the course. One postdoctoral scholar worked through the Spring of 2012; a second began in the Fall of 2012 and continued through to the end of this study. The postdoctoral scholars earned their PhDs in the biological sciences and had experience with teaching. Their time was split between education research for the FLC and research in the biological sciences. Their efforts in education research were supported by collaborators in the School of Education, other discipline-based education researchers at ISU, and biology faculty with an interest in education research.

Reform Sections

Six of the seven face-to-face sections offered during the course of this study incorporated active-learning strategies: one in the Fall of 2012, all three sections in the Spring of 2013, and both sections in the Fall of 2013. For the rest of this paper, these sections will be referred to as “reform sections.” Reform sections included a variety of active-learning strategies while covering the same content as the traditional section, with a few modifications in the order in which topics were presented. Beginning in Spring 2013, all reform sections used undergraduate learning assistants in the classroom to help ensure that groups remained on task and to promote and deepen student discussions (Otero et al., 2010). Individual instructors determined which activities and strategies would be used in their classes. The same instructional team taught the Fall 2012 reform section and the Fall 2013 section a. Different instructional teams taught all other sections (Table 1).

Active-learning strategies commonly included clicker questions and think–pair–share activities (Caldwell, 2007; Armbruster et al., 2009) and, less frequently, longer group assignments (Tanner et al., 2003; Gaudet et al., 2010). Questions used during instruction (e.g., for clicker questions or think–pair–share activities) were sourced from textbooks and publicly available online materials or were designed by instructors and graduate teaching assistants. Students were encouraged to discuss answers with peers before providing individual answers to clicker questions (Mazur, 1997; Smith et al., 2011), which focused on understanding basic knowledge and concepts. Think–pair–shares were targeted toward larger problems or ideas that students discussed in groups (Armbruster et al., 2009). Instructors held students accountable by randomly selecting groups to share their ideas or by assigning related clicker questions to the entire class. Finally, several instructors included longer group projects during class time (e.g., guided, stepwise problems and activities; Tanner et al., 2003; Allen and Tanner, 2005; Gaudet et al., 2010). Such activities were written up and turned in for a collective group grade. Not represented in the data shown are online preparatory activities, designed to introduce students to the basic terms before class sessions (Moravec et al., 2010). Reform sections used the same exam format and schedule as the traditional lecture section.

Study Design

We tracked course transformation as instructors incrementally incorporated active-learning strategies into their classrooms. Instructors used student performance data (responses on exams and preinstruction and postinstruction assessments, described below) from each semester to evaluate learning objectives, activities, and overall course structure. This continuous feedback was critical to the long-term success of course alterations, as it ensured that instructors invested their time and effort efficiently. During the Fall of 2012, the traditional section and reform section were observed at least once a week by the lead researcher, although the amount of class time used for active-learning strategies (e.g., clicker questions and think–pair–shares) was not systematically recorded. During the Fall 2012 semester, it became apparent that data regarding the amount and type of active learning would be of interest to the FLC, since self-reported use of active-learning strategies does not always correlate with actual practices (Ebert-May et al., 2011). Beginning in the Spring of 2013, the use of active learning in each section was observed (E.R.E. and undergraduate learning assistants), recorded as a percentage of class time on task, and coded by topic area and type of activity (Supplemental Material). More than 75% of class sessions in each section were monitored for the use of active learning.

We used a comparison group design to assess the impact of course changes. A section taught in the traditional lecture format by a highly experienced faculty member during Fall 2012 served as our baseline. Student learning and attitudes toward learning biology in reformed sections were compared with those of students in the baseline section. The online section, taught in Spring 2013, served as another comparison group. The traditional format of the course as offered in Fall 2012 served as a template for the syllabus of the online version. Students enrolled in the Spring 2013 online section participated in some activities designed to mimic active learning within the classroom but with the restrictions of working individually and without immediate feedback. For example, students created a model of DNA using household objects and wrote a brief reflection of the activity and what they learned from the process. Course points for these activities accounted for 6.7% of the total points over the course of the semester. All other sections of Principles of Biology II from Fall 2012 through Fall 2013 were classroom-based and incorporated varying amounts of active learning (reform sections). We also examined the extent to which student learning depended on the amount of time an instructor used active learning.

Assessment of Student Learning and Attitudes

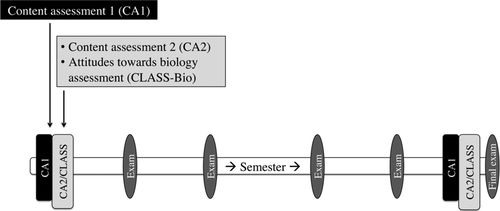

Student learning was assessed using preinstruction and postinstruction diagnostic instruments during the second week and second-to-last week of a 15-wk semester, respectively. The timing of these assessments is shown in Figure 1. The concepts chosen for evaluation included macromolecular structure and function, basic cellular biology, and energetics (Supplemental Material). Because no existing complete concept inventories aligned with the learning objectives of the course, we chose individual questions from the Biological Concepts Instrument (BCI; Klymkowsky and Garvin-Doxas, 2008; Klymkowsky et al., 2010) and the Introductory Molecular and Cell Biology Assessment (IMCA; Shi et al., 2010). The assessment was split into content assessment 1 (CA1) and content assessment 2 (CA2), both of which are documented in the Supplemental Material. CA1 was administered in class using clickers (Turning Technologies), and CA2 was administered online via the Blackboard Learning Management System.

Figure 1. Timing of course assessments during one semester of Principles of Biology II. Black and light gray time blocks represent timing of preinstruction and postinstruction content and student attitude assessments. CA1 was administered in class via clicker technology; CA2 and student attitude assessments (CLASS-Bio) were administered online via Blackboard. Dark gray ovals represent course exams, with the rightmost dark gray oval representing the final exam. All sections included five to six multiple-choice midterm exams plus a comprehensive final exam.

An experienced instructor of Principles of Biology II (C.R.C.) and E.R.E. selected questions for CA1 and CA2 based on 1) alignment with course objectives; 2) emphasis typically placed on different concepts in Principles of Biology II in terms of both time and exam question coverage; and 3) concepts with which Principles of Biology II students historically struggled, based on exam performance and one-on-one student conversations. CA1 included questions judged by instructors to be more straightforward for Principles of Biology II students. Administering these questions in class was intended to limit the opportunity to use external sources to search for the correct answers. CA2 questions were administered online to allow students to work at their own pace to answer questions that were judged as more challenging (Supplemental Material) with minimal loss of class time. The content questions were rated using Bloom’s taxonomy by authors E.R.E., C.R.C., and E.J.G. with high interrater reliability (rating alignment for two out of three raters on 95% of questions, Kendall’s coefficient of concordance = 0.73, Supplemental Material; Crowe et al., 2008). Although CA2 contained more higher-level questions (application level) than CA1, the overall difference was not significant (Wilcoxon signed-rank test, p = 0.21). Both sets of questions covered the same learning objectives. The questions from CA1 and CA2 were also sorted into topical groups: biological membranes, energetics, and genetics (described in the Supplemental Material).

Student learning was assessed by per-student normalized score change (c) to correct for variation in preassessment scores. For cases in which the postinstruction score was equal to or greater than the preinstruction score, c = (postscore – prescore)/(highest possible score – prescore). For cases in which the postscore was lower than the prescore, c = (postscore – prescore)/prescore (Bao, 2006; Marx and Cummings, 2007). Students with a score change of zero were included in the analysis. Both pre- and postscores are represented as a fraction, where 1 indicates that 100% of correct answers were chosen. For some analyses, an overall content score change was calculated based on performance on all content questions combined (fraction correct of all CA1 and CA2 questions). Positive normalized score change on content assessments is referred to as “learning gains” throughout the manuscript. Students earned a small number of course points for completing each content survey. Only those students who completed all content surveys (preinstruction CA1 and CA2 and postinstruction CA1 and CA2) were included in the analysis. Twenty-five percent of students were excluded from analysis, because they did not provide informed consent. Of the students who did provide informed consent, 50% did not complete all content assessments and were excluded from analysis. Overall, 37.5% of students were included in the analysis.
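The two-case definition of the normalized score change can be sketched in a few lines of Python. This is an illustrative sketch rather than the analysis code used in the study: the function name `normalized_change` is hypothetical, scores are assumed to be fractions between 0 and 1, and returning `None` for a student who starts and ends at the maximum score (where the ratio is undefined) is an assumption, not a rule stated in the text.

```python
from typing import Optional


def normalized_change(pre: float, post: float, max_score: float = 1.0) -> Optional[float]:
    """Per-student normalized score change c, following the two-case definition above.

    pre and post are fractions correct (0 to 1 by default).
    """
    if post >= pre:
        if pre == max_score:
            # Assumption: no room to improve, so c is undefined for this student.
            return None
        # Gain as a fraction of the maximum possible improvement.
        return (post - pre) / (max_score - pre)
    # Loss as a fraction of the maximum possible decline.
    return (post - pre) / pre


# Hypothetical students: one improves from 0.50 to 0.75, one declines from 0.50 to 0.25.
gain = normalized_change(0.5, 0.75)
loss = normalized_change(0.5, 0.25)
unchanged = normalized_change(0.5, 0.5)
```

Under this definition, c always falls between −1 and +1, and a student whose score does not change receives c = 0 and stays in the data set.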

We also asked students about their perceptions and attitudes toward biology at the beginning and end of the semester, using the Colorado Learning Attitudes about Science Survey, Biology (CLASS-Bio; Semsar et al., 2011). The CLASS-Bio was administered online via Blackboard. This instrument assesses appreciation for real-world connections, recognition of conceptual connections, effort, and self-reported skill in problem solving and reasoning. We coded answers on a five-point Likert-type scale and report the percentage of “agree” or “strongly agree” responses (Perkins et al., 2004; Semsar et al., 2011). Students earned a small number of course points for completing the CLASS-Bio question sets. The CLASS-Bio instrument includes a question that allows for exclusion of responses from students who did not read the questions carefully. For analysis of the CLASS-Bio results, we included only those students who completed both the preinstruction and postinstruction CLASS-Bio surveys and all content question sets. Of the students who provided informed consent and completed the content assessments, 76.7% also completed the CLASS-Bio surveys and correctly answered the screening question that selects for students who read the questions carefully.
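The percentage-agreement score described above can be sketched as follows. The numeric coding (1 = strongly disagree through 5 = strongly agree) and the function name `percent_agree` are assumptions made for illustration; the text states only that a five-point Likert-type scale was used.

```python
from typing import Sequence


def percent_agree(responses: Sequence[int]) -> float:
    """Percentage of responses coded as "agree" (4) or "strongly agree" (5).

    Assumed coding: 1 = strongly disagree ... 5 = strongly agree.
    """
    if not responses:
        raise ValueError("at least one response is required")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("responses must be coded 1 through 5")
    agree = sum(1 for r in responses if r >= 4)
    return 100.0 * agree / len(responses)


# Hypothetical student answering four statements.
score = percent_agree([5, 4, 3, 2])
```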

FLC Member Survey

At the end of the study, FLC participants were asked via an online survey to reflect on how the student learning and attitudes data influenced their perception of the course and their teaching. The survey also asked FLC members to reflect on their experience with the study, on their experience teaching the course, their perspectives on active learning, and how they planned to teach the course in the future. The survey included both Likert-scale and open-response questions (Supplemental Material). Answers to open-response questions were open coded (Strauss and Corbin, 1990; Armbruster et al., 2009). Survey results are presented as the ratio of FLC members commenting on a particular aspect and/or selecting particular Likert-scale responses.

Statistical Methods

The differences in learning gains between sections were analyzed by comparing the normalized score change via one-way analysis of variance (ANOVA) with a Scheffé post hoc comparison, which is more stringent than the Tukey-Kramer adjustment and is better suited to uneven sample sizes (Lewis-Beck et al., 2004). Differences in CLASS-Bio scores between different sections and across different majors were compared by one-way ANOVA. We examined the correlations between learning gains, CLASS-Bio scores, and student exam scores via linear regression. We also performed cluster analyses grouping students’ CLASS-Bio responses using Ward’s minimum variance method (agglomerative hierarchical, squared Euclidean distance). The number of clusters was determined by scree plot (Ward, 1963; Morey et al., 2010). These clusters were used to assign students to a high- or low-favorability group based on both preinstruction and postinstruction CLASS-Bio scores. All analyses were performed with IBM SPSS statistical software. Differences were considered significant if p ≤ 0.05.
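For readers unfamiliar with the procedure, the one-way ANOVA F statistic and the Scheffé statistic for a single pairwise comparison can be sketched in plain Python. This is an illustrative sketch under stated assumptions, not the SPSS procedure used in the study: the function names are hypothetical, the example data are invented, and the sketch does not compute p values. The Scheffé statistic would be compared against the critical value of F with (k − 1, N − k) degrees of freedom.

```python
from statistics import mean
from typing import List, Sequence, Tuple


def one_way_anova(groups: Sequence[Sequence[float]]) -> Tuple[float, int, int, float]:
    """Return (F, df_between, df_within, mean_square_within) for k groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = k - 1
    df_within = n_total - k
    ms_within = ss_within / df_within
    f_stat = (ss_between / df_between) / ms_within
    return f_stat, df_between, df_within, ms_within


def scheffe_pairwise(groups: Sequence[Sequence[float]], i: int, j: int) -> float:
    """Scheffe statistic for the contrast between groups i and j."""
    _, df_between, _, ms_within = one_way_anova(groups)
    diff = mean(groups[i]) - mean(groups[j])
    scale = ms_within * (1 / len(groups[i]) + 1 / len(groups[j]))
    # Dividing by (k - 1) is what makes Scheffe more conservative than a plain t test.
    return diff ** 2 / (scale * df_between)


# Invented normalized score changes for three hypothetical sections.
sections: List[List[float]] = [[1, 2, 3], [2, 3, 4], [3, 4, 5]]
f_stat, df_b, df_w, _ = one_way_anova(sections)
f_scheffe = scheffe_pairwise(sections, 0, 2)
```

Because the statistic uses each group's own size, the same code applies to unevenly sized sections such as those in this study.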

RESULTS

Data on student learning and perceptions of biology were collected as part of an ongoing reform effort driven by the FLC. After each semester and after completion of the project, findings were reported by the postdoctoral scholar to the FLC in the form of summary documents and presentations. Sharing these data was intended to provide faculty with more detailed feedback about where students struggled, inform course revisions by helping faculty understand which interventions had been successful, and improve the quality and relevance of the assessment instruments for use in our introductory biology sequence. During and after the data presentations, faculty members 1) discussed the validity of specific questions; 2) compared results with their own impressions of student learning based on conversations with students and performance on exams; 3) discussed which results they found most meaningful, compelling, and interesting; 4) identified areas for improvement; and 5) suggested future research questions of interest.

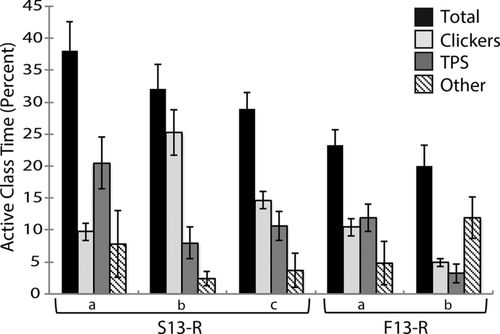

Use of Active-Learning Strategies

Instructors in the FLC used a variety of active-learning strategies in their classrooms (Figure 2). Activities in reform sections ranged from clicker questions to group problem solving; the amount and type of active learning varied by instructor and topic. In the reform sections, the use of active learning ranged from 20.0 ± 3.2% to 38.1 ± 4.5% of total class time (mean ± SEM; Fall 2013 section b, and Spring 2013 section a, respectively). In contrast, the traditional lecture section taught in Fall 2012 used clicker questions for 2–4% of class time (about once per week). Remaining class time was dedicated mostly to lecture.

Figure 2. Use of active learning in reform sections. Bars represent average percentage of class time (± SEM) used for active-learning strategies in reform sections from the Spring of 2013 (S13-R) and the Fall of 2013 (F13-R). The three reform sections in Spring 2013 are labeled “a,” “b,” and “c.” The Fall 2013 reformed sections are labeled “a” and “b.” Different sections were taught by unique instructor teams (Table 1). Percentages were calculated as the percent of time dedicated to active-learning strategies per day, averaged across all class sessions for each section. Percentages are shown for total active-learning time (sum of all activity types, black bars), clicker questions (light gray bars), think–pair–shares (TPS; dark gray bars), and other (patterned bars). Other includes longer group problem-solving activities. Data represent values from more than 75% of all class sessions.
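The per-section summary in Figure 2 (percentage of each class session spent on active learning, averaged across sessions, with SEM) can be sketched as follows. The input format (minutes of active learning and total minutes for each observed session) and the function name are assumptions for illustration; the observation protocol itself is described in the Supplemental Material.

```python
from math import sqrt
from statistics import mean, stdev
from typing import Sequence, Tuple


def active_learning_summary(sessions: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """Return (mean percent, SEM) of class time spent on active learning.

    sessions: (active_minutes, total_minutes) for each observed class session.
    At least two sessions are needed to estimate the SEM.
    """
    # Percentage is computed per session first, then averaged across sessions.
    percents = [100.0 * active / total for active, total in sessions]
    sem = stdev(percents) / sqrt(len(percents))
    return mean(percents), sem


# Three hypothetical 50-min sessions with 10, 20, and 15 min of active learning.
avg, sem = active_learning_summary([(10, 50), (20, 50), (15, 50)])
```

Averaging per-session percentages, rather than dividing total active minutes by total class minutes, gives each session equal weight regardless of its length.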

Student Learning

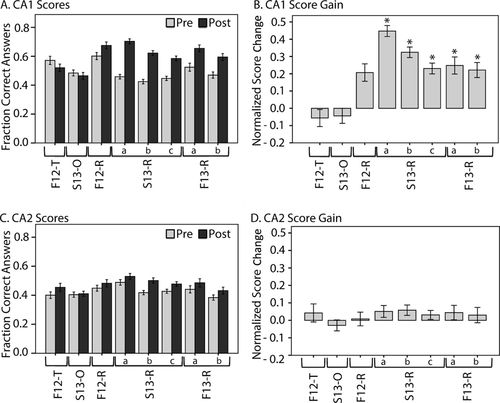

Preinstruction assessments indicated that students entered Principles of Biology II with different levels of existing knowledge. Data on student responses for CA1 and CA2 are represented as mean ± SEM throughout. Preinstruction scores on CA1 and CA2 were variable between sections: section averages on CA1 ranged from 0.42 ± 0.02 to 0.60 ± 0.02; averages on CA2 ranged from 0.38 ± 0.02 to 0.49 ± 0.02 (Figure 3 and Table 2). The range of preinstruction scores likely reflected differences in student preparation and background. Between sections, only the CA1 preinstruction scores in Spring 2013 sections b and c were significantly different compared with the traditional section (one-way ANOVA, F(7, 670) = 8.6, p < 0.0005, Scheffé post hoc comparison, p < 0.05; Table 2). Student preinstruction scores on CA2 were significantly different between sections (one-way ANOVA, F(7, 670) = 3.2, p = 0.003), but pairwise comparisons indicated that none of the reform sections differed significantly from the traditional section (Scheffé post hoc comparison, all p ≥ 0.275; Table 2).

Figure 3. Student performance and score gains on content questions. (A and C) Bars represent average student scores (± SEM) on CA1 (A) and CA2 (C), preinstruction (light gray) and postinstruction (dark gray). (B and D) Bars represent normalized score change for CA1 (B) and CA2 (D). CA1 and CA2 scores range from 0 to 1 (1 representing 100% correct). Score changes range from −1 to +1. F12-T, traditional Fall 2012 lecture; S13-O, Spring 2013 online section. The traditional section served as a baseline comparison group. F12-R, S13-R, and F13-R represent reform sections from Fall 2012, Spring 2013, and Fall 2013, respectively. The three reform sections in Spring 2013 are labeled “a,” “b,” and “c.” The Fall 2013 reformed sections are labeled “a” and “b.” With the exception of the first reform section in Fall 2012, the CA1 score gains in all reform sections are significantly different from CA1 score gains in the traditional lecture section. Asterisks indicate significant differences compared with the traditional lecture section, F12-T (one-way ANOVA, Scheffé post hoc comparison, all p < 0.02).

| Semester | Section | n | CA1 pre (± SEM) | p Value | CA2 pre (± SEM) | p Value |
|---|---|---|---|---|---|---|
| Fall 2012 traditional | — | 47 | 0.57 ± 0.03 | — | 0.40 ± 0.02 | — |
| Spring 2013 online | — | 88 | 0.48 ± 0.02 | 0.423 | 0.40 ± 0.02 | 1.000 |
| Fall 2012 reform | — | 62 | 0.60 ± 0.02 | 0.998 | 0.45 ± 0.02 | 0.947 |
| Spring 2013 reform | a | 96 | 0.46 ± 0.02 | 0.096 | 0.49 ± 0.02 | 0.275 |
| Spring 2013 reform | b | 114 | 0.42 ± 0.02 | 0.003 | 0.42 ± 0.01 | 1.000 |
| Spring 2013 reform | c | 140 | 0.45 ± 0.01 | 0.021 | 0.43 ± 0.01 | 0.996 |
| Fall 2013 reform | a | 58 | 0.52 ± 0.03 | 0.971 | 0.44 ± 0.02 | 0.982 |
| Fall 2013 reform | b | 72 | 0.47 ± 0.02 | 0.257 | 0.38 ± 0.02 | 1.000 |

Student learning gains were assessed by comparing normalized score change, representing the change in individual student performance before and after instruction, between sections. There was no correlation between preinstruction scores and normalized score change, indicating that the normalized score change is independent of preinstruction scores and controls for variation in preinstruction assessment scores (Supplemental Figure 1; Marx and Cummings, 2007). On CA1, students in all reform sections except the Fall 2012 reform section showed significantly higher learning gains compared with the traditional section (one-way ANOVA, F(7, 669) = 17.5, p < 0.001, Scheffé post hoc comparison, p < 0.05; Figure 3B and Table 3). Learning gains on CA1 in reform sections ranged from 0.21 ± 0.05 to 0.45 ± 0.03, while learning gains in the traditional section were negligible at −0.06 ± 0.05 (Table 3). Between the two reform sections taught by the same instructor team (the Fall 2012 reform section and Fall 2013 reform section a), there were no significant differences in learning gains on CA1 (0.21 ± 0.05 vs. 0.25 ± 0.05, Scheffé post hoc comparison, p = 1). In contrast to the learning gains on CA1, there were no significant differences in gains on CA2 between the reform and traditional sections (one-way ANOVA, F(7, 670) = 0.6, p = 0.69; Figure 3D and Table 3).

| Semester | Section | n | CA1 (± SEM) | p Value | CA2 (± SEM) | p Value |

|---|---|---|---|---|---|---|

| Fall 2012 traditional | — | 47 | −0.06 ± 0.05 | — | 0.04 ± 0.05 | — |

| Spring 2013 online | — | 88 | −0.04 ± 0.04 | 1.000 | −0.03 ± 0.03 | 0.982 |

| Fall 2012 reform | — | 62 | 0.21 ± 0.05 | 0.052 | 0.01 ± 0.04 | 1.000 |

| Spring 2013 reform | a | 96 | 0.45 ± 0.03 | 0.000 | 0.05 ± 0.03 | 1.000 |

| Spring 2013 reform | b | 114 | 0.32 ± 0.03 | 0.000 | 0.06 ± 0.03 | 1.000 |

| Spring 2013 reform | c | 140 | 0.23 ± 0.03 | 0.003 | 0.03 ± 0.03 | 1.000 |

| Fall 2013 reform | a | 58 | 0.25 ± 0.05 | 0.011 | 0.04 ± 0.04 | 1.000 |

| Fall 2013 reform | b | 72 | 0.22 ± 0.04 | 0.020 | 0.03 ± 0.04 | 1.000 |
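To illustrate how a one-way ANOVA statistic relates to section summaries, the following sketch approximates F for CA1 from the n, mean, and SEM values in Table 3. It assumes each section's variance can be recovered as SEM² × n. Because the tabulated values are rounded, the result only approximates the reported statistic, and this is not the authors' calculation.

```python
# (n, mean, SEM) for CA1 normalized score change, copied from Table 3.
ca1_sections = [
    (47, -0.06, 0.05),   # Fall 2012 traditional
    (88, -0.04, 0.04),   # Spring 2013 online
    (62, 0.21, 0.05),    # Fall 2012 reform
    (96, 0.45, 0.03),    # Spring 2013 reform a
    (114, 0.32, 0.03),   # Spring 2013 reform b
    (140, 0.23, 0.03),   # Spring 2013 reform c
    (58, 0.25, 0.05),    # Fall 2013 reform a
    (72, 0.22, 0.04),    # Fall 2013 reform b
]


def anova_from_summary(groups):
    """One-way ANOVA F statistic from per-group (n, mean, SEM) summaries."""
    total_n = sum(n for n, _, _ in groups)
    grand_mean = sum(n * m for n, m, _ in groups) / total_n
    # Between-group sum of squares: section means around the grand mean.
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    # Within-group sum of squares: variance = SEM^2 * n, so SS = (n - 1) * n * SEM^2.
    ss_within = sum((n - 1) * n * sem ** 2 for n, _, sem in groups)
    df_between = len(groups) - 1
    df_within = total_n - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


f_stat, df_between, df_within = anova_from_summary(ca1_sections)
print(f"F({df_between}, {df_within}) = {f_stat:.1f}")
```

With the rounded table values, the degrees of freedom come out as (7, 669), matching the reported CA1 test, and F is of similar magnitude to the reported 17.5.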

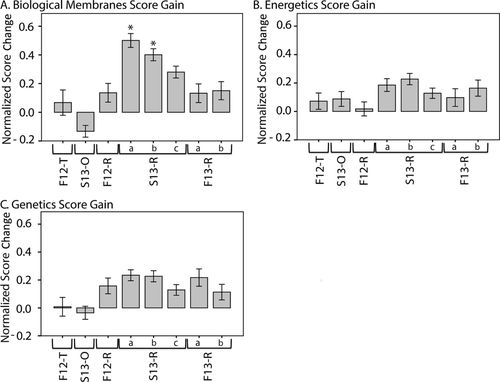

Student performance within particular topic areas (biological membranes, energetics, and genetics) was evaluated to identify the concepts with which students struggled and to help focus further course reform efforts (Supplemental Material). We observed variation in student performance on preinstruction questions about biological membranes and energetics (Supplemental Figure 2, A and B). The highest and lowest average biological membranes preinstruction scores for different sections and semesters were 0.65 ± 0.03 and 0.45 ± 0.02, respectively. Within the energetics questions subset, the highest and lowest average scores were 0.60 ± 0.02 and 0.42 ± 0.03, respectively. In contrast, preinstruction scores on questions addressing genetics concepts tended to be relatively even across sections (Supplemental Figure 2C), with high and low average scores of 0.46 ± 0.03 and 0.40 ± 0.02, respectively.

Student learning gains were greatest within the subject of biological membranes. Gains in two reform sections (Spring 2013 sections a and b) were significantly greater than those in the traditional section, with normalized score changes of 0.50 ± 0.05 (p = 0.001) and 0.40 ± 0.04 (p = 0.029), respectively, compared with the traditional section with a normalized score change of 0.07 ± 0.09 (one-way ANOVA, F(7, 670) = 15.9, p < 0.001, Scheffé post hoc comparison, p < 0.05). This topic also showed the greatest variation between reform sections (Figure 4A). Within the genetics questions subset, the only significant pairwise differences occurred between the online section and Spring 2013 reform sections a and b (one-way ANOVA, F(7, 670) = 15.9, p < 0.001, Scheffé post hoc comparison, p < 0.05). Questions related to energetics saw the least improvement over the traditional section, with a maximum reform section score change of 0.23 ± 0.04 compared with 0.07 ± 0.06 in the traditional section (Figure 4B, one-way ANOVA, F(7, 670) = 1.9, p = 0.068). We note that the postinstruction scores of the reform sections on biological membranes and energetics questions were similar to each other (Supplemental Figure 2). Increased discernment between different levels of students may require a different set of assessment questions that more accurately reflect the range of content knowledge expected from the course.

Figure 4. Content learning by topic and course section. Bars represent normalized score change (± SEM) on biological membranes (A), energetics (B), and genetics (C) questions. F12-T, traditional Fall 2012 lecture; S13-O, Spring 2013 online section; F12-R, S13-R, and F13-R represent reform sections from Fall 2012, Spring 2013, and Fall 2013, respectively. The three reform sections in Spring 2013 are labeled “a,” “b,” and “c.” The Fall 2013 reformed sections are labeled “a” and “b.” Asterisks indicate significant differences compared with the traditional lecture section, F12-T (one-way ANOVA, Scheffé post hoc comparison, all p < 0.03).

To determine whether student major partially accounted for the different content scores among reform sections, we disaggregated the assessment results by major. The distribution of enrolled majors differed across semesters: biology, genetics, and microbiology majors (grouped together) made up a greater percentage of students in Spring 2013 (23.5%) than in Fall 2013 (10.1%; Table 1). We observed no significant differences among majors in content assessment preinstruction scores, postinstruction scores, or normalized score change (unpublished data).

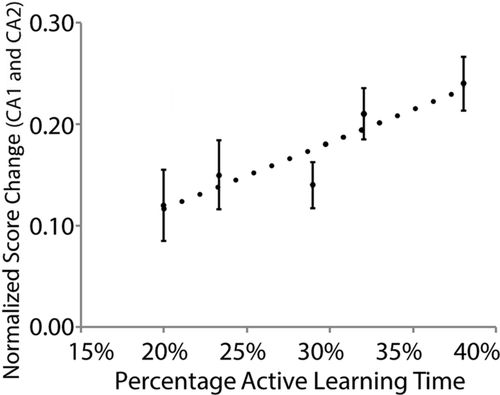

As shown in Figure 5 and Table 4, increased time spent on active learning was associated with increased learning gains. Different sections within the same semester incorporated varying amounts and types of student-centered learning activities. The highest percentage of active class time (Spring 2013 section a, 38%) was associated with the highest recorded overall normalized score change on CA1 (0.45 ± 0.03; Table 4). Student learning as measured by CA1 correlated with the amount of student-centered learning activities in the section. Pairwise comparisons using Scheffé post hoc analysis indicated that students in the section with the highest percentage of student-centered learning activities demonstrated a significantly higher score change than other reform sections (one-way ANOVA, F(4, 476) = 6.9, p < 0.0001, Scheffé post hoc comparison, p < 0.05), with the exception of the Spring 2013 section b (Table 4). Although the greatest differences occurred within CA1, the trend holds when the use of active learning is correlated with normalized score change on all content questions from both CA1 and CA2 (Figure 5).

Figure 5. Student learning gains are positively correlated with the amount of class time dedicated to active learning. Normalized score change on all content questions (CA1 and CA2) by the percentage of class time dedicated to active learning. Data are from Spring 2013 and Fall 2013. The dotted line represents the linear regression line (y = 0.12x − 0.043; R² = 0.78; p = 0.046).

| Percentage active-learning time | n | CA1 score change | SEM | p Value | CA2 score change | SEM | p Value |

|---|---|---|---|---|---|---|---|

| 20.0 | 72 | 0.22 | 0.04 | 0.002 | 0.04 | 0.04 | 0.997 |

| 23.3 | 58 | 0.25 | 0.05 | 0.020 | 0.04 | 0.04 | 1.000 |

| 29.0 | 140 | 0.23 | 0.03 | 0.000 | 0.03 | 0.03 | 0.995 |

| 32.0 | 114 | 0.32 | 0.03 | 0.174 | 0.06 | 0.03 | 1.000 |

| 38.1 | 96 | 0.45 | 0.03 | — | 0.05 | 0.03 | — |
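The relationship between active-learning time and learning gains can be explored with an ordinary least-squares fit. The sketch below uses the CA1 score changes from Table 4 as inputs, with active-learning time in percentage points; the helper name `least_squares` is illustrative, and the code is a generic fit rather than the authors' analysis.

```python
from math import sqrt

# Percentage of class time in active learning and CA1 normalized score
# change for the five Spring 2013 and Fall 2013 reform sections (Table 4).
active_pct = [20.0, 23.3, 29.0, 32.0, 38.1]
ca1_change = [0.22, 0.25, 0.23, 0.32, 0.45]


def least_squares(x, y):
    """Ordinary least-squares slope, intercept, and R^2 for paired data."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r_squared = sxy ** 2 / (sxx * syy)
    return slope, intercept, r_squared


slope, intercept, r_squared = least_squares(active_pct, ca1_change)
# t statistic for testing slope = 0, with n - 2 degrees of freedom.
t_stat = sqrt(r_squared * (len(active_pct) - 2) / (1 - r_squared))
```

With these inputs the fit gives a slope of about 0.012 per percentage point, an intercept of about −0.043, and R² of about 0.78. The numerical slope depends on the units chosen for the x-axis; expressing active-learning time in tens of percent would scale it to about 0.12.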

Student Attitudes toward Biology

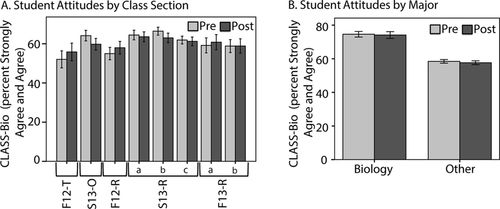

Preinstruction and postinstruction responses on the CLASS-Bio instrument were similar, indicating that student attitudes toward biology did not shift following instruction (Figure 6). Preinstruction CLASS-Bio scores were significantly different between sections (one-way ANOVA, F(7, 512) = 17.5, p = 0.011), although there were no significant pairwise differences between sections (Scheffé post hoc, all p > 0.17). Postinstruction scores and score shifts were not significantly different between sections (one-way ANOVA, F(7, 512) = 0.6 and 1.9, p = 0.722 and 0.066, respectively). Across all students, the average preinstruction and postinstruction CLASS-Bio scores were 61.6% ± 1.0 and 60.9% ± 1.0, respectively. The greatest CLASS-Bio score shift was seen in the traditional section, with an average score change of 3.8% ± 2.4; note that this section started with the lowest preinstruction scores. Overall, no gains were observed within CLASS-Bio question subsets, such as personal interest and conceptual learning (unpublished data; Semsar et al., 2011). The preinstruction scores were higher for biology majors (biology, genetics, and microbiology, 75% favorable) compared with other groups of declared majors (60% favorable); however, neither group showed any shift after instruction (Figure 6B).

Figure 6. Student attitudes toward learning biology. Bars represent average student CLASS-Bio scores (± SEM) before (light gray) and after (dark gray) instruction comparing (A) sections and (B) declared majors. F12-T, traditional Fall 2012 lecture; S13-O, Spring 2013 online section; F12-R, S13-R, and F13-R represent reform sections from Fall 2012, Spring 2013, and Fall 2013, respectively. The three reform sections in Spring 2013 are labeled “a,” “b,” and “c.” The Fall 2013 reformed sections are labeled “a” and “b.” There are no significant pairwise differences in preinstruction or postinstruction scores between specific sections (one-way ANOVA, Scheffé post hoc comparison, all p > 0.17). The “biology” major bars encompass biology, genetics, and microbiology majors. Preinstruction and postinstruction CLASS-Bio scores are not significantly different within any section or major.

Relationship between Student Learning and Attitudes

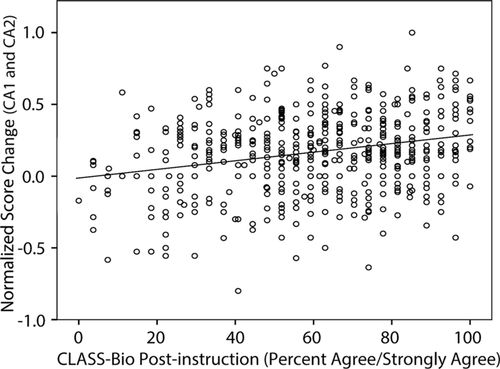

We investigated the interrelatedness of the two goals of the FLC (improving both understanding and appreciation of biology) by examining the correlation between learning gains and CLASS-Bio scores. On the basis of previous studies (Semsar et al., 2011), we expected that higher learning gains would correlate with positive perception of learning biology. Student scores on the CLASS-Bio postinstruction assessment correlate weakly but significantly with student learning (R² = 0.058, p = 0.0001), as measured by normalized score change on all questions from CA1 and CA2 (Figure 7).

Figure 7. Normalized score change on all content questions is positively correlated with student attitudes toward learning biology (CLASS-Bio postinstruction scores). The solid line shows the least-squares regression (y = 0.003x − 0.013; R² = 0.058; p = 0.0001).

Cluster analysis revealed a small segment of the student population that showed shifts in CLASS-Bio scores. Cluster analysis (Ward’s minimum variance method) on CLASS-Bio preinstruction and postinstruction responses grouped students into two preinstruction CLASS-Bio groups and two postinstruction CLASS-Bio groups. We categorized these groups as high-scoring clusters and low-scoring clusters (preinstruction averages of 47% ± 1.1 for “low” cluster, 78% ± 0.9 for “high” cluster; postinstruction averages of 43% ± 1.1 for “low” cluster, 80% ± 0.8 for “high” cluster). Most of the students stayed within their preinstruction cluster; however, 12% of students shifted from the high-scoring cluster to the low-scoring cluster. A comparably sized group shifted from the low-scoring cluster into the high-scoring cluster. Students were also grouped based on how their attitudes changed over time: low to low, low to high, high to high, and high to low (Table 5).

| Preinstruction cluster | Postinstruction: High | Postinstruction: Low |

|---|---|---|

| High | 33.4% | 12.2% |

| Low | 12.4% | 42.0% |

Among these four groups of students, there were differences in learning gains on content questions from CA1 and CA2 (Table 6). Students who stayed highly positive about biology had approximately twice the learning gains of students who started and remained more negative about biology (ANOVA, F(3, 448) = 4.98, p = 0.002). Students who shifted into the more positive attitude group after instruction performed as well on the content assessments as their peers who started and stayed in the high CLASS-Bio scoring group. Students whose attitudes toward biology dropped showed low content gains comparable to those of their peers who started and remained in the low CLASS-Bio scoring group.

| Pre to post cluster | n | Mean normalized change (all content) | SEM | p Value |

|---|---|---|---|---|

| Low to low | 190 | 0.12 | 0.02 | — |

| Low to high | 56 | 0.18 | 0.04 | 0.546 |

| High to low | 55 | 0.10 | 0.04 | 0.987 |

| High to high | 151 | 0.22 | 0.02 | 0.007 |
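As a consistency check, the cluster percentages in Table 5 can be recomputed from the group sizes in Table 6. This short sketch assumes the two tables describe the same set of students; the dictionary layout is illustrative.

```python
# Students per pre-to-post CLASS-Bio cluster path, copied from Table 6.
cluster_counts = {
    ("low", "low"): 190,
    ("low", "high"): 56,
    ("high", "low"): 55,
    ("high", "high"): 151,
}

total_students = sum(cluster_counts.values())

# Share of all students on each path, as a percentage rounded to one decimal.
cluster_pct = {
    path: round(100 * count / total_students, 1)
    for path, count in cluster_counts.items()
}

# Residual degrees of freedom for a one-way ANOVA across the four paths.
df_within = total_students - len(cluster_counts)

for (pre, post), pct in cluster_pct.items():
    print(f"{pre} -> {post}: {pct}%")
```

The four group sizes sum to 452 students, which leaves 448 residual degrees of freedom for a four-group comparison, and the recomputed shares agree with the percentages in Table 5.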

The FLC

On a survey conducted at the end of the project, all instructors indicated that the FLC had been helpful or very helpful toward their efforts to plan and teach Principles of Biology II. Faculty indicated value in the sharing of resources and activities, especially those regarding the efficient use of limited time and inspiring classroom innovations. For example, comments included “Often times, I have either borrowed really good activities that other instructors have shared … [or acquired ideas for] activities through our FLC discussions,” and “The time efficiencies we have realized through the FLC have been central to the [successes] of this team of instructors.” In particular, one comment indicated that the faculty member would probably not have tried active learning without the influence of the FLC but would be likely to continue using these strategies in the future. Instructors also highlighted the importance of discussions about how to form groups (e.g., “Our discussion of the advantages and disadvantages of different ways to assign teams has helped me select the one I use in my section of the course.”) and design accurate assessments of student learning (e.g., “Discussion of … how to design proper test questions and assessments were most helpful.”).

During and after the study, FLC members had the opportunity to discuss and interpret the data on student learning and attitudes. Instructor responses to student learning gains varied from discouragement (“Overall, I must admit that this trend isn’t as clear and isn’t as large as I thought/hoped it would be.”) to optimism (“It is clear from the data that active learning is better for student learning than a traditional lecture format.”). Both perspectives were coupled with a desire for further data collection. Suggested interpretations for the difference in CA1 and CA2 among faculty included a discrepancy in complexity and the different modes of administration (online vs. in class). Although instructors were not surprised by the lack of change in CLASS-Bio scores, some were pleased that the scores did not drop: “This is one of my biggest worries—that I am turning students off to science.”

Seven out of eight instructors found the process of data collection and presentation of results valuable and indicated that the findings have influenced how they plan to teach in the future. In particular, five out of eight faculty members reported increased confidence in active learning as an effective approach. Six of the instructors indicated that these data increased their confidence in their own ability to effectively use active-learning strategies. One respondent reported less confidence in ability to use active learning but still indicated a desire to continue using active-learning strategies. This instructor commented on the need for more individualized data about how students in his/her section responded to particular strategies and activities, and he/she was particularly interested in trying activities beyond clicker questions. This suggests that, although the results were not as striking as hoped for, these small gains bolstered the reform efforts and attitudes of the instructors. Indeed, all FLC members reported that they were likely or very likely to continue using active learning in their courses.

DISCUSSION

This study presents the FLC as a potential solution to many of the challenges inherent in incorporating active-learning strategies in large-enrollment introductory courses (Sirum et al., 2009; Addis et al., 2013). Active, student-centered learning strategies are associated with improved student learning and engagement (Smith et al., 2005; Freeman et al., 2007, 2011, 2014; Gaudet et al., 2010; Haak et al., 2011), but reforming large-enrollment, multiple-section introductory courses presents unique challenges. Course reform for such courses requires a balance between section consistency and flexibility to allow instructors to accommodate both their individual styles and the needs of the students in their classes. Developing the most effective approaches for a particular course requires trial and error and instructor experience with the processes, materials, and students (Andrews et al., 2011). An FLC provides a forum for ongoing conversations about the course and allows compromise between section consistency and faculty autonomy while supporting individual instructor development. This study expands on efforts at other institutions to reform large-enrollment biology courses using interactive and engaging classrooms focused on the foundational concepts in the field (Smith et al., 2005; Walker et al., 2008; Stanger-Hall et al., 2010; Ueckert et al., 2011).

At ISU, instructors have used an FLC as a platform for enacting course reform. The FLC provided the space and resources for collective conversations about goals, activities, teaching strategies, assessments, and indicators of success. The members of the FLC aimed to improve student mastery of biological concepts and promote more expert-like perceptions of biology. As a result of discussions within the FLC, all Principles of Biology II sections incorporated student-centered learning, commonly in the form of clicker questions, think–pair–shares, and written group activities as of the Spring of 2013 (Figure 2). All of these approaches are known to improve student learning (Smith et al., 2005, 2011; Caldwell, 2007; Freeman et al., 2007, 2014; Gaudet et al., 2010). Although instructors shared resources and materials, the amount and types of active learning varied between sections, with instructors free to use activities that best matched their pedagogical preferences. The different activities engaged students in a range of cognitive processes. The increase in learning on one content assessment among reform sections, compared with more traditional sections, suggests the conversion to a more student-centered course resulted in some success. Students in reform sections showed larger learning gains on the CA1 content knowledge assessment (Figure 3 and Table 3), consistent with earlier reports of improved student learning with active-learning strategies (Freeman et al., 2014). Similar learning gains were not observed within CA2. Preinstruction scores on CA2 were also lower compared with CA1 scores (Figure 3 and Table 3). These differences in preinstruction scores and normalized score change could reflect either the selection process used to sort questions into CA1 and CA2 or the different modes of administration (in class vs. online).

As there were no significant differences in Bloom’s level between CA1 and CA2 questions (Wilcoxon signed-rank test, p = 0.21, Supplemental Material), the difference in learning gains is likely not attributable to a discrepancy in question complexity. However, CA2 included questions on topics that the course instructors considered more advanced, such as in-depth understanding of promoter function (question I7, Supplemental Material) as opposed to DNA replication (question B4, Supplemental Material). The lack of learning gains on questions deemed more advanced by instructors may be the expected result of a broad survey course. Nonetheless, FLC members indicated that the questions on CA2 were still reasonable for Principles of Biology II. Three faculty members pointed to a need for more interventions that could better prepare students for these potentially more advanced questions.

The discrepancy between learning gains on the two assessments could also be attributed to the different methods of administration. In-class assessments (CA1) may have induced greater motivation to focus and reflect on the questions. There was no incentive for individual students to rush through questions in order to shorten the time spent on the assessment, as the questions were available for a prescribed amount of time. The class environment may also offer fewer distractions, especially among students who are inclined to multitask while filling out surveys at home. Responses to the BCI have been shown to be similar when administered online or on paper (Klymkowsky et al., 2010), while there is some evidence that mode of administration has some effect on student responses to the IMCA instrument (Shi et al., 2010). We expected that the use of clicker technology would provide similar results to in-class, on-paper administration. However, we do not yet have data on how this method affects student responses. Future studies are needed to determine whether differences in normalized score change are related to question content, mode of administration, or both. Thus, future data collection on student learning will include measures to evaluate the validity of these assessments, such as cognitive interviews regarding the questions and analysis of the mode of administration.

Learning gains on CA1 were correlated with the amount of time instructors spent on active learning; students in sections with more active learning demonstrated greater learning gains than their peers in other sections (Table 4). This correlation persists when active-learning time is compared with student performance on all content questions (Figure 5). Interestingly, the learning gains increase linearly with the amount of time spent on active learning. This finding suggests that the types of course changes fostered by the FLC support student learning and that the effects of these activities and strategies may be additive, at least to a point. However, the amount of class time dedicated to active-learning strategies alone does not fully explain the differences in learning gains between sections. Thus, continued course improvement will require identifying which aspects were most effective and directing targeted revisions at those topics with lower learning gains.

To understand what approaches have been most effective, future studies will need to investigate not only the quantity but the quality of activities and how well these activities engage students. Understanding the quality of these activities requires data on the types of questions and student thought processes (Crowe et al., 2008), instructor reflection, the efficacy of different materials, the impact of undergraduate learning assistants on student engagement, and more robust observation protocols for assessing how students interact with course materials (Sawada et al., 2002; Ebert-May et al., 2011; Smith et al., 2013). Of particular interest is whether the structure or implementation of these strategies improves as FLC members continue to share experiences, reflect, and revise materials. Which concepts and cognitive processes do activities focus on, and how is this evolving over time? In terms of student engagement with the material, future work will also include assessment of students’ perceptions of active learning. In Principles of Biology II, student pushback and distrust of the active-learning process, evident through evaluations and student discussions with learning assistants, has been a continuing theme throughout reform. Whether this perception is a barrier to learning is unclear. Efforts are currently underway to understand how our students perceive this classroom style in order to promote motivation and positive attitudes. In these future studies, the focus provided by instructor interests and perceptions of the course will be invaluable.

Although biology, genetics, and microbiology majors were overrepresented in the Spring compared with the Fall semesters, students in these majors did not outperform other students on the content assessments (unpublished data). Although the preinstruction content assessments controlled for the variation in biology background, these assessments did not account for different background knowledge in related fields, nor did they control for student interest or demographics (Carini et al., 2006; Rotgans and Schmidt, 2011).

Faculty plan to use the results of these assessments to modify course design and improve activities based on the concepts with which students struggle. Future development of activities will focus on the areas in which students showed the lowest learning gains, including genetics concepts (Figure 4). Discerning different levels of students may require a different set of assessment questions that more accurately reflect the range of learning outcomes within the course. For example, the current content assessments do not address other content areas covered in Principles of Biology II, such as plant and animal physiology or student lifelong learning and science process skills. Future studies will need to include assessments of multiple types of learning to comprehensively measure the impact of reforms.

As part of the FLC, instructors spent time discussing effective assessment, refining learning objectives, and reflecting on the successes and failures during instruction. Although all instructors were invested in using active learning and believed that active learning could benefit their students, differences in instructor confidence in particular activities could influence student learning as well. Notably, the amount of time spent on active-learning strategies in the Spring of 2013 was greater than that in the Fall of 2013 (Figure 2). However, different instructional teams taught almost all sections involved in this study (Table 1). Repeated observations of the same instructional teams over time will provide a better comparison for the effect of the FLC and allow observation of how changes in use of active learning by the same instructional teams impact student learning.

Student scores on the CLASS-Bio assessments were striking in their persistence, with scores unchanged by instruction (Figure 6). However, a small subset of students shifted to a positive attitude toward biology, while a comparable number shifted to lower scores (Table 5). Although a few instructors were encouraged that average CLASS-Bio scores did not drop after instruction, in contrast to the declines reported in previous studies (Adams et al., 2006; Semsar et al., 2011), most would prefer gains in these assessments. Overall, student scores on the CLASS-Bio were relatively high, especially among biology majors, as expected (Figure 6; Semsar et al., 2011). Achieving more expert-like perceptions of biology for more students may require interventions beyond the active-learning strategies currently used. Data regarding how well activities reflect authentic practices in the field and student responses to these activities will be helpful in the future. Importantly, Principles of Biology II is part of a two-part introductory biology series, and the majority of students enroll in this course after completing the first semester. The downward shift in attitudes toward biology described in previous studies may very well have occurred during the first semester (Adams et al., 2006; Semsar et al., 2011). Student perceptions of biology may be more firmly established by the time students reach Principles of Biology II.

Student attitudes and learning gains were positively correlated, but the effect was small (Figure 7 and Table 6). These results are consistent with the association between CLASS score and learning gains of undergraduate physics students reported by Perkins et al. (2004). The small effect suggests that student perceptions of biology are not entirely predicted by learning gains and performance. These data also indicate that a positive attitude toward biology was not strictly required for student learning. Nonetheless, students who stayed highly positive about biology had approximately twice the learning gains of the group of students who started and remained more negative about biology. It is notable that students who shifted from a lower attitude score to a higher attitude score after instruction performed similarly to students who started and remained positive (Table 6). The question remains as to whether instructors could implement an early intervention to improve student engagement and improve student learning throughout the semester. Additional studies exploring student motivation will inform the design of more effective interventions for introductory biology students.

FLC members found both the FLC and the process of data collection and analysis valuable. All current instructors continue to be active members of the FLC. Confidence in the effectiveness of active learning in general did not increase for all members, as many instructors were convinced of the efficacy of active learning before the formation of the FLC. Even instructors who found these initial results less encouraging still planned to continue using active learning. Instructor comments reflect the importance of iteration: the use of active learning must be informed by data about our students to be most effective. As one instructor commented: “We need information like this to inform our modes of instruction and target areas for improvement.” In general, there is great interest among instructors in gaining more information about student backgrounds and goals and in students’ knowledge retention and skill development: How do our students perform in future life science courses? With these assessment results, the faculty now have a better understanding of what their students know when they join the course and what level of understanding students gain as they work through Principles of Biology II.

Viewed as a whole, the initial reforms of Principles of Biology II appear to be successful in improving student learning on at least one assessment of content learning. Although initial learning gains are modest, they mark a positive first step. Assessment of learning gains has provided valuable insight into the skills and knowledge of our students, which will be used to continue the process of reform as we tailor instruction to best meet students’ needs. These results represent the efforts of faculty members over more than three semesters and strike a balance between section consistency and faculty autonomy by allowing for continued collaboration. Students benefit from such reforms, even in the very early stages of long-term iterative emergent change.

ACKNOWLEDGMENTS

The ongoing efforts in introductory biology are part of a larger transformation at ISU supported by a Howard Hughes Medical Institute (HHMI) Engage to Excel grant. The transformation of introductory biology is a result of the efforts of 10 instructors, science-teaching postdoctoral fellows, graduate teaching assistants, and undergraduate learning assistants at ISU. We thank Thomas Holme, Kimberly Linenberger, and Cynthia Luxford and instructors Philip Becraft, Jeffrey Essner, Stephen H. Howell, Sayali Kukday, Thomas Peterson, Donald S. Sakaguchi, David Vleck, and Yanhai Yin for their invaluable help in designing and implementing this project. The protocol for using students as human subjects was approved by the ISU Institutional Review Board, 12–392. E.J.G. was supported in part by a fellowship from the ISU Office of Biotechnology.