Alternative Realities: Faculty and Student Perceptions of Instructional Practices in Laboratory Courses

Abstract

Curricular reform efforts depend on our ability to determine how courses are taught and how instructional practices affect student outcomes. In this study, we developed a 30-question survey on inquiry-based learning and assessment in undergraduate laboratory courses that was administered to 878 students in 54 courses (41 introductory level and 13 upper level) from 20 institutions (four community colleges, 11 liberal arts colleges, and five universities, of which four were minority-serving institutions). On the basis of an exploratory factor analysis, we defined five constructs: metacognition, feedback and assessment, scientific synthesis, science process skills, and instructor-directed teaching. Using our refined survey of 24 items, we compared student and faculty perceptions of instructional practices both across courses and across instructors. In general, faculty and student perceptions were not significantly related. Although mean perceptions were often similar, faculty perceptions were more variable than those of students, suggesting that faculty may have more nuanced views than students. In addition, student perceptions of some instructional practices were influenced by their previous experience in laboratory courses and their self-efficacy. As student outcomes, such as learning gains, are ultimately most important, future research should examine the degree to which faculty and student perceptions of instructional practices predict student outcomes in different contexts.

INTRODUCTION

Determining faculty instructional practices in a course is important in a variety of contexts, including evaluating courses and instructors (Kendall and Schussler, 2013), assessing the effectiveness of faculty professional development (Ebert-May et al., 2015), and determining how instructional practices influence student learning outcomes (Umbach and Wawrzynski, 2005; Corwin et al., 2015a; Dolan, 2015). A variety of approaches can be used to measure how courses are taught. Faculty may be directly observed by an outside observer and their instructional practices scored using a standard rubric (Sawada et al., 2002; Weiss et al., 2003; Smith et al., 2013). However, direct observation has many limitations. Observers need to be trained on the rubric to ensure interrater reliability. Furthermore, it is often only feasible to make observations a few times during a semester, and these observations may not capture variation in teaching practices across a semester. For example, faculty might be more likely to include inquiry-based teaching practices when they are being observed, as compared with when no outside observer is present. Even if classes are recorded (which is becoming more common), scoring class periods throughout a semester might not be feasible due to time constraints. A second approach for determining faculty instructional practices is to ask faculty themselves about their approaches to teaching. For example, as a part of the Classroom Undergraduate Research Experience (CURE) survey, faculty are asked about the “course elements” in their courses (www.grinnell.edu/academics/areas/psychology/assessments/cure-survey). Yet faculty perceptions of their own teaching practices do not always align with their actual practices (Fang, 1996; Ebert-May et al., 2011; but see Smith et al., 2014; Ebert-May et al., 2015). Finally, students in a course may be asked how a course is taught (e.g., Corwin et al., 2015b). 
Student evaluations of teaching are well known to be biased by a large array of factors (Zambaleta, 2007; Clayson and Haley, 2011), including the type of college instructor (graduate assistant versus faculty; Kendall and Schussler, 2012); the point in time during a semester when evaluations are conducted (Kendall and Schussler, 2013); instructor age and gender (d’Apollonia and Abrami, 1997); and the grades students expect or have already received (d’Apollonia and Abrami, 1997). However, these factors might be less likely to affect student reports of what activities occur in a course, as compared with whether they liked those activities or found them to be effective.

Because all approaches for determining faculty instructional practices have shortcomings or are potentially biased in some way, using multiple approaches to try to triangulate on actual teaching practices might be most appropriate. If multiple approaches are consistent in their assessment of teaching practices, then we are more confident that we have a measure of actual teaching practices. Yet, if different approaches lead to different conclusions about teaching practices, what measure of teaching practices is most accurate (or most relevant) remains an open question. To date, few studies have examined the relationship between student reports, faculty self-reports, and third-party observations of teaching. As noted earlier, faculty self-reports of their teaching practices do not always correspond to their actual practices as scored by an outside observer (Ebert-May et al., 2011), although they do align in some cases (Smith et al., 2014; Ebert-May et al., 2015). In addition, third-party faculty observers and students have been shown to have different perceptions of what content is taught (Hrepic et al., 2007). Furthermore, perspectives on the purpose of courses can differ among faculty instructors, teaching assistants, and students (Volkmann et al., 2005; Melnikova, 2015). However, Marbach-Ad et al. (2014) found that student reports of teaching practices correlated with faculty reports of teaching practices. Yet faculty were reporting on a single course, whereas students were reporting on “their entire undergraduate degree program” (Marbach-Ad et al., 2014). Therefore, the relationship between faculty and student perceptions of teaching practices in the same class remains unclear.

Given the practical difficulties of conducting classroom observations on a large scale and at multiple time points and the absence of an established rubric for classroom observation in laboratory courses, surveys of students and their instructors about instructional practices must be used. However, the alignment between these two measures is unknown. We therefore developed and broadly implemented a survey for faculty and students to determine how laboratory courses are taught and how students in those classes are assessed. Our survey was a part of a 4-year project that began in 2009 aimed at determining how guided-inquiry pedagogy in laboratory courses affects student learning gains. The majority of the faculty participants in the current study participated in a 2½-day faculty professional development workshop on implementing guided-inquiry in biology laboratory courses using the bean beetle, Callosobruchus maculatus, model system (www.beanbeetles.org). During this workshop, faculty discussed the range of pedagogical approaches to laboratory courses and the characteristics of inquiry-based activities in that continuum. Evidence for the efficacy of inquiry-based laboratory teaching and learning was discussed, and a hands-on laboratory session was conducted in which the faculty participants were the students in a guided-inquiry laboratory activity. The student participants in our study were students in laboratory courses taught by these faculty or other faculty at their institutions who also were using the bean beetle model system for guided-inquiry laboratory modules.

At the time we began our study, and currently, no surveys of instructional practices in inquiry-based laboratory courses had been developed and broadly tested in undergraduate biology laboratory courses. The majority of items in the survey were designed to assess the degree to which the different aspects of inquiry-based learning defined in the National Science Education Standards (National Research Council [NRC], 1996) were implemented. The assessment areas included: constructing knowledge by doing, asking scientific questions, planning experiments, collecting data, interpreting evidence to explain results, and justifying and communicating conclusions (NRC, 1996; Trautmann et al., 2002). In addition, we included items to determine whether students generated their own procedures and whether the outcomes of experiments were determined in advance, as these components are essential to some types of inquiry-based learning in laboratory courses (D’Avanzo and McNeal, 1997; Flora and Cooper, 2005; Weaver et al., 2008). Finally, we included items related to assessment for learning (see Davies, 2011).

The five characteristics of scientific inquiry and inquiry-based learning defined in the National Science Education Standards (NRC, 1996) and America’s Lab Report (NRC, 2005) were also used by Campbell et al. (2010) to develop a pair of surveys on inquiry in laboratory courses. However, they tested their surveys with a small sample of secondary school teachers and students, such that the relevance of their survey constructs in an undergraduate context is unclear. More recently, Corwin et al. (2015b) published a student survey of teaching practices for research-based courses. Their survey was designed to determine the degree to which aspects that are unique to course-based research courses (collaboration, discovery and relevance, and iteration; Auchincloss et al., 2014) occur in laboratory courses. As a result, their survey does not encompass the range of inquiry-based teaching and learning in laboratory courses that we wanted to capture. If we are to examine the range of inquiry experiences in biology laboratory courses and how those experiences affect student outcomes, the development and broad testing of a general survey of inquiry-based instructional practices is essential.

METHODS

In collaboration with the external evaluator for our project, we developed a 30-question survey (see the Supplemental Material). Because we were interested in the frequency of particular instructional practices, we used two different four-point Likert scales depending on the item (A = never, B = seldom, C = often, D = all of the time; or A = not at all, B = very little, C = somewhat, D = a great deal; see the Supplemental Material). We used a four-point Likert scale, as it forces respondents to choose a direction rather than remaining neutral (Singleton and Straits, 2009).

The survey was administered to faculty and students at the end of the semester in which the course of interest was taught. Faculty completed the survey online. Students completed the survey either online or on paper as a part of a larger posttest, and in neither case was the survey timed. We collected data from 878 students in 54 courses (41 introductory level and 13 upper level) from 20 institutions (four community colleges, 11 liberal arts colleges, and five universities, of which four were minority-serving institutions). The entire student data set was used for our exploratory factor analysis. To examine the relationship between faculty and student responses to the survey, we used a subset of the data, as some faculty did not respond to the survey. As a result, we used data from 16 institutions (two community colleges, nine liberal arts colleges, and five universities of which two were minority-serving institutions), 39 courses (29 introductory level and 10 upper level), 21 instructors, and 665 students. For the instructors, 67% were female and 14% were underrepresented minorities. For the students who responded to the demographic questions, 63% were female, 21% were underrepresented minorities, and 68% were science, technology, engineering, and mathematics majors. Thirteen percent of the students were in nonmajors courses, 65% were in introductory majors courses, and 22% were in upper-level majors courses.

All responses were coded from 1 to 4, with 1 representing either “never” or “not at all” and 4 representing “all of the time” or “a great deal.” Although some items were worded to indicate a lack of inquiry (e.g., “I worked on projects in which my instructor provided me with experimental design protocols”), we did not reverse code these in our original analysis but rather looked for negative factor loadings in our exploratory factor analysis (see Factor 4 Instructor-directed teaching, Table 1 later in this article).


One approach to analyzing Likert-scale data from a survey is to examine each item in a survey individually. An advantage of this approach is that researchers can conduct a fine-grained analysis of responses to individual items. However, if items are intercorrelated, which may often be the case, responses to individual items are not independent, and multiple items may be measuring the same underlying variable. Furthermore, if a large number of items are used in statistical analyses, the likelihood of type I error (i.e., the likelihood of rejecting the null hypothesis when it is true) increases.
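To make the inflation of type I error concrete, the following minimal sketch computes the probability of at least one false positive across a set of tests, each run at the same α. It assumes the tests are independent, which is a simplification given that survey items are often intercorrelated; the function name is illustrative and is not part of the analysis reported in this study.

```python
def familywise_error_rate(alpha: float, n_tests: int) -> float:
    """Probability of at least one type I error across n_tests tests.

    Assumption: the tests are independent. Correlated items, as in a
    survey, would give a different (typically smaller) value.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    if n_tests < 0:
        raise ValueError("n_tests must be non-negative")
    # P(no false positive in any test) = (1 - alpha) ** n_tests
    return 1.0 - (1.0 - alpha) ** n_tests


# One test versus one test per item on a 30-item survey, both at alpha = 0.05.
single = familywise_error_rate(0.05, 1)
all_items = familywise_error_rate(0.05, 30)
print(f"1 test: {single:.3f}; 30 tests: {all_items:.3f}")
```

Under the independence assumption, testing all 30 items separately at α = 0.05 raises the chance of at least one false positive from 0.05 to roughly 0.79.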

An alternative approach often used in survey and assessment design is exploratory factor analysis (e.g., Corwin et al., 2015b; Hanauer and Hatfull, 2015), in which intercorrelated items are grouped into a smaller number of factors, and these factors are then analyzed statistically. In the case of our survey, analysis of survey items that group together based on similar student responses generates broader representations of students’ views of faculty instructional practices (i.e., “constructs”). To determine the underlying constructs in our survey, we used all 30 items in an exploratory factor analysis. Initially, we used two different criteria to determine how many factors to keep—eigenvalues greater than 1 and a scree plot (Costello and Osborne, 2005). For the scree plot, we included only the number of factors before the one where the curve flattens out (Costello and Osborne, 2005). We then estimated the internal reliability of each construct using Cronbach’s alpha.
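As an illustration of the reliability estimate, the sketch below computes Cronbach’s alpha from a respondents-by-items table of Likert scores using the standard formula, alpha = k/(k − 1) × (1 − Σ item variances / variance of summed scores). The data layout, function name, and example responses are hypothetical and are not taken from the study.

```python
from statistics import variance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items table of scores.

    responses[r][i] is respondent r's score on item i (hypothetical layout).
    Assumes no missing responses.
    """
    n_items = len(responses[0])
    if n_items < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item across respondents.
    item_variances = [
        variance([row[i] for row in responses]) for i in range(n_items)
    ]
    # Sample variance of each respondent's summed score across items.
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("summed scores have zero variance")
    return (n_items / (n_items - 1)) * (1 - sum(item_variances) / total_variance)


# Made-up responses from four students to a two-item construct (coded 1-4).
example = [[1, 2], [2, 1], [3, 4], [4, 3]]
print(round(cronbach_alpha(example), 2))
```

Items that rise and fall together across respondents push alpha toward 1, whereas unrelated items pull it toward 0.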

On the basis of the results of the factor analysis, for each faculty and student survey, we calculated construct scores for each of the constructs by first summing the Likert scores of items in a construct and then dividing by the number of items in that construct. We calculated student construct scores in several ways. As students within a course are not statistically independent of one another, we needed to combine the data for all of the students in a particular course. For each item, we calculated the mean of the responses of students in a particular course and then calculated the construct score based on the item means. In addition to using item means, we also explored using item medians and item modes. However, using medians or modes did not improve the predictive relationship between faculty and student perceptions. Therefore, we report only the results using item means.
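The course-level calculation described above can be sketched as follows: item means are computed across the students in a course, and the construct score is the sum of those item means divided by the number of items. The course identifiers, item names, and responses are invented for illustration, and the sketch assumes every student answered every item.

```python
from collections import defaultdict
from statistics import mean


def course_construct_scores(responses, construct_items):
    """Return {course_id: construct score} from per-student item scores.

    responses: list of (course_id, {item_id: score coded 1-4}), one per student.
    construct_items: ids of the items that make up one construct.
    Assumption: no missing responses.
    """
    by_course = defaultdict(list)
    for course_id, item_scores in responses:
        by_course[course_id].append(item_scores)

    scores = {}
    for course_id, students in by_course.items():
        # Step 1: mean response to each item across students in the course.
        item_means = [mean(s[item] for s in students) for item in construct_items]
        # Step 2: sum the item means and divide by the number of items.
        scores[course_id] = sum(item_means) / len(construct_items)
    return scores


# Hypothetical data: two students in one course, one student in another.
responses = [
    ("BIO101", {"q1": 3, "q2": 4, "q3": 2}),
    ("BIO101", {"q1": 1, "q2": 2, "q3": 4}),
    ("BIO305", {"q1": 4, "q2": 4, "q3": 3}),
]
scores = course_construct_scores(responses, ["q1", "q2", "q3"])
print({course: round(score, 2) for course, score in scores.items()})
```

Replacing `mean` in step 1 with `median` or `mode` from the same module would give the alternative aggregations that were also explored.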

Our sample size for examining the relationship between faculty and student perceptions of instructional practices at the course level might be inflated, as some of the faculty in our sample taught more than one course in our sample. To eliminate that risk, we also calculated student construct scores based on mean response of students taught by a particular faculty member. The results of all analyses are presented at the course level and instructor level. Faculty and student construct scores for each of the constructs were normally distributed; therefore, we used parametric statistics to analyze these data.

To examine whether faculty perspectives of their own instructional practices are predictive of student perspectives of faculty instructional practices, we used simple linear regression with faculty construct score as the independent variable and student construct score as the dependent variable for each construct independently. We report statistical significance with α = 0.05 and R² as an estimate of the proportion of the variation in student construct score explained by variation in faculty construct score. To determine whether mean perceptions of faculty and students differed, we used paired t tests for each construct, because instructor scores and student scores can be paired for a particular course. In addition, we compared the variability in faculty and student responses for each construct by estimating the coefficient of variation, a standardized measure of variability.
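The three quantities named in this paragraph can be sketched with textbook formulas: the least-squares fit and its R², the paired t statistic, and the coefficient of variation (standard deviation divided by the mean). The function names and example scores are hypothetical, the direction of the paired difference (faculty minus student) is an assumption, and p values are omitted because they would require a t distribution that the Python standard library does not provide.

```python
from math import sqrt
from statistics import mean, stdev


def linear_fit(x, y):
    """Least-squares slope, intercept, and R^2 for predicting y from x."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    return slope, intercept, 1 - ss_res / ss_tot


def paired_t(x, y):
    """Paired t statistic and degrees of freedom for differences x - y."""
    diffs = [xi - yi for xi, yi in zip(x, y)]
    n = len(diffs)
    return mean(diffs) / (stdev(diffs) / sqrt(n)), n - 1


def coefficient_of_variation(values):
    """Sample standard deviation divided by the mean."""
    return stdev(values) / mean(values)


# Made-up construct scores for five courses (1-4 scale).
faculty = [2.0, 3.0, 4.0, 1.5, 3.5]
student = [2.9, 3.1, 3.3, 2.8, 3.0]

slope, intercept, r2 = linear_fit(faculty, student)
t_stat, df = paired_t(faculty, student)
print(f"slope={slope:.2f}, R^2={r2:.2f}, t={t_stat:.2f}, df={df}")
print(f"CV faculty={coefficient_of_variation(faculty):.2f}, "
      f"CV student={coefficient_of_variation(student):.2f}")
```

Because the coefficient of variation is scaled by the mean, it allows the spread of faculty and student scores to be compared even when their means differ.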

The intent of the current study was not to evaluate the wide range of potential causes for variation in student perceptions of faculty instructional practices. However, student academic experience and self-efficacy have been suggested as influences on students’ perceptions of inquiry (Welsh, 2012; Trujillo and Tanner, 2014). As a result, we examined whether the number of previously taken college laboratory courses and self-efficacy as it relates to science process skills might influence student perceptions of faculty instructional practices. As part of a more extensive survey at the beginning of the semester, we asked students about the number of previous college laboratory courses they had taken. The level of these previous laboratory courses and how these previous laboratory courses were taught is unknown. In the survey at the beginning of the semester, students also rated their self-efficacy as it relates to science process skills, using a 12-item survey with a five-point Likert scale ranging from “not confident” to “very confident” (based on Champagne, 1989; see the Supplemental Material). This survey addressed the science process skills we were interested in as part of our broader study. The student academic experience and self-efficacy survey was administered by each laboratory instructor at the beginning of the semester and was completed by students either online or on paper.

The self-efficacy survey showed excellent reliability for our sample (Cronbach’s alpha = 0.93). Therefore, we used the average score for the 12 items as a measure of self-efficacy. The number of previous laboratory courses was tabulated in five categories (0, 1, 2, 3, and 4 or more). We used general linear models with the different instructional practices constructs as dependent variables and the number of previous laboratory courses and student self-efficacy as independent variables. Pairwise comparisons between the different categories for the number of previous laboratory courses were made based on estimated marginal means at the average self-efficacy score, and p values were adjusted for multiple comparisons using a Bonferroni adjustment.
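A Bonferroni adjustment multiplies each p value by the number of comparisons and caps the result at 1. The sketch below assumes that all pairwise comparisons among the five course-count categories were tested, which gives 10 comparisons; the unadjusted p values are invented for illustration and the function name is hypothetical.

```python
from itertools import combinations


def bonferroni_adjust(p_values):
    """Multiply each p value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Five categories for the number of previous laboratory courses.
categories = ["0", "1", "2", "3", "4 or more"]
# Assumption: every pair of categories is compared, giving 10 comparisons.
pairs = list(combinations(categories, 2))

# Made-up unadjusted p values, one per pairwise comparison.
raw_p = [0.004, 0.30, 0.02, 0.65, 0.11, 0.048, 0.90, 0.007, 0.25, 0.51]
adjusted = bonferroni_adjust(raw_p)

for (a, b), p, p_adj in zip(pairs, raw_p, adjusted):
    flag = "significant" if p_adj < 0.05 else "not significant"
    print(f"{a} vs {b}: p = {p:.3f}, adjusted p = {p_adj:.3f} ({flag})")
```

With 10 comparisons, an unadjusted p value must fall below 0.005 to remain significant at α = 0.05 after adjustment.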

RESULTS

Exploratory Factor Analysis

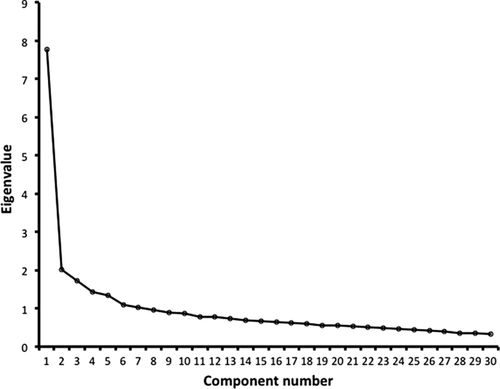

Seven factors had eigenvalues greater than 1 and explained 54.7% of the variation in the data (see Supplemental Table S1). However, only the first four factors had acceptable internal reliabilities (Cronbach’s alpha > 0.6). In contrast, examination of a scree plot suggested that we retain five factors that explained 47.6% of the variation (Figure 1). Including additional factors did not substantially increase the amount of variance explained. The first three factors had high internal reliabilities (Cronbach’s alpha > 0.7). Because many of the items in each of the first four factors in the seven-factor model were grouped together in the same way in the five-factor model (see Supplemental Table S1), we used the five-factor model as the basis for the development of our constructs (Table 1).

FIGURE 1. Scree plot from exploratory factor analysis.

The first factor contained seven items, six of which were related to metacognition, feedback, and assessment (Table 1). The seventh item (“You work on projects that are meaningful to you”) was conceptually unrelated to these constructs and had a low factor loading (0.43). Removing this item did not change the internal reliability of the factor (Cronbach’s alpha = 0.85). Although the remaining six items group together as a factor and might relate to the same underlying construct, the items are more easily understood as two separate constructs (metacognition, and feedback and assessment) that are significantly correlated with one another. Both constructs are composed of three items and have high internal reliability (Cronbach’s alpha = 0.82 for metacognition and 0.76 for feedback and assessment). The second factor also contained seven items (Table 1). Although some items had low factor loadings, all of the items concerned student skills related to the synthesis of information. This scientific synthesis construct has an internal reliability of 0.79. The third factor was composed of eight items, mostly related to experimental design and science process skills (Table 1). One item (“I used project criteria (rubrics) that I helped establish to gauge what I am learning”) had a low factor loading (0.39) and was more conceptually related to assessment than science process skills. As a result, we removed this item from the construct, which had a minimal impact on internal reliability, reducing it from 0.73 to 0.71. The fourth factor had four items related to instructor-directed teaching or “cookbook” labs (Table 1). One item (“Your instructor lectured in lab”) had a low factor loading (0.33), likely because instructors lecture in the majority of laboratory courses. Removing this item increased the internal reliability to 0.61, which is low but still considered acceptable (DeVellis, 2012).
Our final factor contained four items that were not conceptually related, and the construct had low internal reliability (Cronbach’s alpha = 0.53). As a result, we removed this construct from our analysis.

Faculty and Student Perceptions of Instructional Practices

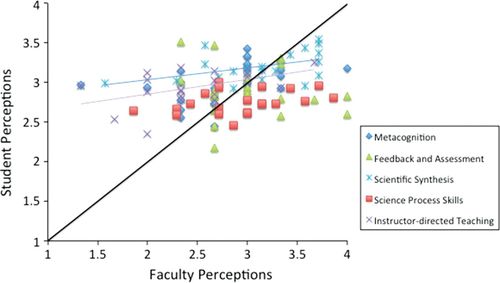

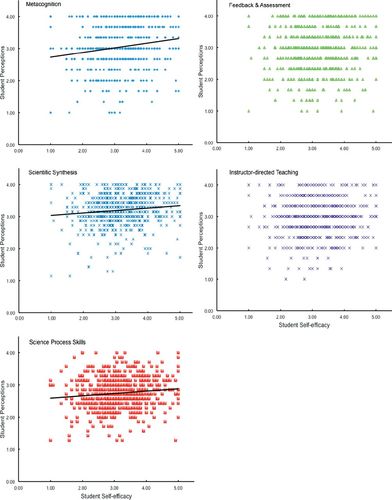

At the course level (n = 39), faculty and student perceptions of instructional practices were largely unrelated (Figure 2 and Supplemental Table S2). However, faculty perceptions of how often students were required to use scientific synthesis skills were significantly positively related to mean student perceptions of how often they were required to use these skills (p < 0.001). Yet, faculty perceptions explained <30% of the variation in mean student perceptions across courses (R² = 0.28). At the instructor level (n = 21), faculty and student perceptions of instructional practices were again largely unrelated (Figure 3 and Supplemental Table S3). Faculty and student perceptions of the frequency of scientific synthesis skills and their perceptions of instructor-directed teaching were significantly positively related (p = 0.04 and p = 0.03, respectively). Yet faculty perceptions again explained little of the variation in mean student perceptions across instructors (R² = 0.21 and 0.23, respectively).

FIGURE 2. Relationship between faculty and student perceptions of instructional practices at the course level (n = 39). The regression is shown for scientific synthesis (R² = 0.277, p = 0.001) with reference line for 1:1 perceptions.

FIGURE 3. Relationship between faculty and student perceptions of instructional practices at the instructor level (n = 21). Regression lines are shown for scientific synthesis (R² = 0.211, p = 0.036) and instructor-directed teaching (R² = 0.232, p = 0.027) with reference line for 1:1 perceptions.

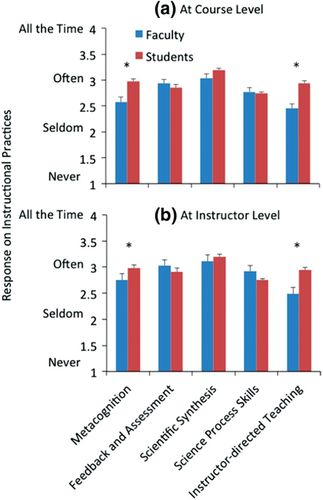

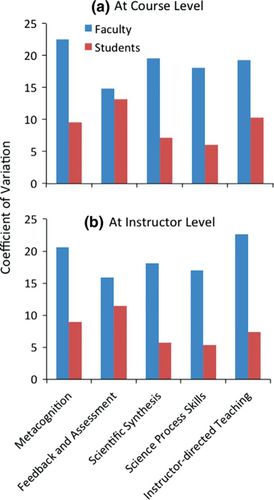

The lack of significant relationships between faculty and student perceptions of instructional practices might be due to biases in how students or faculty use the scale in our instrument. At the course level, students perceived that their laboratory courses promoted metacognition significantly more often than did faculty (t = −4.42, df = 38, p < 0.001; Figure 4a). Students also perceived their laboratory courses to be more instructor directed than did faculty (t = −6.24, df = 38, p < 0.001; Figure 4a). In addition, faculty perceptions were much more variable across courses than student perceptions for all of the constructs except feedback and assessment (Figure 5a). Faculty seemed to make fuller use of the scale than students, with student responses focusing on “often” or “somewhat” (3 on the four-point Likert scale). At the instructor level, we found the same patterns that we found at the course level (Figures 4 and 5).

FIGURE 4. Differences in mean perceptions of instructional practices for faculty and students at (a) the course level and (b) the instructor level. Error bars represent 1 SE. Significant differences between student and faculty mean responses are indicated by the asterisks (paired t test, p < 0.05).

FIGURE 5. Coefficient of variation in perceptions of instructional practices for faculty and students at (a) the course level and (b) the instructor level. The coefficient of variation is a standardized measure of variation.

Predictors of Student Perceptions of Instructional Practices

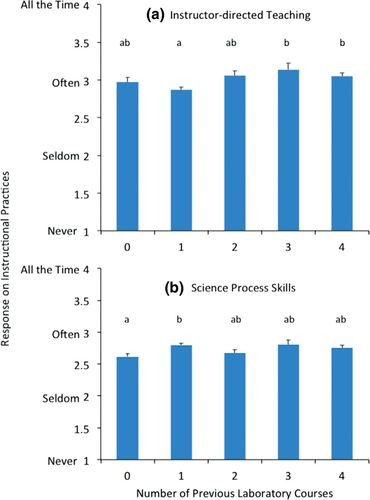

As a consequence of the disconnect between faculty and student perceptions of instructional practices, we were interested in some other factors that might influence student perceptions of instructional practices. Students’ confidence in their science process skills at the beginning of the semester was significantly positively related to metacognition (n = 636, t = 3.76, p < 0.001), scientific synthesis (n = 636, t = 2.49, p = 0.01), and science process skills (n = 636, t = 2.68, p < 0.01; Figure 6). However, feedback and assessment (n = 636, t = −0.138, p = 0.89) and instructor-directed teaching (n = 636, t = 1.05, p = 0.30) were not significantly related to student self-efficacy (Figure 6). In contrast, the number of previous laboratory courses had a significant effect on student perceptions of instructor-directed teaching (F(4, 630) = 3.81, p = 0.005; Figure 7a). Students who had taken three or more laboratory courses perceived their current course to be more instructor directed compared with students who had only taken one previous laboratory course. Student perceptions of the degree to which science process skills were incorporated into their course also were significantly affected by the number of previous laboratory courses (F(4, 630) = 2.86, p = 0.02; Figure 7b). However, the only contrast that was significant was between students who had taken one previous laboratory course and students who had taken no previous laboratory courses, with higher ratings given by students who had taken one previous laboratory course. The number of previous laboratory courses did not significantly influence student perceptions for the other three constructs (feedback and assessment: F(4, 630) = 0.79, p = 0.53; scientific synthesis: F(4, 630) = 2.18, p = 0.07; metacognition: F(4, 630) = 2.42, p = 0.05, but no post hoc, pairwise comparisons were significant).

FIGURE 6. Relationship between student self-efficacy in science process skills at the beginning of the semester and students’ perceptions of faculty instructional practices. Separate graphs are shown for each of the five instructional practice constructs. Regression lines are shown for metacognition (n = 636, t = 3.76, p < 0.001), scientific synthesis (n = 636, t = 2.49, p = 0.01), and science process skills (n = 636, t = 2.68, p < 0.01). Feedback and assessment (n = 636, t = −0.138, p = 0.89) and instructor-directed teaching (n = 636, t = 1.05, p = 0.30) were not significantly related to student self-efficacy.

FIGURE 7. Estimated marginal means (± 1 SE) for (a) instructor-directed teaching and (b) science process skills at the average self-efficacy score based on the number of previous laboratory courses. The p values for multiple comparisons were adjusted using a Bonferroni adjustment. Marginal means with the same letter are not significantly different.

DISCUSSION

In this study, we designed a 30-question survey to be administered to both students and faculty to determine instructional practices in laboratory courses. Based on an exploratory factor analysis, 24 of the items could be grouped into five constructs with acceptable to high internal reliability (Cronbach’s alpha from 0.61 to 0.82). The constructs measure the degree to which laboratory courses include instructional practices related to metacognition, feedback and assessment, scientific synthesis, science process skills, and instructor-directed teaching (Table 1). The majority of constructs reflect the different aspects of inquiry-based learning defined in the National Science Education Standards (NRC, 1996) and America’s Lab Report (NRC, 2005) and assessment for learning (Davies, 2011). In contrast to the other constructs, the instructor-directed teaching construct is negatively associated with inquiry-based teaching and learning in laboratory courses, as instructors either provide experimental designs or require students to develop particular experimental designs and the results of experiments are already known.

Our final survey and the related constructs differ from those proposed by Campbell et al. (2010), although both surveys were based on similar aspects of inquiry-based learning. Campbell et al. (2010) proposed two constructs that were similar, but not identical, for their teacher and student surveys. One of the constructs they interpreted as representing a shift from teacher-centered instruction to student-centered instruction, and the other construct they interpreted as representing traditional methods of instruction. Neither construct corresponded directly to the aspects of inquiry-based learning (NRC, 1996, 2005; Campbell et al., 2010). The differences in the final constructs based on their surveys and ours may have several causes. First, our factor analysis was based on survey responses from a diverse population of undergraduate students. In contrast, their factor analyses were based on survey responses from secondary school teachers and students (Campbell et al., 2010). Second, we used survey responses from almost 900 students for our factor analysis, whereas their factor analyses were based on responses from 88 teachers and 130 students (Campbell et al., 2010), and exploratory factor analyses based on smaller samples can lead to different factor structures than those based on larger samples (Costello and Osborne, 2005). For these reasons, we feel confident that our survey and constructs are appropriate for use in undergraduate biology laboratory courses at diverse institutions.

Our constructs also differ from those recently proposed by Corwin et al. (2015b) in their Laboratory Course Assessment Survey. Their survey examined the degree to which students participated in collaboration, discovery, and iteration. However, these differences are not surprising, in that the intent of their survey was to be able to distinguish between course-based research courses and traditional laboratory courses, whereas our intent was to develop a survey to assess instructional and assessment practices across a range of inquiry-based laboratory courses. As a result, the intents of the surveys and potential future uses of the surveys differ.

In addition to developing a survey to assess instructional practices in inquiry-based laboratory courses, we were interested in the degree to which student and faculty perceptions of instructional practices in the same courses agreed. Perhaps not surprisingly, student and faculty perceptions of instructional practices did not correspond for most of the constructs. At the course level, we did find a significant positive relationship between faculty and student perceptions of instructional practices related to scientific synthesis. At the instructor level, faculty and student perceptions were significantly positively related for scientific synthesis and instructor-directed teaching. However, in all cases, the relationships were not strong, with variation in faculty perceptions explaining <30% of the variation in student perceptions. This disconnect between faculty and student perceptions of instructional practices is in line with previous studies that found differences between instructors and students in their perspectives on the purpose of courses (Volkmann et al., 2005; Melnikova, 2015) and between students and outside faculty observers in their perspectives of what content was taught (Hrepic et al., 2007).

The general lack of a relationship between faculty and student perceptions of instructional practices could be due to a variety of reasons. Faculty and students might use the scale on our survey differently. For example, students might generally score courses higher (or lower) than faculty. However, for three of the five instructional practices, mean faculty and student perceptions did not differ. For the instructor-directed teaching and metacognition constructs, students perceived courses to use these approaches more often than faculty did. In contrast to mean perceptions, the variability in faculty and student perceptions was quite different. Student perceptions were far less variable across courses than faculty perceptions, with most student responses limited to the upper half of the four-point Likert scale on our survey. The fact that faculty used the entire scale suggests that they might have more nuanced views of their instructional practices than do students. Aside from differences in how faculty and students responded to our survey, the two groups might differ in their perceptions of instructional practices if faculty do not articulate clearly the approaches they are using and why they are using these approaches. This possibility suggests that, at the very beginning of an inquiry-based activity, faculty should discuss with students the different aspects of the scientific process and each student’s role in the inquiry process that will be conducted.

Because faculty and student perceptions of instructional practices did not align, we were interested in other factors that might influence student perceptions. Students who had taken three or more laboratory courses thought their current courses were more instructor directed. However, students with one previous laboratory course perceived their courses to include more science process than students with no previous laboratory courses. The cause of these patterns is not clear. Other studies have found that the number of years in college affects student perceptions of inquiry and their perceptions of the importance of inquiry-based instructional practices for their learning (Welsh, 2012). Future research needs to determine how previous experience with inquiry-based learning influences current perceptions of instructional practices. Finally, we found that students who were more confident in their science process skills at the beginning of the semester were more likely to rate courses high in terms of metacognition, science process, and scientific synthesis. It remains to be determined whether students who are more confident in their abilities are more receptive to inquiry-based practices and therefore perceive their current courses to be more inquiry based (Trujillo and Tanner, 2014). In general, a more detailed study of student factors that influence their perceptions of faculty instructional practices in inquiry-based laboratory courses is warranted.

Active learning in lecture courses (Freeman et al., 2014) and inquiry-based learning (guided inquiry, open-ended inquiry, and course-based research) in laboratory courses (Beck et al., 2014; Corwin et al., 2015a) lead to improved student learning outcomes. However, which specific components of these instructional practices lead to these improved outcomes is unclear (Umbach and Wawrzynski, 2005; Corwin et al., 2015a; Dolan, 2015). Furthermore, our finding that faculty and student perceptions of instructional practices do not correspond indicates the need to determine whether faculty perceptions or student perceptions of instructional practices are more predictive of learning gains.

Past research on the relationship between perceptions (of faculty or students) and student learning gains comes to a range of conclusions, leading us to assert that neither faculty nor student perceptions alone will best predict future learning gains. Umbach and Wawrzynski (2005) found significant positive relationships at the institutional level between faculty reports of active and collaborative instructional practices and both student engagement and student self-reported gains in learning and intellectual development. However, student perceptions of instructional practices themselves may be highly variable. Welsh (2012) conducted a survey of undergraduate student perceptions of the importance of active learning for their academic performance in science and mathematics lecture courses. She found highly diverse perceptions of the importance of active learning, with perceptions varying based on gender and experience. Similar mixed results were found in a study of student perceptions of the value of active learning in a cross-disciplinary course (Machemer and Crawford, 2007).

Future research should focus on whose perceptions (faculty or student) of instructional practices are most accurate for predicting specific student learning gains in undergraduate laboratory courses. The best predictor might not be the same for all learning outcomes. In addition, the specific context (community college, liberal arts college, regional university, or research university) and the student population (ethnicity, gender, educational experience, economic background, and family education level) may be very important (Kardash and Wallace, 2001; Eddy and Hogan, 2014). This kind of specificity is necessary if we are to develop instructional practices and foster perceptions that lead to the best possible outcomes for the students who are in our courses.

ACKNOWLEDGMENTS

We thank Tom McKlin and Shelly Engelman of the Findings Group for their help in survey development and initial data analysis. We also thank Beth Schussler for pointing us in the direction of some relevant literature. Members of the Science Education Research Journal Club at Emory University provided valuable feedback on an earlier draft of the manuscript. The study was approved by the institutional review boards of Morehouse College and Emory University and was funded by National Science Foundation (NSF) grants DUE-0815135 and DUE-0814373 to Morehouse College and Emory University, respectively. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the NSF.