“Oh, that makes sense”: Social Metacognition in Small-Group Problem Solving

Abstract

Stronger metacognition, or awareness and regulation of thinking, is related to higher academic achievement. Most metacognition research has focused at the level of the individual learner. However, a few studies have shown that students working in small groups can stimulate metacognition in one another, leading to improved learning. Given the increased adoption of interactive group work in life science classrooms, there is a need to study the role of social metacognition, or the awareness and regulation of the thinking of others, in this context. Guided by the frameworks of social metacognition and evidence-based reasoning, we asked: 1) What metacognitive utterances (words, phrases, statements, or questions) do students use during small-group problem solving in an upper-division biology course? 2) Which metacognitive utterances are associated with small groups sharing higher-quality reasoning in an upper-division biology classroom? We used discourse analysis to examine transcripts from two groups of three students during breakout sessions. By coding for metacognition, we identified seven types of metacognitive utterances. By coding for reasoning, we uncovered four categories of metacognitive utterances associated with higher-quality reasoning. We offer suggestions for life science educators interested in promoting social metacognition during small-group problem solving.

INTRODUCTION

As researchers investigate ways to support life science instructors’ use of interactive learning in their classes (Wilson et al., 2018), there is a parallel need to uncover processes that help students fully benefit from these increasing opportunities. Successful interactive group work includes collaboration, or engagement in a coordinated effort to reach a shared goal. Social metacognition, or awareness and regulation of the thinking of others, can increase effective student collaboration during interactive group work (e.g., Kim and Lim, 2018). To support life science students’ use of social metacognition during group work, we need to characterize social metacognition in the context of the life sciences. Then we can use our understanding of social metacognition in the life sciences to provide guidance, such as prompts to pose during group work, that helps students fully benefit from opportunities to collaborate with their peers.

In this study, we characterize the unprompted social metacognition life science undergraduates use when they work in small groups to solve problems. We investigate the aspects of their social metacognition that are associated with higher-quality reasoning, which we define as reasoning that is correct, backed by evidence, and generated by more than one individual. Our analysis of student conversation during small-group problem solving draws upon several guiding frameworks. In the following sections, we present relevant background information on the frameworks we use to guide our study of interactive group work, social metacognition, and reasoning.

Increasing Adoption of Interactive Group Work

Interactive group work is increasingly being adopted in college science classrooms (Wilson et al., 2018). Having students work in small groups to solve problems helps students develop essential skills, like collaboration, which are valued in the sciences (Kuhn, 2015; National Research Council [NRC], 2015). The adoption of group work also aligns with the view that knowledge construction is a socially shared activity, rather than an individual one. Social cognitive theory, or the idea that learning occurs in a social context from which it cannot be separated (Vygotsky, 1978; Bandura, 1986), forms the foundation behind the promotion of interactive group work.

One framework for studying interactive group work is the ICAP framework, which hypothesizes that learning improves as students’ cognitive engagement progresses from passive to active to constructive to interactive, with the deepest level of understanding occurring in the interactive mode (Chi and Wylie, 2014). The interactive mode occurs when students take frequent turns in dialogue with one another by interjecting to ask questions, make clarifications, and explain ideas (Chi and Wylie, 2014). Through this exchange of dialogue, students are able to infer new knowledge from prior knowledge in an iterative and cooperative manner as they take conversational turns. When students work in groups to solve problems, they can employ the social practice of conversation or discourse (Cameron, 2001; Rogers, 2004) to co-construct knowledge in the interactive mode (Chi and Wylie, 2014). As more science instructors implement group work in their courses, we need to better understand how social learning contexts impact important aspects of learning, like metacognition.

Metacognition in Social Learning Contexts

Metacognition, or the awareness and control of thinking for the purpose of learning, is linked to higher academic achievement and can be engaged at the individual or social level. Metacognition is composed of two components: metacognitive knowledge and metacognitive regulation (Schraw and Moshman, 1995). Metacognitive knowledge consists of what one knows about their own thinking and what they know about strategies for learning. Metacognitive regulation consists of the actions one takes to learn, including planning strategy use for future learning, monitoring understanding and the effectiveness of strategy use during learning, and evaluating plans and adjusting strategies based on past learning (Schraw and Moshman, 1995). Metacognition gained prominence in cognitive science and education over the last 50 years because of its relationship to enhanced individual learning (Tanner, 2012; Stanton et al., 2021). For example, students with stronger metacognitive skills learn more and perform better than peers who are less metacognitive (e.g., Wang et al., 1990).

Most research on metacognition has focused at the level of the individual learner. Metacognition was initially conceptualized as an individual process, because discussions on learning were influenced by Piaget’s individual-based theory of cognitive development (Brown, 1978). Since then, some researchers have conceived of metacognition more broadly as people’s thoughts about their own thinking and the thinking of others (Jost et al., 1998). In essence, metacognition includes both individual and social components. Individual metacognition is one’s awareness and regulation of one’s own thinking for the purpose of learning, and social metacognition is awareness and regulation of others’ thinking for the purpose of learning (Stanton et al., 2021). While the theoretical boundaries between individual and social metacognition are clear, distinguishing between the two in practice can be challenging. For example, during small group work, it can be difficult to know whether a student’s spoken metacognition is directed inward versus outward (i.e., a reflection of individual vs. social metacognition). However, when a student shares their metacognition in this way, it could potentially stimulate metacognition in another group member. For this reason, we operationally define social metacognition as metacognition that is shared verbally during collaborative work.

Social metacognition, also known as socially shared metacognition, has been explored in just a few disciplines, such as mathematics (Goos et al., 2002; Smith and Mancy, 2018), physics (Lippmann Kung and Linder, 2007; Van De Bogart et al., 2017), and the learning sciences (Siegel, 2012; De Backer et al., 2015, 2020). Social metacognition researchers have focused on identifying the metacognitive “utterances,” or words, phrases, statements, or questions, students use during small-group problem solving. Metacognitive utterances are identified through discourse analysis, which is the investigation of socially situated language (Cameron, 2001; Rogers, 2004). From these foundational discourse analyses in other disciplines, a conceptual framework of social metacognition emerged. Social metacognition can happen when students share or disclose their ideas to peers, invite their peers to evaluate their ideas, or evaluate ideas shared by peers (Goos et al., 2002). Social metacognition also occurs when students enact, modify, or assess their peers’ strategies for problem solving (Van De Bogart et al., 2017).

Research on social metacognition in other disciplines has shown that students working in small groups can stimulate metacognitive processes in one another, leading to improved learning. For example, researchers found that one variation in social metacognitive dialogue, which they called “interrogative” (i.e., evoked by a thought-provoking trigger and generally followed by elaborative reactions), was positively related to college students’ individual performance on a learning sciences knowledge test (De Backer et al., 2020). Middle school students who came up with a correct solution as a group had higher levels of metacognitive interactions or made more metacognitive utterances during group problem solving (Artz and Armour-Thomas, 1992). Support for this finding was provided by a comparison of successful versus unsuccessful problem solving in a high school math class. Successful problem solving (i.e., working together as a group to come to a correct solution on a math problem) involved students assessing one another’s ideas, correcting incorrect ideas, and endorsing correct ideas, while during unsuccessful problem solving, students lacked critical engagement with one another’s thinking (Goos et al., 2002).

Although metacognitive utterances have been identified in a few disciplinary contexts, the metacognitive utterances that college students use during small-group problem solving in the life sciences have yet to be documented. Social cognitive theory posits that learning is socially situated, meaning it is specific to the context and social environment in which it is embedded (Bandura, 1986). This means learning is not easily transferable from one context to another. For example, the nature of metacognition that occurs in a high school calculus class using problem-based learning could differ from that which occurs in a college biology class using process-oriented guided inquiry learning (POGIL). Additionally, just because a student can use metacognition in their calculus class does not necessarily mean they will employ the same metacognition in their biology class. Given that the metacognition students use can differ based on context, defining the metacognitive utterances life science majors use during small-group problem solving is an important first step for understanding social metacognition in the life sciences. We can then use this understanding to provide guidance to students as they work together in groups.

Social metacognition has also been linked to reasoning. In physics labs, metacognitive utterances impacted learning behavior by helping students transition from logistical to reasoning behavior. For example, a metacognitive utterance helped students transition from recording data (logistical behavior) to assessing their experimental design (reasoning behavior). The action the group takes after metacognitive utterances seems to be what matters most for successful problem solving in physics labs (Lippmann Kung and Linder, 2007). Research in grade school mathematics classrooms indicated a positive association between metacognitive talk and transactive talk, or reasoning that operates on the reasoning of another. Results from this study suggest that metacognitive talk is more likely to be preceded or followed by reasoning (Smith and Mancy, 2018). These promising results indicate that social metacognition is associated with improved reasoning in other disciplinary contexts.

Reasoning in Social Learning Contexts

The skill or practice of scientific reasoning is a valued outcome of science education and a focus of major science education reform efforts (NRC, 2007; American Association for the Advancement of Science, 2011). Scientific reasoning reflects the disciplinary practices of scientists and can create a more scientifically literate society. Scientific reasoning is the process of constructing an explanation for observed phenomena or constructing an argument that justifies a claim. Scientific reasoning skills include identifying patterns in data, making inferences, resolving uncertainty, coordinating theory with evidence, and constructing evidence-based explanations of phenomena and arguments that justify their validity (Osborne, 2010).

One important framework for reasoning is Toulmin’s argument pattern. Toulmin described an argument as the relationship between a claim, the information that supports the claim, and an explanation for why the claim flows logically or causally from the information (Toulmin, 2003). Toulmin’s argument pattern framework is domain general in nature and therefore does not include an assessment of whether an argument is coherent or accurate. Many studies have used Toulmin’s argument pattern to guide analysis of scientific reasoning in group discourse (Osborne et al., 2004; Sampson and Clark, 2008; Knight et al., 2013, 2015; Paine and Knight, 2020). A key adaptation of Toulmin’s argument pattern is the evidence-based reasoning framework (Brown et al., 2010).

The evidence-based reasoning framework is “intended to help researchers and practitioners identify the presence and form of scientific argumentation in student work and classroom discourse” (Brown et al., 2010, p. 134). The evidence-based reasoning framework draws distinctions between the component parts of scientific reasoning by identifying claims, premises, rules, evidence, and data (Supplemental Figure 1). A “claim” is a statement about a specific outcome phrased as either a prediction, observation, or conclusion. A “premise” is a statement about the circumstances or input that results in the output described by the claim. A “rule” is a statement describing a general relationship or principle that links the premise to the claim. “Evidence” is a statement about an observed relationship, and “data” are reports of discrete observations. Together, rules, evidence, and data can be considered forms of backing (Furtak et al., 2010). For example, as someone is driving, they may think, “This traffic light is yellow. Yellow lights quickly turn to red, so I will slow down.” In this example, the statement “This traffic light is yellow” is a premise, “Yellow lights quickly turn to red” is backing (specifically, a rule), and “I will slow down” is a claim. The evidence-based reasoning framework suggests that more sophisticated scientific reasoning occurs when students make a claim supported by backing (Brown et al., 2010; Furtak et al., 2010). Reasoning quality is relative and likely occurs on a qualitative continuum from lower to higher quality. One of our goals was to characterize this reasoning quality continuum. In this study, we use the evidence-based reasoning framework as a starting point to identify instances of complete reasoning during student discourse or conversation. We expand the continuum so that higher-quality reasoning also includes the consideration of correctness and the transactive nature of the reasoning (i.e., whether the reasoning was generated by more than one individual).

Research Questions

With the increasing adoption of interactive group work in undergraduate life science classrooms, there is a need to study the role of social metacognition, or metacognition that occurs out loud in a social learning context, and its relationship to reasoning. To address this gap, we used discourse analysis of small-group problem solving from an upper-division biology course to address the following qualitative research questions:

What metacognitive utterances do students use during small-group problem solving in an upper-division biology course?

Which metacognitive utterances are associated with small groups sharing higher-quality reasoning in an upper-division biology classroom?

METHODS

Context and Data Collection

This study was conducted at a large, public, research-intensive university in the southeastern United States. Participants were recruited from an upper-level cell biology course taken by life science majors in 2018. Average enrollment in this course was ~80 students per section. The course consisted of an interactive lecture 3 days a week and a smaller breakout session once per week (~40 students per breakout session). The breakout sessions were held in a SCALE-UP classroom designed to facilitate group work with multiple monitors and round tables where students sat in groups of three (Beichner et al., 2007). During weekly breakout sessions, students worked in small groups of three to solve problem sets in person using a pen-and-paper format.

The problem sets were designed using guided-inquiry principles (Moog et al., 2006). The problems scaffolded student learning about a cell biology concept and asked students to analyze relevant published scientific data. The first problem set covered import of proteins into the nucleus (“nuclear import”), and the second problem set focused on transcription. The breakout session problem sets were formative assessments and were not letter graded but were highly aligned to the learning objectives and the exams in the course. Approximately 40% of the exam points covered material from the breakout sessions. In lieu of letter grades, a graduate teaching assistant provided written feedback to the groups on their completed problem sets, similar to the feedback that would be provided if the problem set were an exam.

To form groups during the breakout sessions, participants were allowed to pick their own group of three or they could opt to be randomly assigned to a group. The groups of three did not change during the course of the study. In each group, there were three roles: manager, presenter, and recorder (Stanton and Dye, 2017). The recorder was responsible for writing and turning in the group’s answers for a participation grade and feedback. The presenter was responsible for sharing group results during the whole-class discussion at the end of the breakout session and for sharing group solutions on dry-erase boards during the breakout session. The manager was responsible for keeping the group on task during the allotted time. Group roles were randomly assigned at the start of each breakout session and rotated week to week. The study was classified by the University of Georgia’s Institutional Review Board as exempt (STUDY00006457).

Four groups of three students each agreed to be audio-recorded during two consecutive breakout sessions. Each participant was compensated $20 for participation in the study, and all participants provided written consent. To accurately record individuals in a group setting, each group member was individually microphoned using 8W-1KU UHF Octo Receiver System equipment (Nady Systems, Inc.). After the audio recordings were collected, the individual recording tracks were synced and merged into one recording per group per breakout session using Steinberg Cubase software.

The audio recordings were professionally transcribed (Rev.com), and the transcripts were checked to ensure accuracy before analysis. The following transcription conventions were used: 1) speaker turns were arranged in vertical format, with all speaker turns arranged in a single column one above another to reflect the equal status of each speaker as students; 2) utterances ending in a sharp rising intonation were considered questions and were signified with a question mark (?); 3) a single dash (-) following a word was used to indicate interrupted, truncated, or cut-off words or phrases; 4) an ellipsis (…) was used to indicate pauses in speech or when a speaker trailed off; and 5) when available, researchers provided interpretations of nonspecific pronouns (e.g., this and that), which are indicated in brackets ([]) (Du Bois et al., 1992; Edwards, 2005). We did not use transcription conventions to signify overlapping stretches of speech, but these instances were coded. Participant names were changed to pseudonyms in the transcripts. The transcripts and accompanying audio serve as the primary data for this study.

Here, we report on data from two of the four groups during two consecutive breakout sessions. The data from the third group were excluded from analysis because one group member was absent the day of the second recording. The data from the fourth group were excluded from further analysis because the group spent a significant amount of time looking through their notes and reading them to one another rather than discussing their thoughts about the problem set. Our rationale for this decision was that we could not study social metacognition if it was not evident. We will refer to the two groups analyzed in this study as Group A and Group B. The groups attended separate breakout sessions on the same afternoons. Group A consisted of three women: Bella, Catherine, and Michelle, who elected to work together. Group B consisted of one woman and two men: Molly, Adam, and Oscar, who were assigned to work together. The group roles of the participants for each problem set can be found in Supplemental Table 1. Our sample size, while small, is in alignment with sample sizes for foundational discourse analysis (Cameron, 2001; Rogers, 2004). For example, one study on social metacognition involved the analysis of one pair of students working on a single physics laboratory problem (Van De Bogart et al., 2015).

Timeline Creation and Analysis of Silence

Each transcript was analyzed to create a timeline of each breakout session. Start and end times of on-task work, which we defined as students directly working on the problem set, and off-task work, which we defined as students discussing ideas unrelated to the problem set, were recorded. Next, two researchers (S.M.H. and E.K.B.) listened to the audio recording for each transcript and recorded the start and end times of all silences equal to or greater than 5 seconds in length. The duration of the silences was summed for each transcript. Using this information, a percentage of time spent in silence was calculated for each transcript as follows:
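The formula itself is not reproduced in this text. As a minimal sketch of one plausible computation, the Python below sums the silences that meet the 5-second threshold and divides by the total session length. The choice of total session length as the denominator is an assumption, and the function name and example intervals are hypothetical.

```python
from typing import List, Tuple

MIN_SILENCE_S = 5.0  # threshold from the text: silences of 5 seconds or longer


def percent_silence(silences: List[Tuple[float, float]], session_length_s: float) -> float:
    """Return the percentage of a session spent in silence.

    silences: (start, end) times in seconds for each silence.
    session_length_s: total session duration in seconds (assumed denominator).
    """
    if session_length_s <= 0:
        raise ValueError("session_length_s must be positive")
    # Only silences at or above the threshold contribute to the total.
    total = sum(end - start for start, end in silences if end - start >= MIN_SILENCE_S)
    return 100.0 * total / session_length_s


# Hypothetical example: three pauses in a 50-minute session; the 3-second pause is ignored.
example = [(60.0, 75.0), (300.0, 303.0), (1200.0, 1245.0)]
print(round(percent_silence(example, 50 * 60), 1))
```

If the intended denominator were on-task time rather than total session time, only the second argument would change.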

Qualitative Discourse Analysis

Transcripts of student discourse were analyzed by a team of researchers using MaxQDA 2020. Our basic unit of analysis was an utterance. An utterance is either a word, phrase, statement, or question that an individual or group of students makes while collaborating. A single line of speech from a single student could contain multiple utterances. For example, one student could say, “Yeah, I don’t know. What do you think?,” and this could be broken into three separate utterances with “Yeah” as a single word utterance, “I don’t know” as a phrase utterance, and “What do you think?” as a question utterance. Alternatively, multiple lines of speech from multiple students could compose a single utterance. For instance, when one student interrupts another to complete the other’s thought, the combined statement could be considered an utterance composed by two individuals. Our qualitative discourse analysis of these utterances occurred in multiple, iterative cycles.

First-Cycle Coding.

First-cycle coding began with open, initial coding of all the transcripts (Saldaña, 2021). The goal of our initial coding process was to begin identifying the utterances from our data set that related to social metacognition and reasoning. Two coding schemes were developed to investigate our research questions. The first codebook was developed to capture metacognitive utterances from student interactions. The second codebook was developed to capture the reasoning quality present in the discussion. This dual-coding methodology meant utterances could be double coded as both metacognitive and as a part of reasoning. Data were first coded as metacognitive utterances and then coded for reasoning quality (Figure 1). We discuss the development of each codebook in the following sections.

FIGURE 1. Schematic of dual-coding process. In step 1, types of metacognitive utterances throughout each transcript were coded. As a simplified example, the image under step 1 depicts five lines of a single transcript, three of which contain a metacognitive utterance. Metacognitive utterances are words, phrases, statements, or questions students made that were related to their awareness and control of thinking for the purposes of learning. In step 2, each transcript was segmented into problem episodes. Problem episodes consisted of sections of the transcript in which students were solving problems that dealt with cell biology data, e.g., “Explain the results in the YRC panel.” The image under step 2 shows that the first four lines of the example transcript make up a single problem episode (green box). In step 3, the problem episodes were further segmented into reasoning units. Reasoning units consisted of a chunk of discourse in which a student or students were discussing a single collection of connected ideas. The image under step 3 shows that the first two lines of the example transcript make up one reasoning unit, and the fourth line is a separate reasoning unit (purple boxes). In step 4, each reasoning unit was assigned a reasoning code (Supplemental Table 2). The image under step 4 shows the first reasoning unit was assigned with the level 7 code, and the second reasoning unit was assigned with the level 0 code (Supplemental Table 2). Attribution for images: Profile and Profile Woman by mikicon from the Noun Project.

Social Metacognition.

All four authors coded the transcripts for social metacognition, wrote analytic memos, and then met to discuss emergent ideas. Deductive codes originated from prior work on social metacognition: self-disclosure, feedback request, and other monitoring (Goos et al., 2002). We developed inductive, or emergent, codes based on the utterances present in our data and our knowledge of metacognition as a construct. We refined these codes through discussion, listening to the audio, and careful consideration of which codes aligned with our research questions. Two researchers (S.M.H. and E.K.B.) then coded all four transcripts individually and subsequently met as a team to discuss how each researcher applied the codes. These discussions led us to add, remove, or redefine our existing codes, further refining the codebook. Through these iterations, our codebook stabilized. We then revisited segments of the data that were selected for reasoning analysis and coded them to consensus with the stabilized social metacognition codebook until all discrepancies were resolved. Attribute coding, or the notation of participant characteristics (Saldaña, 2021), was also employed to take note of the group roles during the first-cycle coding for social metacognition. For example, in the nuclear import transcript for Group A, every line from Michelle was coded as a line from the manager, which was her assigned group role that day.

Reasoning.

A reliable, systematic methodology for 1) identifying reasoning and 2) assessing its quality was needed. Once sections of the transcripts related to reasoning about scientific data were identified in initial coding, the transcripts were broken into problem episodes. Problem episodes consisted of all utterances in a transcript in which students discussed an answer to a specific problem in the problem set (Figure 2, green boxes). For example, there was one problem episode from Group A for problem 2 in the transcription problem set. Given the emphasis on backing (rules, evidence, and data) in the evidence-based reasoning framework (Supplemental Figure 1), we selected only those problems that required students to analyze a diagram or data figure (questions 2 and 4 for the nuclear import problem set and questions 2, 3, and 5 for the transcription problem set).

FIGURE 2. Timelines of small group work. Group work timelines are represented as bar graphs with time (in minutes) on the x-axis. The timelines show how each group spent each breakout session. White sections of the timeline indicate when groups were silent. Black sections of the timeline indicate when groups were discussing the problem set. Blue sections of the timeline indicate when groups were discussing ideas unrelated to the problem set. Problem episodes selected for reasoning analysis are indicated on the timelines in green boxes. Groups did not finish the problem set in the same amount of time. For example, Group B finished the problem set for breakout session 1 early, whereas Group A did not complete the problem set in the allotted class time for breakout session 2.

Problem episodes were further parsed into reasoning units. Reasoning units are defined as a conversational chunk of discourse in which a student or students are discussing a single collection of connected ideas. Two researchers (S.M.H. and E.K.B.) then evaluated each reasoning unit against a list of a priori codes consisting of structural reasoning components (premise, claim, backing) derived from the evidence-based reasoning framework (Brown et al., 2010; Furtak et al., 2010) and correct and incorrect scientific ideas. The evidence-based reasoning framework does not account for accuracy of scientific information, but it was critical in our analysis of the data to consider accuracy (correct vs. incorrect scientific ideas) of the structural reasoning components. Another researcher (J.D.S.) provided insight into what counted as backing and correct scientific ideas throughout this process because of their expertise in the course content and context. We undertook this part of first-cycle coding together and discussed all discrepancies until consensus was reached.

Second-Cycle Coding.

Second-cycle coding began with establishing reasoning quality codes using our first-cycle codes for reasoning. The purpose was to assess the quality of reasoning that was occurring across three dimensions: a reasoning unit’s 1) transactive nature, 2) completeness, and 3) correctness. First, reasoning units were either transactive, meaning two or more students participated in reasoning and one of those students clarified, elaborated, or justified the reasoning of another student(s); or they were nontransactive, meaning individual students shared their reasoning but their reasoning was not operated on by another student (Kruger, 1993). Second, the reasoning units were either complete or incomplete. Complete reasoning units were defined by the presence of at least one clear claim, a premise, and some form of backing that connected the premise to the claim through data, evidence, or a rule (Brown et al., 2010; Furtak et al., 2010). Incomplete reasoning units were defined as having a claim and/or a premise but lacking a form of backing such as data, evidence, or a rule. Third, the reasoning units were either correct or mixed. A correct reasoning unit could either solely consist of correct scientific ideas, or it could contain an incorrect scientific idea, as long as that incorrect idea was ultimately corrected within the reasoning unit. In contrast, a mixed reasoning unit contained both correct and incorrect scientific ideas, but the incorrect ideas were never corrected. We chose the word “mixed” instead of “incorrect,” because no reasoning units were wholly incorrect.
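To make the three dimensions concrete, the following sketch expresses each definition as a simple check on a reasoning unit. It only illustrates the decision rules described above and is not the coding procedure itself, which relied on researcher judgment and consensus. The class, field names, and example values are hypothetical.

```python
from dataclasses import dataclass
from typing import Set


@dataclass
class ReasoningUnit:
    speakers: Set[str]        # students who contributed reasoning
    operated_on: bool         # did a student clarify, elaborate, or justify another's reasoning?
    has_claim: bool
    has_premise: bool
    has_backing: bool         # data, evidence, or a rule
    incorrect_ideas: int = 0  # incorrect scientific ideas raised in the unit
    corrected_ideas: int = 0  # how many of those were corrected within the unit

    def is_transactive(self) -> bool:
        # Two or more students, with one operating on another's reasoning.
        return len(self.speakers) >= 2 and self.operated_on

    def is_complete(self) -> bool:
        # A claim, a premise, and some form of backing must all be present.
        return self.has_claim and self.has_premise and self.has_backing

    def is_correct(self) -> bool:
        # "Correct" if no incorrect idea is left uncorrected; otherwise "mixed".
        return self.corrected_ideas >= self.incorrect_ideas


# Hypothetical unit: two students, full argument, one error that was later corrected.
unit = ReasoningUnit({"S1", "S2"}, True, True, True, True, incorrect_ideas=1, corrected_ideas=1)
print(unit.is_transactive(), unit.is_complete(), unit.is_correct())
```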

Combining these three binary parameters resulted in eight codes that were ordered by first prioritizing transactive over nontransactive behavior (Supplemental Table 2). This decision to view transactive behavior as more beneficial than nontransactive behavior aligns with the continuum outlined in the ICAP framework (Chi and Wylie, 2014) and the view that exchanges of reasoning are of higher quality (Knight et al., 2013). The ordering of the reasoning codes also reflects our decision to prioritize completeness of reasoning units over correctness. We made this choice, because it may be easier for instructors and students to correct ideas rather than to push students to reason with backing. This prioritization and ordering resulted in the view that transactive, complete, and correct reasoning units represent higher-quality reasoning, and nontransactive, incomplete, and/or mixed reasoning units represent lower-quality reasoning. These mutually exclusive codes were then applied to predefined reasoning units in selected problem episodes of the transcripts (Figure 1).
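The ordering described above can be sketched as a weighted sum in which transactivity outranks completeness, which in turn outranks correctness. The numbering from 0 to 7 is an assumption: it is consistent with the level 0, level 6, and level 7 codes mentioned in this text, but Supplemental Table 2 is not reproduced here, so the intermediate levels are inferred from the stated priorities.

```python
def reasoning_level(transactive: bool, complete: bool, correct: bool) -> int:
    """Map the three binary dimensions to an ordinal level (assumed range 0-7).

    Priority, highest first: transactive, complete, correct.
    """
    return 4 * int(transactive) + 2 * int(complete) + int(correct)


def describe(level: int) -> str:
    """Return a readable label for a level produced by reasoning_level."""
    parts = [
        "transactive" if level & 4 else "nontransactive",
        "complete" if level & 2 else "incomplete",
        "correct" if level & 1 else "mixed",
    ]
    return ", ".join(parts)


# List all eight codes from highest to lowest quality.
for level in range(7, -1, -1):
    print(level, describe(level))
```

Under this scheme, any transactive unit ranks above any nontransactive unit, and within each of those groups a complete unit ranks above an incomplete one regardless of correctness.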

To identify the metacognitive utterances that co-occurred with higher-quality reasoning, we examined the results from our dual-coding process, both metacognitive utterance types and reasoning codes, together in the last phase of the second cycle. We relied on pattern coding as our selected second-cycle coding method to uncover categories in our dual-coded data (Saldaña, 2021). Pattern coding is a way to group the results from first-cycle coding into larger categories. Specifically, we gathered all reasoning units with higher-level codes (level 6 and level 7) and looked for patterns among the metacognitive utterances in these higher-quality reasoning units. We investigated both level 6 and level 7 reasoning units during pattern coding, because these reasoning units were both transactive and complete. The only difference was that level 6 reasoning units contained mixed ideas. This process was facilitated by complex code querying in MaxQDA 2020. In addition to first- and second-cycle coding, we also relied on our analytic memos about the data to inform our coding decisions (Saldaña, 2021).
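As a rough illustration of the query logic described above, the following Python sketch selects reasoning units coded at level 6 or level 7 and tallies the metacognitive utterance types found inside them. It does not reproduce MaxQDA functionality; the `ReasoningUnit` structure, the function names, and the three example units are hypothetical and contain no data from the study.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class ReasoningUnit:
    unit_id: str
    level: int  # reasoning quality code, 0 through 7
    utterances: list = field(default_factory=list)  # utterance type codes


def higher_quality_units(units, min_level=6):
    """Keep units that are both transactive and complete (levels 6 and 7)."""
    return [u for u in units if u.level >= min_level]


def utterance_counts(units):
    """Count how often each utterance type appears across the given units."""
    return Counter(code for u in units for code in u.utterances)


# Made-up units for illustration only.
units = [
    ReasoningUnit("u1", 0, ["statement to monitor understanding"]),
    ReasoningUnit("u2", 7, ["request for information", "evaluation of others"]),
    ReasoningUnit("u3", 6, ["question to monitor understanding", "evaluation of others"]),
]

selected = higher_quality_units(units)
counts = utterance_counts(selected)
print(counts.most_common())
```

A tally like this only surfaces candidate patterns; grouping them into categories still depends on reading the discourse and the analytic memos.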

RESULTS

We first present an overview of how the two groups spent their time during the breakout sessions to provide the reader with important context for the analysis that follows. Next, we demonstrate the results from our dual-coding scheme. To address our first research question (What metacognitive utterances do students use during small-group problem solving in an upper-division biology course?), we define the metacognitive utterances students used during small-group problem solving. Then we provide an analysis of reasoning that occurred during small-group problem solving, which is required to answer our second research question. Finally, by tying our two coding schemes together, we address our second research question (Which metacognitive utterances are associated with small groups sharing higher-quality reasoning in an upper-division biology classroom?) by presenting the metacognitive utterances that were associated with higher-quality reasoning in our data set.

How Students Spent Their Time during Group Work

Students spent the majority of the breakout sessions working directly on the problem set. Little to no time was spent off-task discussing ideas unrelated to the problem sets. Group A was rarely off-task and Group B was never off-task (Figure 2). Group B spent more of their time in silence. On average, Group B spent 40% of their working time in silence, whereas Group A spent 14% of their working time in silence. Despite spending a larger percentage of their time in silence, Group B completed the problem sets faster than Group A. When Group B finished early during the first breakout session, they spent the remainder of the group work time getting to know one another, because they had not met before. In contrast, the members of Group A already knew one another. Overall, both groups were on-task and focused on the problem sets during the breakout sessions but approached their work together differently.

What Metacognitive Utterances Do Students Use during Small-Group Problem Solving?

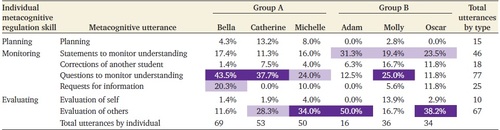

We identified several types of metacognitive utterances that upper-division biology students used during group work throughout the breakout sessions analyzed in this study. Metacognitive utterances are words, phrases, statements, or questions students made that were related to their awareness and control of thinking for the purposes of learning. The metacognitive utterances we identified included “planning,” “statements to monitor understanding,” “corrections of another student,” “questions to monitor understanding,” “requests for information,” “evaluations of self,” and “evaluations of others,” which can all be mapped to the individual metacognitive regulation skills of planning, monitoring, and evaluating (Table 1). While metacognitive utterances related to planning may play an important role in small-group problem solving, they did not directly impact reasoning in this data set and thus were not investigated further.

| Individual metacognitive regulation skill | Metacognitive utterance | Description | Example |
|---|---|---|---|
| Planning | Planning | When a student talked about how they wanted to go about completing the problem set in terms of time allocation or resource use | Okay, let’s see. How many questions are there total? One, two, three, four, five, and we need to be done by 3:00. So, 50 minutes I guess we could spend around like 10 minutes on each. |
| Monitoring | Statements to monitor understanding | When a student shared what they were thinking out loud to make sure they understood a concept without prompting | Oh. That makes sense. Because in the nucleus, we have Ran-GTP, so in the nucleus, it’s in this form. |
| Monitoring | Corrections of another student | When a student directly corrected a perceived incorrect statement from another group member | Student 1: Okay. So RanGAP is found in the nucleus-<br>Student 2: In the cytoplasm.<br>Student 1: Yeah, sorry. Cytoplasm. |
| Monitoring | Questions to monitor understanding | When a student asked their group member(s) a yes or no question to clarify an idea or approach | Do y’all understand? |
| Monitoring | Requests for information | When a student asked their group member(s) to share knowledge about an idea or approach beyond a yes or no question | Okay. But how does that affect the concentration gradients? |
| Evaluating | Evaluation of self | When a student assessed their own thinking, approach, or solution and whether or not it was effective or relevant | So, yeah, I shouldn’t make assumptions. I should stay within the realm of the question. |
| Evaluating | Evaluation of others | When a student assessed the thinking, approach, or solution shared by a group member and whether or not it was effective or relevant | Does it answer the question why? |

Metacognitive Utterances Related to Monitoring.

The individual metacognitive regulation skill of monitoring involves assessing one’s understanding of concepts while learning (Stanton et al., 2021). The metacognitive utterances related to monitoring that we identified in our data took the form of both statements (statements to monitor understanding and corrections of another student) and questions (questions to monitor understanding and requests for information) (Table 1). These metacognitive utterances are related to monitoring, because they involve assessing either one’s own or a group member’s understanding of concepts. For example, statements to monitor understanding included assessments of one’s own conceptual understanding through self-corrections or self-explanations, whereas corrections of another student involved assessments of a group member’s conceptual understanding.

Students also used questions to monitor understanding to assess their knowledge of concepts. These questions helped them clarify their own understanding or their group members’ understanding of a concept. These questions were closed in nature, meaning they could be answered with a simple one-word response like “yes,” “no,” or “correct.” Questions of this type included follow-up questions, questions to make sure group members were following along, or requests for confirmation on a shared idea (Table 1). In contrast to the closed nature of questions to monitor understanding, we also found evidence of students using more open-ended questions to request access to their group mates’ thinking (requests for information). Requesting access to a group mate’s thinking is related to metacognition, because one must first be aware of the thinking of others in order to act on it or regulate it. Students would make requests for information when they asked group mates to disclose their knowledge and information about a concept beyond a simple yes or no question. These requests for information only occurred when a student was asking for information they had not already supplied themselves and often centered around an interrogative word such as “who,” “what,” “when,” “where,” “why,” or “how” (Table 1).

Metacognitive Utterances Related to Evaluating.

The individual metacognitive regulation skill of evaluating involves appraising one’s plan or approach for learning (Stanton et al., 2021). Two of the metacognitive utterances we identified, evaluations of self and evaluations of others, are related to evaluating, because they involve appraising either one’s own or a group member’s thinking or approach and whether it is effective or relevant to the problem. Evaluations of others took the form of both statements and questions (Table 1). When in statement form, evaluations of others were matter-of-fact critiques that appraised the group’s current solution to a problem in the problem set, like Oscar’s statement “I don’t know how you can even say that there’s DNA present. If it wasn’t immunoprecipitated, it would’ve been washed away.” Audio does not reveal how Oscar’s group mates felt about this critique, nor does Oscar’s statement explicitly invite his group members to engage with his critique. In contrast, evaluations of others that were in question form requested some sort of engagement with the appraisal from the group. Evaluations of others posed as questions either invite pauses for clarification, reorient the group back to the problem set, or challenge an idea, all of which can more directly impact reasoning. For example, Michelle’s inquiry, “Does it answer the question why?,” reoriented the group back to the question posed in the problem set.

The frequency of the metacognitive utterance types we identified by individual participant is provided in Table 2. The most frequently used metacognitive utterance types were questions to monitor understanding, evaluation of others, statements to monitor understanding, and requests for information.


Reasoning in Small-Group Problem Solving

To answer our second research question regarding the metacognitive utterances associated with higher-quality reasoning, we needed to first analyze the quality of reasoning that occurred when participants solved problems in small groups. Guided by the evidence-based reasoning framework, we identified reasoning components in our data and rated them using the reasoning coding scheme described in the Methods (Supplemental Table 2). Overall, the reasoning displayed by both groups in the problem episodes analyzed (green boxes in Figure 2) was high in quality (Supplemental Table 2). Group A had nearly double the number of reasoning units compared with Group B (Supplemental Table 2), which aligns with the finding that Group A spent more time talking compared with Group B (Figure 2). In this section, we use examples from participant discourse to illustrate the reasoning coding scheme (Supplemental Table 2) by presenting an example of lower-quality reasoning and then an example of higher-quality reasoning. For each example, we share an excerpt of group discourse with a line-by-line analysis of the discourse dynamics and then offer an analysis of the reasoning units using the coding scheme (Russ et al., 2008).

An Example of Lower-Quality Reasoning.

In the segment of discourse presented in Figure 3, Group A is solving problem 2b in the transcription problem set. They are discussing why it is important to control fragment length in a chromatin immunoprecipitation (ChIP) protocol. The discourse in Figure 3 is one conversation composed of two reasoning units (purple boxes), both of which are examples of lower-quality reasoning. Lower-quality reasoning units are nontransactive, incomplete, and/or mixed, meaning students are not operating on one another’s reasoning, they do not provide backing to support linking their premises to a claim, and/or incorrect ideas are present.

FIGURE 3. An example of lower-quality reasoning. An excerpt of Group A’s discourse or conversation while solving problem 2 in the transcription problem set. The excerpt consists of two lower-quality reasoning units, outlined in purple boxes. Lower-quality reasoning units are nontransactive, incomplete, and/or mixed, meaning that students are not operating on one another’s reasoning, they do not provide backing to support linking their premises to a claim, and/or incorrect ideas are present. Each line corresponds to a speaker turn. Line-by-line analysis of the discourse dynamics is provided.

Reasoning Unit Analysis.

In Figure 3, there are two reasoning units (purple boxes). The first reasoning unit is nontransactive, because no group member directly acts on the reasoning Catherine shares in line 1. On the surface, it may appear as though Michelle and Bella are providing Catherine with confirmation on her reasoning in lines 2 and 3; however, they are not elaborating on the content of Catherine’s reasoning. Therefore, this reasoning unit is considered nontransactive. In terms of structure, Catherine shares a claim about the regulatory proteins breaking or splitting in half. She also shares two premises about 1) the fragments being too small and 2) the goal of ChIP as figuring out “where the proteins bind to DNA.” Given that Catherine’s reasoning lacks backing in the form of data, evidence, or a rule, her reasoning in this first unit is incomplete. Additionally, Catherine’s ideas about the proteins breaking or splitting in half are incorrect. Thus, this first reasoning unit is also mixed. Taken together, the first reasoning unit in this excerpt of discourse was coded as the lowest-quality reasoning (level 0: nontransactive, incomplete, mixed reasoning).

The next reasoning unit in Figure 3 begins with Bella asking Catherine to repeat what she said previously and ends with Bella’s decision about what to write down for this problem and to move on to the next problem in the set. At the start of this unit, Bella is interested in what Catherine said earlier and asks Catherine to repeat herself. Catherine reshares her incorrect claim, and Bella presents her own incorrect claim. Catherine extends her own reasoning, and Michelle agrees with it. Bella asserts her own incorrect claim again. Catherine agrees with Bella in words, yet continues to extend the incorrect claim that she and Michelle agree on. Bella does not write down Catherine and Michelle’s incorrect claim, but moves the group on to the next problem. Despite the fact that Bella initially elicits reasoning from Catherine, she does not seem to be acting on the reasoning shared by her peers. Michelle acts on Catherine’s reasoning once, and Catherine appears to act on Bella’s reasoning twice by confirming and agreeing with her ideas, albeit superficially. Because they are acting on one another’s reasoning, this second reasoning unit is considered transactive. Given the presence of incorrect ideas that go uncorrected by peers and that Group A is solely sharing premises and claims in this section, this unit is considered incomplete and mixed (level 4: transactive, incomplete, mixed reasoning). This is an improvement from Catherine sharing her siloed reasoning in the first unit, but there were missed opportunities to constructively reason as a group (Figure 3).

An Example of Higher-Quality Reasoning.

In the segment of discourse presented in Figure 4, Group B is solving problem 4a in the nuclear import problem set. They are considering fluorescence resonance energy transfer (FRET) data from a published figure and are tasked with explaining the results in one of the figure’s panels. The discourse in Figure 4 is an example of higher-quality reasoning. Higher-quality reasoning units are transactive, complete, and correct, meaning students are operating on one another’s reasoning, they provide backing to link their premises to a claim, and no incorrect ideas are present, or if they are, they are ultimately corrected.

FIGURE 4. An example of higher-quality reasoning. An excerpt of Group B’s discourse or conversation while solving problem 4a in the nuclear import problem set. The excerpt consists of two higher-quality reasoning units, outlined in purple boxes. Higher-quality reasoning units are transactive, complete, and correct, meaning that students are operating on one another’s reasoning, they provide backing to support linking their premises to a claim, and no incorrect ideas are present, or if they are, they are ultimately corrected. Each line corresponds to a speaker turn. Line-by-line analysis of the discourse dynamics is provided.

Reasoning Unit Analysis.

In the first reasoning unit shown in Figure 4, Oscar shares his idea that something in the figure only makes sense if Ran-GTP is bound. Molly verbalizes that she was thinking the same thing as Oscar and explains what should be occurring if Ran-GTP is not bound in terms of fluorescence output for FRET, and she ties that to what the observed ratio should then be. Adam questions Molly’s reasoning, pondering if both the yellow fluorescent protein (YFP) and cyan fluorescent protein (CFP) molecules should fluoresce when Ran-GTP is not bound. This question triggers Molly to share more of her reasoning, including an accurate description of how FRET works. Oscar then clarifies her idea by stating that you would get more YFP, not necessarily only YFP emission when FRET works. Molly agrees with Oscar, then Oscar ties their co-constructed prediction to the schematic representation of FRET provided in the problem. Molly then connects their reasoning to the observation that the cytoplasm is green in color, which corresponds to a ratio value greater than one. Group B’s reasoning in this unit is transactive in nature, because they are exchanging reasoning. They are displaying complete reasoning by 1) sharing what they know about Ran-GTP concentrations from the prompt (premises) and 2) providing backing for their claims in the form of color observations (data) and ratio relationships (evidence). Their reasoning is also correct (level 7: transactive, complete, correct reasoning).

In the second reasoning unit shown in Figure 4, Group B follows a similar structure for their reasoning displayed in the first reasoning unit, but this time for what is happening in the nucleus. Molly shares her conclusion about YFP and CFP intensity in the nucleus. Oscar elaborates on her conclusion by adding in his prior knowledge about the location and concentration of Ran-GTP in the nucleus, and Adam brings in the observation that the nucleus should then be very dark in color. Group B’s reasoning is transactive in nature, because they are acting on and building upon one another’s ideas to co-construct shared reasoning. As Group B discusses this problem, they consistently provide backing for their claims in the form of data (color observations) or evidence (ratio relationships) and premises, including what they know about Ran-GTP concentrations and relevant pieces of information shared in the problem prompt. For this reason, their reasoning is complete. Additionally, their reasoning is also correct (level 7: transactive, complete, correct reasoning).

Both reasoning units in Figure 4 were coded as transactive, complete, and correct, the combination that represents the highest-quality reasoning code (level 7: transactive, complete, correct reasoning). As is evident in the example discourse, students in our study often do not start with the data in their discussion of this problem. Rather, students start by sharing their conclusions and what they know about the problem before drilling down to the data that support their conclusions. In both reasoning units within this example of discourse, Group B takes this conclusion-first approach to answering problem 4a.

Metacognitive Utterances Associated with Higher-Quality Reasoning

To address our second research question (Which metacognitive utterances are associated with small groups sharing higher-quality reasoning in an upper-division biology classroom?), we investigated the overlap and interplay between our two coding schemes. We were particularly interested in the metacognitive utterances situated within higher-quality reasoning units (level 7: transactive, complete, correct reasoning). Four categories emerged from this analysis. The metacognitive utterances that were associated with higher-quality reasoning units included 1) evaluative questioning, 2) requesting and receiving evaluations, 3) requesting and receiving explanations, or 4) elaborating on another’s reasoning (Table 3). Some higher-quality reasoning units contained more than one of these metacognitive utterance categories (Supplemental Figure 2). In the following subsections, we highlight illustrative examples of each metacognitive utterance category in transactive, complete, and correct (level 7) reasoning units.

| Individual metacognitive regulation skill | Category | Description | Types of metacognitive utterances involved | Example |
|---|---|---|---|---|
| Evaluating | Evaluative questioning | When students would question whether or not the solution answered the problem or when students would challenge one peer’s reasoning with alternative reasoning | | Figure 5; Supplemental Figure 3 |
| Evaluating | Requesting and receiving evaluations | When a closed-ended question was met with an evaluation or a correction | | Figure 6; Supplemental Figure 2 |
| Monitoring | Requesting and receiving explanations | When an open-ended question was met with an explanation beyond a single-word answer | | Figure 7; Supplemental Figure 2 |
| Monitoring | Elaborating on another’s reasoning | When students would explain or build on a group member’s reasoning beyond a single-word answer without prompting | | Figure 8 |

Evaluative Questioning.

In this study, a key distinguishing feature of higher-quality reasoning was evaluative questioning. We define evaluative questioning as an appraisal of an approach or thinking formed as a question. For example, Michelle’s question, “Does it answer the question why?,” is considered evaluative questioning, because it is an appraisal of the group’s solution to a problem and is posed as a question to her group members. In contrast, Bella’s question, “Can you explain that?,” seeks an explanation from her group mate Catherine but is not an evaluation of Catherine’s answer or approach. In essence, evaluative questioning occurred in our data set when metacognitive utterances that were questions included within them an evaluation, or when a question to monitor understanding or a request for information overlapped with an evaluation of others (Table 3). Evaluative questioning was found only in level 6 and level 7 reasoning units.

Evaluative questioning took two forms. First, evaluative questioning occurred when students would question whether or not the constructed reasoning answered the question asked in the problem set. An example of this type of evaluative questioning can be seen in Supplemental Figure 3, when Oscar asked, “But does that have to do with the gradient?,” in line 3. Another example of this type of evaluative question came from Michelle when she asked, “Does it answer the question why?” (Table 1). Her group was trying to answer a problem that asked why Ran-GDP is concentrated in the cytoplasm. Before Michelle’s question, Bella and Catherine came up with a solution consisting of the correct rule that Ran-GTP has a high affinity for importin and exportin. This solution, while composed of correct backing, did not completely address the problem asked or link the correct backing to a claim. With her evaluative question, Michelle raises the concern that their solution might not fully answer the problem. Her question got Catherine to reflect and admit that their solution did not answer why Ran-GDP is concentrated in the cytoplasm. This led the group to discuss the role of regulatory proteins (e.g., GAPs and GEFs). Ultimately, the group established consensus around the correct idea that Ran-GDP is concentrated in the cytoplasm because RanGAP, which activates the GTPase activity of Ran-GTP, is found in the cytoplasm. Michelle’s evaluative question redirected and refocused her group to the problem that was posed.

The second form of evaluative questioning occurred when students would challenge a part of one peer’s reasoning with alternative reasoning. An example of this type of evaluative questioning can be seen in Figure 5. In this example, Oscar provided his reasoning about how the Ran-GTP binding domain of importin beta will behave as a control in a FRET experiment that his group was discussing, which led Adam to ask an evaluative question in line 8 (Figure 5). Adam’s evaluative question focused on Oscar’s premise that half of Ran-GTP will bind to importin beta by suggesting an alternative. Adam asked, “Wouldn’t you expect almost all of it to bind, because it looks like the same as this one?” In essence, Adam’s evaluative question challenges Oscar’s reasoning with alternative reasoning (Figure 5). An additional example of this type of evaluative question came from Adam when he asked, “Wait, why would you not get both? Don’t both of these fluoresce if it’s not bound?” (Figure 4, line 3). Before this question, Molly shared an explanation. Adam then asked for clarification and challenged her explanation by offering an alternative interpretation of what might be happening in the experimental figure. Adam’s evaluative question pushed Molly to explain her reasoning in greater detail for the group.

FIGURE 5. Evaluative questioning in student discourse that challenges reasoning. A higher-quality (level 7) reasoning unit from Group B, outlined in purple. Adam’s evaluative question is in bolded font. Parts of the discourse and analysis shown in gray text are provided as context for the reader to emphasize what occurs after the evaluative question (black text).

Requesting and Receiving Evaluations.

Another distinguishing feature of higher-quality reasoning in this study was requesting and receiving evaluations. Requesting and receiving evaluations occurred when a closed-ended question to monitor understanding was followed by an evaluation of others or a correction of another student (Table 3). An example of requesting and receiving an evaluation is seen when Adam asked for confirmation on an idea and was corrected by Oscar in lines 7 and 8 of Figure 6. Adam shared his reasoning posed as a question: “So, wouldn’t it be like the GDP grabs it in here and then sends it through here and then it’s converted to GTP in here?” By doing so he sought confirmation on his idea from his group members. His question to monitor his own understanding opened the floor for feedback from his group members and gave Oscar the opportunity to correct Adam’s thinking. This resulted in Adam accepting Oscar’s correction.

FIGURE 6. Requesting and receiving evaluations in student discourse. A higher-quality (level 7) reasoning unit from Group B, outlined in purple. Adam’s request is in bolded font in line 7 and Oscar’s evaluation is in bold in line 8. Parts of the discourse and analysis shown in gray text are provided as context for the reader.

In higher-quality reasoning units, not every question to monitor understanding was met with an evaluation or a correction. In other words, asking a question to monitor understanding did not always guarantee a response such as that shown in Figure 6. Some questions to monitor understanding that sought an evaluation or confirmation were not addressed verbally by the group. We acknowledge the possibility of students receiving simple nonverbal answers (like the shake or nod of a head) from group members that cannot be detected via audio recordings. Alternatively, this may suggest that, when students asked for feedback in this way, they were sometimes ignored. We speculate that some questions to monitor understanding went unmet because the group might have thought the individual was talking to themselves. Additionally, questions to monitor understanding were also found in lower-quality reasoning units. However, in lower-quality reasoning units, questions to monitor understanding were met with simple one-word confirmations or with another question rather than more elaborate evaluations or corrections. How the group responds to requests for evaluation seems to be important.

Requesting and Receiving Explanations.

Another distinguishing feature of higher-quality reasoning in this study was requesting and receiving explanations. Similar to requesting and receiving an evaluation, a request is made by one student and then met by one or more members of the group, but the nature of the request is slightly different. Requests that sought confirmation resulted in evaluations, whereas requests that were open-ended questions resulted in explanations. The nature of the request dictated the response from the group.

Requesting and receiving explanations occurred when an open-ended request for information was met with an explanation composed of more than a single-word answer (Table 3). For example, consider the reasoning unit in Figure 7 that begins with a request for information from Bella, “Can you explain that? I’m very confused” (Figure 7, line 1). Bella directly asked for an explanation from her group members and received one. Interestingly, every request for information was met with a response in our study. No request for information went unmet. This suggests that, when students in our study asked for help this way, they were never ignored. While requesting and receiving explanations were more common in higher-quality reasoning units, requesting and receiving explanations were occasionally found in lower-quality reasoning units. However, in lower-quality reasoning units, requests for information were met with explanations that often included incorrect or mixed ideas that were never corrected. We underscore that how these requests are met appears to be important.
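The distinction between the two request-and-response categories can be expressed as a small decision rule. The Python sketch below is an illustration under stated assumptions rather than the procedure used in this study: the `Turn` structure and `classify_exchange` function are hypothetical, a response is assumed to be the turn that answers the request, and "more than a single-word answer" is approximated by a simple word count. The request text is Bella's question from Figure 7; the response texts are placeholders, not participant quotes.

```python
from typing import NamedTuple, Optional


class Turn(NamedTuple):
    speaker: str
    code: str  # metacognitive utterance type assigned during coding
    text: str


# Response codes that count as an evaluation of the requester's idea.
EVALUATIVE_CODES = {"evaluation of others", "correction of another student"}


def classify_exchange(request: Turn, response: Turn) -> Optional[str]:
    """Label a request and the turn that answers it, or return None."""
    if request.speaker == response.speaker:
        return None  # a student answering themselves is not an exchange
    if (request.code == "question to monitor understanding"
            and response.code in EVALUATIVE_CODES):
        return "requesting and receiving evaluations"
    if (request.code == "request for information"
            and len(response.text.split()) > 1):
        return "requesting and receiving explanations"
    return None


open_request = Turn("Bella", "request for information",
                    "Can you explain that? I'm very confused")
explanation = Turn("Catherine", "statement to monitor understanding",
                   "placeholder for a multi-word explanation")
print(classify_exchange(open_request, explanation))
```

A rule like this captures only whether a request was met; whether the response contained correct ideas still has to be judged from the reasoning codes.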

FIGURE 7. Requesting and receiving explanations in student discourse. A higher-quality (level 7) reasoning unit from Group A, outlined in purple. Bella’s request is in bolded font in line 1, and Catherine’s explanation is in bold in line 2. Parts of the discourse and analysis shown in gray text are provided as context for the reader.

Elaborating on Another’s Reasoning.

Elaborating on another student’s reasoning was another category for metacognitive utterances associated with higher-quality reasoning units. Elaborating on another’s reasoning occurred when students would self-explain a group member’s reasoning or elaborate on a group member’s reasoning beyond a simple “yeah” or “okay” (Table 3). These self-explanations and elaborations were unprompted statements to monitor understanding. To illustrate this category of elaborating on another’s reasoning, Figure 8 shows an excerpt of discourse between Michelle and Bella as they formed a conclusion about a data figure on the occupancy of histone acetylation for a region of a yeast chromosome. In the first part of this excerpt (lines 1–13), Michelle and Bella co-constructed their prior knowledge about the role of acetylation and methylation of nucleosomes in regard to transcription. Bella then restated the question in the problem set (“Okay, so what can you conclude?”), and Michelle responded with her reasoning by providing evidence about a relationship between one gene in the figure and the level of acetylation (“At [gene 1], we’ve got a lot of acetylation”) and a claim (“so that means that that’s a gene that’s being regularly transcribed”). Bella then elaborated on Michelle’s reasoning by providing data (“Oh, yeah, because at [gene 1] is when it shoots up”) and clarifying the evidence Michelle stated by bringing in the idea that the increase in acetylation is occurring at the start of the gene (“So, at the advent of [gene 1], we see dramatic increase in acetylation”). Michelle then provided unprompted confirmation of Bella’s idea by defining the start of the gene as the promoter region based on what she remembers from class (“Yeah, because she said the little arrow thing means promoter, so we’ve got a promoter right there. So, right there, it’s getting going”).

FIGURE 8. Elaborating on another’s reasoning in student discourse. A higher-quality (level 7) reasoning unit from Group A, outlined in purple. Bella’s and Michelle’s elaborations are in bolded font in lines 16 and 17. Parts of the discourse and analysis shown in gray text are provided as context for the reader.

As seen in Figure 8, some unprompted elaboration found in the higher-quality reasoning units was composed of “yes, and/because/so” statements (lines 16 and 17). However, “yes, and/because/so” statements were not always indicative of higher-quality reasoning. These elaborative statements to monitor understanding, while more common in higher-quality reasoning units, were also found in lower-quality reasoning units. In some instances of lower-quality reasoning, these elaborative cues masked disagreement. Take, for instance, Group A’s use of elaborative cues in Figure 3 (lines 6 and 9). Catherine’s use of “yeah, and” statements may have been perceived as agreement by her group members even when, based on the content of the conversation, it was clear to the research team that the group was not in complete agreement about their reasoning (Figure 3). The appearance of agreement and elaboration could be misleading for students working in small groups in real time.

In summary, metacognitive utterances that 1) stimulated reflection about the solution or presented alternative reasoning, 2) provided evaluations when requested, 3) provided explanations when requested, or 4) elaborated on another’s reasoning in an unprompted manner emerged as critical aspects of higher-quality reasoning in the data analyzed for this study.

DISCUSSION

Analysis of discourse during small-group problem solving in an upper-division biology course revealed seven types of metacognitive utterances (Table 1) and four categories of metacognitive utterances that were associated with higher-quality reasoning in our study (Table 3). These findings have not been described in this context before and begin to define social metacognition that occurs in the life sciences. We situate our findings from this unique context among broader findings on social metacognition, outline implications for life science instructors based on our data, and suggest future directions for research on social metacognition in the life sciences.

Social Metacognition and Reasoning in the Life Sciences

Our findings build on prior research on social metacognition from mathematics, physics, and the learning sciences and contribute the first exploration of social metacognition in the life sciences. Prior work conceptualized metacognitive utterances during small-group problem solving in secondary mathematics courses broadly as “new ideas,” or occurrences when new information was recognized or alternative approaches were shared, and “assessments,” or occurrences when the execution, appropriateness, or accuracy of a strategy, solution, or knowledge was appraised (Goos et al., 2002). Other researchers have used the individual metacognitive regulation skills of planning, monitoring, and evaluating to categorize metacognitive utterances from small group work during content analysis (Kim and Lim, 2018). Our rich descriptions of seven types of metacognitive utterances in an upper-division biology course move the field forward by 1) exploring social metacognition in a new context and 2) conceptualizing metacognitive utterances in social settings beyond new ideas and assessments (Goos et al., 2002; Van De Bogart et al., 2017) and the three broad individual metacognitive regulation skills of planning, monitoring, and evaluating (Lippmann Kung and Linder, 2007; Siegel, 2012; De Backer et al., 2015; Kim and Lim, 2018). We propose alignment of the metacognitive utterance types and categories we found in our data set to the individual metacognitive regulation skills of planning, monitoring, and evaluating in order to begin to bridge individual metacognitive theory to the social metacognition framework (Tables 1 and 3). “How are individual and social metacognition related?” remains an open question in the field. More research is needed to determine additional criteria that make metacognition social and not individual.

Seven types of metacognitive utterances emerged in our data (Table 1). For the metacognitive utterances that were questions, the nature of the question determined the type of response it received. For example, open-ended questions like requests for information elicited more elaborate explanations from group members. In contrast, a closed request for feedback, like a question to monitor understanding (“Is my idea right?”), often elicited single-word responses (“Yeah.”). Although this is the first study of social metacognition in the life sciences, this finding aligns with prior research on group work. In a study on student discourse in a life science course, open questions using the words “how” and “why” led to more conceptual explanations from peers (Repice et al., 2016). In another study, peer learning assistants’ use of open-ended prompting questions and reasoning requests during clicker discussions encouraged students to share their thinking and elicited student reasoning (Knight et al., 2015).

The four categories of metacognitive utterances that were associated with higher-quality reasoning in our study (Table 3) extend the ways that a student’s thinking can become the subject of discussion (Goos et al., 2002). Our categories of evaluative questioning and requesting and receiving an explanation build on what Goos et al. (2002) called a “partner’s challenge” or when one student (Student A) asks another student (Student B) to operate on the second student’s (Student B’s) thinking in order to clarify their meaning. Evaluative questioning extends this idea of a partner’s challenge to also include when students question whether or not their co-constructed reasoning or solution answered the question asked in the problem set. Both types of evaluative questioning that we identified in our study and requesting an explanation elicited further reasoning from group members. Our category of requesting and receiving evaluations also builds on what Goos et al. (2002) called an “invitation” or when one student (Student A) asks another student (Student B) to operate on the first student’s (Student A’s) thinking in order to receive feedback. Requesting and receiving evaluations extends this idea of invitation and suggests that invitations are particularly powerful when met. Our category of elaborating on another’s thinking is aligned to what Goos et al. (2002) called “spontaneously, partner-initiated other-monitoring,” in which one student (Student A) operates on another student’s (Student B’s) thinking in an unprompted manner in order to provide unrequested feedback. Unique to our investigation of higher-quality reasoning, we found that spontaneous other-monitoring often appeared as statements of agreement or elaboration, that is, “yes, and/because/so,” rather than corrections. In fact, we did not see evidence of unprompted corrections of another student in our analysis of higher-quality reasoning. Every correction in our data set was invited or requested by a group member (Figure 6).

In research on reasoning and argumentation, the moments when students disagree appear to be critically important (Kuhn, 1991). In fact, some reasoning and argumentation frameworks rank discussions with counterclaims, disagreements, and rebuttals as more sophisticated (Osborne et al., 2004). Disagreements were present in some but not all of our higher-quality reasoning units. A few disagreements appeared overtly as direct corrections of another student (“No, …”), but more often disagreements and counterclaims were present in our data set in the form of subtle evaluations of the group’s solution through evaluative questioning (see Adam’s and Oscar’s questions in Figures 4 and 5 and Supplemental Figure 3). The phrasing of critiques and counterclaims as questions might be a way to be polite, soften the blow, or save face in a group setting, because outright disagreement with one’s peers can be seen as socially undesirable. Group members might feel more open to discussion or comfortable with the possibility of having an incorrect idea when an alternative idea is presented as a question. On the other hand, phrasing critiques and counterclaims tentatively might cause some contributions to be overlooked if not stated directly or assertively (see the exchange in Figure 3), especially depending on the group dynamic (i.e., if there is a more dominating group member present).

Implications for Instructors

We found that metacognitive utterances that 1) stimulated reflection about the answer or presented alternative reasoning, 2) provided evaluations when requested, 3) provided explanations when requested, or 4) elaborated on another’s reasoning in an unprompted manner were associated with higher-quality reasoning. Students are likely to need structured guidance on how to be socially metacognitive (Chiu and Kuo, 2009; Stanton et al., 2021) and how to reason (Knight et al., 2013; Paine and Knight, 2020). Our rich, qualitative work begins to provide the foundational knowledge needed to develop this guidance.

Scripts or prompts for social metacognition could be provided to students working in small groups (Miller and Hadwin, 2015; Kim and Lim, 2018). Other researchers, particularly in the realm of computer supported collaborative learning, have identified and used scripting tools to structure and sequence collaborative online interactions like small-group problem solving (Miller and Hadwin, 2015; Kim and Lim, 2018). Scripting for social metacognition in a life science classroom could involve providing prompts for students to use during group work and then modeling when and why to use these prompts. Based on our data, we suggest several possible prompts in Table 4. Incorporating these prompts during small group work could encourage students to practice aspects of social metacognition that were associated with higher-quality reasoning in our study. The effectiveness of these prompts in promoting social metacognition and reasoning is unknown and will be tested by our lab in a future study.

| To encourage students to… | Have students consider… |
|---|---|
| respectfully challenge their group member’s thinking | asking their group, “Does this answer the question asked?” or “What alternative ideas do we have?” |
| invite their group members to evaluate their thinking | asking, “What about my idea does not make sense?” after they share an idea. |
| ask for explanations | asking their group, “Can you explain that to me?” |
| elaborate on one another’s ideas | using “I agree/disagree because…” or “That makes sense/doesn’t make sense because…” statements after their group members share an idea. |

Another way to possibly facilitate social metacognition during small-group problem solving is through the use and modification of group roles. Other researchers suggest that students’ natural role choices are not necessarily optimal and that consideration of group roles can improve group discussions (Paine and Knight, 2020). In our study, students took on a defined group role as recorder, presenter, or manager, which are common group roles for a POGIL-style classroom (Moog et al., 2006). To facilitate social metacognition, these group roles could be expanded and tested in the following ways. First, we do not suggest expanding the recorder role, because it was already the most demanding group role in our study. Second, the presenter role could be expanded to include the role of prompter. The role of the prompter would be to encourage the group’s use of scripting prompts throughout the group work (Table 4). The prompter could also be tasked with ensuring any questions asked by a member of the group are answered, because we found that requests and invitations were particularly powerful when met. Finally, the manager role could be expanded to include the role of moderator. The role of the moderator would be to encourage active listening.

A particularly interesting form of active listening to consider for the expanded role of moderator is apophatic listening (Dobson, 2014; Samuelsson and Ness, 2019). Apophatic listening is a process in which learners start by being quiet to give space for a speaker to share their ideas. Learners use this silence to temporarily suspend their expectations and reflect on the speaker’s ideas before actively participating in the conversation. After actively listening to the speaker, learners then ask follow-up questions and interpret what the speaker shared. The listener then shares their alternative understanding with the speaker, and the listener and speaker work together to collectively create a shared and mutual understanding (Samuelsson and Ness, 2019). Adding the role of moderator to encourage apophatic listening could ensure all group members are heard. In our study, apophatic listening may have been more present in Group B’s discourse because of the greater proportion of silence and turn-taking during group work compared with Group A’s overlapping talk (Figure 2).

Structured guidance on how to reason is also needed in college life science classrooms (Paine and Knight, 2020). Another study of upper-division biology students showed that instructional cueing for reasoning led to higher-quality reasoning during clicker question discussions (Knight et al., 2013). In alignment with these previous findings from a different population of upper-division biology students (Knight et al., 2013), the majority of the reasoning units analyzed in our work were higher in quality (Supplemental Table 2). A combination of factors, including the prior educational experiences of the studied population, the nature of the task itself (Zagallo et al., 2016), and the course expectations around sharing reasoning, may have been sufficient to elicit higher-quality reasoning in our study. Notably, students in our study were not explicitly taught to reason using the evidence-based reasoning framework (Brown et al., 2010), and the most common approach to reasoning in our data set was a conclusion-first or claim-first approach (Figure 4). It might be helpful to teach students to reason during small-group problem solving with a data-first approach (Supplemental Figure 1) so their claims flow logically from backing (data, evidence, rules).

Future Directions for Research