Osmosis and Diffusion Conceptual Assessment

Abstract

Mastery of the mechanisms of diffusion and osmosis is difficult for biology students to achieve. To monitor comprehension of these processes among students at a large public university, we developed and validated an 18-item Osmosis and Diffusion Conceptual Assessment (ODCA). This assessment includes two-tiered items, some adopted or modified from the previously published Diffusion and Osmosis Diagnostic Test (DODT) and some newly developed. The ODCA, a validated instrument that contains fewer items than the DODT and emphasizes different content areas within the realm of osmosis and diffusion, better aligns with our curriculum. Creation of the ODCA involved removal of six DODT item pairs, modification of another six DODT item pairs, and development of three new item pairs addressing basic osmosis and diffusion concepts. Responses to ODCA items testing the same concepts as the DODT were remarkably similar to responses to the DODT collected from students 15 yr earlier, suggesting that student mastery of the mechanisms of diffusion and osmosis remains elusive.

INTRODUCTION

Fifteen years ago, Odom and Barrow (1995; Odom, 1995) created the Diffusion and Osmosis Diagnostic Test (DODT; available in Odom [1995] and Odom and Barrow [1995]), designed to assess secondary and college biology students’ understanding of osmosis and diffusion. Odom and Barrow reported that performance on the DODT by college biology majors was consistently poor, and scores earned by college non–biology majors and high school students were even lower (Odom, 1995). Although understanding how the fundamental processes of diffusion and osmosis work is essential to comprehending a wide range of biological functions, the research of Odom and Barrow and others has demonstrated that student mastery of osmosis and diffusion is extremely difficult to achieve. Inadequate understanding of osmosis and diffusion has been documented among high school and college students in the United States (e.g., Marek, 1986; Westbrook and Marek, 1991; Marek et al., 1994; Zuckerman, 1998; Christianson and Fisher, 1999) and elsewhere (e.g., Friedler et al., 1987; She, 2004).

The DODT contains 12 two-tiered multiple-choice items. The first tier asks “What happens when …?” (“What?”) and requires students to analyze a situation and/or predict what will happen under specific conditions. The second tier asks the reason (“Why?”) for the first-tier response. Item distracters (incorrect responses) were drawn from 20 prevalent misconceptions held by students, as identified by the DODT authors (Odom, 1995; Odom and Barrow, 1995).

Twelve years later, Odom and Barrow (2007) examined responses and levels of certainty among 58 high school students who responded to the DODT after completing a week of instruction about osmosis and diffusion. Responses among subjects were remarkably similar to those obtained previously. Further, the authors assessed students’ confidence levels and found that students displayed high levels of confidence that their (incorrect) responses on the DODT were accurate. The extended time frame between studies (12 yr), the similarities in students’ responses across the years, and the students’ certainty about the correctness of their (incorrect) responses all illustrate the persistence and tenacity of students’ misconceptions (i.e., alternative ideas, naive ideas) regarding the processes of osmosis and diffusion.

In this report, we use the original term, “misconception,” although we recognize that there are other, perhaps more appropriate, ways to refer to the scientifically inaccurate understandings that students have developed about natural phenomena (e.g., Smith et al., 1993; Chi, 2005). The misconception-based distracters in the DODT are valuable. They can reveal to instructors the presence of prevalent misconceptions among students in a given class. A wise instructor can effectively use that information to help students move from maintaining the identified misconceptions to applying accurate scientific reasoning.

Our project's overarching goal was to develop several validated instruments that could be used for programmatic assessment to monitor learning at the college level, specifically at our university. With these instruments, we examined biology students’ understanding of five fundamental topics: 1) osmosis and diffusion, 2) natural selection, 3) cell division, 4) photosynthesis and respiration, and 5) nature of science.

This paper describes our efforts to assess students’ understanding of the first topic area, osmosis and diffusion, by creating a modified version of the DODT that better corresponds to the instructional emphases on our campus and fits within our programmatic assessment plan, in terms of both its overall length and the topics it includes (described in Methods). The resulting Osmosis and Diffusion Conceptual Assessment (ODCA) was developed, evaluated, refined, and validated through successive administrations to students enrolled in several lower- and upper-division biology courses at a large public university across multiple semesters.

In response to the growing interest in conceptual assessments in biology (e.g., D’Avanzo, 2008), we provide the completed ODCA (Supplemental Material) as another validated instrument that can be used to assess students’ comprehension (Chi and Roscoe, 2002) in order to improve both instructional effectiveness and curriculum design, as well as to aid programmatic assessment efforts.

METHODS

Development and Design

The ODCA items were developed and modified over a period of several years through 1) administration of developing items each term to a subset of students in multiple classes, 2) reviews by expert faculty, and 3) interviews with both students and faculty about the test items. Incremental revisions were made between each administration of the evolving ODCA and were evaluated after subsequent ODCA administrations. In developing the ODCA, we attempted to focus on examining students’ reasoning about the biological mechanisms of osmosis and diffusion, which involves relatively high-level cognitive skills.

To align with our programmatic learning goals, which emphasize different content areas within the realm of osmosis and diffusion than does the DODT, we began with the DODT and deleted some items, modified others, and added new ones (Table 1; see Supplemental Material for ODCA items). In addition, we wanted to reduce the length of the instrument to encourage more faculty, even those teaching large college classes, to use it to inform their teaching, and to encourage students to pay close attention, read all items carefully, and reflect on their answers. With our modifications, the validated ODCA is composed of nine paired items (18 questions), whereas the DODT has 12 paired items (24 questions). To use examples that are more relevant to the essential curriculum in our state, we retained items relating to Odom and Barrow's (1995) categories of “kinetic energy of matter” and “membranes” (DODT items 7 and 12 [ODCA items 9/10 and 1/2], respectively). To shorten the test, we reduced the number of items assessing “the process of diffusion,” “the particulate and random nature of matter,” and “the process of osmosis” by omitting DODT items 1a,b; 3a,b; and 10a,b. Because the concepts of “tonicity and concentrations” and “the influence of life forces on diffusion and osmosis” are not specifically addressed in our learning outcomes, we omitted the corresponding items (DODT items 4a,b and 9a,b, and items 11a,b, respectively) to keep the ODCA brief. New items were added to the ODCA regarding diffusion of particles and solute and solvent movement through a membrane (Tables 1 and 2). We also modified one item into a question about human cells rather than plant cells, so that it would be more appropriate for our human physiology courses, where students might not have strong preparation in plant biology and cell structure.

We modified the language of many of the response choices during iterations of face validation by expert faculty to: 1) reduce the length of some response choices, especially when the correct choice was the longest option; 2) increase the number of responses that would allow us to diagnose students’ conceptual understanding of diffusion and osmosis, rather than testing students’ ability to define terms; and 3) attempt to reduce the number of concepts being tested in any one response choice by shortening some responses. Lastly, we reordered response choices to ensure correct responses were more evenly distributed among all response letters (a–d). These changes produced a shorter, validated test that addressed the processes and scientific reasoning we want our graduates to apply accurately.

Table 1. Correspondence between DODT and ODCA item pairs

| Six DODT item pairs omitted from ODCA | Six DODT item pairs modified in ODCA (DODT no.) | Modified item pairs (ODCA no.) | Three new item pairs in ODCA |
|---|---|---|---|
| 1a,b | 2a,b | 3/4 | 11/12 |
| 3a,b | 5a,b | 5/6 | 13/14 |
| 4a,b | 6a,b | 15/16 | 17/18 |
| 9a,b | 7a,b | 9/10 | |
| 10a,b | 8a,b | 7/8 | |
| 11a,b | 12a,b | 1/2 | |

Table 2. Scientifically correct ideas examined in the ODCA and the item responses that represent them

| Seven scientifically correct ideas | Response(s) |
|---|---|
| Category A: Dissolving and solutions | |
| 1. When a soluble substance is placed in water undisturbed, it will dissolve in water spontaneously, assuming its concentration is below the saturation point of the solution. | 5b |
| Category B: Solute and solvent movement through a membrane | |
| 2. Cell membranes are semipermeable in both directions, allowing some substances to pass through but not others. | 1a, 2d, 12a |
| 3. When separated by a semipermeable membrane through which only water can pass, water particles will move from the higher concentration of water (low solute concentration) into the lower concentration of water (high solute concentration). This can raise the water level on the side to which the water migrates. | 7a, 8c, 14d, 17a, 18c |
| 4. Water moves toward regions where there is more solute; thus, if an animal cell such as a blood cell is placed in pure water, water will move into the cell, and the cell will swell and eventually burst. | 13b |
| Category C: Diffusion of particles | |
| 5. Solutes and solvents move from regions of higher to regions of lower concentration. | 3a, 5b, 6d, 11a |
| 6. Component particles of all phases of matter are moving; molecules continuously move due to Brownian motion; molecules become evenly distributed throughout their container and continue to move. | 4b, 5b, 15b, 16c |
| 7. The higher the temperature, the faster the rate of diffusion, all other things being equal, because the individual molecules are moving faster. | 9b, 10c |

From interviews, we realized that some students responded to questions based on their recognition of specific biological terms and their recall of definitions—a low-level and often short-lived cognitive skill that sometimes led them to select incorrect responses reflecting inappropriate scientific reasoning. Thus, as much as possible, we avoided using scientific terminology in the ODCA to avoid triggering recall responses and to encourage thinking about mechanisms. We also eliminated or modified responses with low student response rates to improve the attractiveness of all distracters. Ultimately, six DODT item pairs were omitted, another six DODT item pairs were modified, and three new item pairs were created as a result of the revision process (Table 1). The two-tiered ODCA items are numbered consecutively (instead of using 1a and 1b, as Odom and Barrow did).

In creating the ODCA, we did not aim to identify new misconceptions held by students. Rather, like the DODT, the ODCA assesses 1) students’ abilities to identify scientifically accurate interpretations of osmosis and diffusion events and 2) their attraction to well-known misconceptions regarding the processes of osmosis and diffusion.

Specification Tables

Specification tables were developed and modified along with the ODCA. These tables were used to track the scientifically correct ideas (Table 2) and misconceptions (Table 3) captured in the various item responses to allow comparison of students’ responses to similar ideas in different contexts. The tables are similar in construct to those used in the development of the Conceptual Inventory of Natural Selection (CINS; Anderson et al., 2002; Anderson, 2003) and to the lists of propositions and misconceptions identified in the development of the DODT (Odom, 1995; Odom and Barrow, 1995). ODCA concepts are organized into three categories (Tables 2 and 3): “Dissolving and solutions,” “Solute and solvent movement through a membrane,” and “Diffusion of particles,” and the item responses associated with those concepts are shown in the right columns of Tables 2 and 3. Table 2 lists the seven scientifically correct ideas examined in the ODCA and Table 3 summarizes the 20 misconceptions or other types of errors presented in the ODCA alternative responses.

Table 3. Misconceptions and other errors represented in ODCA alternative responses

| Twenty misconceptions and other errors | ODCA responses |
|---|---|
| Category A: Dissolving and solutions | |
| 1. Dissolved substances will eventually settle out of solution. | 5a, 6a, 6c, 16b |
| 2. Solute particles will not dissolve in water spontaneously. | 6b |
| 3. Conflation of solvent and solute concentration and their interactions with solutions. | 7c, 17b, 17c, 18b |
| Category B: Solute and solvent movement through a membrane | |
| 4. Semipermeability is unidirectional. | 2b |
| 5. Only beneficial materials pass through a cell membrane. | 2c |
| 6. All small materials can pass through a cell membrane. | 1b, 2a |
| 7. Water moves from high to low solute concentration. | 8a, 13a, 14a |
| 8. Solutes do not cross a semipermeable membrane. | 11c |
| 9. When solutes in water-based solutions cross a semipermeable membrane, they can alter the heights of the liquids on each side; movement of water particles across the membrane does not warrant consideration. | 7b, 11b, 12c |
| 10. In a system open to atmospheric pressure, water always equilibrates the levels on both sides of a membrane. | 8b, 12d, 18a |
| 11a. An animal cell can maintain itself in any environment. | 13c, 14b |
| 11b. An animal cell cannot survive outside the body. | 14c |
| 12. At a given temperature, solute and solvent particles move across a membrane at different speeds. | 12b |
| Category C: Diffusion of particles | |
| 13. Solvent particles will move from lower concentration of that solvent to higher concentration of that solvent. | 3b, 7b, 8d |
| 14. Coolness facilitates movement. | 9a, 10b |
| 15. Dye tends to break down in solution. | 10a |
| 16. As the temperature changes, individual particles (atoms, molecules, ions) expand or contract. | 10d |
| 17. Particles move through solutions or gases only until they are evenly distributed, then they stop. | 4c, 15a, 16a |
| 18. Particles move by repelling one another. | 4d |
| 19. Particles actively seek (want) isolation or more room. | 4a |
| 20. Particles in gases and liquids are moving; particles in solids are not. | 16d |

Initial Refinement and Validation

As noted previously, initial versions of the ODCA were administered to biology students for several semesters to improve instrument validity. During this period, incremental changes were made to increase clarity and effectiveness of items, and ensure that all responses were attractive to some students. Face validation was obtained when 56 biology instructors taking part in scoring the AP Biology Examination voluntarily completed the ODCA. Of those who responded, 33 taught biology at the high school level and 23 taught biology at the community college or university level (San Diego State University [SDSU] Institutional Review Board [IRB] Approval 113073). Each of the participants stated that they had taught osmosis and diffusion to students in the past year. In addition to taking the ODCA, these instructors were asked to provide feedback concerning the wording of the instrument items and questions, and this feedback was taken into consideration during development of the conceptual assessment. The instructors were also invited to describe the specific areas in which their students tend to experience the most difficulty when trying to comprehend diffusion and osmosis.

In addition, 16 undergraduate students (10 biology majors, four kinesiology majors, and two nursing majors) were invited to participate in semistructured interviews (Bernard, 1988). The interviews focused on students’ thinking about osmosis and diffusion and were conducted by two graduate students. Each interviewed student was asked to read ODCA items aloud and provide explanations for why he or she selected or rejected each response. Students were also encouraged to suggest an alternative response if they were not satisfied with the fixed choices offered. Additionally, the interviewer asked students to respond to follow-up probes designed to further explore their reasons for answering the way they did. Manipulatives, demonstrations, and/or drawings were occasionally used to elicit additional information regarding participants’ ideas about osmosis and diffusion.

To determine construct validity, we calculated the standard difficulty index (p: proportion of students who answered a test item correctly) and discrimination index (d: index that refers to how well an item differentiates between high and low scorers), and evaluated the instrument's reliability with Cronbach's alpha (Cronbach, 1951), using PASW Statistics 17, Release Version 17.0.2 (IBM SPSS Statistics [2009], Chicago, IL). Values from the ODCA were then compared with those reported for the DODT (Odom, 1995; Odom and Barrow, 1995).
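The two item statistics can be sketched in a few lines of Python. This is a minimal illustration, not the PASW/SPSS procedure used in the study: it assumes each response is scored 0 (incorrect) or 1 (correct), and it forms the high- and low-scoring groups from the top and bottom 27% of total scores, a common convention that the text does not specify. The function names and the toy data are hypothetical.

```python
from typing import List, Sequence


def difficulty_index(item_scores: Sequence[int]) -> float:
    """p: proportion of students who answered the item correctly (0/1 scores)."""
    return sum(item_scores) / len(item_scores)


def discrimination_index(scores: List[List[int]], item: int,
                         fraction: float = 0.27) -> float:
    """d: difficulty in the high-scoring group minus difficulty in the low-scoring group.

    `scores` is a students x items matrix of 0/1 values. Students are ranked by
    total score; the top and bottom `fraction` of students (27% by default, an
    assumed convention) form the high- and low-scoring groups.
    """
    ranked = sorted(scores, key=sum, reverse=True)
    n = max(1, round(fraction * len(ranked)))
    high = [row[item] for row in ranked[:n]]
    low = [row[item] for row in ranked[-n:]]
    return difficulty_index(high) - difficulty_index(low)


# Hypothetical scores for four students on two items.
scores = [[1, 1], [1, 1], [1, 0], [0, 0]]
print(difficulty_index([row[0] for row in scores]))        # 0.75
print(discrimination_index(scores, item=1, fraction=0.5))  # 1.0
```

A larger d means the item separates high and low scorers more sharply; an easy item that nearly everyone answers correctly will have a d close to zero regardless of its quality.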

ODCA Administration

Once ODCA design was finalized, student performance was assessed in four successive semesters during two academic years, 2007–2008 and 2008–2009 (Table 4; IRB Approval 278). This study compares the performance of three groups of university students: upper-division biology majors, lower-division biology majors, and lower-division allied health majors. The upper-division biology majors were students enrolled in four upper-division biology courses (“core” courses of the curriculum) taken by most biology majors. The lower-division allied health majors were non–biology majors enrolled in a lower-division microbiology course. These two groups were surveyed for four semesters. The lower-division biology majors consisted of students enrolled in a lower-division introductory biology course required for all biology majors; they were surveyed during the 2008–2009 year.

Table 4. Students invited to complete the ODCA and students tested (percentage of those invited), by semester. UD, upper-division biology majors; LD, lower-division biology majors; NM, nonmajors

| Semester and year | UD invited | UD tested | LD invited | LD tested | NM invited | NM tested |
|---|---|---|---|---|---|---|
| Fall 2007 | 68 | 40 (59%) | — | — | 50 | 33 (66%) |
| Spring 2008 | 64 | 39 (61%) | — | — | 43 | 26 (60%) |
| Fall 2008 | 66 | 44 (67%) | 102 | 45 (44%) | 46 | 26 (57%) |
| Spring 2009 | 80 | 52 (64%) | 101 | 62 (61%) | 50 | 42 (84%) |
| Total | 278 | 174 (63%) | 203 | 107 (53%) | 189 | 127 (67%) |

A random subset of students in each course was invited each semester to complete the ODCA, using the course management system, Blackboard Academic Suite. Students were asked to complete the ODCA during a 10-d window after course registration ended and enrollments were finalized. Students were encouraged to complete the ODCA as their contribution to the biology department's annual programmatic assessment effort; three participation points could be earned by completing a “survey.” We used the term “survey” to distinguish the ODCA and other conceptual assessments from the graded tests taken by students. Approximately 20% of the students enrolled in each of the courses were randomly invited to complete the ODCA each semester (Table 4). The remaining students received one of four other conceptual assessments on different topics examined for programmatic assessment. None of the courses directly addressed topics of osmosis and diffusion before the ODCA was administered, as these processes were taught explicitly in previous courses.

RESULTS

Variation among Courses

Of the 670 students invited to complete the ODCA over the four semesters, 408 (61%) responded and gave consent to use their data (Table 4). Within each level (upper-division biology majors, lower-division biology majors, and nonmajors), there was considerable within-class variation in student performance. Despite this variation, no significant differences were found in student performance across semesters within the upper-division courses, lower-division courses, or nonmajor courses (two-way analysis of variance [ANOVA], P > 0.05). Thus, for each level, results from all semesters were combined for subsequent analyses.

Difficulty and Discrimination Indices

Difficulty indices (p) of the items ranged from 0.27 to 0.98 (Table 5), with a mean of 0.65, providing a wide range of item difficulty, similar to values reported for the DODT (Odom and Barrow, 1995). The discrimination indices (d) ranged from 0.07 to 0.67, with a mean of 0.44. The discrimination index refers to how well an item differentiates between high and low scorers; it is a basic measure of the validity of an item. On ordinary tests, a low or negative discrimination index value generally indicates that the item does not measure what other items on the instrument are measuring. However, in the case of the paired items on this instrument, the first-tier “What happens?” item is often quite easy, and provides the necessary context for the more difficult second-tier “Why does this happen?” item. This can produce a low discrimination index for some of the first (odd-numbered) items, but a high discrimination index for the paired (even-numbered) items. For example, three odd-numbered ODCA “What?” items had discrimination indices of 0.07, 0.08, and 0.16—below the value of 0.20 that is typically considered as a minimum (Odom and Barrow, 1995), and the subsequent even-numbered “Why?” items had discrimination values over 0.20. Thus, on this two-tiered assessment, a correct answer on the first-tier item (content) did not predict performance on the second-tier item (reason). For purposes of this project, all ODCA test items were considered acceptable, since the item pair (and particularly the “Why?” response) is the element of interest.

Table 5. Distribution of ODCA item difficulty (p) and discrimination (d) indices (number of items in each range)

| Difficulty (No. of items) | Discrimination (No. of items) |
|---|---|
| 0.20–0.29 (1) | 0.00–0.09 (2) |
| 0.30–0.39 (1) | 0.10–0.19 (1) |
| 0.40–0.49 (3) | 0.20–0.29 (2) |
| 0.50–0.59 (2) | 0.30–0.39 (1) |
| 0.60–0.69 (5) | 0.40–0.49 (2) |
| 0.70–0.79 (1) | 0.50–0.59 (7) |
| 0.80–0.89 (2) | 0.60–0.69 (3) |
| 0.90–0.99 (3) | — |

For evaluating the difficulty of an item, it is helpful to consider the likelihood of guessing the correct answer. For a multiple-choice item with four possible responses, there is a 25% chance of guessing the correct response. A two-tier item with two selections for the first tier and four selections for the second tier provides a 12.5% chance of guessing the correct answer combination. In the ODCA, there are two or three response options in the first tier, and three or four in the second tier. This results in chances of guessing correct answers at 8.3% to 12.5%.
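The guessing arithmetic is the product of the two tiers' chance probabilities. The sketch below uses exact fractions; the option counts shown are illustrative combinations consistent with the 8.3% to 12.5% range stated above (8 to 12 possible answer combinations per pair), not a listing of the actual option count for each ODCA pair. The function name is hypothetical.

```python
from fractions import Fraction


def guess_probability(tier1_options: int, tier2_options: int) -> Fraction:
    """Chance of choosing the correct answer in both tiers by blind guessing."""
    return Fraction(1, tier1_options) * Fraction(1, tier2_options)


for tier1, tier2 in [(2, 4), (3, 3), (3, 4)]:
    p = guess_probability(tier1, tier2)
    print(f"{tier1} x {tier2} options: {p} = {float(p):.1%}")
# 2 x 4 options: 1/8 = 12.5%
# 3 x 3 options: 1/9 = 11.1%
# 3 x 4 options: 1/12 = 8.3%
```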

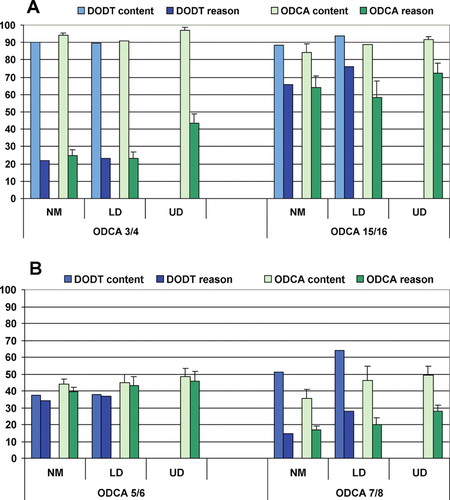

For the first tier of the ODCA, the percentage of students responding with correct answers ranged from means of 48.3% to 97.8% for upper-division biology major classes, 45.0% to 95.4% for lower-division biology major classes, and 35.6% to 97.3% for nonmajor classes (Table 6 and Figure 1). The mean percentage of combined content–reason responses on the ODCA that were correct ranged from 28.2% to 92.3% for upper-division biology major classes, 19.9% to 74.7% for lower-division biology majors, and 16.8% to 85.3% for nonmajors. Results from college students taking the DODT and ODCA were strikingly similar (Table 6), especially for the highest and lowest scoring-item combinations (Figure 1).

Figure 1. Percentages of college students who selected the correct content response (pale bars) and the correct content plus reason combination (darker bars) on (A) ODCA items 3 and 4 compared with DODT items 2a,b, and ODCA items 15 and 16 compared with DODT items 6a,b; and (B) ODCA items 5 and 6 compared with DODT items 5a,b, and ODCA items 7 and 8 compared with DODT items 8a,b. ODCA items are shown in green and DODT items in blue. NM, nonmajors; LD, lower-division biology majors; UD, upper-division biology majors. Values represent means + 1 SE. Sample sizes appear in Table 4.
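Because a pair counts as correct only when both tiers are correct, the combined content–reason percentage can never exceed the first-tier percentage. The following Python sketch shows this scoring rule for ODCA pair 3/4, using the correct combination named in the text (3a with 4b); the student responses are invented for illustration, and the function names are hypothetical.

```python
from typing import Dict, List, Tuple

Answers = Dict[int, str]  # item number -> selected response letter


def score_pair(answers: Answers, key: Answers, pair: Tuple[int, int]) -> Tuple[bool, bool]:
    """Return (first tier correct, content-reason combination correct)."""
    what, why = pair
    tier1 = answers.get(what) == key[what]
    combined = tier1 and answers.get(why) == key[why]
    return tier1, combined


def pair_percentages(students: List[Answers], key: Answers,
                     pair: Tuple[int, int]) -> Tuple[float, float]:
    """Percentage correct on the first tier and on the combined pair."""
    results = [score_pair(s, key, pair) for s in students]
    n = len(results)
    tier1_pct = 100 * sum(t for t, _ in results) / n
    combined_pct = 100 * sum(c for _, c in results) / n
    return tier1_pct, combined_pct


KEY = {3: "a", 4: "b"}  # 3a/4b is the correct combination for pair 3/4
students = [
    {3: "a", 4: "b"},  # correct content and correct reason
    {3: "a", 4: "a"},  # correct content, "crowded particles want ... more room"
    {3: "a", 4: "c"},  # correct content, "particles ... stop moving"
    {3: "b", 4: "b"},  # incorrect content, so the pair is scored incorrect
]
print(pair_percentages(students, KEY, (3, 4)))  # (75.0, 25.0)
```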

Table 6. Percentages of students selecting the correct first-tier response (Tier 1) and the correct content–reason combination (Comb) on ODCA item pairs and the corresponding DODT item pairs. NM, non–biology majors; LD, lower-division biology majors; UD, upper-division biology majors. Blank cells: no corresponding DODT item

| ODCA item pair (DODT no.) | NM DODT Tier 1 | NM DODT Comb | NM ODCA Tier 1 | NM ODCA Comb | LD DODT Tier 1 | LD DODT Comb | LD ODCA Tier 1 | LD ODCA Comb | UD ODCA Tier 1 | UD ODCA Comb |
|---|---|---|---|---|---|---|---|---|---|---|
| 1/2 (12) | 95.1 | 81.3 | 97.3 | 85.3 | 95.7 | 92.3 | 95.4 | 74.7 | 97.8 | 92.3 |
| 3/4 (2) | 90.2 | 22.0 | 94.2 | 24.8 | 89.7 | 23.1 | 90.7 | 23.0 | 97.3 | 43.6 |
| 5/6 (5) | 37.4 | 34.1 | 44.2 | 39.5 | 37.6 | 36.8 | 45.0 | 43.1 | 48.3 | 45.8 |
| 7/8 (8) | 51.2 | 14.6 | 35.6 | 16.8 | 64.1 | 28.2 | 46.3 | 19.9 | 49.3 | 28.2 |
| 9/10 (7) | 97.6 | 95.9 | 89.6 | 50.0 | 94.0 | 92.3 | 84.8 | 65.2 | 95.0 | 72.3 |
| 11/12 | | | 55.3 | 34.1 | | | 58.0 | 44.6 | 74.0 | 58.0 |
| 13/14 | | | 64.8 | 47.6 | | | 62.2 | 46.1 | 77.5 | 58.4 |
| 15/16 (6) | 88.6 | 65.9 | 84.4 | 64.1 | 94.0 | 76.1 | 88.8 | 58.3 | 91.7 | 72.3 |
| 17/18 | | | 68.1 | 58.6 | | | 63.1 | 52.8 | 63.1 | 53.7 |

Cronbach's alpha was at least 0.70 each semester (Fall 2007: 0.70; Spring 2008: 0.74; Fall 2008: 0.73; Spring 2009: 0.70), a level generally considered acceptable. Test completion time ranged from 5 to 20 min per student and was not correlated with performance (r² > 0.05; n = 71, 72, and 83 for three semesters, Fall 2007–Fall 2008).
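For readers who want to reproduce a reliability estimate outside a statistics package, Cronbach's alpha for k scored items can be computed from the item variances and the variance of total scores: alpha = k/(k − 1) × (1 − sum of item variances / total-score variance). This Python sketch is an illustration with invented data, not the authors' PASW/SPSS analysis; population variances are used throughout, which leaves alpha unchanged because the n versus n − 1 factor cancels in the ratio.

```python
from statistics import pvariance
from typing import List


def cronbach_alpha(scores: List[List[int]]) -> float:
    """Cronbach's alpha for a students x items score matrix (at least 2 items).

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores)).
    Raises ZeroDivisionError if every student has the same total score.
    """
    k = len(scores[0])
    item_vars = [pvariance([row[j] for row in scores]) for j in range(k)]
    total_var = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical 0/1 scores: four students, three items.
scores = [[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]
print(round(cronbach_alpha(scores), 2))  # 0.75
```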

Expert Performance

We found no difference between high school and college instructors’ performance on the ODCA; thus, all 56 instructors were grouped together for data analysis. As expected, these biology experts performed exceedingly well on the ODCA, earning a mean score of 16.5 out of the total possible 18 (SE = 0.2). These data provide evidence that the test items accurately represent the processes of osmosis and diffusion.

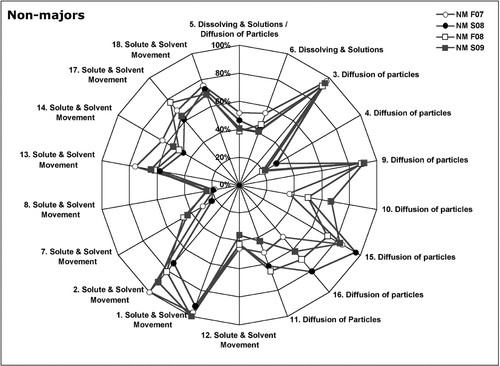

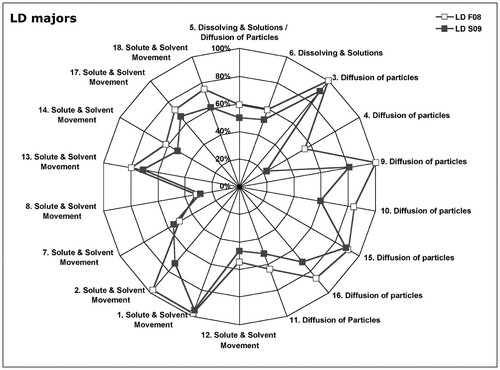

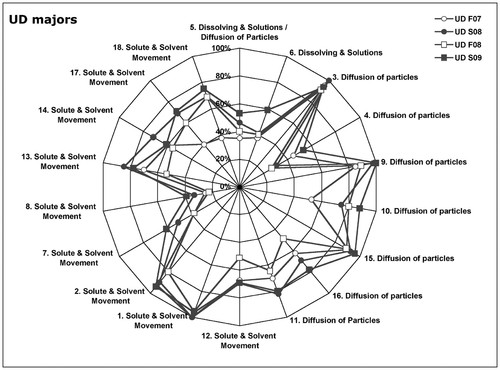

Student Performance

The most striking result is the overall consistency of students’ response patterns across levels, semesters, and years (Figure 2). Students generally performed better on first-tier “What?” items (odd numbers) than on second-tier reason “Why?” items (even numbers) on both the ODCA and the DODT (Figures 1 and 2 and Table 6), indicating that they often can predict the outcome but have less understanding about the underlying mechanisms.

Figure 2A. Radar graph shows the percentage of nonmajors (NM), by course and by semester, who selected the correct response for each item on the ODCA. Items are grouped into the three conceptual categories described in the text. Note the similarity of performance across semesters. S, spring semester; F, fall semester. Sample sizes appear in Table 4.

Figure 2B. Radar graph shows the percentage of lower-division biology majors (LD), by course and by semester, who selected the correct response for each item on the ODCA. Items are grouped into the three conceptual categories described in the text. Note the similarity of performance across semesters. S, spring semester; F, fall semester. Sample sizes appear in Table 4.

Figure 2C. Radar graph shows the percentage of upper-division biology majors (UD), by course and by semester, who selected the correct response for each item on the ODCA. Items are grouped into the three conceptual categories described in the text. Note the similarity of performance across semesters. S, spring semester; F, fall semester. Sample sizes appear in Table 4.

Of the nine ODCA question pairs, 3/4, 5/6, and 7/8 yielded the lowest combined content–reason values (Figure 1 and Table 6), and thus reflected the most prevalent misconceptions among our students. Excerpts from these three item pairs are shown in Table 7, along with frequencies of student responses and variance among semesters in parentheses.

More than 90% of all students correctly indicated that particles (dissolved substances) would move from areas of high to low concentration (response 3a; Figure 1A and Table 6). However, when asked to provide a reason for their answer, many faltered (Table 6). The correct combined content–reason ODCA responses, 3a and 4b, were selected by < 25% of nonbiology majors and lower-division biology students (Table 6). More than one-fourth (27–44%) of students at each course level chose response 4a, “crowded particles want to move to an area with more room” (Table 3, misconception #19; Table 7). That is, a substantial proportion of our undergraduates attributed anthropomorphic qualities to substances in place of scientifically accurate alternatives. Interviews corroborated these tendencies. When an interviewee was asked why she selected 4a (“… crowded particles want to move …”) rather than 4b (“the random motion of particles suspended in a fluid results in their uniform distribution”), she stated that 4a seemed more understandable and consistent with her conceptualization of the diffusion process. “I just think of diffusion as…there's bunch of particles here and there aren't any there, so it's crowded. So [the particles] just move to the other side because…they want to even out” (italicized word indicates emphasis by the speaker). When asked why she didn't choose answer 4b, the student replied that while she knew that the particles moved randomly, she didn't quite understand how such motion would result in uniform distribution.

Table 7. Frequencies of selected misconception responses on ODCA item pairs 3/4, 5/6, and 7/8, with variance among semesters in parentheses. NM, nonmajors; LD, lower-division biology majors; UD, upper-division biology majors

| Prevalent misconceptions | NM | LD | UD |
|---|---|---|---|
| Category C: Diffusion of particles | | | |
| 3a: “During the process of diffusion, particles will generally move from an area of (a) high to low particle concentration …” | | | |
| 4a. because crowded particles want to move to an area with more room. | 0.31 (0.05) | 0.44 (0.01) | 0.27 (0.06) |
| 4c. because the particles tend to keep moving until they are uniformly distributed and then they stop moving. | 0.37 (0.02) | 0.24 (0.02) | 0.23 (0.02) |
| Category A: Dissolving and solutions | | | |
| 5a: “If a small amount of table salt (1 tsp) is added to a large container of water and allowed to set for several days without stirring … the salt molecules will (a) be more concentrated on the bottom of the water …” | | | |
| 6a. because salt is heavier than water and will sink. | 0.25 (0.04) | 0.24 (0.07) | 0.28 (0.02) |
| 6c. because there will be more time for settling. | 0.10 (0.02) | 0.24 (0.07) | 0.28 (0.02) |
| Category B: Solute and solvent movement through a membrane | | | |
| 7a: “In Figure 1, … the water level in Side 1 will be higher than in Side 2 …” | | | |
| 8a. because water will move from high to low solute concentration. | 0.11 (0.04) | 0.19 (0.02) | 0.12 (0.02) |
| 7b: “In Figure 1, … the water level in Side 1 will be lower than in Side 2 …” | | | |
| 8a. because water will move from high to low solute concentration. | 0.19 (0.04) | 0.12 (0.02) | 0.09 (0.02) |
| 7c: “In Figure 1, … the water level in Side 1 will be the same height as in Side 2 …” | | | |
| 8b. because water flows freely and maintains equal levels on both sides. | 0.29 (0.07) | 0.27 (0.06) | 0.26 (0.04) |

Surveyed biology instructors similarly identified anthropomorphism as a problem for students. For example, a college instructor with 10 years of experience noted that students think molecules “want to diffuse.” Another instructor suggested that it is difficult for students to eliminate the ideas of “‘vitalism’ and ‘volition’ on the part of the particles.”

About one-fourth of our students indicated that particles tend to keep moving until they are uniformly distributed and then stop moving (response 4c). Some students’ follow-up statements during interviews suggested that they conceived of particles moving only if there was a need to do so (e.g., when a concentration gradient is present). When there was no such need, they thought that particles would stop moving and remain stationary. Surveyed biology instructors offered similar observations. For example, a high school instructor with 20 years of experience stated: “[Students have trouble with the idea] that molecules have constant motion which can vary with conditions (temperature, pressure, etc.).” Similarly, a college instructor with 15 years of experience noted that “[Students exhibit] no prior knowledge of kinetic energy/Brownian movement.”
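The idea that particles stop once they are evenly distributed can be checked against a standard result of classical statistical mechanics: the average translational kinetic energy of a molecule, (1/2)m⟨v²⟩ = (3/2)kT, depends only on temperature, in a liquid as well as in a gas. The short sketch below (the function name `rms_speed` is ours, chosen for illustration) estimates the root-mean-square speed of a water molecule at a few temperatures. In a liquid, constant collisions keep reversing a molecule's direction, so these speeds do not translate into long straight-line travel, but they show that thermal motion never ceases and varies with temperature, as the instructor quoted above described.

```python
import math

BOLTZMANN_K = 1.380649e-23            # Boltzmann constant, J/K
ATOMIC_MASS_UNIT = 1.66053906660e-27  # kg per unified atomic mass unit
WATER_MASS_U = 18.015                 # molar mass of H2O expressed in u

def rms_speed(mass_kg: float, temperature_k: float) -> float:
    """Root-mean-square speed from equipartition: (1/2) m <v^2> = (3/2) k T."""
    return math.sqrt(3 * BOLTZMANN_K * temperature_k / mass_kg)

m_water = WATER_MASS_U * ATOMIC_MASS_UNIT
for temp_k in (278.15, 298.15, 310.15):  # 5 °C, 25 °C, 37 °C
    print(f"{temp_k - 273.15:4.0f} °C: v_rms = {rms_speed(m_water, temp_k):.0f} m/s")
```

At 25 °C the estimate is roughly 640 m/s, and it rises with temperature; nothing in the calculation depends on whether a concentration gradient is present.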

Regarding item pair 5/6 (Figure 1B and Table 7), which illustrates misconception 1 (Table 3), more than 50% of all students (including upper-division biology majors) in most semesters indicated that if a small amount of salt is added to a large volume of water and allowed to sit still for several days, the salt molecules would become concentrated at the bottom of the container (Figure 2 and Table 6). Of those students, 24–28% chose reason 6a, asserting that salt would sink because it is heavier than water, and 10–28% chose reason 6c, indicating that salt would sink because there will be more time for settling (Table 7).

In interviews with test takers, students who selected content response 5a combined with reason response 6a or 6c were confident that, given enough time, solute particles would become concentrated at the bottom. For example, when asked why she selected her response, one senior biology major stated, “I do think that salt is heavier…When you put it in a container, it goes to the bottom.” Interviewees stated that this would be the case both for solid solutes (e.g., salt and sugar) and for liquid solutes (e.g., food coloring or dye). Thus, in the minds of many undergraduate students, even after considerable training in biology, gravity appears to be a major factor affecting dissolved substances, even when only a small amount of solute is introduced into a solvent.
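How much gravity actually matters for a dissolved particle can be estimated from the Boltzmann factor: at equilibrium, the concentration ratio between the bottom and top of a column of height h is exp(mgh/kT). The sketch below is a deliberately generous upper bound. It assumes a hypothetical 30 cm column at 25 °C, treats salt as undissociated NaCl formula units (58.44 u), and ignores buoyancy, all of which overstate settling. The helper name `bottom_to_top_ratio` is illustrative.

```python
import math

BOLTZMANN_K = 1.380649e-23            # J/K
ATOMIC_MASS_UNIT = 1.66053906660e-27  # kg
G = 9.80665                           # standard gravity, m/s^2

def bottom_to_top_ratio(mass_u: float, height_m: float, temperature_k: float) -> float:
    """Equilibrium c(bottom)/c(top) from the Boltzmann factor exp(m g h / k T).

    Uses the full particle mass with no buoyancy correction, so for a
    dissolved particle the result is an upper bound on gravitational settling.
    """
    mass_kg = mass_u * ATOMIC_MASS_UNIT
    return math.exp(mass_kg * G * height_m / (BOLTZMANN_K * temperature_k))

# Hypothetical container: 30 cm of water at 25 °C, NaCl formula mass 58.44 u.
ratio = bottom_to_top_ratio(58.44, 0.30, 298.15)
print(f"c(bottom)/c(top) = {ratio:.6f}")
print(f"excess at bottom = {(ratio - 1) * 100:.4f} %")
```

Even with these exaggerations, the bottom of the container ends up less than 0.01% more concentrated than the top: thermal motion (kT) overwhelms the gravitational energy difference for a single dissolved ion or molecule.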

Item pair 7/8 (Figure 1B, Table 7, and Supplemental Material) presents an illustration and description of a container divided by a semipermeable membrane, with dye plus water on the left and pure water on the right. The problem states that only water can move through the membrane; the dye cannot pass through. In some semesters, half of our students selected content response 7c, indicating that water will remain at equal levels on both sides of the membrane. Of those selecting response 7c, 26–29% of students across all semesters chose reason response 8b, that water flows freely and maintains equal levels on both sides of the membrane (Table 7).

Among upper-division students who were interviewed, those who selected responses 7c and 8b indicated that atmospheric pressure overrides water's ability to move through the membrane during osmosis. In the words of a senior biology major, “I’m assuming that water's not going to rush in and…go against pressure to…dilute the dye side of things…Atmospheric pressure is going to override the system, as far as water's desire to dilute that.” Thus, students apparently have difficulty weighing and integrating the different factors affecting the movement of molecules in solution. Their intuition about the effects of atmospheric pressure tends to override the less familiar concept of osmotic pressure.
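To make the comparison between atmospheric and osmotic pressure concrete, the sketch below applies the van 't Hoff relation for ideal dilute solutions, π = iMRT, to the Figure 1 setup. The dye concentration is not given in the item, so the 0.10 M non-dissociating dye (i = 1) used here is a hypothetical value, and we assume both compartments are open to the same atmosphere. The function names are illustrative only.

```python
R_L_ATM = 0.082057      # gas constant, L·atm/(mol·K)
PA_PER_ATM = 101_325.0  # pascals per standard atmosphere
RHO_WATER = 997.0       # density of water near 25 °C, kg/m^3
G = 9.80665             # standard gravity, m/s^2

def osmotic_pressure_atm(molarity: float, temperature_k: float, vant_hoff_i: float = 1.0) -> float:
    """Ideal dilute-solution (van 't Hoff) estimate: pi = i * M * R * T, in atm."""
    return vant_hoff_i * molarity * R_L_ATM * temperature_k

def rising_side(c_side1: float, c_side2: float) -> str:
    """Side whose level rises when only water can cross the membrane.

    Net water flow is toward the higher concentration of impermeant solute.
    Atmospheric pressure pushes equally on both open surfaces, so it does
    not enter the comparison at all.
    """
    if c_side1 == c_side2:
        return "neither"
    return "Side 1" if c_side1 > c_side2 else "Side 2"

# Hypothetical: 0.10 M dye on Side 1 (left), pure water on Side 2 (right), 25 °C.
c1, c2, temp_k = 0.10, 0.0, 298.15
delta_pi = osmotic_pressure_atm(c1, temp_k) - osmotic_pressure_atm(c2, temp_k)
# Height of a water column whose hydrostatic pressure would equal delta_pi.
balancing_head_m = delta_pi * PA_PER_ATM / (RHO_WATER * G)

print(rising_side(c1, c2))
print(f"delta pi = {delta_pi:.2f} atm; balancing water column = {balancing_head_m:.0f} m")
```

Under these assumptions the osmotic pressure difference is about 2.4 atm, larger than atmospheric pressure itself, yet atmospheric pressure cannot counteract it because it acts on both sides equally; only a hydrostatic height difference (or dilution of Side 1) can oppose the net flow.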

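About 10–20% of students across course levels who thought that water levels would be different (correct content response 7a: “Side 1 is higher than Side 2”) chose the incorrect reason 8a: “Water will move from high to low solute concentration.” In fact, net water movement across the membrane is from the side of lower solute concentration to the side of higher solute concentration, so these students reached the correct prediction using reasoning that contradicts it. These results indicate a major lack of understanding of the basic principles of osmosis and diffusion.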

DISCUSSION

Comprehending Osmosis and Diffusion

The data collected from students and biology experts indicate that the ODCA is a valid and reliable tool for assessing students’ understanding of osmosis and diffusion. The consistent response patterns exhibited by random samples of three student groups (nonmajors, lower-division biology majors, and upper-division biology majors), sampled across four successive semesters, reveal that students continue to have difficulty reasoning scientifically about the basic processes of osmosis and diffusion, topics typically taught in high school biology.

Although the ODCA items are somewhat different from the DODT items, the response patterns from our 2007–2009 student groups were very similar to those seen among the midwestern students studied in 1995 and 2007 (Odom, 1995; Odom and Barrow, 1995, 2007). Only a fraction of college students in the studies fully understood the mechanisms of diffusion and osmosis, reaffirming our initial claim that student mastery of those concepts is difficult to achieve. Furthermore, biology majors in this study did not perform better than nonmajors. This was not surprising, given that others have shown that performance of nonmajors in biology courses can surpass the performance of majors in terms of content comprehension (Sundberg and Dini, 1993; Sundberg et al., 1994).

The challenge remains: Why is it so difficult to successfully teach and learn about osmosis and diffusion? Part of the challenge may arise because these processes result from the constant, random motion of invisible particles, and a significant fraction of students struggle to comprehend such abstract ideas. Indeed, Garvin-Doxas and Klymkowsky (2008) found that while students recognized a random component in biological processes (e.g., diffusion), they were unable to link this “randomness” to emergent systematic behaviors (e.g., net movement of particles through a membrane). Another obstacle to students’ developing a deeper understanding of diffusion and osmosis may be that instructors still tend to rely on traditional, didactic-style methods of teaching these abstract concepts.
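The link between random particle motion and systematic net movement can be illustrated with a minimal simulation. The sketch below assumes a one-dimensional closed box of 20 sites and 1,000 particles that all start in the left half; at each step every particle attempts a move of one site left or right with equal probability, and a move into a wall is rejected. All parameter values and the function name `simulate_diffusion` are arbitrary choices for illustration, not part of the ODCA.

```python
import random

def simulate_diffusion(n_particles=1000, n_sites=20, n_steps=400, seed=1):
    """Unbiased 1-D random walk in a closed box.

    Returns two lists with one entry per step: the fraction of particles in
    the right half, and the fraction of particles that changed site.
    """
    rng = random.Random(seed)
    half = n_sites // 2
    # Every particle starts at a random site in the left half (a concentration gradient).
    positions = [rng.randrange(half) for _ in range(n_particles)]
    right_fraction, moved_fraction = [], []
    for _ in range(n_steps):
        moved = 0
        for i, x in enumerate(positions):
            new_x = x + rng.choice((-1, 1))  # no preferred direction
            if 0 <= new_x < n_sites:         # a step into a wall is rejected
                positions[i] = new_x
                moved += 1
        right_fraction.append(sum(x >= half for x in positions) / n_particles)
        moved_fraction.append(moved / n_particles)
    return right_fraction, moved_fraction

right, moved = simulate_diffusion()
for step in (0, 9, 49, 399):
    print(f"step {step + 1:3d}: right half {right[step]:.2f}, moved {moved[step]:.2f}")
```

No particle "knows" where the gradient is, yet the fraction in the right half climbs from near 0 toward about 0.5 simply because more particles are available to step rightward from the crowded side. Once the distribution is roughly even, net movement stops, but about 95% of particles still move on every step, which also speaks to the misconception behind response 4c.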

The lecture method has prevailed in college classrooms for decades. Yet there is a growing body of research suggesting that successful scientific understanding is rarely achieved through lecture teaching and passive student listening (e.g., Donovan et al., 1999; National Research Council, 2000; Brewer et al., 2011). Instructional methods that involve dialogue with fellow students (Lemke, 1990), active learning (Ebert-May et al., 1997), problem solving (Mintzes et al., 1998), animation (Sanger et al., 2001), and/or modeling knowledge (Fisher et al., 2000; Odom and Kelly, 2001; Tekkaya, 2003) may lead to significant improvements in understanding (Freeman et al., 2007). Furthermore, research has shown that when instructors employ formative assessment techniques to gather data regarding their students’ knowledge as they teach, they are more capable of designing additional instruction to move their students toward deeper understanding of the material (Black and Wiliam, 1998).

Conceptual Assessments

There are many ways to use reliable, validated conceptual assessments such as the ODCA and the DODT. Many (but not all) conceptual assessments, including the DODT and the ODCA, employ students’ prevalent misconceptions as distracters. When such a conceptual assessment is administered at the beginning of a course, it can provide valuable insight to the instructor regarding students’ prior knowledge and beliefs. When an instructor can build from students’ initial conceptions and demonstrate why or how scientific reasoning is better able to explain observations, students may be more prepared to grasp the scientific concepts.

The project described here focused on creating an appropriate, validated conceptual assessment and establishing baseline data for an academic program. Once such an instrument is available, it can be used in many ways; two strategies are described below.

The first, and most obvious, use of conceptual assessments in biology is to assess pre- to postlearning gains within a course or curriculum. When students complete a conceptual assessment at both the beginning and end of a course, it is possible to assess learning gains (or losses) within a single semester or to compare different groups of students across semesters to improve course and curriculum designs. This approach is exemplified by the use of the Conceptual Inventory of Natural Selection (CINS; Anderson et al., 2002; Anderson, 2003) and three genetics assessments: the Genetics Literacy Assessment Instrument (Bowling et al., 2008a,b), the Genetics Concept Assessment (Smith et al., 2008), and the Genetics Two-Tier Diagnostic Instrument (Tsui and Treagust, 2009). This strategy was also used with the Introductory Molecular and Cell Biology Assessment (Shi et al., 2010), the Photosynthesis and Respiration Assessment (Haslam and Treagust, 1987), and Energy and Matter Diagnostic Question Clusters (D’Avanzo et al., 2010; Hartley et al., 2011), as well as the DODT (Odom, 1995; Odom and Barrow, 1995; Christianson and Fisher, 1999). Several authors have demonstrated the use of conceptual assessments for broader programmatic improvement, including Garvin-Doxas and Klymkowsky (2008) using the Biology Conceptual Inventory, Marbach-Ad et al. (2009, 2010) using the Host Pathogen Interactions Concept Inventory, and Wilson et al. (2007) using the Energy and Matter Diagnostic Question Clusters.
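One common way to summarize pre/post results, borrowed from physics education research, is Hake's class-average normalized gain, ⟨g⟩ = (post% − pre%)/(100% − pre%), which expresses the improvement as a fraction of the improvement that was possible. The sketch below assumes each of the 18 ODCA items is scored as one point; that scoring rule, the helper name `normalized_gain`, and the five students' scores are all hypothetical and are not data from this study.

```python
from statistics import mean

N_ITEMS = 18  # ODCA length; assumes (hypothetically) one point per item

def normalized_gain(pre_scores, post_scores, max_score=N_ITEMS):
    """Class-average normalized gain: (post% - pre%) / (100% - pre%).

    Negative values indicate a learning loss. The gain is undefined when
    the pretest mean is already at the maximum score.
    """
    pre = mean(pre_scores) / max_score
    post = mean(post_scores) / max_score
    if pre >= 1.0:
        raise ValueError("pretest mean is at ceiling; normalized gain is undefined")
    return (post - pre) / (1.0 - pre)

# Hypothetical scores for five students (illustration only, not study data).
pre = [6, 8, 9, 7, 10]
post = [10, 11, 12, 9, 13]
print(f"<g> = {normalized_gain(pre, post):.2f}")
```

Here the class mean rises from 8 to 11 of 18 points, so the class gained 3 of the 10 points still available at the start, giving ⟨g⟩ = 0.30. Normalizing in this way makes it easier to compare sections or semesters that begin with different levels of prior knowledge.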

Second, even when conceptual assessment tools are not used for assessing instruction or measuring student learning gains, instructors may use individual items from those assessments as “concept questions” to generate in-class discussions and facilitate biology learning, as encouraged by Klymkowsky et al. (2003), D’Avanzo (2008), and Shi et al. (2010). This method has been used effectively for promoting learning of a variety of topics, including physics (Lawson, 1988), geoscience (McConnell et al., 2006), and biology (Meltzer and Manivannan, 2002; Tessier, 2004; Tanner and Allen, 2005; Michael, 2006). Thus, conceptual assessments in biology are resources that allow instructors to plan teaching strategies and help their students move toward more scientifically appropriate reasoning (Sundberg, 2002).

Validated conceptual assessments such as the ODCA, the DODT, and others (D’Avanzo, 2008; Fisher and Williams, 2011) hold the potential for helping students gain the capacity to talk and think using scientifically principled reasoning and to prepare to solve the problems of the twenty-first century (Wood, 2009; Adams and Wieman, 2011). These easily administered instruments give biology faculty data they can use to design instruction tailored to the current conceptual understanding of their students. When instructors are aware of their students’ misunderstandings (Smith and Tanner, 2010) and use interactive instructional strategies (Woodin et al., 2009, 2010; Brewer et al., 2011), students’ comprehension of abstract scientific concepts can be improved.

ACKNOWLEDGMENTS

We are grateful to the students who voluntarily participated in our study. We thank Janessa Molinari-Gruby for her assistance in interviewing students. We thank the SDSU College of Sciences and Department of Biology for supporting our project. We are grateful to the SDSU Instructional Technology Services staff for their help with online deployment of the ODCA. We thank anonymous reviewers for helpful comments on an earlier version of this report. We are also deeply indebted to Louis Odom and L. H. Barrow, on whose shoulders we stand, for their thorough explication of students’ misconceptions about osmosis and diffusion and for their pioneering creation of the DODT.