It's Money! Real-World Grant Experience through a Student-Run, Peer-Reviewed Program

Abstract

Grantsmanship is an integral component of surviving and thriving in academic science, especially in the current funding climate. Therefore, any additional opportunities to write, read, and review grants during graduate school may have lasting benefits on one's career. We present here our experience with a small, student-run grant program at Georgetown University Medical Center. Founded in 2010, this program has several goals: 1) to give graduate students an opportunity to conduct small, independent research projects; 2) to encourage graduate students to write grants early and often; and 3) to give graduate students an opportunity to review grants. In the 3 yr since the program's start, 28 applications have been submitted, 13 of which were funded for a total of $40,000. From funded grants, students have produced abstracts and manuscripts, generated data to support subsequent grant proposals, and made new professional contacts with collaborators. Above and beyond financial support, this program provided both applicants and reviewers an opportunity to improve their writing skills, professional development, and understanding of the grants process, as reflected in the outcome measures presented. With a small commitment of time and funding, other institutions could implement a program like this to the benefit of their graduate students.

INTRODUCTION

Grant writing is a key component of a successful and productive career in research (Inouye and Fiellin, 2005). For example, effective grantsmanship is necessary for the continuation of research, yielding more publications, which, in turn, have been linked to career advancement (Freeman et al., 2001; Kraus, 2007). Thus, exposure to and experience with grant writing at early career stages (e.g., during graduate training) could benefit developing scientists, for whom grant writing may be important for later career success. Additionally, grant writing arguably has intrinsic benefits for the scientific process. During the process of writing a grant, a research project must be conceptualized, defined, and refined (Inouye and Fiellin, 2005). Projects written into grant applications also benefit from the peer-review process; even if a grant is not funded, the principal investigator receives, and, if resubmitting, must respond to comments from reviewers.

Grant-writing skills have traditionally been acquired informally as needed (Kraus, 2007). A majority of faculty members in academic medicine report that they have not received instruction in scientific writing (Derish et al., 2007), despite reporting that “effective writing of grants and publications” is their highest career development need (Miedzinski et al., 2001). One reason for this might be the increasingly difficult funding climate. In 2009, only 21% of reviewed grant applications to the National Institutes of Health (NIH) were funded, compared with 32% 10 yr earlier (Powell, 2010). Of those funded, few are early-career researchers (under the age of 35); in 2001, early-career researchers represented just 4% of NIH grant awardees (National Research Council, 2005).

Nationally, these issues are beginning to be addressed. Training in professional skills is now an expected component of the training plan for National Research Service Awards (NRSAs) from the NIH (i.e., training grants and individual fellowships). Typically, this training takes the form of classes focused on developing professional skills generally or research and grant proposals specifically (for examples, see Mabrouk, 2001; Wasby, 2001; Brescia and Miller, 2005; Tang and Gan, 2005).

Despite a renewed discussion of these points, these ideas are not new. More than 30 yr ago, Leming (1977) recognized a need for universities to develop the professional skills of their graduate students, while Struck (1976) reported that graduate students who participated in a writing course typically found it to be both practical and beneficial to their professional development. These classes provide a structured introduction to the basics of grant writing and can be a valuable first step, especially because novices, regardless of general writing ability, require repeated practice and feedback to master the specific writing conventions of their field (Smith et al., 2011).

A complementary and novel strategy is to engage young scientists in the grant-writing and review process earlier in their careers. The Center for Scientific Review (CSR) at the NIH has recently acknowledged the need for early-stage investigators to become involved in the grant review process earlier. The Early Career Review Program, launched in the Summer of 2011, recruits scientists in active research programs who have not previously taken part in a CSR review session for participation in a study section (CSR, 2011).

The Association for Psychological Science's Student Caucus runs a program that targets scientists even earlier in their careers. This peer-reviewed research grant program specifically targets undergraduate and graduate students and offers support for research expenses up to $500 (Association for Psychological Science, 2011). Applicants submit a research proposal and peer reviewers evaluate it using a standardized scoring system. All applicants receive their reviews at the conclusion of the competition.

These types of programs allow scientists in the earliest stages of their careers to gain real-world experience with both grant writing and grant review. The grant review system can be relatively opaque to scientists-in-training, and participating as either an applicant or reviewer can increase one's understanding of the process and how to apply successfully in the future. Applicants stand to gain the benefits of conceptualization and refinement of their ideas, as discussed above, while reviewers gain experience in critically evaluating research and providing constructive criticism. The authentic nature of these tasks (they involve real proposals and funding) gives them the potential to be more engaging and hence more instructive than a class (McClymer and Knope, 1992).

In 2009, the Medical Center Graduate Student Organization (MCGSO) polled the biomedical PhD student population at Georgetown University (GU). One hundred percent of respondents stated that additional experience with grant writing would be beneficial to their career development. Of the respondents, 65% had previously applied for grants, and 30% had received funding. Eighty-four percent of respondents intended to pursue a traditional postdoctoral fellowship upon completion of a degree. Based on these findings, the Office of Biomedical Graduate Education of GU provided $10,000 to the MCGSO to initiate a pilot grant program with the mission of providing graduate students with educational and practical experience in the grant-writing and review process.

The MCGSO initiated the Student Research Grant Program (SRGP) pilot in April of 2010 with three goals. The first goal was to provide PhD students with experience in writing grants. The second goal was to provide PhD students with the opportunity to conduct small, independent research projects. The third goal was to provide PhD students with experience in reviewing grants.

The SRGP is one of only a few mechanisms through which GU graduate students can apply for research funding, as opposed to fellowship grants that support graduate training (such as the NIH's NRSA). The small, independent research projects must be separate from ongoing funded research in their mentors’ labs, with the intent of encouraging students to think independently about how to expand upon their thesis projects, rather than using the grants to substitute for funding their mentors should provide. Due to the success of the pilot, the Office of Biomedical Graduate Education increased the funding to $15,000 for 2011 and renewed the program for the same amount in 2012. To evaluate whether the SRGP enhanced students’ understanding of the grant review process and improved grant-writing skills, we anonymously surveyed SRGP participants, asked for written feedback, and evaluated the improvement in resubmitted grants during the 2011 and 2012 years.

In this paper, we describe how to implement this unique student-run, student-reviewed grant program, which has run successfully at GU Medical Center for the past 3 yr. We also discuss and evaluate the benefits for participants in the SRGP.

METHODS

Student Body Demographics

In the Spring of 2012, Georgetown had 114 PhD students engaged in thesis research in biomedical graduate programs. The enrollment was as follows: 30% in neuroscience, 20% in tumor biology, 15% in pharmacology, and 15% in biochemistry and molecular biology, with the remaining 20% divided between microbiology and immunology; global infectious disease; physiology and biophysics; and cell biology. Of these 114 PhD students, 8% had already successfully obtained a federally funded grant.

SRGP Participant Demographics

Applicants.

There were 11 applicants in 2010, 10 in 2011, and seven in 2012, which is equivalent to approximately 8% of the PhD student body applying per year. Applications were received from all of the biomedical departments/programs at GU. All applicants were at least in the third year of their PhD studies, with the majority (71%) of the applicants being in the fourth or fifth year of their PhD.

Reviewers.

All reviewers were GU biomedical PhD students engaged in thesis research. As part of the initial request for applications, a request for interested reviewers was also issued. Thus, individuals with the desire to review were given first priority. The number of reviewers per research area was matched to the number of applicants per research area (i.e., if three applications dealt with signal transduction, three reviewers with expertise in signal transduction were recruited). Reviewers were also recruited through requests sent to the heads of the departments of students who submitted grants. Program directors and department chairs were very helpful in identifying qualified reviewers.

In 2010, nine reviewers participated in the study section. Due to the popularity of the program, quaternary reviewers were added in the second year, which increased the number of participants in the study section to 11 reviewers in the second year and nine in the third, thus allowing additional participation. All of the biomedical departments/programs at GU were represented. The reviewer demographics broken down by program were as follows: 43% in neuroscience, 14% in tumor biology, 14% in pharmacology, and 18% in biochemistry, with the remaining 11% divided among microbiology and immunology; global infectious disease; physiology and biophysics; and cell biology.

Program Officers.

There were at least two program officers for each SRGP year. For the 2010 and 2011 grant years, the two PhD students who cofounded the SRGP also participated as program officers. They were GU biomedical PhD graduate students in thesis research who were affiliated with the MCGSO. For the 2012 year, another program officer was recruited (a PhD student in her third year) to work with them and ensure continuity once the senior program officers graduate from their respective PhD programs.

Faculty Advisor.

To ensure that the review process was conducted in a manner consistent with processes used by NIH, the National Science Foundation, and many foundations, a faculty member who had participated in NIH study sections and had a federally funded grant was present for all SRGP review sections. This faculty member did not actively participate in the panel, but was available to field questions about how the review process works and to provide feedback on any issues that might have arisen during the reviewers’ discussion. The time commitment for the faculty member was 3–4 h, the length of a study section.

Program Implementation

Eligibility.

Any PhD candidate currently enrolled in a biomedical graduate program at GU was eligible to apply if he or she met the following criteria: 1) applicant must have started dissertation research (i.e., passed the required qualifying exams), 2) applicant must be in good academic standing, and 3) applicant must have applied for extramural funding. Waivers could be granted for individuals who had not submitted an application for extramural funding if they were in the process of applying at the time of SRGP submission or if the applicant was ineligible for extramural funding (such as a non-U.S. citizen who cannot apply for an NRSA).

Application.

The application was modeled after the NIH grant-funding mechanism, as the NIH is the largest single source of biomedical funding for universities in the United States (Moses et al., 2005). Once a year, the SRGP accepted new applications. In the second and third years of the program, a second submission deadline, which was limited to revised applications not funded in the initial submission, occurred 3–6 wk after the scores from the first round were released to the applicants.

Each applicant was required to provide an abstract, specific aims, a research plan (not to exceed three pages plus one page of optional preliminary results), a biosketch, a budget with justification, and confirmation of support from his or her department and mentor. Preliminary data were encouraged, but not required. Letters from collaborators were also accepted. Revised applications had to include a one-page introduction addressing the critiques from reviewers, along with the modified application. As a major goal of the SRGP is to promote training and career development, applicants were strongly encouraged to clearly outline the training benefits of the proposed project (for additional details, please see Supplemental Material S1).

A proposed (but not yet implemented) opportunity to apply for competing renewal of a previously SRGP-funded grant would require applicants to detail what progress has been made on the original grant award, how the new proposal furthers the original proposal, and why the SRGP is the appropriate mechanism for continued support.

Allowable Expenses.

Funding (up to $5000) could be requested for the following expenses: 1) expendable items, such as chemical reagents; 2) animal purchase and husbandry; 3) payment of human participants; 4) use of core facilities (e.g., magnetic resonance imaging [MRI], flow cytometry, microscopy core); or 5) equipment or software, such as surgical tools or software for data analysis. The grant could also fund travel for research conducted outside the thesis lab (either at GU or at another institution) in order to learn a new technique. However, if the lab was outside the Washington, DC, area, additional justification had to be provided as to why training in that particular lab was crucial for learning the technique. If animals or human subjects were included in the proposal, institutional review board or institutional animal care and use committee approval was required prior to funding.

A gray area, so to speak, was what research is considered “independent of the mentor's funding.” To address this, mentors were required to certify that existing funding in the lab could not cover the proposed research and to describe their intellectual contribution to the proposed project. While mentors were encouraged to discuss and review proposals with their students, the applications had to be primarily the work of the students. These requirements were enacted because the purpose of this program is to provide more opportunities for students to write grants and design and implement their own independent experiments. A large contribution from the mentor, either financially or intellectually, would be contrary to these goals.

Types of Grant Applications Received.

In the 3 yr of the program's existence, the proposed projects have had a wide range of foci. These have included: travel to use a new microscope that would allow time-lapse analyses of microbes in ice-core particles, use of the MRI core to assess brain volume changes in patients diagnosed with mild cognitive impairment, and use of microarrays to investigate the antifungal properties of a novel compound. The majority of applicants requested funds from $3500 to $4500 to complete projects; however, requests ranged from $1500 to the maximum $5000. Applicants asked for funding in multiple categories. Most applicants asked for funding for expendable items, such as reagents and animals (82% and 46%, respectively), but a substantial proportion (43%) also requested use of core facilities.

Review Process.

Reviewers were assigned three to four grants each and were designated as the primary, secondary, tertiary, or quaternary reviewer of each grant. The reviewers received detailed instructions from the program officers on how to review applications using a modified form of the NIH guidelines. Reviewers were also provided with critique template forms to fill out. The same 9-point scale system used by the NIH was used (whole-number scores ranging from 1 to 9, with 1 indicating an exceptionally strong application and 9 indicating several substantive weaknesses). Reviewers assigned preliminary scores based on strengths and weaknesses in four criteria: significance, investigator, innovation, and approach. For each criterion, reviewers were required to list the strengths and weaknesses of the application and give a score; they also summarized the application and explained the overall impact score (see Supplemental Materials S2 and S3). Individual sections were also provided for comments regarding training potential, budget, relation of the proposed project to the applicant's thesis research, and any additional comments the reviewer wanted to make to the applicant.

After determining individual criterion scores, the reviewers assigned a preliminary overall impact score, taking into consideration the individual criterion scores and other considerations, such as training potential, feasibility, and budget. The overall impact score did not need to be an average of the individual criterion scores; instead, the weight of an individual component, such as high significance or great training potential, could outweigh concerns about other factors, such as innovation. Reviewers were reminded to use the full range of the rating scale to decrease the likelihood of all grants being scored within a narrow range.

In addition to detailed instructions regarding application scoring, reviewers were also instructed on how to conduct the review process following the same ethical guidelines used by the NIH. Prior to receiving the applications, reviewers had to sign a conflict of interest, confidentiality, and nondisclosure form certifying that they were not lab members of or collaborators with a given applicant, that they had disclosed any conflicts of interest, and that they understood the confidential nature of the review process (meaning that all materials would be returned or destroyed after evaluation, and there would be no discussion of materials associated with the review, applications, review meeting, or critiques with anyone except as authorized by the program officers; see Supplemental Material S4).

After being given sufficient time (2–4 wk) to review the applications, reviewers submitted critiques, comments, and scores to the program officers prior to the reviewer committee meeting. The program officers ranked the applicants from strongest to weakest, and the reviewers discussed the grants in this order. This process began with the program officers identifying the application to be discussed and asking anyone with a conflict of interest to leave the room. The initial scores from the reviewers were requested, and the primary reviewer then introduced the application, summarized the purpose of the grant and methodology, and cited strengths and weaknesses in the four individual criteria to justify their overall impact score. The secondary, tertiary, and quaternary reviewers presented in order after the primary reviewer, taking care to keep reviews succinct and to not repeat points made by previous reviewers.

Once all reviewers had presented, the program officers repeated the overall impact scores of the assigned reviewers, asked whether they would like to change their initial score based on the proceedings, and then asked the rest of the reviewers in the committee to write down an overall impact score for the application. Committee members were allowed to vote outside the range of the assigned reviewers’ scores but had to announce they were doing so and justify the reasoning in writing. All reviewers at the meeting were given a sheet that had the names of all the applicants with spaces to fill in scores and comments. After all of the applications had been reviewed, final priority scores were determined by averaging the scores from all the reviewers in the committee.
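The averaging step described above can be sketched in a few lines of Python. This is an illustration only, not the program's actual tooling: the function name `priority_score`, the rounding to one decimal place, and the example scores are all assumptions introduced here.

```python
from statistics import mean


def priority_score(scores):
    """Average whole-number impact scores (1 = strongest, 9 = weakest).

    Rounding to one decimal place is an assumption made for illustration.
    """
    if not scores:
        raise ValueError("at least one reviewer score is required")
    for s in scores:
        if not isinstance(s, int) or not 1 <= s <= 9:
            raise ValueError(f"scores must be whole numbers from 1 to 9, got {s!r}")
    return round(mean(scores), 1)


# Hypothetical committee of nine reviewers scoring one application.
example_scores = [3, 4, 5, 4, 5, 4, 5, 5, 4]
print(priority_score(example_scores))  # 39 / 9 = 4.33..., printed as 4.3
```

Because every committee member's score enters the average, a single outlying vote shifts the priority score less as the committee grows.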

Funding.

For determination of funding, applications were ranked by priority score. During the first year of the program, no applications with scores less favorable than 4.4 were funded. In the second year (2011), the resubmission process was introduced, and a score of 4.4 was used as the funding cutoff for the first submission. Applicants within this score were funded, and applicants with scores that fell outside the cutoff were invited to revise and resubmit.
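The first-round decision rule can be sketched as follows. This is a minimal illustration that assumes the cutoff is inclusive (a score of exactly 4.4 is fundable) and uses hypothetical applicant labels; the function name `split_by_cutoff` is not from the program, and actual awards also depended on the program officers' judgment about budgets and score spread.

```python
def split_by_cutoff(priority_scores, cutoff=4.4):
    """Rank applications by priority score (lower is better) and split at the cutoff.

    Returns (fundable, invited_to_resubmit), each ordered from strongest to weakest.
    Treating the cutoff as inclusive is an assumption.
    """
    ranked = sorted(priority_scores.items(), key=lambda item: item[1])
    fundable = [name for name, score in ranked if score <= cutoff]
    invited = [name for name, score in ranked if score > cutoff]
    return fundable, invited


# Hypothetical applications and priority scores.
scores = {"App-A": 5.1, "App-B": 3.2, "App-C": 4.4, "App-D": 6.4}
fundable, invited = split_by_cutoff(scores)
print(fundable)  # ['App-B', 'App-C']
print(invited)   # ['App-A', 'App-D']
```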

The program officers, in consultation with the study section, determined the level of funding for each grant that fell within the fundable range by taking into consideration the spread of scores, the quality of the applications, and how much funding was required for each individual grant. This process was somewhat flexible in that the program officers could choose to lower the fundable scoring range to include more grants if the requested funds were low, or they could fund only those that fell within a particular scoring range (e.g., the top 10%) if they chose to allocate the highest possible funding amount ($5000) to a smaller number of grants. After the committee meeting, reviewers had approximately 1 wk to make revisions to critiques before the scores and critiques were released to applicants.

To monitor the degree to which programmatic goals were being reached, funded applicants were required to submit progress reports 6 mo after receiving funding and again at the completion of their project. These progress reports also served as a key component of SRGP annual funding renewal requests.

Program Evaluation

The efficacy of SRGP in multiple domains was evaluated as described below. In addition to the measures presented below, the faculty advisor for year 2 of the program was asked to comment on his overall impressions from the study section and suggest improvements. The request was made verbally immediately following the review panel meeting, and the response was provided via email.

Exemption from human subjects approval was granted by the GU Institutional Review Board, in accordance with the Code of Federal Regulations 45 CFR Part 46.101(b)(1).

Assessment of Improved Grant-Writing Skills.

To test the hypothesis that participation in the SRGP would have a positive effect on grant-writing skills, we evaluated the change in grant scores for the subset of applicants who resubmitted grants (n = 6 from 2011, n = 6 from 2012). Because the resubmission process was initiated in year 2, only participants from years 2 and 3 were included in this analysis. The addition of a resubmission/revision process allowed applicants who had not been funded to incorporate reviewer feedback and reapply.

The primary motivation for instituting a resubmission process was to further the educational mission of the SRGP by encouraging applicants to revise their proposals based on the feedback they had received. As detailed in Review Process, each applicant had four assigned reviewers who gave an overall impact score and individual scores on significance, investigator, innovation, and approach. These scores were evaluated for change between submissions. The same reviewers were assigned for both the first and second submissions.

In 2011, six of nine eligible applicants (one applicant was funded on the first submission and therefore did not need to resubmit) resubmitted. In 2012, six of six eligible applicants (one was funded on the first submission and therefore did not need to resubmit) resubmitted.

Program Evaluation through Awardee Feedback.

For a subset of awardees who had completed their period of SRGP funding (n = 8), we requested (via email) the following information:

1) Did data generated from the SRGP award contribute to abstracts at professional meetings, professional talks, published manuscripts, manuscripts in preparation, preliminary data for other grant applications, or “other”?

2) How useful was the SRGP for your professional development? Please elaborate.

Responses were blinded. For question 2, which was open-ended, scorers coded as present or absent for each of the following phrases: improved grant-writing ability, improved curriculum vitae, expanded research scope, enhanced independence, and enhanced networking. Responses were coded by two raters (S.B.D. and P.A.F.) with 100% agreement in ratings.

Program Evaluation Assessed through Participant Feedback.

As a final measure of SRGP efficacy, we surveyed applicants about the perceived benefits of SRGP participation on grantsmanship and professional development. Data were collected using the SurveyMonkey website, which allowed for anonymity in responses, mitigating bias in feedback. Data were collected after funding decisions were released to applicants and after resubmission decisions were released in years 2 and 3. All participants (reviewers and applicants) were asked to respond to a short survey about their experiences with the program. Thirty-six participants responded to the survey (18 reviewers, 11 funded applicants, and seven unfunded applicants; see Supplemental Material S5). Participants were asked to what degree their SRGP experience helped them understand the grant review process on a scale of 1–4 (1, not helpful; 2, slightly helpful; 3, moderately helpful; 4, very helpful). Applicants were asked to rate the helpfulness of reviewer comments on the same scale. Participants were also asked to what degree their SRGP experience enhanced their professional development and whether they would recommend participating in the SRGP to their friends or colleagues on a scale of 1–4 (1, not at all; 2, mildly; 3, moderately; 4, strongly).

Statistics.

Data were analyzed using GraphPad Prism version 5.0 (GraphPad, La Jolla, CA). Survey data were analyzed using one-tailed Wilcoxon signed-rank tests (against a median of 1). Kruskal-Wallis tests with Dunn's posthoc test were used to examine group differences in survey data when three groups were compared; a Mann-Whitney U-test was used when two groups were compared. Overall impact scores from the entire review session on the first submission were analyzed using two-tailed Wilcoxon matched-pairs tests. The mean overall impact score and individual grant scores for each applicant on the first and second submissions were evaluated using a one-tailed Wilcoxon matched-pairs signed-rank test. p values < 0.05 were considered statistically significant.

RESULTS

Effect of Revisions on Grant Writing

A principal goal of the SRGP was to provide additional grant-writing experience for graduate students. This goal was based on the hypothesis that additional grant-writing experience would result in improved grant writing. The 2011 and 2012 resubmission process provided us with a direct opportunity to assess the influence of the review process on the evolution of the grant applications as a measure of grant writing.

In 2011, the mean impact score for the six applicants who chose to resubmit their grants was 5.4 (range: 5.1–6.4). The three applicants who did not resubmit had a mean score of 6.6 (range: 4.8–8.3). The scores from the two groups were not significantly different (p = 0.52), suggesting that it was not merely the applicants with the best-received proposals who chose to reapply. In support of this theory, in 2012, all six applicants who were eligible to resubmit did so (mean score: 4.4). The applicants who chose to resubmit were, however, a self-selected subpopulation of applicants. It is important to note that this subpopulation may be enriched in individuals who are predisposed to appreciate constructive criticism and respond to it effectively, or who are more invested in the proposed project.

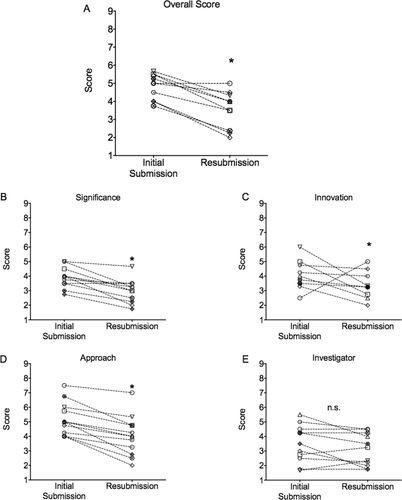

The effect the resubmission process had on the grant applications was determined by comparing the reviewers’ scores for the first and second applications (Figure 1, A–E). As shown by Wilcoxon tests, the overall score (W(11) = −66, p = 0.001), significance score (W(11) = −66, p = 0.001), approach score (W(12) = −78, p = 0.005), and innovation score (W(12) = −56, p = 0.023) significantly improved upon resubmission. The investigator subscore did not significantly improve upon resubmission (W(12) = −23, p = 0.12).
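For readers unfamiliar with the W statistic, the sketch below computes a sum of signed ranks for paired scores. It assumes that W is the sum of signed ranks of the nonzero paired differences (resubmission minus first submission, so that improvement on the 1 to 9 scale yields negative values); the function name and the example scores are hypothetical and are not the study data. Under that assumption, the reported values of −66 and −78 equal −n(n + 1)/2 for n = 11 and n = 12, which is what one would see if every nonzero difference pointed toward improvement.

```python
def signed_rank_sum(first, second):
    """Sum of signed ranks W for paired scores, using second - first.

    Zero differences are dropped and tied absolute differences share the
    average of the ranks they occupy.
    """
    diffs = [round(b - a, 6) for a, b in zip(first, second)]
    diffs = [d for d in diffs if d != 0]
    ordered = sorted(diffs, key=abs)
    w = 0.0
    i = 0
    while i < len(ordered):
        j = i
        # Find the run of tied absolute differences starting at index i.
        while j < len(ordered) and abs(ordered[j]) == abs(ordered[i]):
            j += 1
        avg_rank = (i + 1 + j) / 2  # mean of the 1-based ranks i+1 .. j
        for d in ordered[i:j]:
            w += avg_rank if d > 0 else -avg_rank
        i = j
    return w


# Hypothetical scores for 12 resubmissions: 11 improve, 1 is unchanged.
first = [6, 5, 7, 6, 5, 8, 6, 7, 5, 6, 7, 4]
second = [4, 4, 4, 5, 3, 5, 2, 6, 5, 3, 5, 3]
print(signed_rank_sum(first, second))  # -66.0, i.e. -(11 * 12) / 2
```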

Figure 1. Improvement in grants after receiving feedback from SRGP reviews: (A) overall impact score, (B) significance subscore, (C) innovation subscore, (D) approach subscore, and (E) investigator subscore. *, Significant improvement from first submission to resubmission (p < 0.05, Wilcoxon matched-pairs signed-rank test).

Effects of SRGP Funding on Professional Development

To date, 13 applications (five in 2010, three in 2011, and five in 2012), with at least one representative from each department/program, have been funded by the SRGP. Awards ranged from $1165 to $5000. Data from the eight SRGP-funded projects in the 2010 and 2011 years have contributed to three published manuscripts, seven abstracts presented at national conferences, three professional talks, six manuscripts currently in preparation, and one patent application. Moreover, six out of eight applicants stated that data generated from the SRGP grant have been used as preliminary data for other grant applications.

When these eight awardees were asked to reflect in an open-ended format whether the SRGP was helpful to their careers, all eight awardees responded affirmatively. Four out of eight responded that SRGP participation improved their grant-writing abilities, consistent with our hypothesis that increased exposure to grant writing is beneficial to graduate students. Five out of eight responded that the SRGP enhanced their résumé, with applicants citing both the benefit of establishing their ability to secure funding and the publications and abstracts that resulted from the project. Seven out of eight also responded that the SRGP allowed them to expand their research in a direction in which it would not otherwise have gone.

Participant Feedback on SRGP

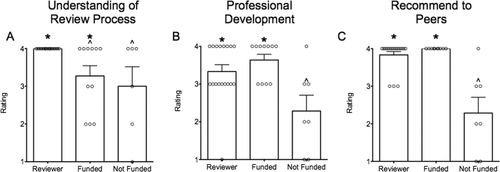

When surveyed about the effect of SRGP participation on their understanding of the review process, all three categories of participants (reviewers, funded applicants, and nonfunded applicants) reported significant gains (p < 0.05, Figure 2A). Seventeen out of 17 reviewers who responded rated the program very helpful (score of 4 out of 4). Eight out of 11 funded applicants who responded rated the program either moderately or very helpful (score of 3 or 4) in increasing their understanding of the review process. Four out of seven nonfunded applicants who responded rated the program either moderately or very helpful. A Kruskal-Wallis test revealed a significant difference among groups (H = 10.59, d.f. = 2, p = 0.005). The reported improvement did not differ significantly between funded and nonfunded applicants (p > 0.99, Dunn's test), but was significantly lower for both applicant groups (p < 0.05) compared with reviewers.

Figure 2. SRGP participants report improved understanding of the grants review process. Reviewers and applicants were asked to rate on a scale from 1 to 4 the degree to which the SRGP has enhanced (A) their understanding of the review process and (B) their overall professional development, and (C) whether the participant would recommend this program to a fellow student. *, Score significantly greater than one (p < 0.05, Wilcoxon signed-rank test); ^, significantly different from other groups (as described in Results; p < 0.05, Kruskal-Wallis test).

All groups, including nonfunded applicants, indicated the SRGP helped significantly (p < 0.05) with their professional development (Figure 2B). A Kruskal-Wallis test revealed a significant difference among groups with respect to professional development (H = 8.255, d.f. = 2, p < 0.05), with nonfunded applicants reporting lower gains than funded applicants (p < 0.05, Dunn's test).

All reviewers and funded applicants and three out of seven nonfunded applicants indicated they would moderately or strongly recommend participation in SRGP to their colleagues (Figure 2C). All groups recommended the program to a significant degree (p < 0.05). Kruskal-Wallis tests revealed that the groups significantly differed in their recommendation (H = 19.0, d.f. = 2, p < 0.001), with both reviewers and funded applicants reporting significantly stronger recommendations than nonfunded applicants (p < 0.0005).
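As a rough cross-check of the three Kruskal-Wallis results reported above, the sketch below computes the tie-corrected H statistic in plain Python and converts H to a p value under the chi-square approximation. It assumes that the p values were obtained from that approximation (the text does not state this); with three groups (d.f. = 2), the upper-tail probability reduces to exp(-H/2). The function names and the example ratings are hypothetical and are not the survey data.

```python
import math
from collections import Counter


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(groups):
    """Tie-corrected Kruskal-Wallis H for a list of groups of scores."""
    pooled = [x for g in groups for x in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * total - 3 * (n + 1)
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    correction = 1 - ties / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all scores are identical; H is undefined")
    return h / correction


def chi2_sf_df2(h):
    """Upper-tail probability of a chi-square variable with 2 d.f."""
    return math.exp(-h / 2.0)


# H statistics as reported in the text (three groups, so d.f. = 2).
reported_h = {
    "review process": 10.59,
    "professional development": 8.255,
    "recommendation": 19.0,
}
for label, h in reported_h.items():
    print(f"{label}: H = {h}, approximate p = {chi2_sf_df2(h):.4g}")

# Hypothetical 1-4 ratings, invented for illustration only.
example = [[4, 4, 4, 3, 4], [3, 4, 2, 3], [2, 1, 3, 2]]
h_example = kruskal_wallis_h(example)
print(f"example H = {h_example:.3f}, approximate p = {chi2_sf_df2(h_example):.4g}")
```

Under this assumption, the approximate p values agree with the thresholds reported for each comparison. The pairwise Dunn's tests are not reproduced here.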

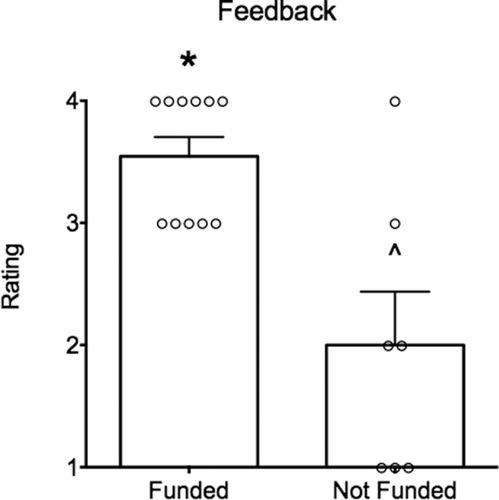

When applicants were asked to evaluate the helpfulness of reviewer feedback, funded applicants rated reviewer feedback as significantly helpful (Figure 3), while those who were not funded did not (Wilcoxon signed-rank test: p = 0.001 and p = 0.125, respectively). Consistent with this finding, funded applicants also rated feedback as significantly more helpful than did nonfunded applicants (U(17) = 10.50, p = 0.0067).

Figure 3. Funded SRGP applicants rated feedback as significantly more helpful than did nonfunded applicants. *, Score significantly greater than one (p < 0.05, Wilcoxon signed-rank test); ^, significantly different from funded applicants (p < 0.05, Mann-Whitney U-test).

DISCUSSION

The SRGP was established to give graduate students an opportunity to conduct small, independent research projects and to give students enhanced exposure to the grant-writing process. In the 3 yr since its inception, our program has met both of these goals.

First, we have been able to fund 13 research projects that lacked funding from the students’ mentors. These projects have led to abstracts, published manuscripts, professional talks, a patent application, and other grant applications, and in one case, assisted in obtaining a postdoctoral position.

Second, the data from applicants who submitted a revised proposal provided a direct measure of improvement in grant writing. The overall score, significance score, approach score, and innovation score significantly improved upon resubmission, suggesting an improvement in grant-writing skills. The investigator subscore did not change, which is consistent with the short amount of time that elapsed between the first and second submissions; thus, the investigator's qualifications were expected to change little during that time.

One caveat is that these gains in grant scores may reflect improvements in this grant proposal only; a stronger measure of improvement in general grant-writing abilities would be to evaluate whether these gains transfer to the writing of subsequent grants. One way to measure participants’ gains in grant writing would be a blinded assessment of another writing sample using a rubric and trained raters; however, because the applicants are at various stages of their thesis research and come from a variety of graduate programs, there is no common writing sample to assess in this way. Nonetheless, even given these limitations, the improved scores indicate that the program is beneficial to applicants.

Third, participants reported that SRGP participation improved their understanding of the grant review process irrespective of whether they were reviewers or funded or nonfunded applicants (Figure 2A). The fact that both the applicants and reviewers who participated believe that this process significantly helped with their professional development (Figure 2B), coupled with the fact that they would recommend this program to other graduate students (Figure 2C), suggests that similar programs may be well received by (and useful for) students at other institutions. Presumably, participants’ willingness to recommend the program to their colleagues reflects the fact that they found participation to be useful/beneficial. Moreover, the fact that this program was rated as helpful for professional development by all participants (even nonfunded applicants) highlights that it is not just the financial incentive or the scientific experience of completing a project (as the survey was conducted before projects were started) that was beneficial but the participation in submission and review. This may reflect the need highlighted in the Introduction for more grant exposure during graduate training in the biomedical sciences.

Perhaps not surprisingly, nonfunded applicants tended to rate the program and its effects lower than funded applicants and reviewers. These applicants may have been less invested in their project or grant application, less prepared to apply, or less satisfied with the outcomes due to lack of funding. Reviewer feedback, even in this population of applicants, can be translated to other grant proposals and manuscripts in preparation, due to the type of comments provided by reviewers, which focus on areas that include: lack of clarity, feasibility of the proposal, questions regarding techniques, and discussion of expected outcomes. Moreover, this feedback can also be used to modify experiments that are underway or planned. These data support the assertion that even in the absence of an incentive (i.e., funding for research), applicants found the program to be a beneficial experience.

While the survey data presented provide a positive, semiquantitative outcome measure, we also analyzed outcomes from awardees who have completed the awards period, which provided a measure of gains from the SRGP experience. Specifically, these outcomes highlight how the program enhances professional development by demonstrating that the funded SRGP proposals (which financed projects that could not be supported by the applicants’ mentors) contributed to publications, abstracts, and professional talks. As the program continues and we obtain larger samples of participants, a larger variety of outcome measures (e.g., percentage of participants who were successful in other grant-writing endeavors, publications, abstracts, etc.) will merit further investigation.

The Review Session

Because a unique aspect of this program is the opportunity afforded to participating reviewers, this section discusses some of these benefits. Normally, the only role available to students in the grant process is that of applicant, and, while the review process may be explained, this explanation is generally limited to what reviewers look for in an application and what the scoring criteria are. As a result, many aspects of the grant process remain a mystery to graduate students.

The process of reviewing a grant allows students to experience the review process firsthand, thus demystifying the experience of grant review to the benefit of their future grant writing. Reviewers reported that participation in SRGP was an excellent opportunity to hone skills involved in many aspects of the grant process. In addition to noting the benefits that come from practicing critical-reading skills, reviewers stated that identifying strengths and weaknesses in others’ applications allowed them to reflect on the decisions they make in their own grant writing. They also indicated that effective communication is crucial, because failure to convey an important strength or weakness can have a major impact on the final score of an application.

In response to the request by several applicants from the first year to serve as reviewers in the second year, we now encourage all applicants to consider serving as a reviewer in subsequent years. This not only aids in the quality and success of the SRGP, but also serves to further enhance former applicants’ understanding of the review process.

Evolution of the Program and Future Directions

The program has evolved in several ways over the years. During the first year, we offered only a single submission deadline with no opportunity for revised applications. Recognizing the benefit afforded to both applicants and reviewers in allowing revised applications, we instituted a closed (i.e., only open to individuals who submitted for the initial deadline) resubmission for the second and third year of the program.

In the second year of the program, the program announcement was clarified, stressing the intent to fund applicants for both the acquisition of new skills and/or the application of existing skills to new research questions.

Another issue clarified in the second year of funding was the eligibility criterion requiring that applicants must have applied for extramural funding unless they were ineligible to do so. To avoid limiting the pool of potential applicants, we modified this criterion to allow students who intended to apply for an external grant (such as an NRSA) in the coming year to apply. In our second year, two applicants were able to apply because of this policy change. Interestingly, one of these applicants not only received an SRGP award but also achieved a very high score (seventh percentile) on a subsequent NRSA application.

A policy that continues to evolve is the degree to which existing funding within a laboratory should impact the funding decisions of the grant program. It has been suggested that students coming from laboratories with limited funding should be given preference over those coming from “well-funded” laboratories. We have rejected this notion for two reasons: 1) the proposed research cannot be already covered by a grant to the student's mentor, and 2) the principal goal of this program is to engage students in grant writing, and we have therefore chosen not to discourage any student from applying.

Competing renewal applications have not yet been implemented but are planned for the upcoming program year. One concern with respect to competing renewals is the desire to “spread the wealth” and encourage as many individuals as possible to apply, as opposed to funding the same applicants multiple times. On the basis of feedback from applicants from the first 2 yr, we expect that only a small number (perhaps one per year) would apply for a competing renewal, thus alleviating that concern.

To broaden the base of involvement in the SRGP, we have discussed the potential of involving a consortium of schools in Washington, DC, in the grant process. Small funding commitments from multiple participating universities would be necessary. This would provide the added benefit of fostering interaction (and potentially increasing collaboration) between graduate students in the DC area. It would also make available a broader base of reviewers to select from and would expose reviewers to a larger variety of science disciplines. Similarly, we are actively recruiting postdoc participation in the SRGP (on the review/program side) to encourage interaction between graduate students and postdocs and to provide additional grantsmanship experience to postdocs at GU.

Implementation at Other Institutions

With a relatively small institutional financial contribution, a program like this could be implemented elsewhere. At GU, a financial contribution of only $40,000 over 3 yr has funded 13 of 28 applications at around 90% of the requested budgets. This level of funding appears sufficient to encourage participation.
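For institutions sizing a similar program, the funding figures above can be turned into a few planning numbers. The sketch below is illustrative arithmetic only: the totals come from the text, but the mean award and annual cost are derived averages, since individual award sizes and the year-by-year split are not reported here. All variable names are hypothetical.

```python
# Figures stated in the text.
total_funding_usd = 40_000
program_years = 3
applications_submitted = 28
applications_funded = 13

# Derived values (averages only; actual awards may have varied).
success_rate = applications_funded / applications_submitted
mean_award_usd = total_funding_usd / applications_funded
mean_annual_cost_usd = total_funding_usd / program_years

print(f"Success rate: {success_rate:.1%}")
print(f"Mean award: ${mean_award_usd:,.0f}")
print(f"Mean annual cost: ${mean_annual_cost_usd:,.0f}")
```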

The initial investment of time for this project was ∼30 h; this included gathering the initial survey data and presenting to the funding organization, as well as creating the necessary program announcements and application forms. This effort was split between two program officers who brought the project from inception to pilot over the course of 10 mo. Organizing and running the review panel took approximately 5 h for each review panel meeting.

Once the program has been launched, the time commitment to maintain it in subsequent years is greatly reduced. Small adjustments to the program announcement, collecting applications, and convening the review panels were the key commitments (totaling ∼10 h). An additional time commitment for the program officers (approximately 2 h) was needed for discussing potential aims and proposal topics with applicants.

The members of the review panel spent 1–3 h reviewing each application, and 3–4 h at each of the review sessions. This time commitment has remained constant over the 3 yr of the program. The applicants reported spending 10–20 h completing their applications.
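The reviewer time ranges above can be combined into a per-reviewer estimate when planning a review cycle. This is a minimal sketch: the hourly ranges are taken from the text, while the number of applications assigned to each reviewer and the number of sessions attended are assumptions chosen for illustration, and the function name is hypothetical.

```python
# Ranges reported in the text, in hours (low, high).
HOURS_PER_APPLICATION = (1, 3)
HOURS_PER_SESSION = (3, 4)


def reviewer_hours(n_applications, n_sessions):
    """Return (low, high) estimated hours for one reviewer."""
    low = HOURS_PER_APPLICATION[0] * n_applications + HOURS_PER_SESSION[0] * n_sessions
    high = HOURS_PER_APPLICATION[1] * n_applications + HOURS_PER_SESSION[1] * n_sessions
    return low, high


# Assumed workload: 4 applications and 2 review sessions (illustrative only).
low, high = reviewer_hours(n_applications=4, n_sessions=2)
print(f"Estimated reviewer commitment: {low}-{high} h")
```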

The success of this program at GU was likely aided by two factors: 1) the introduction to grant writing provided by the core courses in several of the graduate programs, and 2) the fact that the student program officers and many of the reviewers (though not all) had previously participated in a “mock study section” during the first year of their PhD programs. Students from the pharmacology and neuroscience programs (which make up around half of all students in the biomedical programs) are required to write grants and discuss grant writing in several of their core courses. The neuroscience program also hosts a mock study section that is required for all first-year students in neuroscience and pharmacology and is optional for students in other programs. This mock study section is a component of a required course called Survival Skills and Ethics. The SRGP, however, is unique among these exercises, because participating students can actually secure funding to complete the projects they propose.

Many institutions now offer instruction in grant writing as part of their curriculum, but the study section format remains opaque to many graduate students. A meeting prior to the first review session to instruct reviewers on the expectations for their reviews and the format of the study section is recommended. A faculty member familiar with NIH study sections may also step in as the program officer during the review session, serving to prompt reviewers, answer any questions about the process, and keep the discussion on track.

CONCLUSIONS

Our data indicate that the SRGP has been successful for both reviewers and applicants based on program evaluations from participant feedback, the number of publications/abstracts resulting from the projects, and the improvement in grant scores in the pool of applicants who resubmitted. These data were echoed by a faculty member present at the review session in year 2, who, when asked for feedback regarding the session, commented via email:

I thought the reviewers were very professional. I was surprised at how often they had read some background literature or had searched for other published work on the experiments being proposed. It was gratifying to see how often reviewers asked their colleagues for advice on individual experiments or components of experiments, and how well everyone listened to each other's comments. The reviewers themselves seemed to learn a lot about what makes a proposal reviewed well and what makes it reviewed poorly; I am sure that none of them will submit their next grant with any typos or run-on sentences, for example. They also clearly learned a lot from each other, being able to compare their reviews from those of others. They learned how difficult and important it is to focus on the main goals of a proposal and to not be too influenced by technical issues that can be corrected.

As participation grows, other outcome measures can be explored, such as success in other grant-writing endeavors. It may be particularly interesting to explore the degree to which reviewers examine background literature to support their reviews, as the faculty advisor in year 2 observed during the review sessions. A final area of exploration for future years is the influence SRGP participation has had on reviewers’ and applicants’ understanding of the review process. This may be achieved through surveys or structured interviews performed before and after participation, to reduce reliance on retrospective reports.

In summary, we have presented here a unique student-run, student-reviewed, small research grants program. This program has provided research support to 13 graduate students. Above and beyond financial support, this program provided an opportunity for both reviewers and applicants to improve their writing skills, professional development, and understanding of the granting process.

ACKNOWLEDGMENTS

We thank the Office of Biomedical Graduate Education at GU Medical Center for their generous funding of the program, as well as the members of the Medical Center Graduate Student Organization executive board who helped launch the program. We also thank the 28 applicants and 24 reviewers who participated during the first 3 yr of the program. Finally, we thank Drs. Barry Wolfe and G. William Rebeck, who served as faculty advisors for the first and second years of the program, respectively. They provided insight into the NIH peer-review format and valuable feedback on areas for improving the program. P.A.F. received support from HD046388. S.B.D. received support from F31AG043309 and a National Science Foundation Graduate Research Fellowship, DGE-0903443.