Use of Feedback-Oriented Online Exercises to Help Physiology Students Construct Well-Organized Answers to Short-Answer Questions

Abstract

Postsecondary education often requires students to use higher-order cognitive skills (HOCS) such as analysis, evaluation, and creation as they assess situations and apply what they have learned during lecture to the formulation of solutions. Summative assessment of these abilities is often accomplished using short-answer questions (SAQs). Quandary was used to create feedback-oriented interactive online exercises to help students strengthen certain HOCS as they actively constructed answers to questions concerning the regulation of 1) metabolic rate, 2) blood sugar, 3) erythropoiesis, and 4) stroke volume. Each exercise began with a SAQ presenting an endocrine dysfunction or a physiological challenge; students were prompted to answer between six and eight multiple-choice questions while building their answer to the SAQ. Student outcomes on the SAQ sections of summative exams were compared before and after the introduction of the online tool and also between subgroups of students within the posttool-introduction population who demonstrated different levels of participation in the online exercises. While overall SAQ outcomes were not different before and after the introduction of the online exercises, once the SAQ tool had become available, those students who chose to use it had improved SAQ outcomes compared with those who did not.

INTRODUCTION

Many science disciplines require both a critical arsenal of factual knowledge and an in-depth conceptual understanding so that students can apply their knowledge and understanding to novel problems and contexts (Crowe et al., 2008; Mynlieff et al., 2014). The ability of adult learners to use what is learned during lectures to critically evaluate a novel situation and solve problems is widely recognized as an important goal of university-level teaching of physiology as well as other basic sciences such as biology and chemistry (Pratt, 1993; Zoller, 1993; Tanner and Allen, 2005; Levesque, 2011). Bloom’s revised taxonomy uses six key verbs (remember, understand, apply, analyze, evaluate, and create) to categorize levels of learning; each verb denotes a successively higher level of cognitive ability (Anderson et al., 2001). During the initial years of university, large class sizes and the introductory nature of course content necessitate that assessment of student learning largely take the form of multiple-choice questions (MCQs) that test primarily the lower-order cognitive skills (LOCS) of remembering and understanding. However, as students move through their years of postsecondary education into upper-division courses like physiology, they should be increasingly challenged to develop and display not only LOCS but also the higher-order cognitive skills (HOCS) of analyzing, evaluating, and creating as they confront unfamiliar problems and apply what they have previously learned toward the development of solutions (Zoller, 1993; Michael, 2006; Mayer, 2008; Orr and Foster, 2013; Mynlieff et al., 2014). These abilities fall under the domain of scientific literacy, a competence that should be an important focus of university undergraduate education (Norris and Phillips, 2003; Libarkin and Ording, 2012).

Students progressing through basic science programs often find it difficult to make the transition from answering MCQs that primarily assess remembering and understanding of individual pieces of information, essentially a passive activity, to developing well-worded answers that demonstrate their ability to both apply basic science principles and link them in a logical manner, which is an active process (Zoller, 1993; Bailin, 2002; Crowe et al., 2008). Higher-order learning involves the reorganization of new knowledge and understanding as it is transferred from working memory into long-term memory in a way that forges links with that which is already known and understood so that each student’s long-term memory can evolve as it integrates new components associated with a more complex understanding of scientific processes (Kirschner, 2002; Kirschner et al., 2006). Referred to as “generative processing” (Wittrock, 1992; Mayer, 2010), this type of higher-order learning can be facilitated (Chi et al., 1994) and assessed by asking students to construct concise, well-written answers to short-answer questions (SAQs). For students with limited experience in this type of evaluation, this can be challenging. Indeed, I have noted the following problems when marking answers to SAQs provided by students during previous years of summative physiology examinations: inaccurate information; information that, while correct, is not relevant; the omission of key points that would demonstrate an in-depth understanding of relevant concepts; and, finally, an inability to organize the answer logically. I concluded that students needed to practice developing well-organized and accurate answers to SAQs and that, as instructor, I needed to provide that practice before students encounter similarly styled questions on summative examinations (Gagné et al., 1992; Levesque, 2011).
Unfortunately, the large sizes of many undergraduate classes make it difficult to offer regular practice in developing explanatory answers and solving problems and, more importantly, to provide students with timely, answer-specific feedback on the written work they have prepared.

It is also important to match course-associated learning activities with summative assessment criteria so that, through practice, students might gain or improve their ability to use HOCS before their work is evaluated via high-stakes summative examinations (Sundberg, 2002; Tanner and Allen, 2004; Bissell and Lemons, 2006; Crowe et al., 2008). Indeed, studies have shown that assessment methodology influences the strategies used by students when preparing to write examinations (Scouller and Prosser, 1994). For example, if it is known that an examination will be exclusively MCQ-based, students will often prepare for it by using surface strategies such as the compilation and memorization of lists of factual information, because they know that assessment methods will be used that address primarily LOCS (Scouller, 1998). However, if students know that the summative assessment has been designed to test HOCS such as analysis, evaluation, and the provision of detailed explanations, then students will use learning strategies that involve the use of HOCS through in-class and out-of-class activities that support these approaches (Scouller, 1998; Crowe et al., 2008).

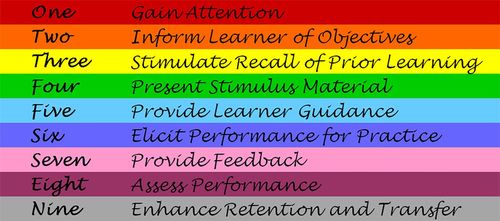

The purpose of this project was to develop an online tool that would allow students enrolled in a third year–level physiology course to practice building, step-by-step, well-organized and comprehensive answers to questions housed within clinical situations or specific physiological scenarios. The development of these interactive exercises was guided by Robert Gagné’s nine events of instruction (Figure 1) so that the ability of this tool to capture student attention and promote interactive, effective learning could be maximized (Gagné et al., 1992). Students will not use an online tool simply because it exists; rather, they must see value in it, including how it links to course objectives and has the potential to improve their summative assessment outcomes (Saunders and Gale, 2012). While it has been more than 20 yr since its original publication, Gagné’s list of instructional goals continues to influence instructional design, because it addresses basic principles, such as the need to motivate and engage learners as well as to guide them toward integrating new knowledge with that which is already known so as to enhance understanding, retention, and transfer (Kinzie, 2005; O’Byrne et al., 2008; Carnegie, 2013). The exercises developed for my study asked students to demonstrate their understanding of four important physiological concepts: 1) the regulation of metabolic rate by thyroid hormone, 2) the control of blood sugar by insulin, 3) the influence of altitude on the regulation of erythropoiesis, and 4) the intrinsic regulation of cardiac stroke volume. While the questions used for these online exercises were not identical to questions students subsequently encountered on midterm examinations, they were representative of the level of cognitive ability the students would be expected to exhibit in the short essay component of summative evaluations.
During the completion of each of the four online assignments, students were provided with guidance on answer construction and instantaneous feedback regarding the appropriateness of the items they had selected to include in their answers. At the end of the course, students were asked to complete an anonymous survey detailing their perceptions of the effectiveness of the online tool. In addition, student outcomes on SAQ sections of summative exams were compared between populations that had access to the online tool and those who did not and between subgroups of students who showed various levels of participation in the online SAQ assignments.

Figure 1. Robert Gagné’s nine events of instruction (Gagné et al., 1992).

METHODS

Exercise Creation

PHS3240 is a full-year, third year–level course in mammalian physiology that is team taught by a number of faculty members within the Department of Cellular and Molecular Medicine at the University of Ottawa. Systems within this course that are taught exclusively by the author include the endocrine and cardiovascular systems. Students learn about the endocrine system during the first part of the course and are assessed on that knowledge in exam 1. Similarly, the cardiovascular system is covered during the next section of the course, with summative assessment of that content during exam 2. Examination scores for this course are typically derived in equal parts from MCQs and SAQs, with each SAQ having a value of five to seven points.

Four interactive exercises were developed for students taking PHS3240, using the action maze software Quandary (Arneil and Holmes, 2009). This freeware can be downloaded without charge at www.halfbakedsoftware.com/quandary_download.php. Advantages of this software include the ability to send students back to retry a question if an incorrect answer is selected and to provide feedback that individually targets every answer choice, be it correct or incorrect. Furthermore, the scoring function of Quandary allows points to be awarded or deducted, as appropriate, and students to see their scores as they proceed through the exercise. Initial exercise planning involved the use of tables to organize the questions, the answer choices, the answer-specific explanatory feedback, and the marking scheme. Interactive exercises were then created by loading that information into Quandary, defining the navigation to be followed by the student, and finalizing the scoring. The first two exercises addressed the endocrine system and were completed by students in preparation for exam 1. The second pair of exercises targeted the cardiovascular system and was completed as students prepared for exam 2.

Each exercise opened with the presentation of a physiological scenario (e.g., training for a cycling competition at high altitude) or a clinical vignette (e.g., a patient displaying symptoms of Graves disease) accompanied by one or more essay-style questions for which students would normally be expected to compose a well-organized and concise written answer (Table 1A). However, rather than being asked to provide a written answer, a student was instead taken through a series of six to eight MCQs in which he or she developed an outline of his or her written answer by clicking on the “submit” button to select items that should be included in that answer and, by virtue of the choices he or she made, specifying the order in which that information should be presented. Distractors that were provided in the MCQs included incorrect information, information that was correct but did not apply to the question being answered, and information that was correct but should not be presented until later in the answer after the groundwork for that particular piece of information had been laid (Table 1A).


Feedback was provided for each answer choice, either correct or incorrect. If the answer was correct, the feedback provided a short corroborative explanation, sometimes accompanied by additional pieces of information to further consolidate the concept (Table 1B). If one of the distractors was chosen, the feedback explained why that answer was not the best choice, sometimes provided a hint, and always directed the student back to try again by having him or her click on the “Submit” button associated with that feedback (Table 1C). As an additional effort to reinforce guidance of student thinking (Gagné event 5), the last page of each exercise provided a summary of the correct answers and/or feedback in an effort to show students a worked example of an orderly outline of the elements that should be included in a complete answer to that SAQ (Table 2).
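The retry-with-feedback flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Choice` class, the `run_question` helper, and the sample answer text are hypothetical and are not taken from Quandary or from the actual exercises.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Choice:
    label: str
    correct: bool
    feedback: str  # every choice, right or wrong, carries its own feedback


def run_question(choices, picks):
    """Replay a student's picks for one question.

    Returns the feedback messages shown and whether the student advanced.
    A distractor sends the student back to retry, so the question is only
    left once a correct choice has been picked.
    """
    shown = []
    for pick in picks:
        choice = choices[pick]
        shown.append(choice.feedback)
        if choice.correct:
            return shown, True  # move on to the next question
    return shown, False  # still on this question


# Hypothetical content, not taken from the actual exercises.
question = [
    Choice("A", False, "Correct fact, but not relevant here. Try again."),
    Choice("B", False, "Relevant, but belongs later in the answer. Try again."),
    Choice("C", True, "Yes: this point opens the answer."),
]

feedback, advanced = run_question(question, picks=[1, 2])
```

In this sketch the three distractor types described in the text (incorrect, irrelevant, and premature information) differ only in the feedback they carry; the navigation rule is the same for all of them.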


In addition to the inclusion of the summary page, care was taken throughout the process of exercise construction to apply Gagné’s nine events of instruction (Figure 1), as detailed in the Reasoning annotations applied to the working document for exercise creation shown in Table 3. The example used is the endocrine SAQ exercise pertaining to the thyroid gland, and shaded Reasoning boxes shown throughout the table identify examples of specific application of Gagné’s instructional design principles. For example, the patient presenting with Graves disease was used as stimulus material at the beginning of the exercise to grab student attention (events 1 and 4) and provide an opportunity for application of knowledge with the goal of enhancing retention and transfer (event 9). Furthermore, the broad questions that appeared at the end of each case description informed students of the objectives to be addressed within that exercise (event 2). In addition, as detailed throughout Table 3, each of the individual MCQs with their answer choices and associated feedback repeatedly addressed Gagné’s remaining events by stimulating recall, providing practice and learner guidance, and supplying assessment and feedback for every answer selected. Finally, the series of MCQs was constructed in such a way that it guided student thinking as to the logical order in which correct items should appear in their answers (event 5).


Student Populations, Participation, and Feedback

Student enrollment in PHS3240 numbered 144 and 120, respectively, at the beginning of the 2010 and 2011 academic years. There was some attrition over the duration of the course, resulting in 139 and 108 students, respectively, writing exam 2 in the Winter terms of 2011 and 2012. The SAQ exercises were provided online to students using the assignment function of the Blackboard Vista (Blackboard, Washington, DC) course website. Students had 1 wk to complete each exercise and to submit their scores online using the course website assignment drop box. Each correct answer was worth one point, and this resulted in each assignment having a total possible score of six to eight points, depending on the number of questions in that assignment. While points were not usually deducted for an incorrect answer, on the rare occasion when an answer was selected that was associated with a serious error in fundamental reasoning, a single penalty point was deducted from the student’s score to emphasize that error. The software also allowed students to be blocked from choosing the same answer (correct or incorrect) more than once. Each of the four exercises contributed a possible 1% of the overall final mark for this course.
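The scoring rule described in this paragraph (one point per correct answer, an occasional single penalty point, and no repeated selections) might be expressed as follows. This is a minimal sketch, not Quandary's own scoring code; the function name, the `(question, choice)` tuples, and the sample data are assumptions made for illustration.

```python
def score_attempt(selections, correct, serious_errors=frozenset()):
    """Score one exercise from an ordered list of (question, choice) picks.

    correct        -- picks worth one point each
    serious_errors -- picks that cost a single penalty point (hypothetical
                      flag for an error in fundamental reasoning)
    Ordinary distractors neither add nor remove points.
    """
    seen = set()
    score = 0
    for pick in selections:
        if pick in seen:
            # Mirrors the block on choosing the same answer more than once.
            raise ValueError(f"choice {pick} was already selected")
        seen.add(pick)
        if pick in correct:
            score += 1
        elif pick in serious_errors:
            score -= 1
    return score


# Hypothetical two-question exercise.
correct = {(1, "C"), (2, "A")}
serious_errors = {(2, "D")}
selections = [(1, "B"), (1, "C"), (2, "D"), (2, "A")]
total = score_attempt(selections, correct, serious_errors)  # 0 + 1 - 1 + 1
```

With these hypothetical picks the student ends on one point out of a possible two, because the serious error on the second question cancels one of the two points earned.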

Student feedback was obtained at the end of the first year of implementation of the SAQ assignments using an anonymous survey that consisted of seven Likert-scale questions and a box for open-ended comments and suggestions. The survey collected data on student demographics, self-assessed level of participation in the assignments, perception of exercise value, and interest in having access to additional similar assignments. These data were compiled and analyzed using Microsoft Excel.

Analysis of Student Outcomes

The possible influence of online SAQ assignments on student outcomes was assessed by comparing student SAQ scores achieved during the 2 yr before implementation of the online exercises with those earned during the subsequent 2 yr, when students had access to these assignments. A marking rubric was used for each summative examination SAQ, and these rubrics, like the summary pages viewed by students upon the completion of each online exercise, consisted of short lists of required answer components. The same rubric was used each time a particular SAQ was included in a summative examination. Within each rubric, points were awarded not only for including the required answer elements but also for defining and/or explaining them completely and for applying them correctly to the solution of the SAQ. The statistical comparison of student outcomes was conducted using the independent-samples t test accompanied by Levene’s test for equality of variances. Possible influences of the level of participation in the SAQ assignments on student MCQ and SAQ outcomes were evaluated using analysis of variance (ANOVA) followed by the Tukey range test to identify statistically significant differences between MCQ mean scores and SAQ mean scores for the three levels of participation. For all statistical evaluations, differences were considered significant at p < 0.05.
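As an illustration of the ANOVA step, the sketch below computes the one-way F statistic for three participation groups using only the Python standard library. The scores are invented for the example and are not study data; obtaining a p value or running the Tukey range test would additionally require the F and studentized range distributions, which a statistics package would normally supply and which this sketch omits.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of score lists."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Variation of group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical SAQ scores (%) for students completing 2, 1, or 0 exercises.
groups = [[70, 75, 80], [60, 65, 70], [40, 45, 50]]
f_stat, df_b, df_w = one_way_anova_f(groups)
```

A large F relative to its degrees of freedom indicates that at least one group mean differs; the post hoc test then identifies which pairs of participation levels differ.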

RESULTS

Level of Participation

During the 2010–2011 academic year, all of the 144 students enrolled in PHS3240 wrote exam 1. A small number of students dropped the course, resulting in 139 of them (96.5%) remaining to write the subsequent exam (exam 2) during the Winter term. Similarly, 120 (100% of students) completed exam 1 during the 2011–2012 academic year with enrollment dropping to 110 (91.7%) before exam 2. In general, these students showed a high level of participation in the online assignments. Close to 90% (87.5% and 89.2%, respectively, for 2010 and 2011) of students completed the first pair of exercises while studying the endocrine system. However, while 95% of students also completed both of the cardiovascular system–associated exercises during the 2011 Winter term, the level of participation dropped to 82.7% for students given the same assignments during the subsequent academic year. A very small number of students (1.46% and 3.64%, respectively, in each of the two successive academic years) chose not to do any of the exercises at all. In contrast, 84.2% of the 139 students who completed both exams during 2010–2011 participated fully in this aspect of the course, and 74.5% of the 108 students who completed PHS3240 during the second academic year finished all four exercises.

Student Feedback

Survey data were obtained from 71.9% of students who completed PHS3240 during the 2010–2011 academic year. The survey respondents reported that they had completed either three (7%) or all four (93%) of the exercises. The majority of respondents (86%) were in their third or fourth year of undergraduate study, and while many (78%) reported prior experience with SAQs on 50% or more of their summative exams, for the rest of the students (22%), this was their first attempt at composing written work under conditions of exam-related stress.

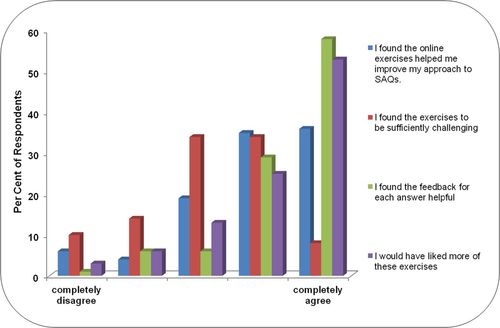

Figure 2 summarizes student feedback regarding the SAQ exercise content and design. The majority of respondents felt that the online exercises did help them improve their approach to SAQs. Interestingly, there was a rather uniform distribution of students with regard to their perception of the level of difficulty of the exercises. While 58% of students showed partial to complete agreement with the statement that the exercises were sufficiently challenging, the remainder (42%) felt that the exercises could have been constructed to be even more demanding. Students (87%) were especially appreciative of the feedback that was provided for both correct and incorrect answer choices. Finally, the majority of students (78%) indicated they would have welcomed the opportunity to complete even more of these exercises before writing summative examinations.

Figure 2. Feedback regarding the SAQ assignments collected from students during the first year (2010–2011) of their implementation (n = 100 respondents from 139 surveyed).

Student Outcomes

A comparison of student outcomes on SAQs pertaining to the endocrine and cardiovascular systems (exams 1 and 2, respectively) between students writing exams during the 2 yr before introduction of the online SAQ exercises and during the subsequent 2-yr period when they did have access to formative online SAQ assignments revealed no significant effect (p > 0.05) of the exercises on these measurements of student success (Table 4). However, while not significant (p = 0.098), it should be noted that there was a trend for students who had access to the formative exercises to perform better on the endocrine system SAQs in exam 1 (69.0 ± 1.40%) than the students who did not have access to the SAQ assignments (65.3 ± 1.70%). In contrast, student outcomes on the cardiovascular system SAQs (exam 2) were virtually identical (p = 0.907) between the two student populations.

| Student population | Exam 1: n | Exam 1: SAQ score (%)a | Exam 2: n | Exam 2: SAQ score (%)a |
|---|---|---|---|---|
| No SAQ exercises | 165 | 65.3 ± 1.70 | 156 | 72.7 ± 1.55 |
| Access to SAQ exercises | 264 | 69.0 ± 1.40 | 247 | 73.0 ± 1.42 |
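The reported comparison can be roughly checked from the summary values alone. The sketch below assumes that the ± values are standard errors of the mean, which the table as extracted does not state, and it substitutes an unpooled (Welch-form) statistic with a normal approximation for the pooled t test and Levene's test used in the study. It is a plausibility check, not a reproduction of the original analysis.

```python
import math


def compare_from_summary(mean_a, sem_a, mean_b, sem_b):
    """Approximate two-sided comparison of two means from mean and SEM.

    Assumes the +/- values are standard errors of the mean (an assumption)
    and uses a normal approximation, reasonable only for large samples.
    """
    se_diff = math.sqrt(sem_a ** 2 + sem_b ** 2)
    t = (mean_a - mean_b) / se_diff
    p = math.erfc(abs(t) / math.sqrt(2))  # two-sided normal tail area
    return t, p


# Access to SAQ exercises vs. no SAQ exercises, values from the table.
t1, p1 = compare_from_summary(69.0, 1.40, 65.3, 1.70)  # exam 1
t2, p2 = compare_from_summary(73.0, 1.42, 72.7, 1.55)  # exam 2
```

Under these assumptions the approximate p values come out near 0.09 for exam 1 and near 0.89 for exam 2, in line with the reported p = 0.098 and p = 0.907; the small differences would be expected from rounding of the tabulated means and from the different test used.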

When the focus was shifted to only the student populations provided with online SAQ exercises, it was found that the level of participation in formative assignment completion was strongly associated (p < 0.001) with student SAQ scores (Table 5). Student outcomes on the endocrine system (exam 1) and cardiovascular system (exam 2) SAQs were compared with their overall MCQ scores in each of these exams in an effort to isolate an effect of the formative exercises on the ability of students to formulate well-organized, complete, and accurate written answers. With regard to exam 1, the small number of students who completed only one SAQ exercise or completed neither had poorer outcomes (p < 0.05 and p < 0.001, respectively) when answering the endocrine SAQs in exam 1 compared with those students who participated fully in the online SAQ work. With regard to the MCQ outcomes, a significant difference was noted (p < 0.001) only when comparing the group who participated fully in the online work with those who did not participate at all. Similarly, for exam 2 and with regard to both SAQ and MCQ outcomes, significant effects of participation in the online assignments on summative examination scores were noted only when comparing those students who participated completely with those who did not attempt either assignment (p < 0.001 and p < 0.05, respectively). It should also be noted that, when student performance on the SAQ and MCQ portions of each of the two exams was compared, these values were within 10% of each other for those students completing both assignments, whereas for students who completed neither assignment, outcomes on the SAQ portion were lower (p < 0.05) than those on the MCQs by 15–23%, suggesting a stronger influence of participation in the online work on student ability to answer summative SAQs.

| SAQ exercises to prepare for | Number of exercises completed | n | SAQ score (%)a | Overall MCQ score (%)a |
|---|---|---|---|---|
| Exam 1 | 2 | 233 | 71.2 ± 1.42b,c | 72.8 ± 0.99d |
| Exam 1 | 1 | 21 | 58.3 ± 5.48b | 66.9 ± 3.51 |
| Exam 1 | 0 | 10 | 39.8 ± 5.38c | 54.8 ± 2.62d |
| Exam 2 | 2 | 216 | 74.7 ± 1.45e | 84.4 ± 0.98f |
| Exam 2 | 1 | 20 | 66.8 ± 5.62 | 79.0 ± 3.46 |
| Exam 2 | 0 | 11 | 50.0 ± 6.65e | 72.5 ± 4.45f |
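The gap between MCQ and SAQ scores at each participation level follows directly from the tabulated means. The short sketch below simply subtracts them; the dictionary layout and variable names are chosen for illustration, and the values are the means reported in the table.

```python
# (exam, exercises completed) -> (mean SAQ score %, mean MCQ score %)
table5 = {
    ("Exam 1", 2): (71.2, 72.8),
    ("Exam 1", 1): (58.3, 66.9),
    ("Exam 1", 0): (39.8, 54.8),
    ("Exam 2", 2): (74.7, 84.4),
    ("Exam 2", 1): (66.8, 79.0),
    ("Exam 2", 0): (50.0, 72.5),
}

# MCQ minus SAQ, in percentage points, rounded to one decimal place.
gaps = {key: round(mcq - saq, 1) for key, (saq, mcq) in table5.items()}

for (exam, completed), gap in gaps.items():
    print(f"{exam}, {completed} exercises completed: MCQ - SAQ = {gap}")
```

For students who completed both exercises the gap stays under 10 percentage points in each exam, while for students who completed neither it is 15.0 (exam 1) and 22.5 (exam 2) percentage points.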

DISCUSSION

These interactive exercises allowed students to practice important HOCS associated with the development of well-organized answers to physiology-based questions before writing their summative examinations. While these exercises did still rely on the use of MCQs, these were MCQs that prompted students to take steps in the construction of explanations pertaining to their SAQ answer by planning the answer foundation, evaluating the relevance of various pieces of physiological information, selecting appropriate information to include, and forging links between related concepts, rather than simply selecting a single answer to a fact-based question. Hence, these exercises provided opportunities for students to use HOCS such as analyze, evaluate, apply, and create as they worked their way through the series of MCQs associated with each assignment. The ability of Quandary to support the provision of a limitless number of answer options, to allow one or more correct answer choices, to either reward or penalize students based on the answer selected, and to provide immediate instructive feedback for every possible answer choice allowed the construction of interactive online exercises that guided students toward the logical organization of new knowledge, the creation of appropriate links, and the development of explanatory arguments as they practiced knowledge application within appropriate clinical and real-life contexts (Arneil and Holmes, 2009). While there are conflicting views in the literature regarding the amount of guidance that should be provided to students, ranging from purely constructivist approaches to a defined curriculum supported by didactic lectures, a strong case has been made for the provision of a reasonable level of guidance that is specifically geared toward supporting the cognitive processing needed during that particular learning process (Mayer, 2004; Kirschner et al., 2006). 
The provision of learner guidance is also a key instructional design principle that forms the fifth of Robert Gagné’s nine events of instruction (Gagné et al., 1992). These formative exercises also thoroughly addressed the remaining eight of Robert Gagné’s events of instruction by providing feedback-associated opportunities for students to practice both the application of their new knowledge and the organization of their reasoning when doing so. Especially worthy of note was the use of real-life clinical and physiological scenarios to capture attention, motivate learners, and provide contextual relevance. Finally, and with regard to Gagné’s seventh and ninth events, the provision of immediate instructional feedback that addressed both the correct answer and the distractors, in contrast to simply an indication of whether the answer chosen is correct or incorrect, has been shown to be important to students and to promote both knowledge retention and transfer (Moreno, 2004; Mason and Rennie, 2008; Guy et al., 2014).

The high level of student participation in these formative assignments demonstrated that students recognized the educational value of the SAQ exercises, despite the fact that each exercise contributed only 1% toward the final overall grade. The practice questions directed students to apply HOCS, referred to as generative cognitive processing, as they analyzed specific situations, applied their recently acquired physiological knowledge to an understanding of the problem to be addressed, and justified the concepts they applied or courses of action they suggested (Anderson et al., 2001; Clark and Mayer, 2008). Indeed, a strong case has been made for a role of self-generation of explanations in improving deep learning and understanding by students (Chi et al., 1994), and adult learners have frequently been described as being self-directed and learning best when conditions are task oriented (Knowles & Associates, 1984; Pratt, 1993). An additional consolidation-promoting feature of these exercises was that the final screen seen by students provided a summary of the correct answers to all the questions in that exercise, in this way providing them with a worked example of a good outline to be followed when composing a written answer. The provision of worked examples has been reported to promote learning by allowing a greater proportion of the limited capacity of short-term memory to be focused directly on the learning process itself (Kirschner et al., 2006; Clark and Mayer, 2008).

The majority of students reported that they found the SAQ exercises to be helpful, benefited from the feedback, and would like to have access to more of these formative exercises. It therefore did appear that provision of these online formative exercises improved the quality of the learning experience for these students and addressed a need to provide them with sufficient opportunities to participate in activities that would allow them to develop their critical-thinking and problem-solving skills (Crowe et al., 2008; Saunders and Gale, 2012). An important component of higher learning is the development of metacognitive skills—the ability to recognize and reflect on mistakes in order to acquire a deeper level of learning that can be applied to novel situations (Biggs, 1988; Livingston, 1997). The instantaneous feedback provided to students as they worked through the exercises, as well as opportunities to reflect and select a better answer, promoted the attainment of some important metacognitive goals (Livingston, 1997; Mynlieff et al., 2014). While about half of the respondents suggested that the exercises could have been more challenging, it is important to realize that the physiological concepts being addressed in each exercise required similar levels of problem-solving and critical-thinking skills as the SAQs these students would later encounter on their summative exams. For example, the second SAQ formative exercise asked students to identify diabetic ketoacidosis as the cause of symptoms displayed by a patient who arrived at the emergency room and to describe the physiological disruptions responsible for those symptoms, while a SAQ used repeatedly on summative exam 1 asked students to discuss the physiological cause of type 1 diabetes, certain key functions of insulin, and an important test used to monitor the management of a patient with this disease. 
Both questions targeted content that had been discussed thoroughly during lectures and required students to display an understanding of the key role of insulin in regulating blood sugar levels. However, it is also important to note that the MCQ-based design of the exercises, by taking students step-by-step in the formulation of their answers, would have provided some cues that could trigger information recall. The exercises were also open-book assignments, and students were prompted to go back and try each question until it was answered correctly. These features, combined with the absence of exam-related stress or time constraints, would have promoted student well-being and achievement as they worked through the questions, leading them to perhaps think that the questions written under these conditions were easier than those encountered during summative examinations (Orr and Foster, 2013). And, most importantly, they were gaining practice in organizing and expressing their physiology-based reasoning.

Interestingly, while most students did report that they found the online assignments improved their approach to SAQs, it was not possible to demonstrate a significant effect of the introduction of these exercises on overall summative SAQ outcomes, although it should be noted that there appeared to be a nonsignificant trend toward improved outcomes for exam 1. It is frequently difficult to show a significant effect of a single modification to teaching strategy on student outcomes when average scores are compared between different years for a number of reasons, many of which apply to the current study (O’Byrne et al., 2008). Class composition changes each year, resulting in student populations with differing levels of motivation, ability, and background knowledge. While the examination questions target similar course objectives, the questions themselves do vary from year to year, and each one may not always be at precisely the same level of difficulty. Student examination schedules fluctuate, allowing the introduction of extra challenges posed by clustering of summative examinations within a short time period and the necessity for some examinations to be written in the evening, when students are tired. And, in this study, average class size increased by more than 50% just before the introduction of the SAQ exercises, and a possible confounding detrimental effect of increased class size on the learning success of some students cannot be ruled out (Lindsay and Paton-Saltzberg, 1987). However, the trend toward improved SAQ outcomes noted for exam 1 is encouraging. One would expect that any benefit gained from being guided in the development of an outline for a SAQ answer and seeing worked examples of answer outlines would be greatest with the first exposure to these exercises and that the effect would become less prominent as students became more accustomed to the process of formulating written answers (Libarkin and Ording, 2012).

In contrast, among only those students who had access to the interactive SAQ exercises, there was a strong association between the level of student participation in the online assignments and subsequent summative examination outcomes, particularly with regard to the SAQ portions of the examinations. It should be noted that completion of each exercise not only provided students with practice in problem-solving and answer-organization skills but also prompted them to reflect on course content and to consolidate their understanding of a topic when applying it in context. Hence, both learning outcomes, developing an approach to SAQ answer writing and obtaining practice in the application of new knowledge, may have contributed to the improved student outcomes, especially on the SAQ portion of the examinations. But these data must also be interpreted with some caution. The majority of students completed both sets of exercises, resulting in very different sample sizes among the three populations evaluated. Furthermore, when considering the lower average exam scores for students who chose not to complete the online exercise(s), one cannot ignore a very likely positive association between the level of student participation in assignments and the motivation to work hard and/or interest in course content when preparing for summative examinations. Even so, the positive reception of these assignments by students and the beneficial outcomes noted for students who participated fully in assignment completion suggest that this online work has value and that it should be continued and perhaps even expanded.

Finally, why put so much effort into a SAQ portion of an examination and the development of student answering skills when that is so much more labor-intensive than computer-graded MCQ examinations? Preparation for an examination that will require students to write, expressing their understanding of concepts and their ability to analyze and apply them in context, will prompt students to use deep learning approaches (Chi et al., 1994; Scouller, 1998). Furthermore, SAQs can distinguish students who can recognize correct answers to MCQs, either through memorization of the factual information (surface learning) or through an educated guess after the elimination of some obvious distractors, from students who have acquired an in-depth understanding of the course content and can produce or synthesize their own clear explanations and justifications (Scouller, 1998; Crowe et al., 2008). For example, one study conducted by the author found that a significant proportion of students who selected the correct answer to certain physiology-based MCQs on summative examinations were unable to correctly apply physiological principles to justify that answer choice when subsequently asked to support it with a written explanation (Carnegie, 2006).

In conclusion, when facing the challenges of teaching large classes, instructors can use online exercises built on a series of feedback-associated MCQs to direct student thinking and provide practice in applying HOCS by guiding students through the relevant concepts to be explained, linked, and applied to the solution of a particular problem. The use of appropriate clinical and physiological scenarios can effectively engage student interest, and the provision of immediate feedback and opportunities to retry questions promotes reflection and an in-depth understanding of physiological concepts, their interrelationships, and their application. Similar approaches could work well for other basic sciences such as biology, chemistry, physics, and pathophysiology, because these online self-testing exercises promote scientific literacy, an important component of university-level education.

ACKNOWLEDGMENTS

This project was funded by a University of Ottawa Faculty Award for Excellence in Education to the author.