A Measure of College Student Persistence in the Sciences (PITS)

Abstract

Curricular changes that promote undergraduate persistence in science, technology, engineering, and mathematics (STEM) disciplines are likely associated with particular student psychological outcomes, and tools are needed that effectively assess these developments. Here, we describe the theoretical basis, psychometric properties, and predictive abilities of the Persistence in the Sciences (PITS) assessment survey designed to measure these in course-based research experiences (CREs). The survey is constructed from existing psychological assessment instruments, incorporating a six-factor structure consisting of project ownership (emotion and content), self-efficacy, science identity, scientific community values, and networking, and is supported by a partial confirmatory factor analysis. The survey has strong internal consistency (Cronbach’s alpha: α = 0.96) and was validated using standard simple and multiple regression analyses. The regression analyses demonstrated that the factors of the PITS survey were significant predictors of the intent to become a research scientist and, as such, potentially valid for the measurement of persistence in the sciences. The PITS survey provides an effective method for measuring the psychological outcomes of undergraduate research experiences relevant to persistence in STEM and offers an approach to the development and validation of more sophisticated assessment tools that recognize the specificities of the type of educational opportunities embedded in a CRE.

INTRODUCTION

The 2012 report to the president Engage to Excel: Producing One Million Additional College Graduates with Degrees in Science, Technology, Engineering, and Mathematics (President’s Council of Advisors on Science and Technology [PCAST], 2012) crystallized the central issue facing science, technology, engineering, and mathematics (STEM) education in the United States. Mainly, what changes can be made to science education in postsecondary institutions across the United States that will facilitate higher rates of retention of students in STEM majors? This question, which has now been echoed in a wide series of reports and publications, posits a connection between two basic components: 1) course design, including ways of teaching, teacher training, and course components; and 2) the departmental and institutional retention of students in a STEM major.

It is well established that undergraduate STEM majors are lost not because of lack of talent but because of the way their courses are taught (Seymour and Hewitt, 1997). There is ample literature to suggest that, when courses are taught in more active and engaging ways, students are more engaged, and more learning happens (Handelsman et al., 2007). In relation to undergraduate laboratory courses, course-based research experiences (CREs) are being developed to provide authentic and engaging research experiences (Hanauer et al., 2006; Auchincloss et al., 2014; Corwin et al., 2015). These changes to the teaching approaches to laboratory and lecture courses can influence student learning experiences and potentially influence the likelihood of students staying in a science major.

The way educational changes influence retention rates can be hypothesized to pass through changes in the psychological self-understandings and professional positioning of students (Chemers et al., 2011). The decision to stay in the sciences, like any other life–career decision, has underpinning psychological components (Chemers et al., 2011; Estrada et al., 2011). In turn, the psychological states of students can be hypothesized to result from specific educational experiences. As such, the way STEM education is conducted influences the psychological state of the student in ways that can promote the likelihood of making the decision to stay in STEM. In this scenario, understanding and measuring those psychological states is a significant concern, and ultimately, it is the psychological state and self-positioning of the student that directs his or her decision to stay in the sciences (Estrada et al., 2011). Measures of this mediating component, the psychological state of the student, are thus a key part for answering the STEM student retention question posed by the PCAST report.

This paper addresses the measurement of psychological states of students relevant for life–career decisions in the ongoing pursuit of a STEM major following participation in undergraduate laboratory courses. We present the theory, psychometric properties, and initial predictive abilities of the student Persistence in the Sciences (PITS) survey. The survey was constructed from a series of existing instruments that have looked at different psychological components relevant to science education and was then validated for its ability to address persistence. The PITS survey is designed for use in conjunction with the development of CREs. It offers a way of assessing the consequences of these courses in terms of student outcomes and retention potential. The analysis we present is a validation study of the PITS survey. The aim is to make the PITS instrument available for those science educators and researchers who are currently developing new innovative courses aimed to enhance STEM retention rates. We offer evidence not only that the instrument provides a way of measuring relevant variables that underpin student persistence decisions but also evidence on the ways in which these measures may be linked to persistence. The paper as a whole also offers an approach to the development and validation of more sophisticated assessment tools that recognize the specificities of the type of educational opportunities embedded in a CRE.

A Psychological Measurement Model of Persistence

The model we propose and evaluate here resulted from three developments relating to the aim of enhancing retention in undergraduate science majors: the pedagogical development of course-based research experiences (CREs; Hanauer et al., 2006; Auchincloss et al., 2014; Corwin et al., 2015); the development of a series of assessment tools focusing on undergraduate research experiences and retention (Chemers et al., 2011; Estrada et al., 2011; Hanauer and Dolan, 2014; Hanauer and Hatfull, 2015); and the development of a theoretical orientation that integrated scholarship relating to persistence (Graham et al., 2013). At its most basic level, the model suggests that retention can be improved through the usage of CREs; that these educational experiences result in a series of measurable psychological states; and that, if desirable levels of these states exist, then the likelihood that these students will stay in the sciences will increase.

Previous research has proposed a series of potential psychological outcomes of research experiences that are worth considering within a broader model of persistence in the sciences. First, and most directly related to current developments in the pedagogy of CREs, is the variable of project ownership. The concept of project ownership emerged directly from the educational work conducted in relation to the development of a large and well-established CRE (Hatfull, 2010) and was validated as a measurement variable in relation to a series of other research experiences and CREs (Hanauer et al., 2012; Hanauer and Dolan, 2014). Based on analyses of dimensionality, project ownership was found to differentiate into two factors: the content scales and emotional scales of project ownership (Hanauer and Dolan, 2014). The importance of project ownership as a variable is that it is an interactional variable that reflects the student’s self-positioning in relation to the experience of the undergraduate research laboratory course. The construct of project ownership includes aspects of engagement, agency, personal connection, the recognition of community and disciplinary value, and positive emotive responses. Validation studies of project ownership have shown it to be particularly sensitive to the differentiation of CREs from traditional laboratories and reflective of student responses to pedagogical change in which they are given more agency and ownership over their research projects (Hanauer et al., 2012; Hanauer and Dolan, 2014; Stanley et al., 2015). Importantly, project ownership has also been shown to be predictive of the degree to which students discuss their research with others (Hanauer and Hatfull, 2015).

Previous work by both Chemers et al. (2011) and Estrada et al. (2011) has established the importance of three additional variables for the PITS survey: self-efficacy, science identity, and scientific community values. The work done by Chemers and his colleagues was directed at understanding the mechanisms of retention in science majors for underrepresented minority students. The researchers hypothesized and then tested the idea that certain psychological outcomes of science education experiences predicted a commitment to stay in the sciences. Self-efficacy, as described by Chemers et al. (2011), assesses the students’ confidence in their abilities to function as scientists, and science identity assesses the degree to which students see being a scientist as part of who they are. For Chemers et al. (2011), self-efficacy and science identity were found to be mediators of the commitment to stay in the sciences for undergraduate science students.

Like Chemers et al. (2011), Estrada et al. (2011) were interested in modeling the mechanisms for retaining underrepresented minority students in science-related undergraduate majors. Estrada and her colleagues built on and extended the work of Chemers and his colleagues and proposed and tested an additional psychological variable: the internalization of scientific community values. Estrada et al.’s (2011) analyses found that self-efficacy, science identity, and scientific community values were all predictive of extended integration in the sciences. However, self-efficacy was less influential than the other two variables and did not predict the intention to stay in the sciences for all groups of undergraduates. In Estrada et al.’s (2011) study, science identity and the internalization of scientific community values offered the best predictors of long-term retention in the sciences. Accordingly, the variable of identification with scientific community values was added to the PITS survey.

Community involvement is an important component of the CRE. Chemers et al. (2011) evaluated community involvement using a set of scales exploring student participation in different types of community events and found these scales to be predictive of student self-efficacy. In more recent work, Hanauer and Hatfull (2015), using a simple measure of community interaction termed “networking,” explored the outcomes of research experiences in terms of the persons with whom the students discussed their research. As explained by Hanauer and Hatfull (2015), a simple applied linguistic principle underpinned the proposed scales: a network at its most basic level is a group of people with whom you converse about a particular topic. Accordingly, asking students about the degree to which they discuss their research with different conversation partners in their personal, social, and disciplinary contexts provides a way of assessing the types of networks within which their research is situated. Networking, defined in this way, was found to differentiate between CRE and traditional lab experiences and to be predicted by the project ownership scales. Specifically, increased levels of project ownership facilitated increased discussion with personal, social, and disciplinary partners.

As conceptualized and operationalized here, the PITS survey integrates six existing tools: project ownership–content, project ownership–emotion, self-efficacy, science identity, scientific community values, and networking. The argument is that together these six sets of scales provide a description of the psychological states that characterize being a scientist, and the measurement of these states may offer predictive value in terms of student persistence in the sciences. Previous work on these scales has already shown the value of assessing self-efficacy, science identity, and scientific community values in predicting retention (Chemers et al., 2011; Estrada et al., 2011). The PITS survey extends this previous work in several ways: 1) It provides a broader set of measures that characterize being a scientist. 2) Through the addition of project ownership–content and emotion scales, it directly connects to and measures outcomes of authentic research experiences. 3) By adding the networking scales, it directly addresses the community-building aspects of scientific activity.

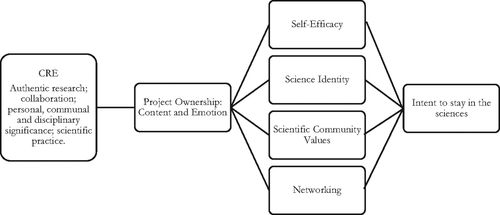

Importantly, the specific composition of the PITS survey suggests a very particular model of persistence enhancement through CREs. The model starts from the assumption that students are situated within educational laboratory courses that provide authentic research experiences. Project ownership–content and project ownership–emotion items have been shown to be sensitive to the differentiation between CRE and traditional laboratory courses, and the same items reflect a very specific self-positionality of the student. Project ownership represents a moment in which the student positively engages with the science, seeing personal, community, and disciplinary values in his or her personal research. The development of project ownership can be seen as foundational to the development of the other psychological states relevant to the intent to stay in the sciences. Basically, the development of higher levels of project ownership directly resulting from engineered aspects of educational design of the CRE should translate into higher levels of all the subsequent psychological states of self-efficacy, science identity, scientific community value, and networking. The development of high levels of these states should then lead to increased intent to stay in the sciences, which in turn should mean that a higher percentage of these students actually graduate within the science major and perhaps continue to graduate studies in the sciences. Figure 1 presents a schematic model of this potential relationship and, as can be seen in this figure, the model suggests that the relationship between project ownership and the intent to stay in the sciences is mediated through the other psychological states.

FIGURE 1. Model of the psychological outcomes of CREs leading to the intention to stay in the sciences. Schematic description of the relationship between the aspects of a CRE educational program, project ownership, the psychological outcomes of a CRE (self-efficacy, science identity, scientific community values, and networking) and the intent to stay in the sciences.

From a theoretical perspective, the model presented here is closest to the integrative research summary of the persistence framework (Graham et al., 2013). Couched within social, cognitive, and educational psychology, this framework focuses on the importance of increasing retention by working through student agency, motivation, and engagement. In this framework, several psychological components have been suggested as important for staying in the sciences. These include motivation, self-efficacy, engagement, and professional identification, related such that learning and professional identification increase confidence and, consequently, motivation, which in turn spurs academic success and identity as a scientist (Graham et al., 2013). Persistence is thus associated with a cyclical interaction between science education experiences, psychological and sociological outcomes in the student, and future science-related career choices, with each one reinforcing the others. The persistence framework also specifies the importance of early research experiences and offers further theoretical support to the position taken here in terms of the proposed model and measurement of student persistence in the sciences.

Research Questions and Analytical Approach

The aim of the current paper is to present the theory, psychometric properties, and initial predictive abilities of the PITS survey. Specifically, we address the following questions:

What are the psychometric properties (dimensionality, reliability, and validity) of the PITS survey?

Does the PITS survey predict persistence in the sciences?

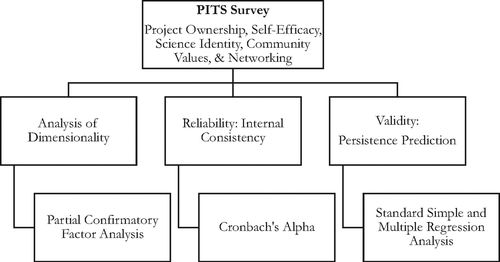

In addressing the dimensionality, reliability, validity, and predictive value of the PITS survey, a study with several different analyses was conducted. These included a partial confirmatory factor analysis (PCFA), reliability analysis, and standard simple and multiple regression analyses. Figure 2 summarizes the overall analytical approach.

FIGURE 2. Study design and analytical procedures. Outline of the three stages used in the validation of the PITS survey. The study addressed dimensionality, reliability, and validity using a PCFA, Cronbach’s alpha, and standard simple and multiple regression analyses.

The first analysis was designed to look at issues of dimensionality of the PITS survey and used a PCFA approach. A PCFA (Gignac, 2009) is a relatively new technique for assessing dimensionality and sits between the more open, exploratory factor analysis (EFA) and the more definitive confirmatory factor analysis (CFA). All three techniques (EFA, PCFA, and CFA) have a similar aim of providing insight into the correspondence of observed (survey) items and underpinning, latent (unobserved) constructs and offer some specification of the dimensions that are explored by a given instrument. A PCFA uses the open approach of an EFA for the assignment of items to factors and, accordingly, does not restrict the cross-loadings of items to one factor. A PCFA also uses the confirmatory aspect of a CFA by calculating close-fit indexes for the proposed solution. Absolute close-fit indexes utilize information from the implied model solution and determine how well the a priori model fits the data. For the current analysis, the root-mean-square error of approximation (RMSEA) was calculated. Incremental (or relative) close-fit indexes compare chi-square data from both the null and implied models. For the current analysis, the normed-fit index (NFI; Bentler and Bonett, 1980), the Tucker-Lewis index (TLI; Tucker and Lewis, 1973), and the comparative fit index (CFI; Bentler, 1990) were calculated. While standards vary in terms of what is an acceptable outcome, a better-fitting model should have RMSEA values of 0.08 or less and NFI, TLI, and CFI values approximating 0.95 (Hu and Bentler, 1999). The use of the PCFA in relation to the PITS survey allows a close consideration of the relations between items and emergent factors across the specific items of the existing tools, an evaluation of the dimensionality of the whole tool that emerges from this, and some indication of the quality of the proposed structure.
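The close-fit indexes named above can all be derived from the chi-square statistics of the null and implied models. The following is a minimal sketch of the standard textbook formulas, not the analysis code used in this study. The function name `fit_indexes`, its argument names, and the numeric inputs are hypothetical and chosen only for illustration, and the sketch assumes the RMSEA variant that divides by N − 1 (some software divides by N).

```python
import math


def fit_indexes(chi2_null, df_null, chi2_model, df_model, n):
    """Return RMSEA, NFI, TLI, and CFI from null- and implied-model chi-squares.

    All names are illustrative; n is the sample size.
    """
    # Noncentrality estimates (chi-square minus df), floored at zero.
    d_model = max(chi2_model - df_model, 0.0)
    d_null = max(chi2_null - df_null, 0.0)

    # Absolute index: misfit per degree of freedom, scaled by sample size.
    rmsea = math.sqrt(d_model / (df_model * (n - 1)))

    # Incremental indexes: improvement of the implied model over the null model.
    nfi = (chi2_null - chi2_model) / chi2_null
    ratio_null = chi2_null / df_null
    ratio_model = chi2_model / df_model
    tli = (ratio_null - ratio_model) / (ratio_null - 1.0)
    denom = max(d_null, d_model)
    cfi = 1.0 - d_model / denom if denom > 0 else 1.0

    return {"RMSEA": rmsea, "NFI": nfi, "TLI": tli, "CFI": cfi}


# Hypothetical chi-square values, NOT taken from the PITS data.
example = fit_indexes(chi2_null=1000.0, df_null=45,
                      chi2_model=60.0, df_model=25, n=200)
print({name: round(value, 3) for name, value in example.items()})
```

Lower RMSEA values and higher NFI, TLI, and CFI values indicate a closer fit, which is why the thresholds above point in opposite directions.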

Following the analysis of dimensionality, the reliability of the PITS survey was addressed. Internal consistency was assessed by calculating Cronbach’s alpha for the whole survey and for each of its constituent parts.
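For readers unfamiliar with the statistic, Cronbach’s alpha compares the sum of the individual item variances with the variance of the total score. The sketch below uses the standard formula with Python’s standard library; the function name `cronbach_alpha` and the ratings are hypothetical and are not PITS data.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item ratings."""
    k = len(responses[0])  # number of items
    # Sample variance of each item across respondents.
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical ratings from four respondents on two items.
ratings = [[2, 3], [4, 4], [5, 6], [7, 7]]
alpha = cronbach_alpha(ratings)
print(round(alpha, 3))
```

Alpha approaches 1 when items vary together across respondents, so that most of the variance in the total score is shared between items.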

The final set of analyses addressed the validity of the PITS survey in terms of its ability to measure, predict, and model the self-reported intent to become a research scientist. Standard simple and multiple regression analyses were conducted to explore the ability of the variables on the PITS survey to predict the intent to become a research scientist.
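As an illustration of what a simple regression of this kind estimates, the sketch below fits an ordinary least squares line with one predictor and reports the share of outcome variance explained. It is a generic example under stated assumptions: the function name `simple_regression`, the variable names, and the scores are hypothetical and do not reproduce the study’s data or software.

```python
def simple_regression(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sums of squares and cross-products around the means.
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r_squared = (sxy ** 2) / (sxx * syy)  # share of variance in y explained by x
    return slope, intercept, r_squared


# Hypothetical scores: a factor score (x) and an intent rating (y).
factor_score = [1, 2, 3, 4, 5]
intent = [2, 4, 5, 4, 5]
slope, intercept, r_squared = simple_regression(factor_score, intent)
print(round(slope, 2), round(intercept, 2), round(r_squared, 2))
```

A multiple regression extends the same idea to several predictors at once, estimating each factor’s contribution while holding the others constant.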

METHODS

Participants

The participants in this study were 323 undergraduate students enrolled in nine different biology laboratory courses at the same research university situated in western Pennsylvania. Student participation in the survey was requested through course instructors. The average participation rate by class for students in this sample was 42.5%. Students were not offered any incentive to participate in the survey. Demographic information relating to the participants is presented in Table 1.

| Characteristic | n | % |
|---|---|---|
| Gender | | |
| Male | 112 | 35 |
| Female | 211 | 65 |
| Class | | |
| First year | 191 | 59 |
| Sophomore | 34 | 10 |
| Junior | 12 | 4 |
| Senior | 86 | 27 |
| Race/ethnic identification | | |
| White | 168 | 52 |
| Asian | 29 | 9 |
| Black or African American | 6 | 2 |
| Hispanic or Latino | 2 | 1 |
| Multiple ethnicities | 8 | 2 |
| Other | 3 | 1 |
| Prefer not to respond | 107 | 33 |

Procedures

The request for students to complete the survey was sent in the last 2 weeks of class, and all data were collected by the official end of both the Fall and Spring semesters of the 2014–2015 academic year. The request to participate in the survey and Web-based informed consent process were conducted in accordance with D.I.H.’s home institution, Indiana University of Pennsylvania (IRB approval log no. 14–302), and with institutional review board approval from the University of Pittsburgh.

Materials

The central instrument used in this study was the PITS survey. The PITS survey has 36 rating scales organized into the following six components:

Project Ownership—Content: Ten rating items dealing with the degree of ownership the student feels over his or her laboratory research work (Hanauer and Dolan, 2014).

Project Ownership—Emotion: Six rating items dealing with specified positive emotive responses to the laboratory research experience (Hanauer and Dolan, 2014).

Science Self-Efficacy: Six rating items dealing with participant’s confidence in functioning as a scientist (Chemers et al., 2011; Estrada et al., 2011).

Science Identity: Five rating items dealing with ways in which the participant thinks about himself or herself as a scientist (Chemers et al., 2011; Estrada et al., 2011).

Scientific Community Values: Four rating items dealing with the participant’s affinity to values in the scientific community (Estrada et al., 2011).

Networking: Five rating items dealing with types of people a student talks to concerning participation in a laboratory course; the networking scales differentiate between personal and professional discussion partners (Hanauer and Hatfull, 2015).

A full version of the PITS survey can be found in the Supplemental Material.
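The component structure listed above can be represented as a simple mapping from component name to item count, which is convenient when splitting a respondent’s ratings into subscales. This is a sketch only: the item counts come from the list above, but the helper `split_responses` and the assumption that ratings are stored in the order the components are listed are hypothetical and should be checked against the survey in the Supplemental Material.

```python
# Item counts per component, as listed in the Materials section.
PITS_COMPONENTS = {
    "Project Ownership-Content": 10,
    "Project Ownership-Emotion": 6,
    "Science Self-Efficacy": 6,
    "Science Identity": 5,
    "Scientific Community Values": 4,
    "Networking": 5,
}

total_items = sum(PITS_COMPONENTS.values())


def split_responses(ratings):
    """Split one respondent's flat list of ratings into component subscales.

    Assumes (hypothetically) that ratings follow the component order above.
    """
    if len(ratings) != total_items:
        raise ValueError(f"expected {total_items} ratings, got {len(ratings)}")
    subscales, start = {}, 0
    for name, count in PITS_COMPONENTS.items():
        subscales[name] = ratings[start:start + count]
        start += count
    return subscales


print(total_items)
```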

As an outcome variable relating to the decision to stay in the sciences (persistence), an additional variable was added: the self-reported intent to become a research scientist. The development of these rating items was based on the idea that the intent to become a research scientist in the future was a positive indicator of the desire to stay in the sciences. As a long-term goal, the outcome of wanting to be a research scientist can direct a range of smaller decisions on staying in the sciences.

RESULTS

The Psychometric Properties of the PITS Survey

Statistical analyses were conducted to investigate the psychometric properties of the PITS survey. The PITS survey was constructed from five existing instruments: project ownership, self-efficacy, science identity, science community values, and networking. A previous evaluation found project ownership to have a two-factor internal structure differentiating between project ownership–content and project ownership–emotion factors (Hanauer and Dolan, 2014). Because there was prior evidence of the potential structure of the instruments that comprise the PITS survey, we hypothesized that a six-factor solution would define the dimensions of this survey (with project ownership–content, project ownership–emotion, self-efficacy, science identity, scientific community values, and networking each as constructs of the PITS survey). To evaluate this hypothesis, we conducted a maximum-likelihood factor analysis with direct oblimin rotation (to accommodate nonorthogonal relationships between the factors, or variables) with a forced six-factor solution. Initial eigenvalues for a six-factor solution were in accordance with the Kaiser criterion for keeping factors with eigenvalues above 1. The 323 students completed the PITS survey with a participant-to-variable ratio of 9:1, and sampling adequacy was evaluated using a Kaiser-Meyer-Olkin (KMO) analysis; the KMO value of 0.948 supports a suitable sample size for factor analysis. Descriptive statistics for each of the rating items used in the factor analysis were calculated to make sure that the assumption of normality was not violated. Bartlett’s test indicated that the data were suitable for a factor analysis (χ² [630] = 9918.9, p < 0.0001).
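Two of the figures reported above follow directly from the sample size and the number of items. The sketch below shows the arithmetic, using the standard form of Bartlett’s test of sphericity; the function name `bartlett_sphericity` is hypothetical, and the log-determinant passed to it is an arbitrary illustrative value, not one computed from the PITS correlation matrix.

```python
def bartlett_sphericity(n, p, log_det_corr):
    """Bartlett's test of sphericity: chi-square statistic and degrees of freedom.

    n: sample size; p: number of items; log_det_corr: natural log of the
    determinant of the item correlation matrix.
    """
    chi_square = -((n - 1) - (2 * p + 5) / 6) * log_det_corr
    degrees_of_freedom = p * (p - 1) // 2
    return chi_square, degrees_of_freedom


n_participants, n_items = 323, 36  # values reported in the text

# Participant-to-variable ratio, which the text rounds to 9:1.
ratio = n_participants / n_items

# Hypothetical log-determinant, used only to exercise the formula.
chi_square, df = bartlett_sphericity(n_participants, n_items, log_det_corr=-1.0)
print(round(ratio, 2), df)
```

With 36 items the test has 36 × 35 / 2 = 630 degrees of freedom, which matches the value reported in brackets above.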

Because the sample was composed of students from nine different laboratory courses, suggesting a potential nested structure and the requirement of a multilevel factor analysis (MFA), intraclass correlation coefficients (ICCs) were calculated for each of the individual items on the PITS survey. The ICC is an index that compares within-group variability with variability across groups and establishes whether there is a group effect that would necessitate an MFA approach. ICC values close to 0 suggest the absence of a group effect. Specifically, ICC values above 0.05 are considered to constitute a situation requiring an MFA approach (Snijders and Bosker, 1999). Table 2 presents the outcomes of a two-way, mixed-effects, single-measure model ICC for the six contributing instruments and nine different laboratory courses. As can be seen in Table 2, all ICC measures are close to 0, ranging from −0.05 to 0.05. Accordingly, it was decided that the data did not have a nested structure and did not necessitate an MFA approach.
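To make the logic of this check concrete, the sketch below computes a one-way random-effects ICC from between-group and within-group mean squares. Note that this is a deliberately simpler variant than the two-way, mixed-effects, single-measure model reported here, and it assumes equally sized groups; the function name `icc_oneway` and the ratings are hypothetical.

```python
def icc_oneway(groups):
    """One-way random-effects ICC for equally sized groups of scores.

    NOTE: a simplified stand-in, not the two-way mixed model used in the study.
    """
    g = len(groups)        # number of groups (e.g., courses)
    k = len(groups[0])     # scores per group (balanced design assumed)
    grand_mean = sum(sum(grp) for grp in groups) / (g * k)
    group_means = [sum(grp) / k for grp in groups]
    # Mean square between groups and mean square within groups.
    ms_between = k * sum((m - grand_mean) ** 2 for m in group_means) / (g - 1)
    ms_within = sum(
        (score - m) ** 2 for grp, m in zip(groups, group_means) for score in grp
    ) / (g * (k - 1))
    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)


# Hypothetical ratings on one item from three courses of four students each.
courses = [[3, 4, 5, 4], [4, 3, 5, 5], [4, 5, 3, 4]]
icc = icc_oneway(courses)
needs_multilevel = icc > 0.05  # threshold cited in the text
print(round(icc, 3), needs_multilevel)
```

Because the estimate subtracts within-group from between-group variability, it can fall slightly below zero when groups differ less than chance alone would predict, which is consistent with the small negative values in Table 2.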

| Factor | |||||||

|---|---|---|---|---|---|---|---|

| Individual items on the PITS survey | ICC | 1 Project ownership–emotion | 2 Science community values | 3 Self- efficacy | 4 Networking | 5 Project ownership–content | 6 Science identity |

| Joyful | 0.04 | 0.927 | |||||

| Delighted | 0.03 | 0.883 | |||||

| Happy | −0.02 | 0.824 | |||||

| Amazed | 0.01 | 0.71 | 0.13 | ||||

| Astonished | −0.05 | 0.675 | |||||

| Surprised | 0.01 | 0.558 | |||||

| A person who thinks it is valuable to conduct research that builds the world’s scientific knowledge. | −0.003 | 0.875 | |||||

| A person who thinks discussing new theories and ideas between scientists is important. | −0.01 | 0.784 | |||||

| A person who thinks that scientific research can solve many of today’s problems. | 0.01 | 0.772 | |||||

| A person who feels discovering something new in the sciences is thrilling. | 0.01 | 0.755 | |||||

| The daily work of a scientist is appealing to me. | −0.05 | 0.13 | 0.445 | 0.32 | |||

| I derive great personal satisfaction from working on a team that is doing important research. | −0.03 | 0.22 | 0.33 | −0.14 | 0.16 | 0.12 | |

| I am confident that I can create explanations for the results of the study. | −0.04 | −0.864 | |||||

| I am confident that I can figure out what data/observations to collect and how to collect them. | 0.01 | −0.834 | |||||

| I am confident that I can develop theories (integrate and coordinate results from multiple studies). | −0.04 | −0.797 | 0.11 | ||||

| I am confident that I can generate a research question to answer. | −0.05 | −0.765 | 0.11 | ||||

| I am confident that I can use scientific literature and reports to guide my research. | −0.01 | −0.751 | |||||

| I am confident that I can use technical science skills (use of tools, instruments, and techniques). | −0.05 | 0.13 | −0.633 | 0.14 | |||

| I have discussed my research in this course with my friends. | −0.04 | 0.13 | 0.854 | −0.12 | −0.11 | ||

| I have discussed my research in this course with students who are not in my class but in my institution. | −0.03 | 0.842 | |||||

| I have discussed my research with students who are not at my institution. | −0.001 | 0.1 | 0.655 | ||||

| I have discussed my research in this course with my parents (or guardians). | −0.03 | 0.562 | 0.12 | ||||

| I have discussed my research in this course with professors other than my course instructor. | −0.03 | 0.377 | 0.14 | 0.33 | |||

| My research was interesting. | −0.01 | 0.28 | 0.13 | 0.709 | |||

| My research was exciting. | −0.04 | 0.32 | 0.11 | 0.651 | |||

| The research question I worked on was important to me. | −0.03 | 0.13 | 0.646 | 0.2 | |||

| My research will help to solve a problem in the world. | 0.02 | 0.627 | 0.12 | ||||

| My findings were important to the scientific community. | 0.05 | 0.613 | 0.17 | ||||

| The findings of my research project gave me a sense of personal achievement. | 0.05 | 0.18 | 0.12 | 0.609 | |||

| I faced the challenges that I managed to overcome in completing my research project. | 0.02 | −0.19 | 0.12 | 0.57 | −0.1 | ||

| In conducting my research project, I actively sought advice and assistance. | 0.01 | 0.11 | −0.12 | 0.557 | −0.15 | ||

| I had a personal reason for choosing the research project I worked on. | 0.05 | 0.1 | −0.1 | 0.552 | 0.25 | ||

| I was responsible for the outcomes of my research. | 0.05 | −0.23 | 0.12 | 0.519 | −0.15 | ||

| I feel like I belong in the field of science. | 0.01 | 0.36 | −0.15 | 0.404 | |||

| I have come to think of myself as a “scientist.” | 0.01 | 0.18 | 0.18 | −0.23 | 0.391 | ||

| I have a strong sense of belonging to the community of scientists. | −0.05 | 0.19 | −0.31 | 0.12 | 0.387 | ||

Using a maximum-likelihood factor analysis, six factors were extracted accounting for 71.16% of the total variance of the observed variables. Table 2 presents the factor pattern matrix and the regression coefficients of each item on each of the factors and cross-loadings with other factors. As can be seen in Table 2, the six-factor solution parallels the underpinning imposed structure of an instrument constructed from existing tools, and these factors are identified as project ownership–emotion, science community values, self-efficacy, networking, project ownership–content, and science identity. However, there is one point of divergence from the underpinning assumption of the dimensionality of this survey based on the integrated tool: the science identity items did not factor into a single unit. Two of these items factored with the science community values factor (“The daily work of a scientist is appealing to me,” “I derive great personal satisfaction from working on a team that is doing important research”). As might be expected, these items have relatively low regression coefficients. A close consideration of the cross-loading patterns suggests there are four specific items with cross-loadings above the 0.32 threshold level (Tabachnick and Fidell, 2001), two of which are situated in both science identity and scientific community values: “The daily work of a scientist is appealing to me,” “I have a strong sense of belonging to the community of scientists,” “I have discussed my research in this course with professors other than my course instructor,” and “My research was exciting.” These results suggest there is some relationship between the factors of science identity and scientific community values, but overall, the factor loadings are reflective of the underpinning assumed structure with cross-loadings being relatively limited on other factors.

The first factor, project ownership–emotion, accounted for 46.44% of the total variance. The second factor, science community values, accounted for 7.8% of the total variance and consisted of the four science community value scales and two science identity scales. The third factor, self-efficacy, accounted for 5.96% of the total variance and consisted of the original items on the self-efficacy instrument. The fourth factor, networking, accounted for 4.2% of the total variance and was constructed exclusively from the original networking scales. The fifth factor, project ownership–content, accounted for 3.69% of the total variance and was constructed from the content items of the original project ownership survey. The sixth factor, science identity, accounted for 3% of the total variance and consisted of the three remaining science identity items. The results of this factor analysis support the underpinning assumption of the survey dimensionality based on the presence of five different instruments with six dimensions. However, it is important to note that the way in which science identity and science community values were integrated suggests some overlap between these categories.

In accordance with the PCFA approach, the proposed factor analysis solution was evaluated for its fit quality. Table 3 presents the model fit indexes for four different model factor solutions for the PITS survey. As can be seen in Table 3, a six-factor solution offers the best fit quality. The close-fit indexes for the six-factor solution approximate but do not all reach recommended levels (RMSEA ≤ 0.08; NFI, TLI, and CFI ≈ 0.95) for an appropriate outcome. The RMSEA and CFI for the six-factor solution are in the acceptable model fit range (RMSEA = 0.07; CFI = 0.91), while the NFI and TLI are below acceptable fit levels. However, it should be noted that the concept of threshold levels for acceptable fit indexes is contested, and specific consideration of the presented model needs to be discussed (Marsh et al., 2004). In this case, it is clear that the six-factor solution offers the best option for describing dimensionality in the PITS with this sample when compared with other specified factor solutions. It is also clear that there are areas of local strain within the model. Some of the items that have been factored together, especially those with low loading values and cross-loadings, reduce the appropriateness of the factor solution. These are the items related to science identity, of which two were integrated in the scientific community values factor and the others in a factor by themselves but with low loadings. Although an argument could be made for deleting these items to increase the appropriateness of the model, we consider it prudent to maintain them at this stage of the validation and re-evaluate the structure when new data sets are available.

| Specified model | NFI | CFI | TLI | RMSEA |
|---|---|---|---|---|
| Two factor | 0.7 | 0.7 | 0.7 | 0.11 |
| Four factor | 0.8 | 0.85 | 0.8 | 0.09 |
| Five factor | 0.84 | 0.88 | 0.82 | 0.08 |
| Six factor | 0.87 | 0.91 | 0.86 | 0.07 |

To further explore the psychometric properties of the PITS survey, we examined its internal consistency in relation to the contributing instruments. When all of the PITS survey items were included, a high degree of internal consistency was observed (Cronbach’s α = 0.96). Item-total correlations were calculated, and no item was found to reduce Cronbach’s α for the whole scale. Internal consistency was also calculated for each of the component instruments in the PITS survey, with Cronbach’s α values being 0.96, 0.96, 0.92, 0.87, 0.88, and 0.85 for project ownership–content, project ownership–emotion, self-efficacy, science identity, scientific community values, and networking, respectively.
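For readers who want to reproduce this kind of reliability check on their own data, the following is a minimal sketch of Cronbach’s α and the “α if item deleted” diagnostic. It is not the software used in this study; the function names and the small response matrix are hypothetical and chosen only so the result can be verified by hand.

```python
from statistics import variance  # sample variance (n - 1 denominator)


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    n_items = len(rows[0])
    item_columns = list(zip(*rows))  # transpose: one tuple per item
    item_variance_sum = sum(variance(col) for col in item_columns)
    total_variance = variance([sum(row) for row in rows])
    return n_items / (n_items - 1) * (1 - item_variance_sum / total_variance)


def alpha_if_item_deleted(rows):
    """Alpha recomputed with each item left out in turn (needs >= 3 items)."""
    n_items = len(rows[0])
    return [
        cronbach_alpha([[v for k, v in enumerate(row) if k != j] for row in rows])
        for j in range(n_items)
    ]


# Hypothetical responses: 4 respondents x 3 items (illustration only).
demo = [[1, 2, 1], [2, 1, 2], [3, 4, 3], [4, 3, 4]]
print(round(cronbach_alpha(demo), 3))
print([round(a, 2) for a in alpha_if_item_deleted(demo)])
```

An item whose removal raises α above the full-scale value would be a candidate for review; in the toy data this is the second item.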

Overall, the psychometric properties of the PITS survey suggest that this is an instrument that has high levels of internal consistency and a six-factor structure overlapping with its underpinning construction from existing instruments. Based on this analysis, the PITS survey provides reliable measurement of project ownership–emotion, project ownership–content, self-efficacy, science identity, scientific community values, and networking.

Predicting Persistence: Regression Analyses

The aim of this section of Results is to establish the value of the factors of the PITS survey as predictors of the intent to become a research scientist. This is a basic validity question in the sense that, if the psychological constructs represented by the different dimensions of the PITS survey have value in terms of persistence, they should be predictors of the future intent to become a scientist. The evaluation of the psychometric properties of the PITS survey suggests that the dimensionality of the survey does correspond to the six-factor structure of project ownership–emotion, project ownership–content, self-efficacy, science identity, scientific community values, and networking. However, the cross-loadings and relatively weak factor loadings for some items in the science identity and scientific community values factors do not allow the assumption of unidimensionality for these factors. Accordingly, the analysis of the predictive relationship between factors and the intent to become a research scientist was conducted on the specific items themselves for science identity and scientific community values and on averaged mean scores for the factors of project ownership–emotion, project ownership–content, self-efficacy, and networking.

A standard linear multiple regression was calculated using each of the individual items of the science identity and scientific community values factors as predictors and the intent to become a research scientist as an outcome variable. The assumptions of a multiple regression analysis were checked. An analysis of standard residuals was carried out, which showed that the data contained no outliers (standard residual minimum = −2.86; standard residual maximum = 2.36). Tests to see whether the data met the assumption of collinearity indicated that multicollinearity was not a concern, with tolerance values ranging from 0.31 to 0.49 and VIF values ranging from 2.02 to 3.16. The data met the assumption of independent errors (Durbin-Watson value = 2.06). The histogram of standardized residuals indicated that the data contained approximately normally distributed errors, as did the normal P-P plot of standardized residuals. The scatter plot of standardized residuals showed that the data met the assumptions of homogeneity of variance and linearity. The ratio of participants to variables was 38:1, which is well above the required ratio for this type of analysis.
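Two of the diagnostics above have simple closed forms that can be sketched directly. Tolerance is the reciprocal of the VIF, and the Durbin-Watson statistic is the sum of squared successive residual differences divided by the sum of squared residuals, with values near 2 indicating no first-order autocorrelation. The helper names and the residual vectors below are hypothetical; only the VIF and tolerance endpoints are taken from the text.

```python
def tolerance_from_vif(vif):
    """Tolerance is defined as 1 / VIF."""
    return 1.0 / vif


def durbin_watson(residuals):
    """Durbin-Watson statistic for residuals in observation order."""
    numerator = sum(
        (residuals[i] - residuals[i - 1]) ** 2 for i in range(1, len(residuals))
    )
    denominator = sum(e * e for e in residuals)
    return numerator / denominator


# Reported VIF endpoints and the tolerance endpoints they should pair with.
for vif, reported_tolerance in [(2.02, 0.49), (3.16, 0.31)]:
    implied = tolerance_from_vif(vif)
    print(f"VIF {vif}: implied tolerance {implied:.3f}, reported {reported_tolerance}")

# Hypothetical residuals: perfectly alternating signs give a value of 3.0,
# while constant residuals give 0.0.
print(durbin_watson([1, -1, 1, -1]), durbin_watson([1, 1, 1, 1]))
```

The implied tolerances agree with the reported range to within about 0.01, which is consistent with rounding or truncation of the published values.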

The regression model was statistically significant (F(9, 323) = 37.357, p < 0.0001) and accounted for ∼50% of the variance of the intent to become a research scientist (R² = 0.51, adjusted R² = 0.49). The intent to become a research scientist was predicted by five of the nine items in this analysis. The first four came from the science identity factor: “I have a strong sense of belonging to the community of scientists,” “I have come to think of myself as a scientist,” “I feel like I belong in the field of science,” and “The daily work of a scientist is appealing to me.” The last predictive item came from the scientific community values factor: “A person who feels that discovering something new in the sciences is thrilling.” The raw and standardized regression coefficients of the predictors, together with their squared semipartial correlations and significance tests, are shown in Table 4. As can be seen in this table, the item that makes the largest contribution to the prediction is “The daily work of a scientist is appealing to me,” which accounts for 26% of the total variance in the outcome variable, with all other significant items contributing 2% or less of unique variance.
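The reported F statistic and R² are linked by a standard identity, R² = F·df₁ / (F·df₁ + df₂), which gives a quick consistency check on the figures above. The sketch below applies it to the reported values; the function name is hypothetical, and the implied sample size assumes a model with an intercept.

```python
def r_squared_from_f(f_value, df_model, df_resid):
    """Recover R^2 from an overall F test: R^2 = F*df1 / (F*df1 + df2)."""
    return (f_value * df_model) / (f_value * df_model + df_resid)


# Values reported for the multiple regression: F(9, 323) = 37.357.
r2 = r_squared_from_f(37.357, 9, 323)

# With an intercept, n = df_model + df_resid + 1 (assumption about the model).
n_cases = 9 + 323 + 1

print(f"R^2 = {r2:.3f}, implied n = {n_cases}")
```

The recovered value rounds to the reported R² of 0.51.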

| Model | B | SE-b | Beta | t | Sig. | sr² | VIF |
|---|---|---|---|---|---|---|---|
| Constant | −0.514 | 0.313 | | −1.62 | 0.104 | | |
| I have a strong sense of belonging to the community of scientists. | −0.19 | 0.07 | −0.15 | −2.63 | 0.009 | 0.02 | 2.38 |
| I derive great personal satisfaction from working on a team that is doing important research. | 0.008 | 0.07 | 0.006 | 0.11 | 0.91 | 0 | 2.21 |
| I have come to think of myself as a scientist. | 0.19 | 0.06 | 0.175 | 2.84 | 0.005 | 0.02 | 2.49 |
| I feel like I belong in the field of science. | −0.14 | 0.07 | −0.1 | −1.95 | 0.05 | 0.01 | 2.02 |
| The daily work of a scientist is appealing to me. | 0.66 | 0.06 | 0.59 | 10.56 | 0.0001 | 0.26 | 2.06 |
| A person who thinks discussing new theories and ideas between scientists is important. | 0.01 | 0.07 | 0.01 | 0.15 | 0.87 | 0 | 2.46 |
| A person who thinks it is valuable to conduct research that builds the world’s scientific knowledge. | 0.11 | 0.08 | 0.08 | 1.25 | 0.21 | 0.004 | 3.16 |
| A person who thinks that scientific research can solve many of today’s world challenges. | −0.001 | 0.08 | 0 | −0.007 | 0.99 | 0 | 2.16 |
| A person who feels that discovering something new in the sciences is thrilling. | 0.198 | 0.07 | 0.17 | 2.74 | 0.006 | 0.02 | 2.44 |

To further understand the predictive potential of the variables on the PITS survey, we conducted a second set of regression analyses. In this case, the item scores for each of the variables of project ownership–content, project ownership–emotion, self-efficacy, and networking were averaged and positioned as predictor variables for the outcome of the intent to become a research scientist. Four simple linear regression analyses were conducted. The assumptions of a simple linear regression were checked, and no violations of normality or linearity were found. For all four of these variables a significant regression equation was found: project ownership–content: F(1, 332) = 43.05, p < 0.0001; project ownership–emotion: F(1, 332) = 64.3, p < 0.0001; self-efficacy: F(1, 331) = 22.92, p < 0.0001; and networking: F(1, 332) = 73.85, p < 0.0001. Project ownership–content explained ∼11% of the variance in the outcome variable (R² = 0.11, adjusted R² = 0.11); project ownership–emotion explained ∼16% of the variance in the outcome variable (R² = 0.16, adjusted R² = 0.16); self-efficacy explained ∼6% of the variance in the outcome variable (R² = 0.06, adjusted R² = 0.06); and networking explained ∼18% of the variance in the outcome variable (R² = 0.18, adjusted R² = 0.18). Table 5 summarizes the results of the four regression analyses.

| Model | B | SE-b | Beta | t | Sig. |
|---|---|---|---|---|---|
| Outcome variable: intent to become a research scientist | | | | | |
| Constant | 1.46 | 0.25 | | 5.75 | 0.0001 |
| Project ownership–content | 0.47 | 0.07 | 0.34 | 6.56 | 0.0001 |
| Constant | 1.53 | 0.2 | | 7.54 | 0.0001 |
| Project ownership–emotion | 0.48 | 0.06 | 0.4 | 8.01 | 0.0001 |
| Outcome variable: intent to become a research scientist | | | | | |
| Constant | 1.29 | 0.38 | | 3.39 | 0.001 |
| Self-efficacy | 0.44 | 0.09 | 0.25 | 4.78 | 0.0001 |
| Outcome variable: intent to become a research scientist | | | | | |
| Constant | 1.35 | 0.21 | | 6.39 | 0.0001 |
| Networking | 0.55 | 0.06 | 0.42 | 8.59 | 0.0001 |
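In a simple linear regression with one predictor, the squared t statistic for the slope equals the model F statistic, so the slope t values in the table can be cross-checked against the F values reported in the text. The sketch below does this using only the published (rounded) figures; the 1% tolerance is an arbitrary allowance for rounding, not a formal criterion.

```python
# (slope t from the table, model F from the text) for each simple regression.
simple_regressions = {
    "project ownership-content": (6.56, 43.05),
    "project ownership-emotion": (8.01, 64.3),
    "self-efficacy": (4.78, 22.92),
    "networking": (8.59, 73.85),
}


def relative_gap(t_value, f_value):
    """Relative difference between t^2 and F; zero for unrounded inputs."""
    return abs(t_value ** 2 - f_value) / f_value


for name, (t_value, f_value) in simple_regressions.items():
    gap = relative_gap(t_value, f_value)
    print(f"{name}: t^2 = {t_value ** 2:.2f}, F = {f_value}, gap = {gap:.2%}")

# True when every pair agrees to within the 1% rounding allowance.
all_consistent = all(relative_gap(t, f) < 0.01 for t, f in simple_regressions.values())
print(all_consistent)
```

All four pairs agree to within a fraction of a percent, as expected when t and F are rounded independently.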

The results of the multiple and simple regression analyses suggest that the items and variables on the PITS survey have predictive value in terms of persistence. As might be expected, not all of the individual science identity and scientific community values items in the multiple regression analysis were predictive, and further consideration of the differences among these items as persistence indicators is required. All of the simple regression analyses for the averaged constructs (project ownership–content, project ownership–emotion, networking, and self-efficacy) showed a significant role for the variables in predicting the intent to become a research scientist. Overall, the PITS survey can be seen as a valid way to evaluate persistence in the sciences.

DISCUSSION

Over the past few years, CREs have been developed in a wide range of educational settings and offer a direct response to the mandate specified in the PCAST report by addressing the need for enhanced retention and improved educational outcomes across STEM education. Assessing the outcomes of these courses, however, is still a challenge. The validation study presented in this paper, in conjunction with the broad assessment development approach used here to model the specificities of a CRE and to integrate existing theoretical and empirical assessment scholarship, may offer the beginning of an assessment answer.

We have described here a simple but powerful PITS survey tool to facilitate curricular innovations in science education aimed at promoting STEM retention. The PITS survey builds on the previously established persistence framework (Graham et al., 2013) and integrates a series of existing tools that deal with the psychological outcomes of participating in a research experience. We show that the PITS survey provides a reliable and valid way of measuring relevant variables underpinning student persistence decisions and propose that the PITS survey is particularly suitable for CREs designed for early-career (first- and second-year) undergraduate students.

Psychometric evaluation of the PITS survey suggests a six-factor model involving project ownership–emotion, self-efficacy, scientific community values, science identity, networking, and project ownership–content. As might be expected from a first validation of the combination of existing tools into a single survey, the dimensionality of the survey is not perfect, and some questions have arisen in relation to the differentiation of the factors of science identity and scientific community values. There is a need for a larger sample and a CFA approach to further validate the PITS survey. Evaluations of the internal consistency of the PITS survey revealed that the whole of the survey and each of its sections were highly reliable. Overall, from a psychometric perspective, the PITS survey offers a reliable and structured approach to the measurement of the psychological outcomes of a research experience relevant to persistence.

A series of regression analyses showed that the variables on the PITS survey do predict the intent to become a research scientist and offer some evidence that the PITS survey can provide insight into the relationship of specific educational designs and persistence outcomes. Combined with the theoretical developments of CRE educational designs, the ability to measure the psychological aspects of research experiences such as those integrated in the PITS survey should allow future studies to carefully assess programs that promote persistence through research experiences and, as detailed in the initial sections of this paper, may lead to educational models of how CREs achieve their educational aims.

The PITS survey is a measurement tool and offers the potential to consider relationships among psychological variables relevant to the question of enhancing persistence. The 2012 PCAST report places a specific charge on the undergraduate science education community to increase student retention. The data presented here offer a response to this charge. The PITS survey is a tool that can be used to evaluate whether the psychological states conducive to staying in the sciences emerge from particular educational designs and specific courses or programs of STEM education. Science education researchers can use the PITS survey to evaluate changes in how students think about and approach science and their decision processes about whether to stay in science.

FOOTNOTES

1 See Gignac (2009) for an excellent introduction to the technique of PCFA.

ACKNOWLEDGMENTS

We thank Dr. Nancy Kaufmann, Dr. Welkin Pope, Dr. Vernon Twombly, and Dr. Marcie Warner for their assistance in collecting data for this study.