An Analysis of the Perceptions and Resources of Large University Classes

Abstract

Large class learning is a reality that is not exclusive to the first-year experience at midsized, comprehensive universities; upper-year courses have similarly high enrollment, with many class sizes greater than 200 students. Research into the efficacy and deficiencies of large undergraduate classes has been ongoing for more than 100 years, with most research associating large classes with weak student engagement, decreased depth of learning, and ineffective interactions. This study used a multidimensional research approach to survey student and instructor perceptions of large biology classes and to characterize the courses offered by a department according to resources and course structure using a categorical principal component analysis. Both student and instructor survey results indicated that a large class begins around 240 students. Large classes were identified as impersonal and classified using extrinsic qualifiers; however, students did identify techniques that made the classes feel smaller. In addition to the qualitative survey, we also attempted to quantify courses by collecting data from course outlines and analyzing the data using categorical principal component analysis. The analysis maps institutional change in resource allocation and teaching structure from 2010 through 2014 and validates the use of categorical principal component analysis in educational research. We examine what perceptions and factors are involved in a large class that is perceived to feel small. Our analysis suggests that it is not the addition of resources or a difference in lecturing method but the instructor that determines whether a large class can feel small.

INTRODUCTION

Over the past few decades, higher education has experienced a dramatic expansion, such that in 2000, approximately 20% of age-appropriate people were enrolled in some form of tertiary education (Schofer and Meyer, 2005). In recent years, this number has increased to 50% in developed nations (Collins, 2013, as cited in Allais, 2014). With this increase in access to tertiary education, the amount of available resources per student has decreased. Between 2000 and 2010, the average student to teaching staff ratio in Organisation for Economic Co-operation and Development (OECD) countries (normalized to full-time equivalent units, including all levels of tertiary education) increased from 12.7 to 15.5, likely due to an increase in enrollment in vocational and professional institutions (OECD 2002–2014). In the United States, for which there is the most complete data set, student to teaching staff ratios increased from 13.5 in 2000 to 16.0 in 2012 (OECD 2002–2014). In the province of Ontario, Canada, enrollment in undergraduate postsecondary education increased by up to 50% between 2001 and 2011 (Kerr, 2011), while budgetary increases remained between 1% and 2% per annum (Ontario Confederation of University Faculty Associations, 2012). Some of these universities experienced budgetary deficits up to $8 million per year, spurring the introduction of cost-analysis programs such as the Program Prioritization Project (University of Guelph, 2012). The reason for the sustained high class sizes may have been the realized cost efficiency of large class enrollments that had not been previously experienced on a large scale (Cuseo, 2007; Kerr, 2011).

Qualitative studies have identified the challenges of teaching and learning in large classes (Wulff et al., 1987; Carbone and Greenberg, 1998; Cooper and Robinson, 2000). The student perspective in large classes is mostly one of anonymity and isolation from support measures, with a resulting decrease in motivation. These negative perceptions have been associated with decreased student retention and persistence to completion (Wulff et al., 1987; Carbone and Greenberg, 1998; Mulryan-Kyne, 2010). From the instructor’s perspective, large classes are the most challenging in terms of engaging and interacting with students as individuals (Gibbs et al., 1996; Carbone and Greenberg, 1998) and require more resources in order to incorporate evidence-based teaching practices such as active learning (Vajoczki et al., 2011; Connell et al., 2016; Elliott et al., 2016), especially at the time of transition from a more traditional lecture format (Justice et al., 2009; Ueckert et al., 2011; Connell et al., 2016).

Strictly quantitative analyses in education research on large classes have been less frequent. To our knowledge, only one multivariate analysis investigating class size has been performed. The author found that the institutional status (by size and sector) and course structure are the most accurate predictors of class size (Chatman, 1997). Other quantitative studies have reported on the cost efficiency of large classes and investigated various student outcomes in relation to class size (Lopus and Maxwell, 1995). With respect to student academic performance, some studies report better outcomes in larger classes (Hou, 1994), some report worse (Gibbs et al., 1996; Becker and Powers, 2001; Arias and Walker, 2004), while others report no change (Kennedy and Siegfried, 1997). Longer-term outcomes such as approaches to learning and persistence to completion have also been quantified based on class size, again showing varied results (West, 2004; Baeten et al., 2010).

Research demonstrating the negative effects of large classes on student learning and other variables is extensive. Though a few studies have demonstrated some positive outcomes (Christopher, 2011), successes are often described as having been “despite” rather than “due to” the large class size. More commonly, it was found that, in large classes, student engagement and interest are diminished (Collins, 1998; Cooper and Robinson, 2000) and the frequency and quality of student–faculty interactions are reduced (Mahlera et al., 1986; Wulff et al., 1987) to the point of zero interaction (Cuseo, 2007). Consequently, student persistence to completion is affected (Delaney, 2008). On the other hand, smaller class size has also been implicated in the effective long-term retention of critical-thinking skills (Gibbs et al., 1996; Baeten et al., 2010). Recent studies have attempted to improve the large class environment through collaborative learning strategies and problem-based learning (Vajoczki et al., 2011; Cole and Spence, 2012; Nomme and Birol, 2014; Connell et al., 2016). These studies can be further divided into two approaches: those that scale up using the same resources, and those that restructure courses to engage more students (Gordon et al., 2009; Francis, 2012). Even with the evidence supporting the implementation of these strategies, the creation of an effective learning environment relies largely on instructor initiative (Waldrop, 2015). Without incentive or encouragement to change their teaching practices, instructors report using lecturing as their main instructional method despite the known pitfalls for student learning (Carbone and Greenberg, 1998; Cuseo, 2007; Kerr, 2011; Waldrop, 2015).

Over the past decade, there has been increasing research into the benefits of using active-learning techniques in large classes (e.g., Moravec et al., 2010; Perez et al., 2010; Ueckert et al., 2011; Tune et al., 2013; Freeman et al., 2014; Jensen et al., 2015; Adams et al., 2016; Connell et al., 2016; Elliott et al., 2016; although not all of these studies were conducted on “large classes” as characterized by our research and defined by students and instructors; but see Hartling et al., 2010). Often, the flipped-classroom model is used to free up class time for active-learning techniques, though studies have failed to demonstrate the importance of flipping as separate from active learning (Jensen et al., 2015; Adams et al., 2016), and Van Vliet et al. (2015) noted some gains that did not persist. It is therefore suggested that using active learning can be beneficial at any stage of the course (Jensen et al., 2015), though it is noted that studies demonstrating the benefits of active learning are usually conducted using teaching scholarship professionals rather than regular faculty. Andrews et al. (2011) found no learning benefit of active learning when regular faculty participated in the study, and Elliott et al. (2016) found evidence of improved student learning in an experiment in which regular faculty were mentored by postdoctoral education research scholars. Importantly, it has been found that instructor contact time contributes more to a student’s perception of learning than any other variable (Wood and Tanner, 2012; Jensen et al., 2015) and that one-on-one instruction is the best learning environment (Slavin, 1987; review by Wood and Tanner, 2012). This suggests that it is the instructor’s individual ability and degree of access by students (e.g., office hours, student:instructor ratio, lecture vs. seminar or laboratory) that facilitates learning.

Comprehensive research has been performed on the impact that large classes have on the student experience (Wulff et al., 1987; Carbone and Greenberg, 1998), student learning (Baeten et al., 2010), student academic performance (Gibbs et al., 1996; Becker and Powers, 2001; Toth and Montagna, 2002; Arias and Walker, 2004), and student retention (Ashar and Skenes, 1993). An up-to-date understanding of what defines a large class in the biological sciences, and of which aspects characterize class size designations with respect to institutional investment and resources, would contribute to the education research field. We use here a quantitative approach to create a classification of courses in the Department of Molecular and Cellular Biology at a medium-sized Canadian postsecondary institution (undergraduate population in 2015: 18,289). We combine this analysis with the results of a survey to investigate what large classes feel like from the students’ and the instructors’ perspectives as we narrow in on answering the question: How can we make a large class feel smaller?

METHODS

Survey of Student and Instructor Perceptions

Given the literature that exists on the potential learning outcomes associated with class size alone, surveys targeting undergraduate students and faculty in the College of Biological Sciences were developed to answer the following questions: 1) At what student enrollment does a class become perceived as large by both students and instructors? 2) What does a large class feel like? 3) Can a large class be perceived as small? We assessed the students’ and instructors’ perceptions and attitudes about small, medium, and large classes with an online survey using Qualtrics. Approval for the survey was received from the University of Guelph (Research Ethics Board #15JA022) and consent was obtained from the anonymous participants. All registered students and employed faculty of the college were sent an email during the Winter semester and asked to participate in our survey (see the Supplemental Material). Response rates were 18% (n = 534) for students and 44% (n = 48) for faculty.

All data were collected from questions that were closed-ended (selection from a limited set of answers, or the use of Likert scales), open-ended (participants are free to answer the question and are given a text box with an unlimited character count), or numerical (participants entered a number). Separate surveys were developed for students and instructors to determine potential differences between their perceptions on similar topics. The following information was obtained in the survey:

Student data: Degree major of study and number of semesters completed (closed-ended questions).

Class size: the minimum and maximum number of students associated with a small, medium, and large class (numerical values).

Characteristics of class size: Descriptors, other than the number of students, of small, medium, and large classes (open-ended questions).

Attitudes about class size: A series of statements related to course structure, student experience and interactions, and the learning environment. Students and instructors were asked to associate each statement with the class size categories of small, medium, and large (closed-ended questions).

Feeling small: Students were asked whether they had taken a large class that felt small and, if so, to provide reasons for why it felt small (open-ended questions).

First-year students were not included as participants in the study, because most of them would have experienced only large university classes. The minimum and maximum reported class sizes were analyzed in SPSS (version 22) with a two-way analysis of variance to identify statistically significant differences. All p values ≤0.05 were considered significant.

The qualitative response data derived from the survey (that is, responses to open-ended questions such as “How would you describe a small class, aside from the number of students?”) were transferred to an Excel spreadsheet for coding. Fifty of the 159 total responses were randomly sampled by one researcher (J.L.) to develop a series of 11 unique themes that emerged from the responses. This first version of this series of themes met with 82.5% and 98% agreement by the two other researchers (S.P.G. and S.R.J.). The themes (Table 1) were revised by a process of consensus until all researchers were in agreement. All responses were coded according to these themes, and any responses (full or incomplete) that did not correspond to a theme were also recorded. Two novel themes were subsequently identified that captured these other responses. The same procedure was followed for the analysis and coding of responses related to attitudes toward courses of different sizes and the identification of large classes that feel small. The themes in the responses to these questions are listed in Table 1.
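The agreement check described above can be illustrated with a short sketch. The text does not state how agreement was calculated, so simple percent agreement (the share of responses given the same theme by two coders) is assumed here; the function name and the five sample codes are hypothetical and are not data from the study.

```python
def percent_agreement(codes_a, codes_b):
    """Return the percentage of responses that two coders assigned to the same theme."""
    if len(codes_a) != len(codes_b):
        raise ValueError("Both coders must code the same set of responses")
    if not codes_a:
        raise ValueError("At least one coded response is required")
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return 100.0 * matches / len(codes_a)


# Hypothetical theme codes for five responses (not data from the study).
coder_1 = ["location", "anonymity", "course structure", "distractions", "location"]
coder_2 = ["location", "anonymity", "course level", "distractions", "location"]

# Four of the five codes match.
print(percent_agreement(coder_1, coder_2))
```

Percent agreement does not correct for chance agreement; a chance-corrected statistic would be a different calculation from the one sketched here.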

| Describe a (small, medium, or large) class, other than by the number of students in it | Describe why it was your favorite class |
|---|---|
| Location (type of classroom and/or specific building) | Professor |
| Use and type of communication aid (e.g., microphone) | Degree of interactivity (between students and with instructor, inside and outside class time) |
| Evaluation methods/assessment scheme | Class size |
| Instructor–student interactions | Course structure |
| Anonymity/community | Community in class |
| Stress level (associated with course load and the difficulty of finding a seat before class) | Practicality of content |
| Distractions in class | Course content (other) |
| Course structure | Fairness of evaluations |
| Course level | Number of evaluations |
| Course content | |
| Course required for program | |

Properties of Small, Medium, and Large Classes

Data were gathered on 171 offerings of 39 unique courses in the Department of Molecular and Cellular Biology (MCB) between Fall 2010 and Winter 2014 at a medium-sized public Canadian university (Table 2). The variables (Table 3) were gathered from course outlines (syllabuses); courses were selected on the basis of consistent course availability throughout those years. Variables were nominal, ordinal, and numerical, therefore requiring analysis by a categorical (instead of standard) principal component analysis (PCA) (Linting et al., 2007). PCA is a multivariate statistical dimension reduction tool that assesses variance in a data set by combining multiple correlating variables into a reduced number of uncorrelated principal components (PCs). Categorical PCA optimally quantifies nonnumerical data into numerical categories, creating variance in nominal and ordinal variables that is otherwise absent but required for dimension reduction. Categorical PCA is nonlinear because it does not assume that there is a linear relationship among the variables. This categorical PCA quantified the seven variables (Table 3) that investigate the structure and resources attached to courses in MCB (Table 2).

| Variable | Category options or numerical range | Variable designation | PC1 Score | PC2 Score |
|---|---|---|---|---|
| Number of graduate TAs | 0–14 TA units/semester | Numerical | 0.609 | −0.597 |
| Number of course coordinators | 0 or 1 | Numerical | 0.771 | |
| Number of instructors | 1 or 2 | Numerical | −0.846 | |
| Nature of instructor office hours | Not offered; by appointment only; weekly scheduled hours | Ordinal | −0.463 | |
| Number of evaluations/semester | 1–23 | Numerical | 0.713 | 0.541 |
| Variety of evaluations/semester | 1–15 | Numerical | 0.740 | 0.508 |
| Course structure | Lecture-only; lecture and seminars; lecture and labs; lab-based | Ordinal | 0.771 | |

Categorical PCA was performed in SPSS (version 22) with the Dimension Reduction, Optimal Scaling tool. Two components were selected for extraction (see Component Extraction for justification); all other options for optimal scaling remained at default values throughout the analysis.
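To illustrate the dimension-reduction idea only, the following minimal sketch extracts the first principal component of a few numerical variables. It is standard (linear) PCA on a correlation matrix, found by power iteration; it is not the categorical PCA with optimal scaling that SPSS performs, and it does not reproduce the analysis reported here. All function names and the five-course data set are hypothetical.

```python
import math


def standardize(columns):
    """Center each variable to mean 0 and scale it to unit (population) variance.

    Assumes no variable is constant (a zero standard deviation would divide by zero).
    """
    result = []
    for col in columns:
        mean = sum(col) / len(col)
        sd = math.sqrt(sum((x - mean) ** 2 for x in col) / len(col))
        result.append([(x - mean) / sd for x in col])
    return result


def correlation_matrix(z_columns):
    """Correlation matrix of already standardized variables."""
    n = len(z_columns[0])
    return [[sum(a * b for a, b in zip(ci, cj)) / n for cj in z_columns]
            for ci in z_columns]


def first_component(matrix, iterations=500):
    """Power iteration: return (largest eigenvalue, matching unit eigenvector)."""
    size = len(matrix)
    # Uneven start vector, so it is unlikely to be orthogonal to the answer.
    vec = [1.0 / (i + 1) for i in range(size)]
    for _ in range(iterations):
        nxt = [sum(matrix[i][j] * vec[j] for j in range(size)) for i in range(size)]
        norm = math.sqrt(sum(x * x for x in nxt))
        vec = [x / norm for x in nxt]
    eigenvalue = sum(vec[i] * sum(matrix[i][j] * vec[j] for j in range(size))
                     for i in range(size))
    return eigenvalue, vec


# Hypothetical values for five course offerings (not data from the study).
courses = [
    [0, 2, 4, 6, 8],    # TA units
    [2, 4, 5, 9, 10],   # number of evaluations
    [2, 2, 1, 1, 1],    # number of instructors
]
eigenvalue, direction = first_component(correlation_matrix(standardize(courses)))
print(f"PC1 accounts for {100 * eigenvalue / len(courses):.0f}% of the variance")
```

Because the variables are standardized, the total variance equals the number of variables, so an eigenvalue divided by that number gives the proportion of variance accounted for by the component.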

Component Extraction

Unlike numerical (standard) PCA, categorical PCA requires that the number of PCs be established before analysis. Two components were selected as the optimum number for this data set on the basis of the “elbow rule” using a scree plot (Reise et al., 2000). PC1 (40%) and PC2 (27%) accounted for 67% of the variance in the data set, while extraction of a third component contributed less than 2% to the total variance. Variables with loadings of absolute value less than 0.3 in either component were not considered significant (Linting et al., 2007).
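The two numerical checks in this section, the cumulative variance and the 0.3 cutoff, can be sketched as follows. The loadings are copied from Table 3, with blank cells represented as `None`. Applying the cutoff to the absolute value of a loading is an assumption made here so that negative loadings are treated like positive ones; the function name is hypothetical.

```python
from itertools import accumulate

# PC1 and PC2 loadings copied from Table 3; None marks a cell left blank in the table.
LOADINGS = {
    "Number of graduate TAs": (0.609, -0.597),
    "Number of course coordinators": (0.771, None),
    "Number of instructors": (-0.846, None),
    "Nature of instructor office hours": (-0.463, None),
    "Number of evaluations/semester": (0.713, 0.541),
    "Variety of evaluations/semester": (0.740, 0.508),
    "Course structure": (0.771, None),
}
THRESHOLD = 0.3  # cutoff stated in the text


def significant_variables(loadings, component, threshold=THRESHOLD):
    """List variables whose absolute loading on a component (0 = PC1, 1 = PC2) meets the cutoff."""
    return [name for name, values in loadings.items()
            if values[component] is not None and abs(values[component]) >= threshold]


# Percentage of variance reported for PC1 and PC2, accumulated.
print(list(accumulate([40, 27])))
print(significant_variables(LOADINGS, 0))
print(significant_variables(LOADINGS, 1))
```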

Variable Assembly

The variables used in the analysis (Table 3) were those identified as inputs or resources added to the course rather than the outcomes or the content. This approach was used to see what course resources could be associated with courses that students identified in the survey as feeling small despite a large enrollment.

The number of instructors, lab/course coordinators (defined as: teaching support staff not employed as faculty), and graduate teaching assistant (TA) units were included to assess the number of human resources allocated to a class. These variables were expected to quantify the potential for student interaction with their instructors, because even informal interactions can have a positive effect on student outcomes (Cuseo, 2007). The number of TAs and lab coordinators also reflects the course structure, because laboratory and seminar components are typically facilitated by them rather than the instructors.

Courses were divided into one of four possible structures, differentiating courses with only a lecture portion from those with seminars and labs. In MCB, the typical lab is a 3-hour weekly or biweekly session, while a tutorial is a 50-minute weekly or biweekly session. Both are facilitated by graduate student TAs. The aim of categorizing the course structure was to further differentiate resource allocation to each course, since there are both financial and time-related differences in establishing and administering lectures, seminars, and laboratory sessions. Furthermore, lab-methods classes were differentiated from other lab courses, because more than 50% of class time is spent in the laboratory compared with classes for which labs are shorter and less frequent, accounting for approximately 25% of class time. This extra time spent in the laboratory in lab-methods classes reflects a difference in the amount of resources, and these classes were thus categorized separately.

The style of an instructor’s office hours was used to assess instructor availability outside class time. Office hours were binned into one of three ordinal categories: not offered, by appointment only, or scheduled hours. Instructors who offer scheduled office hours were expected to have a greater potential for student interaction than those whose office hours are by appointment only (arranged by email), particularly in lower-division courses (first and second year), when students might feel less confident about contacting a professor.

The number and variety of student assessments were collected as an indication of the course structure in terms of time invested in assignment development and marking. The overwhelming majority of the assessments (>95%) were summative. A greater number of assignments requires more time spent on grading, and a greater variety of assessment formats requires more time on the part of the instructor in developing each one. The number of assessments included all forms of graded and ungraded submissions related to course content (e.g., online quizzes, peer assessments, midterms, and final exams).

Sample classifications of three very different courses are presented in Table 4. The first exemplar is a first-year, introductory biology course with weekly tutorials and a final summative project wherein the students collaborate in interdisciplinary groups of three to create a written report and poster presentation. This course has a high number of TAs to facilitate weekly tutorials and a large amount of marking, and the most diverse assessment scheme of all lower-division courses in the department. It has been taught by two instructors who offer scheduled office hours throughout the semester. The second example is a course with a simple structure: a third-year core biochemistry course that has only a lecture component, one midterm, and one final examination. It does not have a course coordinator outside the two instructors and has few TAs for the high number of students required to complete the course. There are no additional resources by way of seminars or labs assigned to this course. The third example is a third-year genetics lab-methods class with a remarkably high number of assessments with low variation: in this case, students are handing in several lab reports throughout the semester. This lab-methods course has a midrange number of TAs moderating the laboratories and marking the lab reports.

| Course name | Course code | Course level | Course structure | Number of instructors | Instructor office hour availability | Number of TA units | Number of course coordinators | Number of evaluations | Variety of evaluations | Evaluations |
|---|---|---|---|---|---|---|---|---|---|---|
| Introductory Molecular and Cellular Biology | BIOL 1090 | 1 | Lecture and seminar | 2 | Scheduled for 2–3 hours once weekly | 9 | 1 | 10 | 6 | Midterm exam; final exam; oral presentation; literature review; research paper; bibliography |
| Structure and Function in Biochemistry | BIOC 3560 | 3 | Lecture only | 2 | Mixed | 0 | 2 | 1 | | Midterm exam; final exam |
| Analytical Biochemistry | BIOC 3570 | 3 | Lecture and lab | 1 | By appointment | 1 | 0 | 11 | 4 | Two midterm exams; final exam; seven lab reports; oral presentation |
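As an illustration of how one course offering might be recorded before analysis, the sketch below encodes the seven variables of Table 3 for the BIOL 1090 entry in Table 4. The class name, field names, and integer codes are assumptions made for this example: the codes only preserve the rank order of the categories listed in Table 3, and optimal scaling in categorical PCA would assign its own quantifications.

```python
from dataclasses import dataclass

# Rank orders follow the category lists in Table 3; the integer codes are an assumption.
OFFICE_HOURS = {
    "not offered": 0,
    "by appointment only": 1,
    "weekly scheduled hours": 2,
}
COURSE_STRUCTURE = {
    "lecture-only": 0,
    "lecture and seminars": 1,
    "lecture and labs": 2,
    "lab-based": 3,
}


@dataclass
class CourseOffering:
    """One offering of a course, described by the seven variables of Table 3."""
    ta_units: int
    coordinators: int
    instructors: int
    office_hours: str
    evaluations: int
    evaluation_variety: int
    structure: str

    def as_vector(self):
        """Return the seven variables in Table 3 order, with ordinal categories coded."""
        return [
            self.ta_units,
            self.coordinators,
            self.instructors,
            OFFICE_HOURS[self.office_hours],
            self.evaluations,
            self.evaluation_variety,
            COURSE_STRUCTURE[self.structure],
        ]


# BIOL 1090 as listed in Table 4.
biol_1090 = CourseOffering(
    ta_units=9,
    coordinators=1,
    instructors=2,
    office_hours="weekly scheduled hours",
    evaluations=10,
    evaluation_variety=6,
    structure="lecture and seminars",
)
print(biol_1090.as_vector())
```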

Cluster Analysis

Clustering and stratification of courses according to their PCA scores were assessed using a two-step cluster analysis. Two-step cluster analyses combine hierarchical and k-means clustering methods and assume independence of continuous input variables. Allowing for an automatic selection of the number of clusters, three clusters emerged in our analysis. PC1 and PC2 scores were the only variables used to determine clustering. The cluster analysis was conducted in SPSS (version 22) using the Classify: Two-Step Cluster tool.
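The sketch below shows the general idea of grouping courses by their two PC scores. It uses plain k-means (Lloyd's algorithm) as a simpler stand-in and is not the SPSS two-step procedure: the number of clusters is fixed at three, the number that emerged in the analysis, and the starting centroids are supplied by hand. The function name and all point values are hypothetical.

```python
import math


def kmeans(points, centroids, max_iter=100):
    """Lloyd's algorithm on 2-D points; returns (labels, final centroids)."""
    centroids = [tuple(c) for c in centroids]
    labels = []
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid (Euclidean distance).
        labels = [min(range(len(centroids)), key=lambda k: math.dist(p, centroids[k]))
                  for p in points]
        # Update step: move each centroid to the mean of its members.
        updated = []
        for k, old in enumerate(centroids):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:
                updated.append((sum(x for x, _ in members) / len(members),
                                sum(y for _, y in members) / len(members)))
            else:
                updated.append(old)  # an empty cluster keeps its previous centroid
        if updated == centroids:
            break  # centroids stopped moving
        centroids = updated
    return labels, centroids


# Hypothetical (PC1, PC2) scores for nine course offerings (not data from the study).
scores = [(-1.2, 0.9), (-1.0, 1.1), (-0.9, 0.8),
          (0.1, -1.0), (0.2, -1.2), (0.0, -0.9),
          (1.4, 0.5), (1.5, 0.7), (1.3, 0.6)]
labels, centers = kmeans(scores, centroids=[scores[0], scores[3], scores[6]])
print(labels)
```

Unlike the automatic selection used in the two-step procedure, k-means requires the number of clusters to be chosen in advance, and its result can depend on the starting centroids.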

RESULTS

Student and Faculty Perception of Class Size

Our qualitative analysis aimed to determine the environmental factors associated with different class sizes and which course resources and structures had the biggest impact on students’ and instructors’ perceptions and overall satisfaction.

1. At What Student Enrollment Does a Class Become Perceived as Large by Both Students and Instructors?

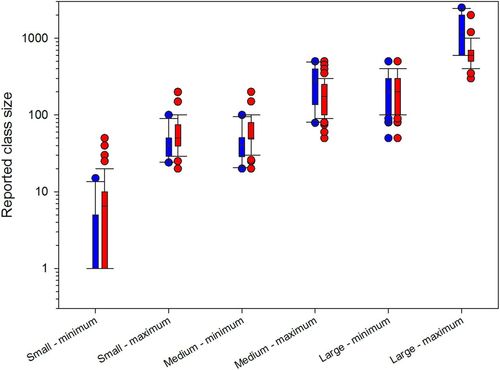

From our survey, we found that students and instructors generally agreed that a large class begins around 240 students (Figure 1). Both groups reported very similar ranges for small and medium classes: up to about 50 students in a small class, and up to 240 students in a medium class. In contrast, the reported maximum sizes of a medium class and a large class were different between the two groups. Students thought that 600 is the maximum large class size, whereas instructors reported 1000 as the maximum (F(1, 587) = 47.1, p < 0.001, Figure 1). The difference may be attributed to instructors considering multiple sections of the same course as a single class. This idea is supported by examining the size of classrooms at the University of Guelph; the maximum lecture theater capacity is 600 students; however, some courses have two sections. Thus, it appears that instructors consider the whole course with its multiple sections as one, whereas students likely think only of the classroom in which they are sitting.

FIGURE 1. Reported definition of class sizes. Average reported minimum and maximum class size for small, medium, and large classes by students (blue) and instructors (red) for courses in the MCB. Data are shown as box plots in which the narrow black horizontal bar represents the mean, the colored rectangle represents the first to third quartile, and the wide black bars represent the minimum and maximum values. Values that are larger or smaller than 1.5 times interquartile range (1.5 × IQR) are considered to be outliers and are shown as individual symbols. The y-axis (Reported class size) is shown as a common log scale.
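The survey-derived boundaries can be written as a simple classification rule. The sketch below uses the enrollment bins given in the captions of Figures 2 and 3 (small: 1–49, medium: 50–239, large: ≥240); the function name is hypothetical.

```python
def classify_class_size(enrollment):
    """Label a class as small, medium, or large from its enrollment."""
    if enrollment < 1:
        raise ValueError("Enrollment must be at least 1 student")
    if enrollment < 50:
        return "small"
    if enrollment < 240:
        return "medium"
    return "large"


for n in (30, 120, 240, 600):
    print(n, classify_class_size(n))
```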

2. What Does a Large Class Feel Like?

Students characterized large classes more often by the external environment than by their personal learning experiences. That is, students referred to the extrinsic experiences and features of large classes rather than the intrinsic experiences or outcomes. The most common descriptor of a large class was that it was held in a large lecture theater. Students also described large classes as ones that require the use of a microphone and have limited assessment schemes (midterms and final examinations with multiple-choice questions). When students did describe their personal learning experiences in large classes, they indicated the challenge of asking the instructor questions inside and outside the scheduled class. The large class environment was described as impersonal and anonymous and as an atmosphere where neither their peers nor their instructor noticed whether they were absent or attentive. Students noted the great potential for distractions in large classes, most often in terms of noise and their peers accessing the Internet.

The student descriptions of small classes emphasized personal learning experiences more often than the physical environment. Comments on the feeling of a sense of community and curricular flexibility dominated, characterizing small classes as places where the instructor was able to learn student names and adjust the lecturing pace and occasionally alter the course content throughout the semester. The introduction of group or individual presentations and an emphasis on practical skill development were further descriptors of small classes, with the most common one being the integration of a discussion within a lecture.

Students described medium-sized classes with a unique set of characteristics that were not simply a blend of small and large class characteristics. They revealed that instructors were accessible in medium-sized courses and that there was a greater potential for instructor–student interaction, such as asking questions, when compared with large ones. According to students, it was much easier to ask questions in a medium-sized class than in a larger one. Furthermore, a sense of community began to form: students started to recognize their peers and feel more comfortable interacting with their instructors. The methods of assessment were also described; medium-sized classes tended to include writing assignments and fewer multiple-choice examination questions.

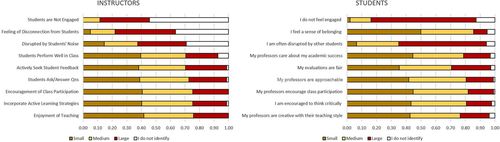

Instructors used many of the same themes as students in their characterizations of small, medium, and large classes; small classes enable personalization of course structure and more interaction with students. Both instructors and students felt it was challenging to connect and interact with one another in large classes. The students’ sense of instructor availability paralleled the instructors’ sense of being disconnected from their students. Students indicated instructor availability was lowest in large classes, and instructors indicated the greatest sense of being disconnected (Figure 2). Similarly, both groups assigned a statement about in-class distractions to large classes, although proportionally more students also assigned that statement to small classes than instructors did. As with students, instructors commented more often on the assessment methods used in large classes than in small classes. The creation of a sense of community in medium-sized classes was equally noted by both groups, with the establishment of an environment in which instructors knew students’ names occurring most often in small classes.

FIGURE 2. Representation of six statements that might be associated with small (1–49 students, brown), medium (50–239 students, yellow) or large (≥240 students, red) classes according to instructors (left) and students (right). White bars denote those statements for which a respondent did not identify with the content in association with a class size.

Other descriptions of different class sizes were unique to the instructors. For example, instructors discussed active learning, while students did not. Instructors reported it was more natural for them to include active-learning strategies in small classes, and it required more coordination and an uncomfortable degree of risk to reputation to incorporate them in large classes. Instructors more often characterized each class size by the type of resources allocated, particularly the number of TAs.

The development of critical-thinking skills often requires active learning through class participation. When asked about these two aspects with respect to class size, both students and instructors agreed that they are features associated with small classes, though a small proportion identified them as techniques also used in large classes. Similarly, instructors and students were in agreement regarding the encouragement of students to participate in class; this encouragement was more often associated with small classes (Figure 2). When investigating student engagement, instructors reported very strongly that they felt their students were the least engaged in large classes. No instructor felt that students were not engaged in small classes, yet a small proportion of students reported feeling this way.

3. Can a Large Class Be Perceived as Small?

In the survey, we specifically asked the question “Has there ever been a time when a numerically large class felt small? If yes, please elaborate.” In response, 42% of undergraduate students indicated that they had experienced this. The most common theme associated with large classes feeling small was the instructor. Students referred to engaging instructors who made them feel like important individuals despite being surrounded by hundreds of their peers. Instructors who walked through the aisles while lecturing and who learned their students’ names were deemed the most memorable and most effective at making a class feel smaller. Those instructors who incorporated small-group activities such as breakout groups in their lectures were also mentioned. Students described these activities as effective ways of breaking up the monotony of lecture. Many students also indicated that the labs and seminars similarly reduced the effective class size, giving them an opportunity to connect with their peers in a way that would feel awkward or disruptive in a lecture. Other students discussed strategies that could be used in all of their classes, regardless of instructor performance. The most common of these was to sit in the front rows of the lecture theater and ignore the rows of peers behind them.

Quantitative Characterization of Class Size

In addition to the qualitative student and instructor survey, we also developed a quantitative approach for describing classes using a categorical principal component analysis (CATPCA). In brief, PCA looks at the relationships among several different variables by reducing them to a smaller number of variables called principal components in an attempt to account for the variability in the original variables. CATPCA is similar to PCA, except that it does not assume a linear relationship between the variables.
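To make the idea of reducing several variables to a few components concrete, the following minimal sketch runs ordinary linear PCA on a small invented table. It is not CATPCA, which additionally rescales categorical variables and relaxes the linearity assumption. The data values and the names `toy_courses` and `pca` are hypothetical and are not drawn from the study.

```python
import numpy as np

def pca(data, n_components=2):
    """Ordinary linear PCA via SVD on standardized columns.

    Returns (scores, loadings, explained_ratio). This is plain PCA, shown
    only as an illustration; it is not the CATPCA procedure.
    """
    x = np.asarray(data, dtype=float)
    # Standardize each variable so that no variable dominates by scale.
    z = (x - x.mean(axis=0)) / x.std(axis=0, ddof=1)
    # Rows of vt are the principal axes; s holds the singular values.
    u, s, vt = np.linalg.svd(z, full_matrices=False)
    explained_ratio = (s ** 2) / np.sum(s ** 2)
    scores = u[:, :n_components] * s[:n_components]
    loadings = vt[:n_components].T
    return scores, loadings, explained_ratio[:n_components]

# Hypothetical rows: (number of TAs, number of assessments, number of instructors)
toy_courses = np.array([
    [1, 2, 1],
    [2, 3, 1],
    [4, 5, 1],
    [6, 6, 2],
    [8, 9, 2],
    [3, 4, 1],
])

scores, loadings, explained_ratio = pca(toy_courses)
print(scores.shape, loadings.shape, explained_ratio.round(2))
```

Each row of `scores` is one class placed on the first two components, and each row of `loadings` gives one variable's contribution to those components, which is what the vectors in a biplot represent.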

The CATPCA incorporated seven variables obtained from course outlines (Table 3) that can be used to describe the resources and structure of courses of all enrollment sizes in the MCB. Note that at no point was class size used as a PCA variable. Our goal was to determine whether we could quantitatively describe how courses of different enrollment sizes may be similar or different based on their structure and resources, rather than simply the number of students.

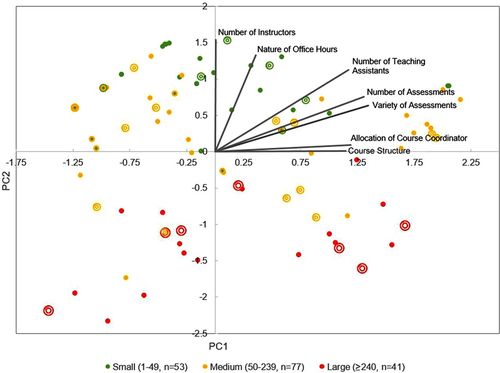

Principal Components

PC1 highlights the strong correlation between a wide range of the variables: the course structure, allocation of a course coordinator, the number and variety of assessments, and the number of TAs in a class. But, despite accounting for the greatest cumulative variance (40%), PC1 does not distinguish the classes by size (Figure 3). Thus, course structure is not directly attributable to class size, and large classes do not necessarily have the fewest TAs per student. The largest classes in the department were predominantly “lecture and seminar” type courses, while the smaller courses were more often “lecture only.” This suggests that the structuring of large classes into formalized seminar groups may be compensating for the discussion that can happen spontaneously in a small class.

FIGURE 3. Biplot of 171 classes plotted according to PC1 and PC2 with overlay of individual variable component loadings onto PC1 and PC2. The vectors show the projection of the weighting of a variable onto PC1 and PC2 (i.e., a particular variable’s contribution to PC1 and PC2). Each data point represents one iteration of a course between 2010 and 2014. Data points with colored rings represent instances of multiple classes with identical PC scores. Data points are colored according to class size in three categories: small (green: 1–49 students, n = 53), medium (yellow: 50–239 students, n = 77), and large (red: ≥240 students, n = 41). Most of the variation in PC1 is determined by differences in course resources, and most of the variation in PC2 is determined by differences in personnel. Differences among courses based upon enrollment (green, yellow, red) are therefore distinguished primarily by differences in personnel. There is significant variation in course resources across all course size categories.

PC2 is composed of the number of instructors, the number of TAs, and, to a lesser degree, the availability of instructors outside class time through their office hours. PC2 differentiates courses in the department by class size, because the larger classes usually have more than one faculty member assigned to teach them.

The number and variety of assessments were positively correlated with one another. In general, many assignments meant a large variety of assignments in a course. Typically, an assessment added to a course (from one semester to another) was offered in a different format. As a combined unit, the number and variety of assessments can be called the “assessment methods” of a course, and the assessment methods were associated with both PC1 and PC2. The number and variety of assessments were negatively associated with PC2, which can be interpreted as the largest classes having the fewest assessments and the least diverse set of assignments. In contrast, they were positively associated with PC1, indicating that classes with more TAs and a course structure that had additional components, like labs or seminars, had a larger number and more diverse set of assessments.

Another way of visualizing the ordinal variables in the analysis is by centroid coordinates instead of vectors. Centroids are the average PC score for all objects of the same category of a variable. For example, the centroid of “lecture-only” (Figure 4) is the average score of all classes that had that course structure. With respect to the analysis of course structure, the centroids for “lecture and seminar” and “lecture and lab” are located more closely among the large courses. The “lab-based” centroid is the farthest from all other centroids but is closer to the combined courses (those with labs and seminars) than the strictly “lecture-only” courses. This may reflect the difference in resource allocation and overall course structure between lab-based and lecture-only courses.

FIGURE 4. Centroid plot of 171 classes plotted according to PC1 and PC2 with centroid coordinates for course structure shown in black. Data points with colored rings represent instances of multiple classes with identical PC scores. Each data point represents one iteration of a course between 2010 and 2014. Data points are colored according to class size in three categories: small (green: 1–49 students, n = 51), medium (yellow: 50–239 students, n = 71), and large (red: ≥240 students, n = 44).
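The centroid definition used for the course-structure categories can be sketched as a per-category mean of PC scores. The scores and structure labels below are invented for illustration, and `category_centroids` is a hypothetical helper name rather than part of the analysis software used in the study.

```python
import numpy as np

def category_centroids(scores, categories):
    """Return {category: mean PC score vector} for each distinct category."""
    scores = np.asarray(scores, dtype=float)
    categories = np.asarray(categories)
    return {
        str(cat): scores[categories == cat].mean(axis=0)
        for cat in np.unique(categories)
    }

# Hypothetical (PC1, PC2) scores and course structures for five classes.
pc_scores = [[-1.0, 0.5], [-0.5, -0.5], [1.0, 1.0], [2.0, 0.0], [0.5, 2.0]]
structures = ["lecture-only", "lecture-only", "lecture and lab",
              "lecture and lab", "lecture and seminar"]

centroids = category_centroids(pc_scores, structures)
for name, xy in centroids.items():
    print(name, xy)
```

Distances between the resulting centroid coordinates can then be compared to judge which course structures sit closest to one another in the PC1 and PC2 plane.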

Cluster Analysis

Clustering allows us to identify groups of courses with similar characteristics. We can then determine whether these groups are also characterized by a similar class size. The four clusters identified by a two-step cluster analysis on PC1 and PC2 scores show the strong differentiation between lecture-only and all other types of course structure. Clusters 1 and 2, located largely in the left-hand quadrant (Figure 4), are differentiated from clusters 3 and 4 by having a predominantly lecture course structure. Clusters 1 and 2 are differentiated by class size, with cluster 1 containing small and medium-sized classes and cluster 2 containing large classes. They are separated by the variables contributing to PC2, which include number of instructors. Clusters 3 and 4 encompass all the other courses with additional class-time resources such as seminars and labs and are separated from each other in the same way as clusters 1 and 2.
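The general workflow of grouping classes by their PC scores and then checking class size within each group can be sketched as follows. This example substitutes a basic k-means routine for the two-step cluster analysis, which is a different algorithm, so it illustrates the idea only. The scores, the size labels, and the function name `kmeans` are assumptions made for the example.

```python
from collections import Counter
import numpy as np

def kmeans(points, k, n_iter=50):
    """Basic Lloyd's k-means with deterministic farthest-point initialization."""
    pts = np.asarray(points, dtype=float)
    # Start from the first point, then repeatedly add the point farthest
    # from the centers chosen so far.
    centers = [pts[0]]
    for _ in range(1, k):
        d = np.min([np.linalg.norm(pts - c, axis=1) for c in centers], axis=0)
        centers.append(pts[np.argmax(d)])
    centers = np.array(centers)
    for _ in range(n_iter):
        # Assign each point to its nearest center.
        dist = np.linalg.norm(pts[:, None, :] - centers[None, :, :], axis=2)
        labels = dist.argmin(axis=1)
        # Recompute centers; keep the old center if a cluster is empty.
        new_centers = np.array([
            pts[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers

# Hypothetical (PC1, PC2) scores with a size category for each class.
pc_scores = np.array([
    [-2.0, -1.0], [-2.1, -0.9],   # low PC1, low PC2
    [-2.0, 1.5], [-1.9, 1.6],     # low PC1, high PC2
    [2.0, -1.0], [2.1, -1.1],     # high PC1, low PC2
    [2.0, 1.5], [1.9, 1.4],       # high PC1, high PC2
])
size_labels = ["small", "medium", "large", "large",
               "small", "medium", "large", "large"]

labels, centers = kmeans(pc_scores, k=4)
for cluster_id in range(4):
    members = [size_labels[i] for i in np.flatnonzero(labels == cluster_id)]
    print(cluster_id, Counter(members))
```

Tabulating size categories within each cluster, as in the final loop, is one way to check whether clusters formed from structure and resource variables also separate by enrollment.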

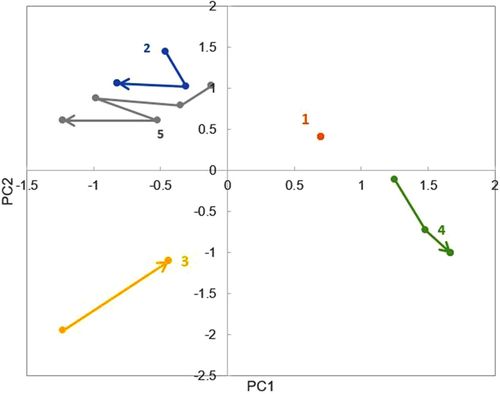

Single-Score Hotspots to Map Institutional Change

In addition to an analysis of course size, the PCA can also be used to map institutional change (changes to courses with respect to structure or resource input; Figure 5). We identified four types of course change: 1) courses that were static and underwent no change, 2) courses that made a change and then returned to the previous state, 3) courses whose development followed a specific direction (either upward or downward according to PC score), and 4) courses whose change was haphazard (i.e., in multiple directions). The courses that underwent no change whatsoever in the 4-year analysis were both large, core genetics and biochemistry courses with limited assessment methods (a midterm, a final examination, and small-value assignments). An example is BIOC 3560 (see Table 2 for the list of course codes used in the PCA and their titles). Student enrollment and all PCA variables were identical for all offerings of this course between 2010 and 2014. Single-score hotspots indicated when multiple iterations of the same course repeatedly received the same PC score and thus underwent no modification from one semester to the next.

FIGURE 5. Five types of course change. Each series of joined data points represents one course as it progresses chronologically. Some courses show (1) no change (BOT 2100), (2) a change followed by a reversal (MICR 4010), (3) steady upward change of PC score (MICR 3230), (4) steady downward change of PC score (BIOL 1090), and (5) haphazard change (MBG 4080).
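One way to make these change categories operational is to classify each course by the sequence of its PC scores over successive offerings. The rules in this sketch are an illustrative assumption, not the procedure used in the study, and `classify_trajectory` and its tolerance parameter are hypothetical.

```python
def classify_trajectory(pc_scores, tol=1e-9):
    """Classify a chronological list of PC scores for one course.

    Returns "static", "reversal", "upward", "downward", or "haphazard".
    These rules are assumed for illustration only.
    """
    steps = [b - a for a, b in zip(pc_scores, pc_scores[1:])]
    # Ignore offerings in which the score did not change.
    moves = [s for s in steps if abs(s) > tol]
    if not moves:
        return "static"        # identical score in every offering
    if all(m > 0 for m in moves):
        return "upward"
    if all(m < 0 for m in moves):
        return "downward"
    # A single change that is later undone returns the course to its start.
    if len(moves) == 2 and abs(sum(moves)) <= tol:
        return "reversal"
    return "haphazard"

# Hypothetical PC score sequences, one list per course.
examples = {
    "no change": [0.2, 0.2, 0.2],
    "scaled up": [0.0, 0.5, 1.0],
    "scaled down": [1.0, 0.5, 0.0],
    "tried and reverted": [0.5, 1.5, 0.5],
    "mixed": [0.0, 1.0, -0.5, 2.0],
}
for name, series in examples.items():
    print(name, "->", classify_trajectory(series))
```

A course classified as "static" under these rules corresponds to a single-score hotspot, because every offering lands on the same PC score.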

Directional change, the third type, was represented by MCB 2050, a second-year introductory cell biology course (Table 2). The large upward change in PC score shows the modification of only one aspect of the course: an increasing number of TAs. This is an example of a course that scaled up its resources but did not change its structure as student enrollment increased. An example that moves in the opposite direction is MBG 4110 (Table 2), which, unlike MCB 2050, modified its assessment methods to incorporate first a presentation component and then, in subsequent offerings, a series of in-class quizzes throughout the semester. Notably, MBG 4110 received no change in TA support with the addition of these components and did not undergo these changes in response to an increase in class size. This is opposite to MCB 2050, which increased its number of TAs without modifying its assessment methods.

Change followed by reversal, the second type, is represented by BIOC 2580 (Table 2), a course that showed a strong modification in one direction (the use of multiple weekly quizzes instead of a midterm examination in one offering) that was removed the following semester. This is an example of a course that modified one part and then returned to its previous state.

Courses that underwent haphazard change were all small and medium-sized upper-year courses that changed their assessment methods and number of TAs from semester to semester. This last type was found, for example, in a medium-sized third-year lab-based course (MBG 3350), whose assessment methods were modified almost every semester, with onetime and repeated instructors and a narrow range of student enrollment.

In general, the most common pattern for course modification in the department was an upward trajectory according to the PC score. That is, courses most often increased the number of TAs and instructors, scaling up rather than restructuring. About half as many courses (21% vs. 40%) moved in the downward direction, driven by changes in the assessment methods instead of the number of TAs. Interestingly, courses that modified their assessment methods did not lose TA support; these modifications did not result in fewer resources, because the number of TAs is determined through collective bargaining, and the calculation is based on the number of students in a course rather than the instructor’s needs.

More common than a change to course structure or resources were courses that underwent a change and then reverted to a previous state. Nearly 95% of the courses had unique PC scores for each iteration of the course, showing variation between semesters. However, more than a quarter of these courses made a change and then reverted to a previous state. This indicates that attempts were made to change structure or resources, but they were often not maintained in subsequent semesters.

Large classes received the same PC scores twice as often (66%) as small and medium courses (30% and 33% respectively). In this way, large classes were modified far less frequently than small or medium-sized courses. Furthermore, large classes that did change showed mostly an upward movement, demonstrating an increase in TA support or scaling up each semester instead of restructuring.

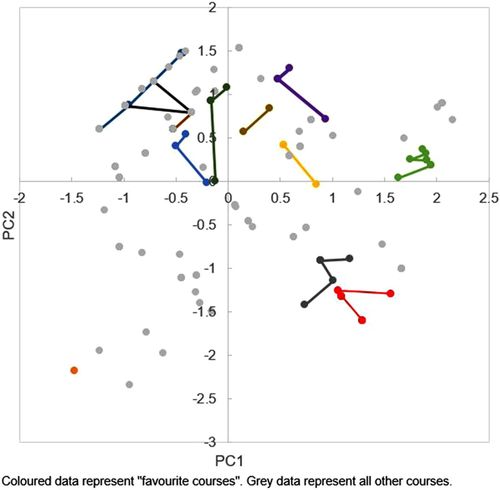

Relating the Favorite Courses to the Quantitative Analysis

The qualitative online survey included a question asking students to name their favorite courses. Figure 6 shows the distribution of the top 12 favorites according to their PCA scores. The most common typology of a favorite course is a small to medium-sized upper-year course that requires group work. After this, the most common course structure was “lecture and lab.” The only “lab-methods” course in the department was the course most commonly indicated as a favorite. Only one “lecture-only” class was mentioned by students: a third-year core biochemistry course (BIOC 3560). Otherwise, all numbers of instructors, TAs, and ranges of assessment methods were seen in the 12 favorite courses named by students.

FIGURE 6. The distribution of students’ favorite courses according to PC scores. The top 12 favorite courses named by students in an online survey are indicated by colored data points connected in chronological order (orange: BIOC 3560; red: BIOC 2580; dark gray: MICR 2420; light green: MBG 3350; yellow: MBG 3050; purple: BIOC 3570; brown connected by gray dots: MICR 3090; dark green: MICR 4330; blue: MBG 3080; brown connected by brown dots: MBG 4240; black: MBG 4280; teal: MBG 4110). Courses not listed as favorites are indicated by gray data points.

One of the goals of our project was to search for courses that were numerically large but were still indicated to be a favorite among students. While the PCA did not find a specific pattern in resource allocation or course structure to characterize a favorite course, the qualitative data suggest a more effective qualification: the instructor. The efficacy, humor, and engagement of an instructor were overwhelmingly the most common explanation given by students as to why a course was a favorite, often being the first aspect that students listed in their responses. The degree of interactivity was the next most important reason given by students. Students cited courses in which they felt supported by their professors and their peers not only to succeed academically but also to enjoy the material for the sake of learning. In this way, it appears that the perception of the instructor by the students and the community built through the class are the crucial aspects in creating a positive learning experience in a course of any size.

DISCUSSION

Our survey of students and instructors indicates themes and attitudes about class size that are similar to those that were cited as early as 85 years ago (Hudelson, 1930). The results indicate that both parties perceive instructor–student interactions to be least frequent and of lowest quality in large classes. Creating an environment for quality instructor–student interactions is now more urgent, because instructor–student interactions are a predictor of student persistence to graduation (West, 2004) and because class sizes in upper-year courses are predicted to continue to inflate as large cohorts are accepted to postsecondary institutions (Kerr, 2011).

Based on the qualitative data, the key variable that determined satisfaction from the student perspective within a course was consistently identified as the instructor. Moreover, the classes that students reported as their favorites came in all forms and sizes (verifiable through the quantitative data), implying that there is no formula for creating a well-liked course. It is instead the quality of an engaging instructor that makes a worthwhile learning experience. It follows that if instructors are capable of engaging the majority of students in a large class, reducing class size may not be a requirement for increasing engagement.

The PCA results mapped the nature and degree of change in course structure and resources over time. Within the time span of the data examined, two courses in the department underwent major revisions. Both of these changes were detected in the PCA results in addition to changes that were implemented in other courses. The results are not intended to indicate whether one method is more effective than another, since measures of student performance or satisfaction were not actively gathered to compare between groups. Instead, this showcases the ability of PCA to track these sorts of changes on a large scale, and future research could be designed to evaluate these changes. With increased focus on establishing best practices for course redesign (Gordon et al., 2009; Francis, 2012; Chiang et al., 2014), the PCA methodology could be an excellent mechanism to study the impact of these changes on various outcomes. The visual representation of data using PCA (shown in Figures 3–5) is another asset, providing a way of linking multiple variables in a single graph. With respect to policy change, PCA could be used to compare success or satisfaction rates of current practices with those of newly implemented ones. It can also demonstrate which changes (variables) are related to one another, as different variables are assembled into a few PCs. This too can help narrow down which practices or effects are relevant to a selected outcome.

The PCA results demonstrated that the strongest way to differentiate among courses of different size was by their structure. This finding is shared by Chatman (1997) in a multivariate analysis that investigated indicators of class size in private and public American postsecondary education institutions (the current study investigated a single public Canadian postsecondary institution). In Chatman’s analysis, course structures were sorted into three categories based on the relative amount of student activity (high, medium, and low). His research, in combination with this project, suggests the potential for using PCA in research that is more focused on outcomes of pedagogical approach rather than just resource investment. Whereas our study was retrospective and examined the structural aspects of courses, future studies could be cohort based and monitor the effects of differences in teaching style on various student outcomes. For example, variables such as student satisfaction, academic performance, depth of learning, or degree of critical thinking could be tracked and compared based on different in-class teaching styles. This is especially relevant given the focus on evidence-based decision making and the influx of novel teaching strategies, such as the flipped classroom and problem-based learning, in postsecondary education. Such studies could corroborate evidence in favor of adopting new teaching practices. Categorical PCA is well suited to such studies because it accommodates a wide range of data types and is designed for use with large data sets. It could even, as in Chatman’s study, be used cross-institutionally, especially as educational data become increasingly available, including data sets that are collected over several years. It could be suitable for studies in primary, secondary, and postsecondary institutions, where research interests and budgets vary widely.

CONCLUSION

Can a large class be perceived as small? Our results revealed that neither qualitative nor quantitative evidence alone can provide a simple formula for capturing the feel of a small class. From the student survey, we found that the instructor’s behavior is an important contributor to a student’s perception of class size. With the quantitative analysis of the courses, we showed that the course structure is an important variable that can be used to distinguish among courses of various sizes. We also showed that both strategies, scaling up and restructuring, have been used to manage growing class sizes. Future studies could investigate more specific outcomes, such as student performance in response to these types of changes. The combined results lead us to the following conclusion: making a class feel smaller requires adjustments on two fronts—the instructor and the course structure. The ability to map and measure institutional change over time is an excellent tool to test hypotheses about the effects of the introduction of novel instructional methods. The categorical PCA emerges as an exciting tool to investigate the multifaceted nature of learning in higher education.