Mixed-Methods Design in Biology Education Research: Approach and Uses

Abstract

Educational research often requires mixing different research methodologies to strengthen findings, better contextualize or explain results, or minimize the weaknesses of a single method. This article provides practical guidelines on how to conduct such research in biology education, with a focus on mixed-methods research (MMR) that uses both quantitative and qualitative inquiries. Specifically, the paper provides an overview of mixed-methods design typologies most relevant in biology education research. It also discusses common methodological issues that may arise in mixed-methods studies and ways to address them. The paper concludes with recommendations on how to report and write about MMR.

INTRODUCTION

An increasing number of studies in biology education are reporting the use of mixed-methods research (MMR), in which quantitative and qualitative data are combined to investigate questions of interest in biology teaching and learning (e.g., Andrews et al., 2012; Jensen et al., 2012; Höst et al., 2013; Ebert-May et al., 2015; Seidel et al., 2015). This increase coincides with general growth and expanded interest in mixed-methods approaches to research in various fields of study over the past 30 years (Plano Clark, 2010). Consequently, several handbooks and articles have been written that describe the use of mixed methods in the social and behavioral sciences (Tashakkori and Teddlie, 1998, 2010; Creswell et al., 2003; Creswell and Plano Clark, 2011; Greene, 2008; Terrell, 2011), focusing on both the theoretical underpinnings and procedural steps of conducting MMR. However, given the disciplinary ethos and divergent content perspectives of academic disciplines, it is important that researchers planning to use MMR become familiar with the theory and designs most commonly used within their disciplinary context. This article, therefore, focuses on the various ways in which quantitative and qualitative methods can be combined to address questions of interest in biology education and the many productive ways in which MMR can be used to support claims about biology teaching and learning.

The paper is organized into three parts. Part 1 provides introductory remarks that situate MMR within the larger context of research paradigms in science education. Part 2 provides a general description of mixed-methods approaches commonly found in biology education research (BER). Part 3 provides general guidelines on how to select an appropriate MMR design and attend to methodological issues that may arise when using MMR.

PART 1: UNDERSTANDING MIXED METHODS

Mixed methods emerged as a credible research design on the heels of a larger debate on research paradigms in education and the social sciences in the mid-1980s (Johnson and Onwuegbuzie, 2004; Tashakkori and Teddlie, 2010; Treagust et al., 2014). Biology researchers, however, have long used mixed-methods approaches to address issues of interest in biological sciences. It is, for example, common to determine the effect of a gene mutation by quantitative analysis and then characterize the context of that effect through qualitative analysis. It is also common to define behaviors of an animal and then count and analyze their frequency in different circumstances. In educational settings, the new approach provided a “third methodological” pathway that permitted combining quantitative and qualitative modes of social inquiry (Johnson et al., 2007; Tashakkori and Teddlie, 2010). In the words of Jennifer Greene (2008),

A mixed-methods way of thinking is an orientation toward social inquiry that actively invites us to participate in dialogue about multiple ways of seeing and hearing, multiple ways of making sense of the social world, and multiple standpoints on what is important and to be valued and cherished. (p. 20)

Greene's description captures the essence of mixed methods: a pragmatic choice to address research problems through multiple methods with the goal of increasing the breadth, depth, and consistency of research findings. Integration of research findings from quantitative and qualitative inquiries in the same study or across studies maximizes the affordances of each approach and can provide a better understanding of biology teaching and learning than either approach alone. While quantitative methods can reveal empirical evidence showing causal or correlative relationships or the effects of interventional studies, qualitative methods provide contextual information that colors the experiences of individual learners. The goal of mixed methods is not, however, to replace either the quantitative or the qualitative approach. Certain problems, such as addressing gains in standardized test scores, are better addressed through quantitative methods (e.g., Knight and Wood, 2005), and others, such as understanding the meaning students assign to reaction arrows, merit qualitative research (e.g., Wright et al., 2014). Rather, the goal of mixed methods is to build on the strengths of both methods and minimize their weaknesses when the research merits using more than one method (Creswell et al., 2003; Johnson and Onwuegbuzie, 2004). Recent studies from the biology education literature will help illustrate the types of research that benefit from a mixed-methods approach.

In a recent study that used both quantitative and qualitative methods, Seidel et al. (2015) investigated non–content related conversational language, such as procedural talk, used by course instructors in a large reform-based introductory biology classroom cotaught by two instructors. Such language, which the authors termed “Instructor Talk,” is the language used to facilitate overall learning in the classroom, for example, language used to give directions on homework assignments or justifying use of active-learning strategies. Instructor Talk is distinct from language used to describe specific course concepts. To understand the prevalence of such language in biology classrooms, the authors asked, “What types of Instructor Talk exist in a selected introductory college biology course?” This question was exploratory in nature and merited qualitative inquiry that focused on identifying the types of Instructor Talk the two instructors used. The authors’ subsequent question, “To what extent do two instructors differ in the types and quantity of Instructor Talk they appear to use?,” aimed to enhance the findings from the qualitative phase and provided ways to further study and generalize this construct in a variety of class types (Seidel et al., 2015). The authors were able to address their initial research question through analysis of classroom transcripts containing more than 600 instructor quotes, identifying five emergent categories that were present in the analyzed sessions. They followed this exploratory qualitative phase of the study with statistical analyses that compared how often the instructors used identified categories and the average instances of Instructor Talk per class session. Without first characterizing and identifying patterns of Instructor Talk through the exploratory initial qualitative data, the authors could not have addressed the second question. 
Neither qualitative nor quantitative method was sufficient to address both research questions, but combining them strengthened the overall findings of the study.

In another BER study, Andrews et al. (2012) used a mixed-methods study to investigate undergraduate biology students’ misconceptions about genetic drift. Using qualitative data analysis, the authors identified 16 misconceptions students held about genetic drift that fit into one of five broad categories (e.g., novice genetics, genetic drift comprehension). Subsequent use of quantitative methods examined the frequency of misconceptions present before and after introductory instruction on genetic drift. The quantitative data supplemented the results of the qualitative analysis and shed light on changes in student misconceptions as a result of instruction. In this study, although data collection was separated in time and space, the quantitative and qualitative analyses were integrated, and the different data sets were used to generate the categories of misconceptions about genetic drift and to corroborate the findings. Again, we see the utility of both methods within the same study.

The Andrews et al. (2012) and Seidel et al. (2015) studies illustrate the types of research problems that merit a mixed-methods study: research problems in which a single method, qualitative or quantitative, is insufficient to fully understand the problem (Creswell et al., 2003). Another role of MMR is to use qualitative work to follow up or elaborate on quantitative findings or to validate findings in multiple ways. More broadly, teaching and learning occur in social environments with specific cultural contexts, personal value systems, and classroom dynamics that color how students learn and teachers teach. In such environments, understanding the educational processes in which teachers and students engage becomes crucial to understanding how students learn. MMR is particularly appropriate for BER, because it contextualizes quantitative differences observed in BER studies, capturing the contextual, sociocultural norms and the experiential factors that characterize undergraduate biology classrooms.

PART 2: GENERAL TYPOLOGIES OF MIXED-METHODS DESIGNS

For those questions that merit a mixed-methods approach, this section of the paper describes different typologies of mixed-methods designs available for biology educators. General guidelines on the use of MMR and the methodological issues to consider are described in Part 3.

Based on a review of the literature (see Creswell et al., 2003), there are three general approaches to mixed methods—sequential, concurrent, or data transformation—that are most applicable to BER studies. These basic designs can get more complicated and advanced as merited by the phenomenon studied. More advanced variants of MMR, for example, multiphase designs or the transformative designs appropriate for social justice, are not addressed in this paper (Creswell et al., 2003; Terrell, 2011). The following paragraphs discuss each of the basic designs with respect to data-collection sequencing, method priority, and data-integration steps. As discussed below, these decisions often influence which MMR design to choose.

Sequential Designs

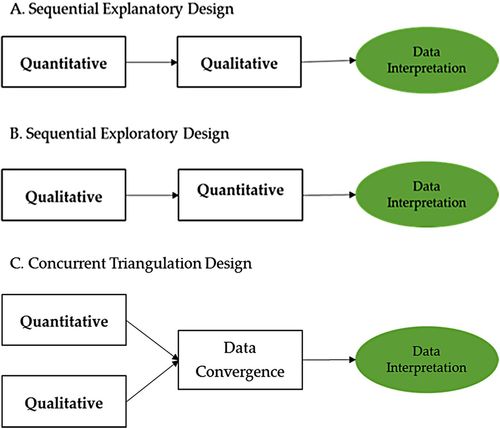

The sequential design approach implies linear data collection and analysis in which the collection of one set of data (e.g., qualitative) is followed by the collection and analysis of the other (e.g., quantitative). There are two general approaches within this design (see Figure 1, A and B) based on the implementation sequence of the data and their intended usage (Creswell et al., 2003): 1) sequential explanatory and 2) sequential exploratory. Each subdesign, along with illustrative examples in biology education, is further described in the following sections.

FIGURE 1. Basic typologies of MMR. There are three basic designs of mixed methods that differ in how data collection and analysis are sequenced: (A) sequential explanatory design, in which the quantitative method precedes the qualitative method; (B) sequential exploratory design, in which the qualitative method precedes the quantitative method; and (C) concurrent triangulation design, in which qualitative and quantitative data are collected concurrently.

Sequential Explanatory Design

The sequential explanatory approach is characterized by two distinct phases: an initial phase of quantitative data collection and analysis followed by a second qualitative data-collection and analysis phase (see Figure 1A). Findings from both phases are integrated during the data-interpretation stage. The general aim of this approach is to further explain the phenomenon under study qualitatively or to explore the findings of the quantitative study in more depth (Tashakkori and Teddlie, 2010). Given the sequential nature of data collection and analysis, a fundamental research question in a study using this design asks, “In what ways do the qualitative findings explain the quantitative results?” (Creswell et al., 2003). Often, the initial quantitative phase has greater priority over the second, qualitative phase. At the interpretation stage, the results of the qualitative data often provide a better understanding of the research problem than simply using the quantitative study alone. As such, the findings in the quantitative study frequently guide the formation of research questions addressed in the qualitative phase (Creswell et al., 2003), for example, by helping formulate appropriate follow-up questions to ask during individual or focus group interviews. The following examples from the extant literature illustrate how this design has been used in the BER field.

In an interventional study with an overtly described two-phase sequential explanatory design, Buchwitz et al. (2012) assessed the effectiveness of the University of Washington’s Biology Fellows Program, a premajors’ course that introduced incoming biology majors to the rigor expected of bioscience majors and assisted them in their development as science learners. The program emphasized the development of process skills (i.e., data analysis, experimental design, and scientific communication) and provided supplementary instruction for those enrolled in introductory biology courses. To assess the effectiveness of the program, the authors initially used nonhierarchical linear regression analysis with six explanatory variables inclusive of college entry data (high school grade point average and Scholastic Aptitude Test scores), university-related factors (e.g., economically disadvantaged and first-generation college student status), program-related data (e.g., project participation), and subsequent performance in introductory biology courses. Analysis showed that participation in the Biology Fellows Program was associated with higher grades in two subsequent gateway biology courses across multiple quarters and instructors. To better understand how participating in the Biology Fellows Program may be facilitating change, the authors asked two external reviewers to conduct a focus group study with program participants. Their goal was to gather information from participants retrospectively (2 to 4 years after their participation in the program) about their learning experiences in and beyond the program and how those experiences reflected program goals. Students’ responses in the focus group study were used to generate themes and help explain the quantitative results. The manner in which the quantitative and qualitative data were collected and analyzed was described in detail. 
The authors justified the use of this design by stating, “A mixed-methods approach with complementary quantitative and qualitative assessments provides a means to address [their research] question and to capture more fully the richness of individuals’ learning” (p. 274).

In a similar study, Fulop and Tanner (2012) administered written assessments to 339 high school students in an urban school district and subsequently interviewed 15 of the students. The goal of this two-phased sequential study was to examine high school students’ conceptions about the biological basis of learning. To address their research problem, they used two questions to guide their study: 1) “After [their] mandatory biology education, how do high school students conceptualize learning?,” and 2) “To what extent do high school students have a biological framework for conceptualizing learning?” The authors used statistical analysis (post hoc quantitative analysis and quantification of open-ended items) to score the written assessment and used thematic analysis to interpret the qualitative data. Although the particular design of the sequential explanatory model is not mentioned in the article, the authors make it clear that they used a mixed-methods approach and particularly mention how the individual interviews with a subset of students drawn from the larger study population were used to further explore how individual students think about learning and the brain. In drawing their conclusions about students’ conceptualization of the biological basis of learning, the authors integrated analysis of the quantitative and qualitative data. For example, on the basis of the written assessment, the authors concluded that 75% of the study participants demonstrated a nonbiological framework for learning but also determined that 67% displayed misconceptions about the biological basis of learning during the interviews.

Sequential Exploratory Design

The sequential exploratory approach is similarly characterized by two distinct phases: an initial qualitative phase followed by a second phase of quantitative data collection and analysis (see Figure 1B). Similar to the sequential explanatory approach, findings from both phases in this design are integrated during the data-interpretation stage. Unlike the sequential explanatory approach, the general aim of this approach is to further explore the phenomenon under study quantitatively or to perform quantitative studies to generalize qualitative findings to different samples (Tashakkori and Teddlie, 2010). Given the sequential nature of data collection and analysis, a fundamental research question in a study using this design often asks, “In what ways do the quantitative findings generalize the qualitative results?” (Creswell et al., 2003).

As a research method, the sequential exploratory approach is often the most appropriate design when developing new instruments or when a researcher intends to generalize findings from a qualitative study to different groups (Tashakkori and Teddlie, 1998, 2010; Creswell et al., 2003). Consider, for example, the case of a biology education researcher interested in examining student misconceptions in evolution. Using the sequential exploratory approach, the researcher would collect qualitative data from interviews to identify commonly held student misconceptions in evolutionary concepts. The researcher can then use the qualitative data to develop an instrument on evolution misconceptions that allows the collection of quantitative data from a large number of participants in various settings and institutions (after instrument validation and psychometric analysis). In this case, the initial qualitative data would inform the design of the instrument used to collect the quantitative data, often using identified student misconceptions as distractors. An example of studies that followed the instrument development process outlined here can be found in Hanauer and Dolan (2013) and Hanauer et al. (2012).

Pugh et al. (2014) used the sequential exploratory design in a study that investigated high school biology students’ conceptual understanding of the concept of natural selection and their ability to generatively use the newly learned concepts across knowledge domains in biology. To assess students’ transfer ability and conceptual understanding, the authors first collected qualitative data by administering open-response items to 138 students and were able to identify, on the basis of thematic analysis, particular patterns of surface and deep-level transfer. Subsequently, the authors collected quantitative data that showed a small but significant relationship between deep-level, but not surface-level, transfer and conceptual understanding. The principal methodology of the study was qualitative in nature and in turn informed the quantitative component of the study. The combination of the two methods shed light on the relationship between concept understanding and the patterns of knowledge transfer.

Strengths and Weaknesses of the Sequential Designs

In both of the sequential models described above (exploratory and explanatory), the data collection and analysis proceeded in two distinct phases. As illustrated by the examples from the BER literature, the main strengths of the sequential designs include the ability to 1) contextualize and generalize qualitative findings to larger samples (in the case of sequential exploratory); 2) gain a deeper understanding of findings revealed by quantitative studies (in the case of sequential explanatory); and 3) collect and analyze the data from each method separately. Additionally, the two-phase approach makes sequential designs easy to implement, describe, and report.

One weakness of sequential designs is the length of time required to complete both data-collection phases, especially given that the second phase is often in response to the results of the first phase. That is, collecting the data at two different time points essentially doubles the time that a single-method study would require. Moreover, because data collection is sequential, it may be difficult to decide when to proceed to the next phase. It may also be difficult to integrate or connect the findings of the two phases. For those projects with shorter time lengths, concurrent designs in which both data sets are collected in a single phase may be more appropriate. The next section of the paper provides details of concurrent designs of MMR.

Concurrent Designs

In the concurrent design, both qualitative and quantitative data are collected in a single phase. Because the general aim of this approach is to obtain a more developed understanding of the phenomenon under study, the data can be collected from the same participants or similar target populations. The goal is to obtain different but complementary data that validate the overall results. There are two basic approaches within concurrent design: 1) concurrent triangulation (Figure 1C) and 2) concurrent nested (Figure 2A). These are described below.

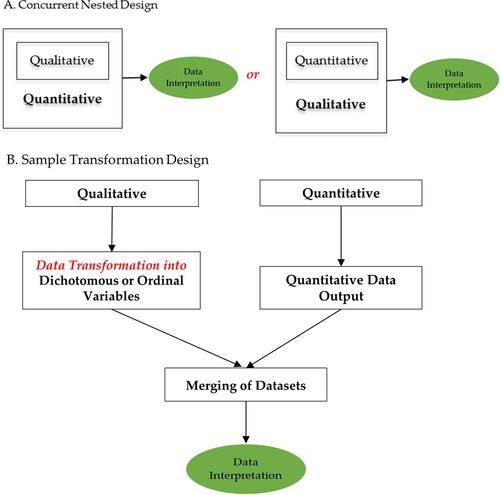

FIGURE 2. Complex typologies of MMR. Two complex forms of MMR are (A) concurrent nested design, in which either the qualitative or the quantitative method is nested within a primary quantitative or qualitative approach (in this case, the main difference hinges on data prioritization); and (B) transformation design, in which one data form is transformed to the other (e.g., qualitative to quantitative).

Concurrent Triangulation Design

The concurrent triangulation design is the most common approach used in BER studies. The main objective is to corroborate or cross-validate findings by using both quantitative and qualitative studies. Data collection and analysis are done separately for each method, and the results are merged afterward (see Figure 1C). In interpreting the overall merged results, one looks for data convergence, divergence, contradictions, or any relationship the separate data analyses reveal. This can be done using several strategies, for example, through side-by-side comparison that discusses how the findings of one data set confirm or refute findings of the other data set. As the following examples from the BER literature illustrate, one method (qualitative or quantitative) can have priority over the other in the concurrent triangulation approach.
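A side-by-side comparison of this kind can be sketched in a few lines of Python. The sketch below is illustrative only: the outcome names and direction codes are invented, and the rule that maps pairs of findings onto "convergent," "divergent," and "contradictory" is an assumption made for the example, not a procedure prescribed by the MMR literature cited here.

```python
# Hypothetical findings for the same outcomes from each method.
# Direction codes (an assumption of this sketch):
#   +1 = evidence supports the outcome, -1 = evidence against it,
#    0 = no clear evidence either way.
quantitative = {"teamwork": 1, "content_knowledge": 1, "time_management": -1}
qualitative = {"teamwork": 1, "content_knowledge": 0, "time_management": 1}


def compare(quant, qual):
    """Build a side-by-side table of findings with a comparison label."""
    rows = []
    for outcome in sorted(set(quant) | set(qual)):
        q_dir = quant.get(outcome)
        l_dir = qual.get(outcome)
        if q_dir is None or l_dir is None:
            status = "single method only"   # nothing to compare against
        elif q_dir == l_dir:
            status = "convergent"           # both methods point the same way
        elif 0 in (q_dir, l_dir):
            status = "divergent"            # one method is silent or unclear
        else:
            status = "contradictory"        # methods point in opposite directions
        rows.append((outcome, q_dir, l_dir, status))
    return rows


rows = compare(quantitative, qualitative)
for outcome, q_dir, l_dir, status in rows:
    print(f"{outcome:18s} quant={q_dir:+d} qual={l_dir:+d} -> {status}")
```

A table like this does not resolve disagreements between methods; it only makes them visible so that the interpretation can address each one explicitly.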

In a recent study that used the concurrent triangulation approach, Jensen et al. (2012) explored the effectiveness of a first-year class project in supporting student progress toward selected student learning and development outcomes. The students were required to complete a group video project focused on nutrition and healthy eating as a capstone class assignment. Using a structured rubric to track frequency data, the authors collected and analyzed quantitative measures of student behavior. They similarly collected qualitative data through observations and interviews with representative individual students and a focus group. In justifying why they used this design, the authors stated, “the data-collection techniques used in this study provide a degree of triangulation aimed at establishing validity of the conclusions drawn from the evaluation” (p. 72). The study was primarily qualitative in nature, with the objective of understanding a particular student experience validated by the quantitative measures. In this case, the authors found convergent results that strengthened the overall study—student behaviors as measured by both the quantitative and qualitative results were consistent with targeted learning and development outcomes.

In a similar manner, Höst et al. (2013) used a concurrent triangulation design to investigate the impact of using two external representations of virus self-assembly, an interactive tangible three-dimensional model or a static two-dimensional image, on student learning about the process of viral self-assembly. All the students in a biochemistry course at a Swedish university engaged in a small-group exercise that included the same series of tasks. They were randomly assigned to groups, some of which used the three-dimensional tangible model and some of which used the static images. Students completed a test before and after the group exercise. Researchers used an analysis of variance to test for an association between two factors (external representation: tangible model versus image; and testing time: pre versus post) and the score a student earned on the test. The authors found that test scores differed between the pre- and posttests but not between the two types of external representations. The researchers used qualitative analyses of open-response questions to further assess how the group exercise influenced students’ conceptual understanding of self-assembly. The findings from the qualitative analysis corroborated the findings from the quantitative analysis.

In the preceding examples of the concurrent triangulation design, the authors collected quantitative and qualitative data concurrently, using closed-ended and open-ended items. In the Jensen et al. (2012) study, the qualitative data had priority over the quantitative data. In the Höst et al. (2013) study, the quantitative data appear to have had priority over the qualitative data. In concurrent triangulation studies, either method can have priority over the other or both can be on equal footing. In both studies, the authors justified their use of the specific concurrent method as a way to triangulate their findings. The next section contrasts this triangulation approach with the concurrent nested design.

Concurrent Nested Design

In the concurrent nested design, a strong supplemental study is conducted during the data-collection and analysis phase of the primary study (see Figure 2A). In this type of study, it does not matter whether the primary study is quantitative and the nested study qualitative or vice versa. The major aim of this design, which is often used in the health sciences, is to use the nested analysis to address different research questions than those addressed by the primary method or to use the nested method to seek information about different levels of the research problem. The general idea is that a need arises to address different types of questions within the research project that require different methods (Creswell et al., 2003). Most published mixed-methods studies that use this approach tend to be experimental designs that require qualitative aspects to examine how an intervention is working or to follow up specific aspects of the experiments (Creswell et al., 2003). An illustrative example in the context of biology education would be for a researcher to implement a specific interventional study in his or her classroom and to evaluate the effectiveness of the intervention by using quasi-experimental pre/posttest measures. However, in between the two measurements, the researcher interviews or collects open-ended written responses from the students to examine students’ experiences with the intervention.

A study by Tomanek and Montplaisir (2004) used this approach. In this study, the authors examined the study habits of students enrolled in a large introductory biology class. The authors collected two types of data: 1) pre/posttest assessment data from lecture sessions that covered cell division and Mendelian genetics, and 2) preinstruction and postinstruction interviews with a purposely selected sample of 13 students. The pre/posttest items constituted the quantitative data, while the pre/postinstruction interviews constituted the qualitative data. Because the main goal of the study was to understand students’ study habits both during lecture (e.g., how they used the information presented in lectures) and outside the classroom (e.g., how they studied, what resources they used), the study was primarily qualitative. The quantitative data were concurrently collected but addressed a different question: Did the study tasks and habits in which the students engaged help them academically? The quantitative data were thus nested within the larger qualitative study, illustrating the general scheme of the concurrent nested design. This research illustrates a distinguishing feature of nested and triangulated designs: in the nested design the two methods of analysis often address different research questions, whereas in triangulated design the two methods address the same question.

Strengths and Weaknesses of the Concurrent Designs

In both of the concurrent designs described above (triangulation and nested), the data collection occurs during a single phase of the research and the analysis occurs separately. Given the shorter period of time and the separate nature of the analysis, the concurrent designs tend to be the most efficient of the mixed-methods typologies. The concurrent designs have two main drawbacks. First, the concurrent nature of data collection precludes follow-up on any interesting or confusing issues that may arise as analysis unfolds. Second, data integration may become difficult if the results contradict each other or diverge. The difficulty in this case becomes how to resolve divergent results short of declaring the study a failure. Additionally, it may be difficult to compare and contrast qualitative and quantitative data without transforming them to a common scale, for example, by transforming the qualitative data to dichotomous variables that can be subjected to statistical analysis, thus enabling comparison with the other quantitative data. However, as described later, such transformation may sacrifice the depth and the contextual data associated with the qualitative research.

Data-Transformation Designs

The data-transformation design implies changing one data set (e.g., qualitative) to another (e.g., quantitative) through either quantitating or qualitizing. Quantitating refers to the act of transforming qualitative data (codes) into quantitative data (variables), whereas qualitizing is the act of transforming numerical data (variables) into codes (or themes) that can be analyzed qualitatively (Tashakkori and Teddlie, 2010). For example, Witcher et al. (2001) examined preservice teachers’ perceptions of the characteristics of effective teachers. To address their research questions, the authors collected quantitative data but then transformed those data into six general themes (e.g., student-centeredness, enthusiasm) that were prevalent in the participants’ responses. While qualitizing quantitative data, as done by Witcher et al. (2001), is theoretically possible, in practice, quantitating of qualitative data is far more common, a practice reinforced by the rhetorical appeal of numbers and their association with rigor (Sandelowski et al., 2009). Figure 2B shows a general scheme of qualitative data that are transformed into quantitative form. As can be seen in the figure, data collection can happen sequentially or concurrently (as described earlier) for the sequential and concurrent designs. The underlying rationale for choosing this design is also similar to that described previously for sequential and concurrent designs. The only difference in this case is that one data set (quantitative or qualitative) is transformed. Comparison and merging of the two data sets occurs at the data-interpretation stage.
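The two directions of transformation can be made concrete with a minimal Python sketch. Everything in it is hypothetical: the coded excerpts are invented, the two theme labels are borrowed from the example above only for illustration, and the cutoffs used for qualitizing are arbitrary assumptions rather than recommended values.

```python
from collections import defaultdict

# Hypothetical coded interview excerpts: (participant_id, theme_code).
coded_excerpts = [
    ("P1", "enthusiasm"),
    ("P1", "student_centeredness"),
    ("P2", "enthusiasm"),
    ("P3", "student_centeredness"),
    ("P3", "student_centeredness"),  # repeated codes still count once
]
themes = ["enthusiasm", "student_centeredness"]


def quantitate(excerpts, theme_list):
    """Turn qualitative codes into 0/1 indicator variables per participant."""
    seen = defaultdict(set)
    for participant, code in excerpts:
        seen[participant].add(code)
    return {
        participant: {theme: int(theme in codes) for theme in theme_list}
        for participant, codes in sorted(seen.items())
    }


def qualitize(score, cutoffs=((2.5, "negative"), (3.5, "neutral"))):
    """Turn a numeric score (e.g., a 1-5 Likert mean) into a category label.

    The cutoffs are illustrative assumptions, not established thresholds.
    """
    for upper_bound, label in cutoffs:
        if score < upper_bound:
            return label
    return "positive"


indicators = quantitate(coded_excerpts, themes)
print(indicators)
print(qualitize(4.2))
```

Note what the indicator variables discard: how often a theme came up, in what words, and in what context. That loss is the trade-off of transformation noted earlier.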

To illustrate this design, imagine a researcher who examines student attitudes toward course-based undergraduate research experiences (CUREs). In her study, the researcher asked two questions: 1) “What are students’ overall attitudes toward CUREs?,” and 2) “Are there differences between biology and non-biology majors’ attitudes toward CUREs?” To address these questions, the researcher collected survey and in-depth interview data from students who completed an introductory biology course that uses CUREs in lab sessions. The survey questions were closed-ended and consisted of positively worded prompts (e.g., CURE labs are enjoyable and stimulating) and negatively worded prompts (e.g., CURE labs are confusing and lack directionality) on a five-point Likert scale. During the interviews, the researcher used a semistructured interview protocol to further probe the students’ overall attitudes toward CUREs. Analyses of the data proceeded as follows (refer to Figure 2B):

The quantitative survey data were entered into a chosen database (e.g., Excel) and organized under two broad categories of positive and negative attitudes.

The qualitative interview data were also analyzed and coded as positive and negative responses. This data set was then quantitated into dichotomous variables (0 or 1) based on the absence or presence of negative and positive responses.

The two data sets were merged, and the combined data were analyzed for association using statistical analyses.

The overall data interpretation examined the prevalence of positive versus negative attitudes in the student population.

In this hypothetical case, the qualitative interview data were transformed into dichotomous variables corresponding to negative and positive attitudinal aspects of CUREs, categories that were determined before data collection. The overall data analysis occurred after the qualitative and the quantitative data were integrated. The goal of the interview data was to capture any contextual variables that were not explained by the survey data (not to triangulate the findings). That is, the interview data were used to interpret the survey data and fill any gaps that the survey did not capture, because the survey might not have identified a priori everything that students might have feelings about with respect to CURE labs. The combined data thus provided a more complete picture than was possible with the survey (i.e., quantitative) data alone.
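The four analysis steps in this hypothetical case can be sketched in code. The following is a minimal illustration only, not the procedure of any cited study: the student identifiers, item names, Likert responses, interview codes, the cutoff of 3 for a "positive" survey attitude, and the choice of the phi coefficient as the measure of association are all assumptions made for the example.

```python
from math import sqrt

# Hypothetical survey data: five-point Likert responses per student.
# Items whose names end in "_neg" are negatively worded and are reverse-scored.
survey = {
    "s1": {"enjoyable": 5, "stimulating": 4, "confusing_neg": 2},
    "s2": {"enjoyable": 2, "stimulating": 2, "confusing_neg": 5},
    "s3": {"enjoyable": 4, "stimulating": 5, "confusing_neg": 1},
    "s4": {"enjoyable": 3, "stimulating": 2, "confusing_neg": 4},
}

# Hypothetical codes assigned to each student's interview during qualitative analysis.
interview_codes = {
    "s1": ["positive", "positive"],
    "s2": ["negative"],
    "s3": ["positive", "negative"],
    "s4": ["negative", "negative"],
}


def survey_score(responses):
    """Mean attitude score after reverse-scoring negatively worded items (6 - value)."""
    scores = [6 - v if item.endswith("_neg") else v for item, v in responses.items()]
    return sum(scores) / len(scores)


def quantitate(codes):
    """Turn interview codes into dichotomous (0/1) presence variables."""
    return {"int_pos": int("positive" in codes), "int_neg": int("negative" in codes)}


def merge(survey_data, codes_by_student):
    """Merge both data sets on student ID (students present in both only)."""
    merged = {}
    for sid in survey_data.keys() & codes_by_student.keys():
        # Assumed cutoff: a mean above the scale midpoint counts as a positive attitude.
        row = {"survey_pos": int(survey_score(survey_data[sid]) > 3)}
        row.update(quantitate(codes_by_student[sid]))
        merged[sid] = row
    return merged


def phi(rows, x, y):
    """Phi coefficient for two dichotomous variables from a 2 x 2 table."""
    rows = list(rows)
    a = sum(1 for r in rows if r[x] and r[y])
    b = sum(1 for r in rows if r[x] and not r[y])
    c = sum(1 for r in rows if not r[x] and r[y])
    d = sum(1 for r in rows if not r[x] and not r[y])
    denom = sqrt((a + b) * (c + d) * (a + c) * (b + d))
    return (a * d - b * c) / denom if denom else float("nan")


merged = merge(survey, interview_codes)
phi_pos = phi(merged.values(), "survey_pos", "int_pos")
phi_neg = phi(merged.values(), "survey_pos", "int_neg")
```

Note that student `s3` voiced both positive and negative views in the interview, yet each becomes a single 0/1 flag after transformation; this is the loss of depth that the transformation designs trade for comparability.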

The study by Ebert-May et al. (2015) is also an example of this type of research. In their study, Ebert-May and colleagues examined the extent to which biology postdoctoral fellows (postdocs) believed in and implemented evidence-based pedagogies after completion of a 2-year professional development program. The authors used the Approaches to Teaching Inventory (Trigwell and Prosser, 2004) and local surveys to characterize the postdocs’ beliefs about teaching and their knowledge of and experiences with active-learning pedagogies. To capture the postdocs’ teaching practices, the participants submitted videos of at least two complete class sessions for each full course that they taught while participating in the professional development program. To analyze the teaching practices captured by the videos, the authors chose a validated measure of classroom practice, the Reformed Teaching Observation Protocol (Sawada et al., 2002), to measure the degree to which classroom instruction used active-learning pedagogies. The authors did not develop a thematic analysis of the postdocs’ teaching practices but rather transformed the qualitative video data into numerical units that were analyzed with statistical tools. Thus, despite the collection of both quantitative data (i.e., surveys) and qualitative data (i.e., videos), only quantitative data-analysis strategies were ultimately used to examine their research question.

Strengths and Weaknesses of the Transformation Designs

The transformation designs enable researchers to convert qualitative data into a quantitative format to meet specific goals of quantitatively oriented research, such as evaluating the effect size of an intervention or generalizing findings. This advantage, however, also reveals a major weakness of this design: transforming qualitative data into quantitative data causes some of the richness and depth of the qualitative data to be lost. Some researchers (e.g., Bazeley, 2004) contend that transforming qualitative data into dichotomous variables makes them one-dimensional and strips them of the flexibility associated with thematic analysis; that is, the quantitated data are no longer amenable to the analysis of emergent themes that is characteristic of qualitative research. For this reason, the transformation designs may be most effective when the focus is quantification of a phenomenon rather than an in-depth, comprehensive understanding of the phenomenon.

PART 3: PRACTICAL GUIDELINES AND ISSUES TO CONSIDER

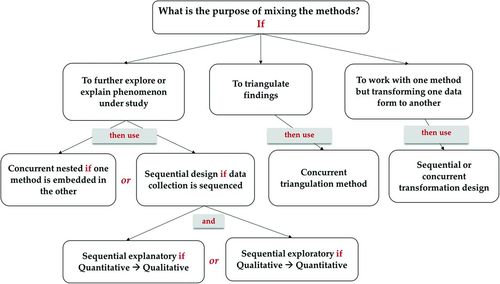

Having determined that a research question merits a mixed-methods approach, the researcher must select an appropriate MMR design. As we have seen, the choice of design is driven mainly by data-collection sequencing, method priority, and the planned data-integration steps. Figure 3 provides summary guidelines on how to select a specific design among the different MMR typologies discussed in the previous section. In this section, we discuss major methodological issues that may arise during the study design.

FIGURE 3. MMR design decision tree. This if–use dichotomous key is designed to help researchers select an appropriate MMR design based on the intent of their research. Refer to Part 2 for the main differences between the different MMR designs.

Methodological Issues to Consider

In addition to issues surrounding the selection of an appropriate MMR design, several methodological issues such as those listed in Table 1 may arise. These issues (sampling, participant burden, data analysis, and transparency) are crucial in all research methods but more so when mixing quantitative and qualitative methods. For example, if a researcher collects both qualitative and quantitative data from the same participants, what burden does that place on the participants? On the other hand, if the researcher collects data from different participants, what complications does that present for data analysis? One has to be cognizant of both the burden and the complexity that arise from data collection when conducting a mixed-methods study.

| Issues to consider | Questions to ask |
|---|---|
| 1. Sample size | What sample size is appropriate for the research question being addressed? |
| ○ Sample size is likely to vary between quantitative and qualitative work due to practical concerns like money, time, effort, and the main purposes of the study. | |
| ○ A targeted or deliberate sample often better meets study objectives in qualitative work, whereas a large sample is necessary for quantitative work. One must consider trade-offs in sample size when deciding which method should take priority in the chosen design. | |
| 2. Participant burden | What burden is placed on participants? |
| ○ If collecting both qualitative and quantitative data, do both kinds of data need to be collected from the same individuals? | |
| ○ A researcher must consider what issues could arise if data are collected from different participants. | |
| 3. Analysis and interpretative issues | What to consider when analyzing the data? |
| ○ One must consider how analysis of different types of data can strengthen the collection and analysis of the other data type, and plan the study accordingly. | |
| ○ One must also recognize that study design may need to change midstudy based on early findings. | |
| 4. Transparency | What to report? |
| ○ For each design, one must report how the mixed-methods study design addressed shortcomings often associated with single-method studies. | |
| ○ For sequential designs, how did the results in the first phase inform research processes in the second phase? | |
| ○ For concurrent designs, how were data integrated, especially if the findings diverged? | |
| ○ For data-transformation designs, was the richness and depth associated with the qualitative approach lost when data were transformed? If so, does this loss affect study outcomes? | |

Sampling is one issue that may present complications. Sample size is likely to vary between quantitative and qualitative work because of practical concerns such as money, time, and effort, but also because the purposes of these methods differ. The purpose of qualitative work is generally not to generalize to a broader population, so it does not require the large random sample that a quantitative study may need. The challenge for a researcher designing a mixed-methods study is to consider questions that are specific to quantitative analyses (e.g., statistical power) and those that are specific to qualitative analysis (e.g., variations in views and perspectives, representativeness of the qualitative sample). Thus, one has to consider what sample size is appropriate to make useful inferences from both the quantitative and qualitative data.
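For the quantitative strand, the question of power can be made concrete with a rough sample-size calculation. The sketch below is an illustration under stated assumptions, not a recommendation from the sources cited here: it assumes a two-sided comparison of two independent group means (for example, biology versus non-biology majors), a standardized effect size (Cohen's d), and the normal approximation n = 2(z₁₋α/₂ + z_power)² / d² per group. The function name and default values are hypothetical.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate participants needed per group for a two-sided,
    two-group comparison of means, using the normal approximation.

    effect_size is the standardized mean difference (Cohen's d).
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_power = NormalDist().inv_cdf(power)          # quantile for the desired power
    return ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


# Smaller expected effects require substantially larger survey samples.
for d in (0.2, 0.5, 0.8):
    print(f"d = {d}: about {n_per_group(d)} participants per group")
```

Because the normal approximation slightly underestimates the sample size implied by a t test, the result is best treated as a lower bound for planning. No comparable formula governs the qualitative strand, where the sample is chosen for the range of perspectives it captures rather than for statistical power.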

Certain sampling issues are specific to mixed-methods approaches. For example, one must consider whether the interview sample must be a subset of the quantitative sample or drawn from a different population. If it is a subset, how will that subset be targeted: should it represent extreme performances, or should it be large enough to capture the most common groups? There are advantages and disadvantages to both approaches. Choosing to represent extreme performances allows a smaller sample but necessitates a sequential or at least a nested design. If, on the other hand, one chooses to interview a sample large enough to capture the most common groups, the trade-off is between the investment of time and resources and the ability to make generalizable inferences as a result of the large sample size. These issues require thought and attention as one designs a mixed-methods study.
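The two ways of drawing an interview subset from the quantitative sample can be contrasted in a short sketch. Everything here is hypothetical and chosen only for illustration: the student identifiers, scores, group labels, function names, and the fixed random seed. Extreme-case selection needs the quantitative scores in hand first, which is why it implies a sequential or nested design.

```python
import random


def extreme_cases(scores, k):
    """Return the k lowest- and k highest-scoring participants."""
    ranked = sorted(scores, key=scores.get)  # participant IDs, lowest score first
    if 2 * k > len(ranked):
        raise ValueError("k is too large for the available sample")
    return ranked[:k] + ranked[-k:]


def stratified_sample(groups, per_group, seed=0):
    """Randomly draw up to per_group participants from each group."""
    rng = random.Random(seed)  # seeded so the selection can be reported and reproduced
    by_group = {}
    for sid, group in groups.items():
        by_group.setdefault(group, []).append(sid)
    sample = []
    for group in sorted(by_group):
        members = sorted(by_group[group])
        sample.extend(rng.sample(members, min(per_group, len(members))))
    return sample


# Hypothetical quantitative results and group membership for six students.
scores = {"s1": 55, "s2": 91, "s3": 72, "s4": 38, "s5": 84, "s6": 67}
majors = {"s1": "biology", "s2": "biology", "s3": "non-biology",
          "s4": "non-biology", "s5": "biology", "s6": "non-biology"}

extremes = extreme_cases(scores, k=2)          # small, targeted interview sample
by_major = stratified_sample(majors, per_group=2)  # covers each common group
```

Recording the selection rule and the seed alongside the interview protocol also supports the transparency in reporting discussed later in this section.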

Data integration is another methodological issue that may arise. For example, in sequential designs, data analysis occurs separately, and findings are integrated at the interpretation stage. In contrast, when using concurrent triangulation designs, data analysis occurs simultaneously. Theoretically, data analysis can occur at any point in the research process. So, when is the best time for analyzing the data, and how should they be integrated? Some authors (e.g., Yoshikawa et al., 2008) argue that separating the analysis of quantitative and qualitative data is not the best methodological choice and that it is preferable to integrate the results throughout the analysis phase of the research project, which can yield rich integration across methods and analyses. Such integration, however, requires competence in both qualitative and quantitative methods, which call for different skill sets. This challenge can be addressed through collaboration, with team members who have the relevant skills taking the lead on specific aspects of the research while communicating results to the rest of the team.

Finally, given the varied nature and purposes of the different methods used in MMR, it is important to report in detail how analyses are conducted. For sequential designs, the need is to discuss matters such as how results from the first phase informed data collection and analysis in the second phase. For concurrent designs, the need is to discuss what strategies are used to resolve conflicts that may arise from contradictory results (e.g., gathering more data to address the conflict). One must also describe how it was ensured that the depth and flexibility associated with qualitative data were not lost in the analysis, especially if a transformation design was used. If at all possible, it helps to publish data-collection tools such as interview protocols, quantitative instruments, and visual representations of data-integration plans. That is, methodological transparency becomes an important consideration in mixed-methods studies.

Recommendations for Writing about MMR

When reporting a mixed-methods study, the writing must

communicate the intent of the study (e.g., “this mixed-methods study examined …”);

specify which design was used (e.g., “we used a sequential exploratory design to …”);

describe how both data forms were collected (e.g., “through structured interviews, we addressed the question of … participants were also surveyed …”);

provide the rationale for why both quantitative and qualitative data sets were collected (e.g., “the qualitative study addressed [i.e., the research question]; the quantitative study addressed [i.e., the research question]…”); and

describe how validity and reliability (or “trustworthiness”) were established in the chosen design.

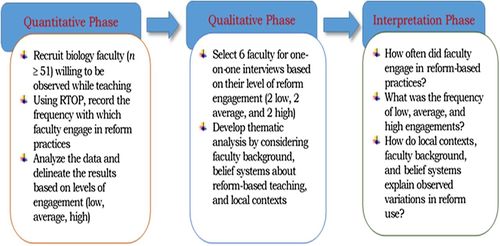

Ivankova et al. (2006) provide a sample MMR study featuring most of the elements outlined above and discuss additional guidelines on how to communicate about MMR studies. Table 2 provides further references on general approaches to MMR and writing about mixed methods, including the use of diagrams. Mixed methodologists particularly recommend the use of visual representations to depict the procedural steps involved in a mixed-methods study, such as the one shown in Figure 4 for a hypothetical two-phased sequential explanatory study. As can be seen in Figure 4, the visual diagram depicts the progression of research activities from data collection and analysis in the initial quantitative phase to qualitative data collection and analysis in the second phase to questions that may help integrate and interpret the findings. Most studies in the MMR literature use similar visual depictions that portray the complexity and the sequence of research activities in MMR studies (Ivankova et al., 2006; Creswell and Plano Clark, 2011).

FIGURE 4. Sample diagram for a hypothetical BER study. Researchers using mixed-methods approaches are encouraged to visually depict the procedural steps involved in their study, as shown in this figure.

| Topic | Reference |
|---|---|
| Key references on MMR (general approaches and methodological issues) | Creswell and Plano Clark (2011). Designing and Conducting Mixed Methods Research, 2nd ed., Thousand Oaks, CA: Sage. |
| | Morgan (2007). Paradigms lost and pragmatism regained: methodological implications of combining qualitative and quantitative methods. Journal of Mixed Methods Research 1, 48–76. |
| | Tashakkori and Teddlie (1998). Mixed Methodology: Combining Qualitative and Quantitative Approaches, Thousand Oaks, CA: Sage. |
| | Tashakkori and Teddlie (2010). SAGE Handbook of Mixed Methods in Social and Behavioral Research, 2nd ed., Thousand Oaks, CA: Sage. |
| References for providing justification for mixed-methods studies | Bryman (2006). Integrating quantitative and qualitative research: how is it done? Qualitative Research 6, 97–113. |
| | Greene (2008). Is mixed methods social inquiry a distinctive methodology? Journal of Mixed Methods Research 2, 7–22. |
| | Johnson and Onwuegbuzie (2004). Mixed methods research: a research paradigm whose time has come. Educational Researcher 33(7), 14–26. |
| References for how to write about MMR | Creswell and Plano Clark (2011). Designing and Conducting Mixed Methods Research, 2nd ed., Thousand Oaks, CA: Sage. |
| | Ivankova et al. (2006). Using mixed methods sequential explanatory design: from theory to practice. Field Methods 18, 3–20. |
| | Plano Clark and Badiee (2010). Research questions in mixed methods research. In: Handbook of Mixed Methods Research, 2nd ed., ed. A. Tashakkori and C. Teddlie, Thousand Oaks, CA: Sage, 275–304. |

CONCLUSION

In the realm of biology education, the social nature of educational inquiry often merits the use of multiple perspectives, as was the case with the BER studies cited in this paper. Given the various approaches of mixed methods discussed here and elsewhere (Creswell et al., 2003; Tashakkori and Teddlie, 2010), it is important for mixed-methods researchers to describe the decisions that went into their MMR design selections and guided their research projects. Three factors were discussed in this paper that can guide that selection: data-collection sequencing, method priority, and data integration. Understanding how these factors affect which MMR design to select, being clear about data-analysis procedures, and attending to methodological issues that arise will strengthen MMR studies in our field and enrich biology teaching and learning through the use of multiple perspectives.

ACKNOWLEDGMENTS

I thank Drs. Anita Schuchardt and James Nyachwaya for close reading of this article and the editor and two anonymous reviewers for their constructive feedback on an earlier version of this article.