Moving the Needle: Evidence of an Effective Study Strategy Intervention in a Community College Biology Course

Abstract

Many science, technology, engineering, and math (STEM) community college students do not complete their degree, and these students are more likely to be women or in historically excluded racial or ethnic groups. In introductory courses, low grades can trigger this exodus. Implementation of high-impact study strategies could lead to increased academic performance and retention. The examination of study strategies rarely occurs at the community college level, even though community colleges educate approximately half of all STEM students in the United States who earn a bachelor’s degree. To fill this research gap, we studied students in two biology courses at a Hispanic-serving community college. Students were asked their most commonly used study strategies at the start and end of the semester. They were given a presentation on study skills toward the beginning of the semester and asked to self-assess their study strategies for each exam. We observed a significantly higher course grade for students who reported spacing their studying and creating drawings when controlling for demographic factors, and usage of these strategies increased by the end of the semester. We conclude that high-impact study strategies can be taught to students in community college biology courses and result in higher course performance.

INTRODUCTION

A substantial proportion of students who begin a degree in science, technology, engineering, and math (STEM) will not complete it. A study that tracked more than 13,000 STEM students in associate’s or bachelor’s degree programs in the United States for 6 years found that 48% of students either switched to a non-STEM major or left college without earning a degree (Chen, 2013). For biology students, the rates were nearly identical: 46% of bachelor’s students left the discipline (Chen, 2013). Such attrition is problematic for meeting the increased demand for STEM workers in the United States (National Science Board, 2007; National Science and Technology Council, 2018).

The characteristics of students who leave STEM academic pathways raise concerns about equity. Students who are female and/or first-generation, have low socioeconomic status, or were historically excluded based on their ethnicity or race are less likely to complete a STEM degree. Students in the lowest quartile for income had attrition rates (24%) that were almost twice those of students in the highest income quartile (13%; U.S. Department of Education, 2018). The 6-year completion rate (for a certificate and up to a 4-year degree) for students who started in 2014 at 2- or 4-year schools was 51% for students who are Asian, 49% for students who are White, but 36% for students who are Hispanic and 28% for students who are Black (Causey et al., 2020).

An important population to focus on is students from community colleges. The mission of community colleges is open access and includes significant emphasis on both transferring students to 4-year degrees and providing vocational training and certificates (Bragg, 2001; Labov, 2012). Community colleges across the United States enrolled about 5.5 million students in Fall 2019, roughly 32% of all undergraduates (Community College FAQs, 2021). Nearly half of the students receiving a bachelor’s degree in STEM from the University of California come from a California Community College (2021). Community colleges also provide a more affordable option than 4-year colleges. Average full-time tuition in 2019–2020 was $3700 per year at community colleges, compared with $10,400 annually at public 4-year colleges (Ma et al., 2019). Only 36% of community college students take out loans, compared with 60% of students at public 4-year institutions (Community College FAQs, 2021). Community colleges also support large numbers of historically underrepresented students. Fifty-five percent of undergraduate students who are Hispanic and 44% of undergraduate students who are Black attended community college (Community College FAQs, 2021).

While community colleges are the primary starting place for many undergraduate students, these students deal with more barriers to completion. A higher proportion of community college students work full-time (47% vs. 41%, Campbell and Wescott, 2019). Students who are parents are much more likely to enroll in community college (Reed et al., 2021). Many community college students are capable of being successful in transfer-level courses (Belfield and Crosta, 2012; Bahr et al., 2019), and surveys show they spend the same amount of time on their course work as students at primarily undergraduate institutions or research universities (Freeman et al., 2020). However, because students entering community college often have low scores on math and reading proficiency exams (Clovis and Chang, 2021), almost 70% of community college students are placed in developmental courses (historically called remedial courses; Center for Community College Student Engagement, 2016). Some community college systems now allow new students to skip developmental courses, which increases their persistence in college but can lower their grades as they work to master the material (Park et al., 2018). Additionally, students who have financial limitations or are supporting families are less likely to graduate or graduate quickly (Johnson et al., 2016). Given the challenges that community college students face, effective study strategies are critical and can help students reduce the need for developmental courses.

This study focuses on the effect of providing high-impact study strategies to community college students in biology classrooms. We hypothesize that equipping students with effective study strategies during the early years of college, particularly at a community college, will lead to increased success in the form of higher grades. Because community college students often have less college preparation, they are more likely to require explicit instruction on effective study strategies. Teaching students to use high-impact study strategies can boost academic achievement, especially for students at risk of attrition (Rodriguez et al., 2018).

Not all learning strategies are equally effective for difficult exams at the college level, but many students assume they can use techniques that were effective in high school (McCabe, 2011). Wood et al. (1998) surveyed 50 high school students and 50 university first-year students and found both used rereading of notes and text as the most common strategy. Other common strategies for new college students are flash cards (Karpicke et al., 2009), recopying notes (Persky and Hudson, 2016), and watching video lectures (Rodriguez et al., 2018).

Current educational psychology research argues instead that two study strategies show the greatest effects on learning: self-testing and spacing (Dunlosky et al., 2013). But these strategies are not always used by students or by all students equally. Self-testing consists of any attempt to retrieve course content from memory. Examples are completing practice problems or writing out course content without looking at notes. Self-testing is a strategy used by many students, with Hartwig and Dunlosky reporting 71% of students (2012), and Morehead et al. reporting 72% of students (2016) saying they self-test, but this strategy is less often used by students historically excluded from STEM because of race or ethnicity (Rodriguez et al., 2018; Williams et al., 2021). Spacing is spreading out studying across multiple days (Carpenter, 2012). Studies that surveyed students about their study timing found many students do use spacing (47% in Hartwig and Dunlosky, 2012; 48% in Morehead et al., 2016; 64% in Rodriguez et al., 2018), though clearly there is room for improvement.

A third study strategy also has experimental support but is less often mentioned in lists of recommended study strategies: the use of drawings (both representational drawings and concept maps). Van Meter and Garner (2005) describe a generative theory of drawing that comes from Mayer’s more general cognitive theory of multimedia learning. Drawing an image that represents a scientific process (such as stages of a bacterial infection or steps of an action potential) requires students to select information (often from text), organize the information, form a mental representation, and then translate this newly formed model to a physical representation. While student representational drawing is backed by research (e.g., Bobek and Tversky, 2016) and occasionally recommended (Fiorella and Mayer, 2016), the research also indicates implementation can be difficult (Fiorella and Zhang, 2018). Concept maps, another form of generative drawing, were developed as a learning tool in the 1970s (Novak and Gowin, 1984). In this type of drawing, students organize the relationships between concepts or steps in a pathway as text boxes and labeled arrows. Like representational drawing, the drawing of concept maps has been shown to improve learning (Blunt and Karpicke, 2014; Wong et al., 2021), but it is likely context specific to disciplines that have significant pathways to memorize and abstract concepts to understand (Novak, 1990). Drawing of any type is infrequently used, with Hartwig and Dunlosky (2012) finding only 15% of students using drawing and Morehead et al. (2016) finding only 24% of students using drawing as a main study strategy.

This study explores the impact of a community college instructor encouraging students to reflect upon their current study skills and consider changing their strategies to ones that the literature has demonstrated to be high impact. The instructor (author S.V.) emphasized spacing, analyzing textbook practice problems, and creating representational drawings and concept maps. Students were given a single presentation on high-impact study strategies. After each exam, they were encouraged to decide whether their current study strategies were improving their learning and to modify their strategies if they felt this was not the case. This post-exam work was done with a paper “exam wrapper” filled out after each exam (Lovett, 2013). While the instructor did not emphasize metacognitive skills in general in their course, the encouragement to reflect on the effectiveness of studying is recommended as metacognition (Tanner, 2012). Students were surveyed on their study strategies at the beginning and end of the semester. We report here on the results of this intervention. Specifically, we addressed the following research questions:

What study skills do community college students use, and do they differ based on gender, race, or age?

What study skills are correlated with academic achievement in two community college courses?

Can an intervention consisting of exam wrappers and an instructor-led discussion of study skills increase the use of high-impact study skills among community college students?

METHODS

Participants

The data for this study were collected from a single suburban community college in the midwestern United States. The college is designated as a Hispanic-serving institution, meaning that student enrollment is at least 25% Hispanic (U.S. Department of Education, 2019). Included in our study were students from one section of a four-credit sophomore-level anatomy and physiology course (51 students completed the course, 25 students completed both surveys) and one section of a four-credit, sophomore-level microbiology course (48 students completed the course, 27 students completed both surveys). These courses were taught by the same instructor during Fall 2019. Approval for our study was provided by the college’s institutional review board.

Data Collection

We acquired information on age, reported race, and gender from the college. In addition to demographic data, we also collected indicators of academic achievement that included grades on five lecture exams and the final course grade, which included scores from exams, lab assignments, quizzes, and other work. Exams in the two classes were similar: Anatomy & Physiology was roughly 50% recall, 50% application/case study. Microbiology exams were roughly 30% recall, 20% connection of different concepts, 50% application/case study.

A survey that assessed study habits was administered to students twice during each course: once during week 2 of the 16-week semester (pre survey) and the second during week 16 (post survey). All students who completed both surveys were awarded 5 points of extra credit. The survey asked students to 1) indicate whether they engaged in spacing or cramming and 2) identify their top three study strategies (based on frequency of use). Our survey was a slightly modified version of the survey instrument used by Morehead et al. (2016). The questions used in this research project are shown in Table 1.

| Survey Questions |
|---|
| 1. Which of the following best describes your study patterns? |
| 2. Select the top 3 study strategies you use most regularly. Please select ONLY 3. |

Intervention

The intervention consisted of a presentation on high-impact study skills and repeated exam wrappers (see Supplemental Material). During the third week of the semester, 1 week after the pre survey was completed, students were given a 15-minute presentation by the instructor on the benefits of evidence-based study practices. The focus of this presentation and subsequent class discussion was to inform students of the benefits of spacing and choosing high-impact study skills such as studying practice problems and making drawings.

Additionally, after every lecture exam in each course, an exam wrapper was administered in class for students to reflect on their study habits for that exam (Schuler and Chung, 2019). The exam wrapper prompted students to reflect on two components relevant to the study: exam preparation (use of study strategies) and adjustments for future learning (modifications to study strategies to better prepare for the next exam). Questions on the exam wrapper consisted of a mix of yes/no, open-ended, and Likert-scale items. Each course had five lecture exams, with an exam wrapper administered after each exam (administered during weeks 4, 7, 9, 12, and 15).

Statistical Analysis

Data analyses were carried out using the MASS (Venables and Ripley, 2002) and lme4 packages (Bates et al., 2015) in the open-source R programming environment (R Core Team, 2017). McNemar tests were used to compare student use of study strategies before and after the intervention. The McNemar test is used for matched pairs of subjects to determine whether there is marginal homogeneity (McNemar, 1947). More specifically, the McNemar test asks whether there was a higher proportion of students who used a particular study strategy at the end of the term (post) compared with the beginning of the term (pre). The McNemar test is a nonparametric test for the difference in proportions of paired samples and is valid as long as the sum of the discordant pairs is at least 10 (McNemar, 1947). The assumptions of this test were met for our data. We note that the sum of the discordant pairs is represented by the total number of students who switched strategies. Regression analyses were used to determine whether course performance is associated with study skills after controlling for student demographics. The final model for course performance was fit to the data using linear mixed-effects models to account for the correlation of students nested within a class (Theobald and Freeman, 2014; Theobald, 2018), models which are well studied (Fahrmeir and Tutz, 2001; Diggle et al., 2002) and developed by Laird and Ware (1982). Course performance is modeled as a linear combination of the student-level covariates and the random error, which represents the influence of class on the student that is not captured by the observed covariates. The variables considered for the model were demographic characteristics and each of the study strategies. Variable selection was performed using stepwise regression to find the combination of study strategies and demographic characteristics that were most predictive of course performance. 
For linear mixed models, increasing the cluster size increases the statistical power to estimate the random effects (Austin and Leckie, 2018), but small cluster sizes do not lead to serious bias (Maas and Hox, 2005; Clarke and Wheaton, 2007; Clarke, 2008; Bell and Rabe, 2020). Moreover, Maas and Hox (2005) found that, for sample sizes greater than 50, the estimates of the regression coefficients, the variance components, and the standard errors are unbiased and accurate. Thus, although our course clusters are only moderate in size, our overall sample size is above 50, and both are large enough to fit linear mixed models that yield unbiased estimates of regression coefficients and standard errors.
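
As an illustration of the paired pre/post comparison described above, the following minimal Python sketch computes a two-sided exact (binomial) McNemar p value from the two discordant counts. It is a sketch under stated assumptions, not the analysis code used in this study (which was run in R): the function name `mcnemar_exact` is hypothetical, the exact binomial variant is assumed rather than taken from the text, and the counts are invented for illustration.

```python
from math import comb


def mcnemar_exact(gained: int, lost: int) -> float:
    """Two-sided exact McNemar p value from the discordant pair counts.

    gained: students who did not report the strategy pre but did post.
    lost:   students who reported the strategy pre but not post.
    Under the null hypothesis of marginal homogeneity, each discordant
    pair is equally likely to fall in either direction.
    """
    n = gained + lost
    if n == 0:
        return 1.0
    k = min(gained, lost)
    # Probability of a split at least this lopsided in one direction.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Hypothetical counts for illustration only (not taken from the study).
p = mcnemar_exact(gained=12, lost=3)
print(f"discordant pairs = {12 + 3}, p = {p:.4f}")
```

Only the students who switched (the discordant pairs) enter the calculation, which is why the validity condition above is stated in terms of their sum.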

RESULTS

Student Characteristics

A total of 52 students completed both the pre and post surveys, a 60% response rate. Of these participants, 43.7% of students were from traditionally underrepresented ethnic or racial populations (96% of these were Hispanic), 72.9% were first generation, and 84.6% identified as women. About half the students (54%) were 18–22 years old, while the remaining students (46%) were 24–50 years old; none of the participants was 23 years old. We split at age 22 because it was the median age (it gave us a roughly 50-50 split) and it naturally split traditional age learners and adult learners. Most of the students (65.4%) had part-time status. We did not receive institutional data on how many other STEM courses had been taken by our students when they took either of the courses studied in this project (Microbiology and Anatomy & Physiology). For the purposes of this project, the results for both classes are combined.

Research Question 1: What Study Skills Do Students Use, and Do They Differ Based on Gender, Race, or Age?

Students were asked two questions at the beginning and the end of the course: whether they spaced their studying, and what their top three most regularly used study strategies were. Students at the beginning of the course were rather evenly split on spacing, with 55.2% saying they spaced out their studying over multiple days, and 44.8% saying they did most of their studying right before the test. By the end of the semester, spacing had become more common, with 63.9% of students saying they used it, and only 36.1% saying they did not (Table 2A and Figure 1).

| A. Spacing | Pre (%) | Post (%) |
|---|---|---|
| I most often do my studying right before the test | 44.8 | 36.1 |
| I most often space out my study sessions over multiple days/weeks | 55.2 | 63.9 |

| B. Study technique | Pre (%) | Post (%) |
|---|---|---|
| Reread chapters, articles, notes, etc. | 55.8 | 57.7 |
| Test yourself with questions or practice problems | 36.5 | 50.0 |
| Use flashcards | 53.9 | 38.5 |
| Make diagrams, charts, or pictures | 9.6 | 32.7 |
| Condensing/summarizing your notes | 17.3 | 30.8 |
| Study with friends | 23.1 | 21.2 |
| Underlining or highlighting while reading | 42.3 | 19.2 |
| Watch/listen to recorded lessons (instructor or outside source) | 21.2 | 15.4 |
| Recopy your notes word-for-word | 11.5 | 13.5 |
| Recopy your notes from memory | 0.0 | 9.6 |
| Absorbing lots of information the night before the test | 28.9 | 9.6 |
| Other | 0.0 | 0.0 |

FIGURE 1. Frequency of reported use of the most relevant study strategies by students at the beginning and end of the course. Only strategies that started or ended above use by 30% of students are shown. Blue lines indicate increases in frequency, gold lines indicate a decrease. Students reported their top three strategies in each survey. The spacing question was a stand-alone question in the surveys, so it is shown in darker blue. No strategies showed a significant change with the Bonferroni correction with this sample size (***p < 0.004).

The second question asked about implementation of different study strategies. The most common study strategies chosen by students at the beginning of the course were “Reread chapters, articles, notes, etc.” at 55.8%, “Use flashcards” at 53.9%, and “Underlining or highlighting while reading” at 42.3%. The least common strategies were recopying notes word for word (11.5%) and making diagrams, charts, or pictures (9.6%). Two strategies were rarely chosen and will not be discussed further: “Recopy your notes from memory” and “Other.”

At the end of the semester (roughly 14 weeks after the first survey), students showed changes in frequency of many strategies. Among the most common initial strategies, “Reread chapters, articles, notes, etc.” remained the most frequently used strategy, with 57.7% of students saying they used it. “Use flashcards” dropped to 38.5%, and “Underlining or highlighting while reading” dropped to 19.2%. Among moderately common strategies, the use of self-testing or practice problems increased from 36.5% to 50.0%, and absorbing a lot of information the night before the exam dropped from 28.9% to 9.6%. “Condensing/summarizing your notes” increased from 17.3% to 30.8%. Among the least common strategies, “Recopy your notes word-for-word” remained almost the same at 13.5%, while “Make diagrams, charts, or pictures” increased to 32.7%. Because 12 comparisons were made, we employed a Bonferroni correction and required p < (0.05/12) = 0.004 for significance. The increase in the use of drawing did not meet this stricter requirement for significance (p = 0.010). The full list of reported strategies at the beginning and end of the classes is in Table 2B.

To determine whether there were differences among students who chose each strategy, we performed general linear regressions on the effects of academics and demographics on choice of study strategy. The variables examined include the grade point average (GPA) of science classes, gender, first-generation status, underrepresented minority (URM) status, and age (22 years or younger vs. older than 22 years). At the stricter requirement for significance due to multiple comparisons, there was only one significant effect of demographics or academics on chosen study strategy: At the start of the course, students who chose underlining and highlighting as a primary study strategy were more likely to be students historically excluded because of race (z value = −3.07, p = 0.002). This relationship was no longer significant in the survey at the end of the course.

Research Question 2: What Study Skills Are Correlated with Academic Achievement?

Many students changed their strategies over the course of the semester. Our regression analysis was designed to measure whether either the starting or ending study strategies were associated with course performance, while controlling for demographic factors (GPA of science classes, gender, first-generation status, URM status, and age [younger or older than 22 years]). There were no demographic factors that were significantly related to course grade. But there were two study strategies that showed a significant effect: Students who reported that they spaced their studying at the end of the semester (question 1 in Table 1) and students who said they “make diagrams, charts or pictures” as a study strategy at the end of the semester (question 2 in Table 1) had higher grades (Table 3).

| | Estimate | SE | t value | p value | Significance |
|---|---|---|---|---|---|
| (Intercept) | 75.24 | 3.78 | 19.90 | <0.001 | * |
| Factor = Spacing (post) | 5.32 | 2.09 | 2.55 | 0.011 | * |
| Factor = Diagrams (post) | 7.07 | 2.02 | 3.49 | <0.001 | * |
| R-squared | 0.23 | | | | |
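
To make the coefficients in Table 3 easier to read, the following Python sketch combines the reported estimates into fixed-effects predictions and recomputes each t value as the estimate divided by its standard error. It rests on two assumptions that the text does not state: each strategy is coded as a 0/1 indicator, and the class-level random effect is set to zero. The function names are illustrative only.

```python
# Fixed-effect estimates and standard errors as reported in Table 3.
INTERCEPT, INTERCEPT_SE = 75.24, 3.78
SPACING, SPACING_SE = 5.32, 2.09
DIAGRAMS, DIAGRAMS_SE = 7.07, 2.02


def predicted_grade(spaced: bool, drew: bool) -> float:
    """Fixed-effects prediction, assuming 0/1 coding of each strategy.

    Assumption: the class-level random effect is ignored (set to zero).
    """
    return INTERCEPT + SPACING * spaced + DIAGRAMS * drew


def t_value(estimate: float, se: float) -> float:
    """Wald t statistic: the estimate divided by its standard error."""
    return estimate / se


for spaced in (False, True):
    for drew in (False, True):
        print(f"spaced={spaced}, drew={drew}: {predicted_grade(spaced, drew):.2f}")

print(f"t (spacing)  = {t_value(SPACING, SPACING_SE):.2f}")
print(f"t (diagrams) = {t_value(DIAGRAMS, DIAGRAMS_SE):.2f}")
```

Small differences between the recomputed and tabulated t values are expected, because the estimates and standard errors in the table are themselves rounded.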

Research Question 3: Can an Intervention Increase the Use of High-Impact Study Skills?

Our results suggest that students can change their study strategies. The instructor used exam wrappers and had a discussion with students about study strategies with a focus on high-impact strategies of spacing, studying practice problems, and drawing. Examples of the drawings made by the instructor and students are provided in the Supplemental Material. Other general practices like minimizing outlines of the entire book and only using flash cards for memorization tasks were also discussed.

We used McNemar analyses to compare students’ use of strategies before and after the instructor encouraged the use of particular strategies. While several study strategies increased or decreased in frequency, none changed significantly by the end of the course at the Bonferroni-corrected threshold (p < 0.004). The increase in use of drawings was marginally significant, with use changing from five students to 17 (p = 0.010). The decreases in cramming (from 15 to five students) and in underlining and highlighting (from 22 students to 10) were also near significance (p = 0.016 and p = 0.014, respectively).
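
The comparison against the corrected threshold can be sketched in a few lines of Python. The p values and the number of comparisons (12) are those reported in the text; the variable names are illustrative.

```python
ALPHA = 0.05
N_COMPARISONS = 12  # number of comparisons stated in the text

threshold = ALPHA / N_COMPARISONS  # about 0.004

# p values as reported in the text for the three largest changes.
reported_p = {
    "drawing (increase)": 0.010,
    "cramming (decrease)": 0.016,
    "underlining/highlighting (decrease)": 0.014,
}

significant = {name: p < threshold for name, p in reported_p.items()}

for name, p in reported_p.items():
    verdict = "significant" if significant[name] else "not significant"
    print(f"{name}: p = {p:.3f} vs threshold {threshold:.4f} -> {verdict}")
```

All three changes would pass an uncorrected 0.05 threshold but fall short of the corrected one, which is why they are described as near significance.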

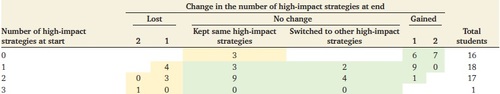

Of course, students who change to a valued strategy may be giving up either a less useful or a more useful strategy. If we group our high-impact study strategies (spacing, self-testing, drawing) and look at the distribution of students who used these strategies, we can see evidence that not all students moved toward an increase in high-impact study strategies (Table 4).

Most of the 16 students who used none of the three high-impact strategies at the beginning of the semester gained one or two by the end of the course. Spacing was added five times, drawing was added seven times, and self-testing was added seven times (some students added more than one strategy). But two of the 18 students who started the class using one high-impact strategy switched to a different strategy, and four stopped using a high-impact strategy. Of the 17 students who started the class with two high-impact strategies, four switched to a different high-impact strategy, and three lost a high-impact strategy. And the one student who reported using all three high-impact strategies at the start of the class dropped two of them by the end. Nevertheless, more students gained high-impact strategies (23 students) than lost them (eight students).
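
The counts in this paragraph can be cross-checked with a short Python tally. The numbers are those given in the text; the dictionary names are illustrative.

```python
# Students grouped by number of high-impact strategies at the start.
start_counts = {0: 16, 1: 18, 2: 17, 3: 1}

# Students in each starting group who ended with fewer high-impact strategies.
lost_by_group = {1: 4, 2: 3, 3: 1}

total_students = sum(start_counts.values())
total_lost = sum(lost_by_group.values())

print(f"students in the paired sample: {total_students}")
print(f"students who lost a high-impact strategy: {total_lost}")
```

The totals agree with the 52 students who completed both surveys and with the eight students reported as having lost a high-impact strategy.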

DISCUSSION

In this study, we surveyed a small, diverse student population of undergraduates across two science courses at a community college. By combining the survey results with institutional demographic data, we were able to associate specific study strategies implemented by students with their course performance. We were also able to explore whether an intervention could increase the use of high-impact study strategies. We will discuss our findings in the context of each research question.

Research Question 1: What Study Skills Do Students Use?

The most common study strategy used by our students when they began the class was rereading the book and their notes (55.8%), followed by using flash cards (53.9%). The rereading of notes has been identified as a primary study strategy by undergraduate students (Karpicke et al., 2009) and graduate students (Persky and Hudson, 2016). A meta-analysis by Miyatsu et al. (2018) found rereading to be the most common study strategy reported by undergraduates (at 78%), with flash cards as the fifth most common strategy (at 55%). At the end of our classes, rereading remained common, but there were increases in self-testing (from 36.5% to 50%) and in making “diagrams, charts or pictures” (from 9.6% to 32.7%), and a decrease in use of flash cards (to 38.5%). The use of drawing as a popular study strategy is rare in most surveyed students, reported at 13% for new undergraduates in Williams et al. (2021), at 15% of undergraduates in Hartwig and Dunlosky (2012), and at 24% of undergraduates in Morehead et al. (2016). We attribute the high use of drawing at the end of our courses to the instructor’s intervention, discussed further in later sections.

We also examined whether students began the class either spacing their studying or cramming. While 55% reported spacing at the start of the class, students increased this percentage to 64% by the end of class. This amount of spacing matches the 64% of students who reported spacing their studying in Rodriguez et al. (2018). It is higher than that reported in Hartwig and Dunlosky at 47% (2012) and in Morehead et al. at 48% (2016).

We did not find a significant effect of gender, race, or age on the study strategy choice of our students. This is unlike the results found in Williams et al. (2021), which found students historically excluded based on race are less likely to start college using self-testing, and women are more likely to start college using underlining, highlighting, and flash cards. The lack of significant effect of demographics in our study may be because of the small sample size (and thus lack of power in our test).

The research on community college students’ choice of study strategies is rare, but our results indicate that these students use the same strategies in similar proportions as undergraduate students from 4-year institutions. Of particular interest in our study is the use of drawing, which was encouraged by the instructor. Our student sample was slightly less likely to use drawings than other surveyed undergraduates at the start of the course but became more likely to use the strategy than other surveyed undergraduates by the end of the course. Community college students also used spacing at the end of the course at levels higher than many undergraduates. They did not, however, increase their self-testing (50%) to levels equivalent to other undergraduate surveys, found to be 71% in Hartwig and Dunlosky (2012), 72% in Morehead et al. (2016), and 62% in Williams et al. (2021).

Research Question 2: What Study Skills Are Correlated with Academic Achievement?

In our sample of 52 students, there was a significant, positive effect of both spacing and drawing on course grade. There was not a significant effect of self-testing. We explore here how these results fit in with the literature in K–12 and 4-year environments.

The research on study strategies has indicated some strategies have a higher impact than others. Generally, rereading, highlighting, and copying notes word for word are not considered strategies associated with improved learning (Dunlosky et al., 2013; Rodriguez et al., 2018; Williams et al., 2021). On the other hand, more generative strategies, such as self-testing and spaced studying, have significant evidence of success in both laboratory settings (Carpenter, 2012; Cepeda et al., 2006; Roediger and Butler, 2011) and classrooms (Karpicke et al., 2009; Roediger et al., 2011; Rodriguez et al., 2018, 2021). We will explore these in more detail in the following sections.

Spacing.

Spacing, also called distributed practice, is defined as spreading out study sessions separated by time (Wiseheart et al., 2019). This is in contrast to massed studying or cramming, in which all of the content is studied in one sitting. Much of the research (see, for instance, the review article by Cepeda et al., 2006) is focused on modifying the intervals between presentation of the material in a research lab environment in order to better understand the cognitive processes of forgetting and relearning associated with spacing. We focus instead on examples of classroom research that show benefits of spaced practice on student performance.

In elementary-aged children studying vocabulary words (Sobel et al., 2011) and in high school students studying French vocabulary (Bloom and Shuell, 1981), spacing of word pairs over days increased performance later in the week compared with studying during a single session. College students in a precalculus course showed a significant improvement in an exam the next semester when quiz questions also contained questions from past learning objectives rather than focusing on only the current objectives (Hopkins et al., 2016; Lyle et al., 2020). Rodriguez et al. (2018) surveyed students in an undergraduate biology course and found that students who said they used spacing performed better in the course than students with the same academic preparation who did not.

The type of study session that is spaced is also a subject of research. Spaced studying might involve just rereading the material or might use self-testing, also called retrieval practice. Spacing combined with self-testing is considered more effective than spacing with simply rereading. Rawson et al. (2013) had undergraduate students memorize psychology concepts and found spaced rereading was less effective than spaced self-testing. In a precalculus course study (Lyle et al., 2020), the authors found spacing plus problem solving increased performance at the end of the semester. A recent review of 29 studies on retrieval practice using different spacing schedules found a strong positive relationship between the use of the two strategies and student performance (Latimier et al., 2020).

In our study, we saw a positive relationship between students who said they spaced their studying and higher course grades. We also found that the percentage of students who said they used spacing increased from 55% to 64% during the semester. We saw no significant effect of self-testing in our class, although its use increased from 36.5% to 50% during the course of the class. We hypothesize this is because the instructor encouraged students to carefully study the right and wrong answers in the textbook-provided problems but did not provide a quantity of practice problems or practice quizzes for students. If a course has more explicit opportunities for regular self-testing, more use is likely.

Making Representational Drawings and Concept Maps.

Creating visual representations of science processes can be roughly categorized into either drawing the process itself (Ainsworth et al., 2011) or organizing conceptual information in nodes to emphasize relationships or causality (O’Donnell et al., 2002). In this study, the instructor encouraged students to use both representational drawings and basic concept maps while studying to help organize the steps of important physiological and microbiological processes. The instructor provided examples of concept maps of different types, including differences in bacterial cell walls and categorizing synaptic transmission into excitatory and inhibitory postsynaptic potentials. Our data showed that students who reported making “drawings, charts, or pictures” as one of their primary study strategies at the end of the course tended to have higher grades. Some examples of instructor and student drawings are provided in the Supplemental Material.

This association matches the results of a number of research studies that show a positive benefit to students generating drawings of a process to help learn the process. Studies generally compare test performance between students who read and then generate a drawing and students who read and then write about the information. For example, Edens and Potter (2003) found a significant improvement in elementary-age students who drew diagrams on the law of conservation of energy. Bobek and Tversky (2016) found an improvement in middle school students who created visual explanations of a bicycle pump and chemical bonding. In college students, Alesandrini (1981) found a positive effect of drawing for students learning electrochemistry; Schmidgall et al. (2019) also found a positive effect of generating drawings for undergraduate students learning about the biomechanics of swimming. Note that research in this field tends to compare students who are asked to use drawing with students who use another method, rather than measuring how switching to drawing improves outcomes.

There have also been many studies that find a benefit to student generation of concept maps, or node-style diagrams (see Nesbit and Adesope, 2006; Schroeder et al., 2018). If students are fully engaged in the drawing, they are encoding the relationship between events or structures and practicing the retrieval of those relationships (Karpicke and Blunt, 2011). A recent meta-analysis of 142 research studies of concept map use for student learning found better results when 1) students worked with concept maps for 4 weeks or more, and 2) students generated rather than studied a map (Schroeder et al., 2018). The authors also found a positive effect of maps regardless of whether students worked individually or in groups.

Research Question 3: Can an Intervention Increase the Use of High-Impact Study Skills?

In this study, the instructor attempted to guide the students in high-impact (research-supported) study strategies using two techniques: exam wrappers and a short presentation on study strategies. We found that the reported use of high-impact study strategies (spacing, drawing, self-testing) increased by the end of the class, while the reported use of some low-impact strategies (highlighting and underlining, flash cards) decreased.

Students regularly choose to use some study strategies that have little evidence of effectiveness in college classrooms. Our own survey showed the questionable strategies of rereading, flash cards, and underlining and highlighting were the most common ones used by students at the beginning of term. Other studies show similar results (Hartwig and Dunlosky, 2012; Morehead et al., 2016; Williams et al., 2021). Students can be resistant to changing their study strategies in the absence of specific training. Wood et al. (1998) surveyed high school students and first-year college students and found both groups chose rereading as their most frequently used strategy. The university students in Wood et al.’s study tended to add a second strategy, whereas high school students did not, with summarizing notes being the most common second strategy. Persky and Hudson (2016) surveyed students in a graduate pharmacy program and found rereading was maintained as the most used strategy from the beginning to the end of the program. Completing practice problems declined slightly from second most used to third most used, and outlining increased slightly from third most used to second most used (Persky and Hudson, 2016).

When considering the use of an intervention, it is worth considering whether students will likely need support in carrying out the suggested strategy, particularly with drawing. For instance, a 2010 study asked high school students to draw the effects of soap on dirt. Students who were given extra instructions to find the main topics and include them in the drawing and who were given examples to draw (water molecule, detergent molecule) had improved test scores (Schwamborn et al., 2010). There is also evidence that not all students can generate a concept map from scratch. A 2008 study found that, while university physics majors could connect and describe nodes in a way that generated learning, high school students learned more by labeling existing connectors to the nodes (Gurlitt and Renkl, 2008). In a university chemistry class, lower-performing students did better on a short-answer exam after studying an instructor-generated concept map rather than filling in the blanks themselves (Wong et al., 2021). Given that we saw only some students choosing to use drawings and concept maps, we might benefit the more tentative students by providing clearly outlined prompts and goals (Fiorella and Zhang, 2018) and more class time for students to work on drawings and concept maps so feedback can be provided.

We used exam wrappers in this class. In the classic use of exam wrappers, students complete a short series of questions about their study habits before they take an exam and are then encouraged to reflect on how effective those habits were when they see their exam grades. While this seems like a helpful and sensible activity, it often does not change future exam grades (Soicher and Gurung, 2017; Root Kustritz and Clarkson, 2017). A large-scale study of exam wrappers across multiple STEM courses found the use of exam wrappers did not correlate with grades or increased metacognitive awareness scores (Hodges et al., 2020), but courses that used wrappers had overall higher metacognitive awareness scores than courses that did not. Another group of researchers reported that a single 10-minute lecture at the beginning of a molecular biology course plus weekly reminders to use spacing and self-testing resulted in more students saying they used these strategies at the end of the course (Rodriguez et al., 2018). We interpret this to mean that simply providing exam wrappers is unlikely to guide students to better study strategies; to generate more effective studying, the instructor should also regularly discuss metacognitive skills and study strategies as reinforcement of these ideas.

Limitations of the Study

To carry out education research projects in the classroom, it is helpful to have control sections without the intervention being studied, and large sample sizes to increase the likelihood of effects reaching significance. We carried out this study without a traditional control, which limits our ability to claim that our intervention was the cause of improved studying skills. A larger sample size would have likely provided additional statistically significant findings, such as differences in use of initial study strategies by different demographic groups or a significant effect of self-testing on course grade. But requiring a large sample size would limit research in the community college environment, as courses are often small. It is also possible that use of a self-report survey to measure study skills encouraged students to merely “report” what they felt the instructor wished to hear. But reports of drawing increased to levels higher than those seen in most college classrooms, students who reported switching to drawing had higher exam performances, and drawing was a particular emphasis of our intervention, so we are comfortable recommending that instructors encourage use of drawing when they teach classes with complex biological pathways and relationships.

CONCLUSION

This project is an example of the sort of scientific teaching that can be successfully carried out by community college faculty, even though there are often minimal resources or training available to them (Bailey et al., 2005). In the process of implementing this intervention and analyzing and writing up the results, we learned a great deal about study strategies and best practices for interventions. While we saw significant improvements in our students, the instructor plans to make changes in the next iteration of the implementation to make it even more effective. First, they have organized the study strategies we discuss into categories of “high impact” (spacing, self-testing, generative drawings) and “lower impact” (flash cards, rereading, highlighting), and will emphasize these differences to students in their presentation. Second, they have shortened the exam wrapper and will be moving it to their learning management system, and they are sorting study strategies by “high impact” and “lower impact” in the exam wrapper. This will allow the instructor to quickly scan the student responses and discuss them with the class after each exam. The process of iterative analysis and improvement is attainable in community college classes and is a step toward the success of this important group of undergraduates.

ACKNOWLEDGMENTS

The authors wish to thank the Community College Biology Instructor Network to Support Inquiry Into Teaching and Education Scholarship (CC Bio INSITES) for their mentorship and Matthew R. Fisher for his help with the early drafts of the article.