A Model for Using a Concept Inventory as a Tool for Students' Assessment and Faculty Professional Development

Abstract

This essay describes how the use of a concept inventory has enhanced professional development and curriculum reform efforts of a faculty teaching community. The Host Pathogen Interactions (HPI) teaching team is composed of research and teaching faculty with expertise in HPI who share the goal of improving the learning experience of students in nine linked undergraduate microbiology courses. To support evidence-based curriculum reform, we have administered our HPI Concept Inventory as a pre- and postsurvey to approximately 400 students each year since 2006. The resulting data include student scores as well as their open-ended explanations for distractor choices. The data have enabled us to address curriculum reform goals of 1) reconciling student learning with our expectations, 2) correlating student learning with background variables, 3) understanding student learning across institutions, 4) measuring the effect of teaching techniques on student learning, and 5) demonstrating how our courses collectively form a learning progression. The analysis of the concept inventory data has anchored and deepened the team's discussions of student learning. Reading and discussing students' responses revealed the gap between our understanding and the students' understanding. We provide evidence to support the concept inventory as a tool both for assessing student understanding of HPI concepts and for faculty development.

INTRODUCTION

As faculty members at a research university with expertise in Host Pathogen Interaction (HPI), we have established a faculty community to better understand student learning, facilitate faculty development, and effect evidence-based curriculum reform in a set of nine linked microbiology courses (Table 1). Our goal is to improve our students' learning experience. We want our students to learn science in a deep and meaningful manner, acquire conceptual understandings (Anderson and Schonborn, 2008; Schonborn and Anderson, 2008) of important HPI concepts at an appropriate level (introductory versus upper-level courses), and be able to apply HPI concepts within the context of their own motivations for taking the courses (e.g., program requirement or future professional pathways, such as graduate school, medical school, or nursing).

| Course | Enrollment (students per year) |
|---|---|
| BSCI 223 General Microbiology | 700 |
| BSCI 380 Bioinformatics | 30 |
| BSCI 437 General Virology | 120 |
| BSCI 424 Pathogenic Microbiology | 120 |
| BSCI 425 Epidemiology | 100 |
| BSCI 412 Microbial Genetics | 80 |
| BSCI 417 Microbial Pathogenesis | 25 |
| BSCI 422 Immunology Lecture | 100 |
| BSCI 423 Immunology Lab | 80 |

For several decades, the science education community has stressed the importance of teaching and learning in meaningful ways (e.g., Ausubel, 1968; Mayer, 2002; Anderson and Schonborn, 2008; Schonborn and Anderson, 2008). Although there are many definitions and interpretations of meaningful learning, we favor the definition in which meaningful learning results when students are first introduced to concepts in a simple, intuitive manner and then are challenged to connect learned concepts with newly presented information (Bruner, 1960). We believe students should be able to transfer understanding to a higher level in the form of a “learning progression” or spiral curriculum (Bruner, 1960; Mayer, 2002; National Assessment of Educational Progress [NAEP], 2006; National Research Council [NRC], 2006; Smith et al., 2006; Duschl et al., 2007; Anderson and Schonborn, 2008; Schonborn and Anderson, 2008).

As we move forward in improving our students' learning experiences, we recognize the need for reliable and easy-to-use assessment tools that measure student understanding and provide evidence for the need to change the curriculum. Concept inventories have been used by many science education researchers as pre- and postmeasures to gauge student learning of fundamental concepts in specific courses (Michael et al., 2008; Bioliteracy - Conceptual assessment, course & curricular design in the biological sciences, http://bioliteracy.net). Several groups have been working to identify the fundamental concepts representative of various science disciplines and to design questions that target student understanding of these concepts (e.g., Hestenes and Wells, 1992; Hestenes et al., 1992; Odom and Barrow, 1995; Hake, 1998; Anderson et al., 2002; Mulford and Robinson, 2002; Khodor et al., 2004; Garvin-Doxas et al., 2007; Garvin-Doxas and Klymkowsky, 2008; Smith et al., 2008).

Here we describe the use of a concept inventory as a lever for evidence-based curriculum reform and faculty professional development. To guide our use of the HPI Concept Inventory (HPI-CI) (Marbach-Ad et al., 2009) we developed seven goals with related research questions (Table 2) and used multi-year HPI-CI data across all courses to address these questions. We reconciled student HPI-CI scores with our expectations of student learning, investigated the possible correlation between background variables (major, ethnicity, gender, year in school, previous course work) and student learning, compared student performance across institutions, assessed how changes in our teaching techniques affected student performance, and tracked HPI-CI data across all courses in our program. The HPI-CI was particularly useful in challenging beliefs that faculty had about student learning in their courses and spurring deep conversations about student learning.

| Goal | Research question |
|---|---|
| 1. To investigate whether or not students are making significant progress in their learning in individual courses and to check for student retention of concept knowledge. | 1. a) Are students making adequate progress in their learning within each course? |
| | 1. b) How well do students retain concept knowledge from previous courses as they move to subsequent courses? |
| 2. To identify in which courses particular concepts are expected to be taught, and at what depth. | 2. Are all important concepts expected to be covered somewhere in the curriculum, at sufficient depth, and in a logical order? |
| 3. To compare the instructors' reported curriculum coverage with students' actual understanding of each concept. | 3. Do students learn in a course what we intend for them to learn? |
| 4. To investigate possible effects of background variables such as gender, ethnicity, GPA, age, and previous education on student learning. | 4. What are the effects of background variables such as gender, ethnicity, and prior learning? |
| 5. To compare outcomes from different versions of a course (for example, from the same course as offered at two different universities). | 5. Is the Concept Inventory a good tool to foster cross-institution conversations on student learning? |
| 6. To explore the impact of number and combinations of HPI courses taken by the student. | 6. Can we identify combinations of HPI courses that lead to better success? |
| 7. To compare learning outcomes for different teaching methods (for example, one class has exercises that the other class lacks). | 7. Do innovative teaching techniques enhance concept learning? |

Using the HPI-CI for nine courses allowed us to look at student growth in understanding over time as students progressed in our program. Discussing student performance as a group allowed us to think seriously about outcomes-based assessment and how we truly know what students learn in our courses and how effective our teaching is.

The communal analysis of the HPI-CI data contributed significantly to our professional development and has been the most significant motivator of faculty change since our group was founded in 2004. It has been suggested that “to transform a culture, the people affected by the change must be involved in creating that change” (Brigham, 1996, p. 28). Silverthorn et al. (2006) particularly recommended developing faculty learning communities along the model practiced by Cox (2004), in which faculty learning communities provide regular opportunities for faculty to discuss questions that emerge from classroom teaching experiences, receive coaching and feedback on experiments with instructional strategies, address assessment issues, and interact within a supportive environment with like-minded colleagues. This is the practice that has developed in our community.

THE HPI TEAM AND COURSES

Our HPI teaching community was founded on shared research and teaching interests. It has been successful in part because it mirrors our research practice, in which classic research groups meet regularly to share ideas, review data, and discuss current findings (Marbach-Ad et al., 2007). The team has also been successful because we operate as a Community of Practice (COP; Wenger, 1998; Ash et al., 2009), in which each of us brings his or her own expertise and experience and we learn from each other. Our team includes 19 members representing all faculty ranks, including those who have primarily teaching responsibilities (lecturers and instructors), and tenured/tenure-track faculty with substantial, externally funded research programs in HPI areas. In addition, the group includes a science education expert and several graduate students with a strong interest in the joint missions of research and teaching. Originally the team was composed solely of University of Maryland (UM) faculty. In 2009, in the interest of broadening our discussion, one faculty member from Montgomery College (MC), UM's most significant feeder community college, joined the team (for more details about the team members, see http://chemlife.umd.edu/hostpathogeninteractionteachinggroup). We have responsibility for teaching nine HPI undergraduate courses (Table 1), in which General Microbiology serves as a prerequisite for all eight upper-level courses. These courses are all part of the UM microbiology major, which allows students to select various course options for completing their program requirements. There is no required progression through upper-level courses; however, advisors suggest a progression as listed in Table 1, with students often enrolling in more than one advanced course in each semester of their senior year.

THE HPI CONCEPT INVENTORY

The HPI-CI was developed collaboratively by the HPI teaching team (Marbach-Ad et al., 2009). Our previous papers have described the process through which we validated the tool and each question (Marbach-Ad et al., 2007, 2009). We have used the HPI-CI to assess our program of courses since fall 2006. We administered the HPI-CI as a pre- and postsurvey in four to six courses per year. The HPI-CI is a multiple-choice inventory consisting of 17 questions. Each question is designed to target one or more concepts from a list of 13 concepts previously identified by the group as being the most important for understanding how hosts and pathogens interact (Marbach-Ad et al., 2009). The distractors for each question target students' specific misconceptions of HPI concepts (see Table 5 for an illustrative question). Each multiple choice question also asks students to explain their selected response, providing us with insight into the students' thought processes.

To validate the questions we used an iterative approach that involved administering the multiple-choice questions and then asking students to explain their response to each question. We reviewed students' responses and used those responses to evaluate the HPI-CI distractors. As a team we also reviewed students' explanations that supported the selected response. This process has occurred each semester in which we have administered the HPI-CI as both a pre- and postsurvey in our courses. Our original intent was simply to validate our HPI-CI. However, during the process we realized there was value in the iterative review and group discussion of student responses beyond inventory development. We learned a great deal about our students as the process became a faculty development exercise. As we read student responses, we analyzed them in the context of the courses in which the students were enrolled. As we compared how students performed at both the beginning and the end of the various courses, this review evolved into a process for assessing student learning and for faculty professional development.

In this article we tell the story and present the data showing how the HPI-CI has influenced the work of our group and how it can serve as a potential model to other programs using a concept inventory to assess student learning and curricular change. We describe our findings according to our set of goals and Research Questions (RQ; Table 2).

USING THE HPI-CI TO ADDRESS CURRICULUM REFORM GOALS

Goal 1: To Investigate Whether or Not Students Are Making Significant Progress in Their Learning in Individual Courses, and to Check for Student Retention of Concept Knowledge

RQ 1a: Are Students Making Adequate Progress in Their Learning within Each Course? To examine whether or not students were making significant progress in their learning in individual courses, we reviewed a complete data set without controlling for students' background or how students responded to individual questions (these tests will be described later). For each course we compared pre– and post–HPI-CI mean scores using t tests and calculating mean scores based on a maximum score of 100. The results from three years of data collection (2006–2009) are shown in Table 3.

| Course | Pre | Post | Sig. |
|---|---|---|---|
| General Microbiology - BSCI 223/Fall 2006 (n = 109, 16 questions) | 31.1 ± 15.6 | 48.1 ± 16.9 | <0.001*** |
| General Microbiology - BSCI 223/Spring 2007 (n = 127, 16 questions) | 31.9 ± 15 | 44.2 ± 19.4 | <0.001*** |
| General Microbiology - BSCI 223/Fall 2008 (n = 90, 18 questions) | 26.1 ± 15.6 | 47.1 ± 16.2 | <0.001*** |
| General Microbiology - BSCI 223/Spring 2009 (n = 107, 17 questions) | 30.1 ± 14.8 | 49.1 ± 14.1 | <0.001*** |
| Bioinformatics - BSCI 380/Fall 2008 (n = 18, 18 questions) | 46.3 ± 15.2 | 49.1 ± 14.9 | 0.252 |
| Pathogenic Microbiology - BSCI 424/Fall 2006 (n = 96, 16 questions) | 43.9 ± 16.9 | 51.1 ± 18.1 | <0.001*** |
| Pathogenic Microbiology - BSCI 424/Fall 2008 (n = 50, 18 questions) | 45.2 ± 15.0 | 51.0 ± 13.6 | <0.001*** |
| Epidemiology - BSCI 425/Spring 2007 (n = 52, 16 questions) | 44.1 ± 20.6 | 42.8 ± 22.5 | 0.606 |
| Microbial Genetics - BSCI 412/Spring 2007 (n = 45, 16 questions) | 51.4 ± 16.25 | 49.0 ± 20.0 | 0.266 |
| Microbial Genetics - BSCI 412/Spring 2008 (n = 35, 18 questions) | 53.8 ± 17.4 | 52.8 ± 16.6 | 0.72 |
| Microbial Genetics - BSCI 412/Spring 2009 (n = 33, 17 questions) | 54.0 ± 14.2 | 56.2 ± 18.1 | 0.407 |
| Microbial Pathogenesis - BSCI 417/Spring 2008 (n = 18, 18 questions) | 58.9 ± 20.4 | 65.0 ± 18.3 | 0.24 |
| Microbial Pathogenesis - BSCI 417/Spring 2009 (n = 12, 17 questions) | 51.0 ± 13.4 | 52.5 ± 14.3 | <0.05* |
| Immunology Lecture - BSCI 422/Spring 2007 (n = 48, 16 questions) | 59.9 ± 19.4 | 64.3 ± 21.9 | <0.05* |
| Immunology Lecture - BSCI 422/Spring 2008 (n = 53, 18 questions) | 53.7 ± 16.9 | 56.6 ± 17.8 | 0.132 |
| Immunology Lecture - BSCI 422/Spring 2009 (n = 31, 17 questions) | 58.8 ± 15.7 | 61.6 ± 16.4 | 0.572 |

We found significant gains in learning for General Microbiology, Pathogenic Microbiology, and Immunology Lecture (Spring 2007 only). For General Microbiology the magnitude of the gain was consistent over the three years, even though the course was taught by different instructors each year. General Microbiology is taught using an active-learning format (Smith et al., 2005). Immunology Lecture is generally the last course taken by students, in the spring of their senior year. The average HPI-CI postscore following the Immunology courses is around 60%. There is a significant improvement in students' scores from the pre–General Microbiology (BSCI 223) survey to the post–Immunology (BSCI 422) survey.
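The pre/post comparison summarized in Table 3 can be illustrated with a minimal sketch. The text does not state whether the t tests were paired, and per-student scores are not published, so this example assumes an independent-samples (Welch) t statistic computed from the reported means, standard deviations, and n. The function names `percent_score` and `welch_t` are hypothetical, and the result is not expected to reproduce the authors' exact test.

```python
import math


def percent_score(num_correct, num_questions):
    """Scale a raw score to 0-100 so the 16-, 17-, and 18-question versions are comparable."""
    return 100.0 * num_correct / num_questions


def welch_t(mean_pre, sd_pre, n_pre, mean_post, sd_post, n_post):
    """Welch's t statistic and approximate degrees of freedom from summary statistics.

    Assumption: pre and post groups are treated as independent samples,
    because only summary statistics are available here.
    """
    se2_pre = sd_pre ** 2 / n_pre      # squared standard error, presurvey
    se2_post = sd_post ** 2 / n_post   # squared standard error, postsurvey
    t = (mean_post - mean_pre) / math.sqrt(se2_pre + se2_post)
    # Welch-Satterthwaite approximation for the degrees of freedom
    df = (se2_pre + se2_post) ** 2 / (
        se2_pre ** 2 / (n_pre - 1) + se2_post ** 2 / (n_post - 1)
    )
    return t, df


# Values from Table 3: General Microbiology, Fall 2006 (n = 109)
t_stat, dof = welch_t(31.1, 15.6, 109, 48.1, 16.9, 109)
print(f"t = {t_stat:.2f}, df = {dof:.1f}")
```

With per-student pre and post scores, a paired test on the score differences would be the more natural choice, since the same students completed both surveys.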

RQ 1b. How Well Do Students Retain Concept Knowledge from Previous Courses as They Move to Subsequent Courses? Because there is no required specific order in taking the advanced courses, we were most interested to see whether the students retained the knowledge from the prerequisite course (General Microbiology) when they moved on to the advanced courses. To examine this we compared mean scores on the General Microbiology postsurvey to the mean presurvey scores of the advanced courses (Table 3). The presurvey scores for advanced courses were higher than or similar (not significantly different) to postsurvey scores for the prerequisite General Microbiology course.

Goal 2: To Identify in Which Courses Particular Concepts Are Expected to be Taught, and at What Depth

RQ 2. Are All Important Concepts Expected to be Covered Somewhere in the Curriculum, at Sufficient Depth, and in a Logical Order? In reviewing student performance on specific HPI-CI questions, we identified some questions for which students' pre- or postscores were lower or higher than expected by the instructor of the course. As such, the implementation of the HPI-CI stimulated close examination of our curriculum. To address RQ 2 we used a modification of Allen's (2003) Curriculum Alignment Matrix (Assessing Academic Programs in Higher Education). The Curriculum Alignment Matrix makes it possible to identify where within a curriculum learning objectives are addressed and at what depth. We used the tool to investigate the alignment of HPI concepts within our curriculum. For each question, instructors reported: 1) their assumptions about student prior knowledge (Yes or No) and 2) the level of topic coverage in their classes (0 = not at all; 1 = briefly; 2 = moderately; 3 = detailed). Table 4 shows our matrix for eight of our courses.

| Question | Concept* | BSCI223 | BSCI380 | BSCI424 | BSCI412 | BSCI417 | BSCI422 | BSCI423 | MC GM** |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 12 | N/N (2/1) | No (2) | N/N (0/1) | No (0) | No (2) | No (3) | No (2) | No (3) |
| 2 | 3, 4, 10 | N/Y (3) | Yes (2) | N/N (2/3) | Yes (3) | Yes (3) | Yes (0) | Yes (0) | No (2) |
| 3 | 2, 3 | N/Y (2/3) | Yes (2) | N/N (2/3) | No (1) | Yes (3) | Yes (0) | Yes (0) | No (3) |
| 4 | 3, 4, 10 | N/N (3/2) | Yes (2) | Y/N (3/3) | No (3) | Yes (3) | Yes (0) | Yes (0) | No (3) |
| 5 | 6 | N/N (0/1) | No (2) | N/Y (0/2) | No (1) | Yes (3) | Yes (2) | Yes (1) | No (2) |
| 6 | 13 | N/N (2/1) | Yes (0) | ?/N (0/3) | No (0) | No (2) | No (2) | No (2) | No (3) |
| 7 | 10 | N/N (3/1) | Yes (0) | Y/N (3/0) | No (3) | Yes (3) | Yes (0) | Yes (0) | No (3) |
| 8 | 12 | N/N (1/1) | No (2) | N/N (0/2) | No (0) | No (2) | No (3) | No (3) | No (2) |
| 9 | 7, 1, 12 | N/N (2/1) | No (2) | N/N (0/2) | No (0) | Yes (3) | No (3) | No (2) | No (3) |
| 10 | 4, 5, 9, 12 | N/N (3/2) | Yes (3) | ?/N (2/3) | No (1) | Yes (3) | Yes (2) | Yes (0) | No (1) |
| 11 | 8 | N/N (2/1) | Yes (3) | ?/N (2/3) | No (0) | Yes (3) | Yes (1) | Yes (0) | No (0) |
| 12 | 3 | N/N (2/2) | Yes (1) | ?/N (2/2) | No (3) | Yes (3) | Yes (1) | Yes (0) | No (3) |
| 13 | 7, 9 | N/Y (2/2) | Yes (2) | Y/Y (3/3) | Yes (2) | Yes (3) | Yes (0) | Yes (0) | No (3) |
| 14 | 10 | N/N (2/1) | Yes (1) | ?/N (2/0) | No (1) | Yes (3) | Yes (0) | Yes (0) | No (3) |
| 15 | 13 | N/N (2/2) | Yes (0) | N/N (0/2) | No (0) | No (2) | N/Y (3) | No (3) | No (3) |
| 16 | 9, 10 | N/N (2/1) | Yes (1) | N/N (3/2) | No (3) | Yes (3) | Yes (0) | Yes (0) | No (0) |

Building the curriculum matrix according to the HPI-CI questions forced our group to consider the teaching of each HPI concept in every course. Overall, the matrix confirms that each of the concepts assessed by the HPI-CI questions is addressed in the learning goals of at least one of our HPI courses. Discussing the full table during a team meeting informed each instructor about the ways that other instructors aim to teach each concept in their courses. For example, we expected that those of us teaching advanced courses would predict that students would have prior knowledge of concepts addressed at the “3” level in BSCI 223 (General Microbiology). However, comments from the Microbial Genetics (BSCI 412) instructor indicated that he did not assume prior knowledge for most of the topics that were reported as taught in General Microbiology (see Table 4, Questions 4, 7, and 10). Table 4 also shows that, in some cases, instructors who teach the same course reported very different levels of coverage for the same topic (e.g., Pathogenic Microbiology, BSCI 424).
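The two checks described for the matrix (is a question covered in detail somewhere, and do instructors of the same course disagree) can be sketched as follows. This is only an illustration, not the team's procedure: the dictionary holds the UM coverage levels from Table 4 for Questions 4, 7, and 11, while the function names and the disagreement threshold of two levels are assumptions made for the example.

```python
# Coverage levels (0 = not at all, 1 = briefly, 2 = moderately, 3 = detailed)
# copied from Table 4 for three questions. Where two instructors reported for
# one course (e.g., "3/2"), both values are kept in the tuple.
COVERAGE = {
    4: {"BSCI223": (3, 2), "BSCI380": (2,), "BSCI424": (3, 3), "BSCI412": (3,),
        "BSCI417": (3,), "BSCI422": (0,), "BSCI423": (0,)},
    7: {"BSCI223": (3, 1), "BSCI380": (0,), "BSCI424": (3, 0), "BSCI412": (3,),
        "BSCI417": (3,), "BSCI422": (0,), "BSCI423": (0,)},
    11: {"BSCI223": (2, 1), "BSCI380": (3,), "BSCI424": (2, 3), "BSCI412": (0,),
         "BSCI417": (3,), "BSCI422": (1,), "BSCI423": (0,)},
}


def courses_covering(question, min_level=3):
    """Courses in which at least one instructor reports coverage >= min_level."""
    return [course for course, levels in COVERAGE[question].items()
            if max(levels) >= min_level]


def instructor_disagreement(question, min_gap=2):
    """Courses whose instructors differ by at least min_gap coverage levels.

    The gap of 2 is an arbitrary illustrative threshold.
    """
    return [course for course, levels in COVERAGE[question].items()
            if max(levels) - min(levels) >= min_gap]


for q in COVERAGE:
    print(q, courses_covering(q), instructor_disagreement(q))
```

For Question 7, this flags BSCI223 and BSCI424 as courses where the two reporting instructors gave markedly different coverage levels, the kind of discrepancy that prompted the team's discussion of what “level of coverage” means.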

The findings from the curriculum matrix review led us to discuss what we meant by “level of coverage.” We found that instructors have different definitions for “level” of coverage. For example, one instructor of the prerequisite course defined the level of coverage in relation to time spent on the topic in the class and whether or not the concept was included in an active-learning assignment. Another instructor, from one of the advanced courses, explained that “detailed coverage” means the level of complexity in which the topic is discussed, so that the students understand not only the phenomenon that is characterized by the concept but also the chemical reactions and the physical mechanisms behind the phenomenon. These discussions led us to better understand both how concepts are taught by various instructors and what level of knowledge the instructors expect students to bring to their classes.

Accordingly, analysis of Concept Inventory data can serve as a catalyst for in-depth curriculum analysis. By using the Curriculum Alignment Matrix, we were able to analyze our curriculum and the way that we teach particular concepts in both the prerequisite course and the advanced courses. This information and a shared, informed view among us as instructors are both essential to our goal of creating a learning progression in which students achieve deep learning of HPI concepts.

Goal 3: To Compare the Instructors' Reported Curriculum Coverage with Students' Actual Understanding of Each Concept

RQ 3. Do Students Learn in a Course What We Intend for Them to Learn? We aligned our “assumption of prior knowledge” and “level of coverage” with student performance on each HPI-CI question (see example from Immunology lecture BSCI 422 in Supplemental Material A). Close inspection of the alignments showed that for many questions our expectations matched student performance (Supplemental Material A, Questions 1, 3, 4, and 7–10). However, for other questions, our assumption (either of prior knowledge or of coverage) did not match student pre- and postscores. Again, the HPI-CI results fostered an important discussion. We considered the reasons for students' misunderstanding of concepts that we were confident had been addressed in our courses. We raised questions about whether we used the appropriate teaching method (i.e., demonstrations, case studies, problem solving) to teach the concept, or if there was another explanation.

One interesting finding was that students' scores on particular postsurvey questions were higher after the introductory course than after an advanced course. For example, in the Microbial Pathogenesis (BSCI 417) course, the survey results for the question presented in Table 5 show that in the presurvey 94.1% of the students selected the correct response, while in the postsurvey only 41.7% of the same students chose the correct response. Through our conversations we realized that in introductory courses science is presented in terms of general rules with examples to support those rules. In advanced courses, those rules are challenged with the presentation of exceptions and uncertainties. We suggest that some students may “lose their footing” in advanced courses and become confused by the multiple-choice distractors that target common misconceptions. Consider the question in Table 5. The question asked, “Two roommates fall ill: one has an ear infection and the other has pneumonia. Is it possible that the same causative agent is responsible for both types of disease?” The correct response is “Yes, because the same bacteria can adapt to different surroundings.” In the postsurvey of the Microbial Pathogenesis course, a large number of students (33.3%) selected the distractor, “No, because one infection is in the lung while the other is in the ear.” When we read the students' explanations for the selection of this distractor, we found comments like “No, same bacterium is not able to attach at both places. The adherence is tissue specific” and “Tissue tropism.” The Microbial Pathogenesis instructor team explained that students learned about tissue tropism in BSCI 417. Tissue tropism is the idea that a specific pathogen infects a specific type of tissue due to tissue-specific receptors. To understand the concept(s) targeted by this question, students must realize that, although different bacterial pathogens are able to infect various tissues (tropism), leading to distinct diseases at those sites, one pathogen may elicit different diseases depending on its adaptation to distinct environments. The correct answer represents a complete understanding of the overall process of host-pathogen interaction. It seems that as students gain more detailed information on a topic that reveals more intricacies, they have difficulty in making generalizations.

Question 2room2sites-4-5-9-12 (# 32, Spring 2009): Two roommates fall ill: one has an ear infection and one has pneumonia. Is it possible that the same causative agent is responsible for both types of disease?

| Response (% of students) | General microbiology (1), Pre | General microbiology (1), Post | Microbial genetics (3), Pre | Microbial genetics (3), Post | Microbial pathogenesis (4), Pre | Microbial pathogenesis (4), Post | Immunology (5), Pre | Immunology (5), Post |
|---|---|---|---|---|---|---|---|---|
| Unanswered | 1.8 | 0.6 | 0.0 | 2.5 | 0.0 | 0.0 | 2.2 | 0.0 |
| Yes, because both individuals live in the same room and therefore the source of the infection has to be the same. | 4.3 | 5.7 | 7.1 | 0.0 | 0.0 | 8.3 | 6.5 | 2.7 |
| Yes, because the same bacteria can adapt to different surroundings. | 54.3 | 84.7 | 81.0 | 82.5 | 94.1 | 41.7 | 82.6 | 86.5 |
| No, because each bacterium would cause one specific disease. | 9.8 | 5.1 | 2.4 | 0.0 | 0.0 | 16.7 | 2.2 | 5.4 |
| No, because one infection is in the lung while the other is in the ear. | 10.4 | 3.2 | 4.8 | 12.5 | 5.9 | 33.3 | 4.3 | 2.7 |
| I do not know the answer to this question. | 19.5 | 0.6 | 4.8 | 2.5 | 0.0 | 0.0 | 2.2 | 2.7 |

In other advanced courses, we found similar types of advanced learning that seemed to confuse students. Such examples convinced us how important it is to explore the pattern of responses for each individual question rather than concentrating on total scores or on the difference between pre- and postscores.
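Looking at the response pattern for a single question, rather than the total score, can be sketched in a few lines. The percentages below are the Microbial Pathogenesis columns of Table 5; the short option labels and the function names are hypothetical shorthand introduced only for this example.

```python
# Percent of students choosing each option in Microbial Pathogenesis (Table 5).
# The option keys are shorthand labels, not wording from the inventory.
PRE = {
    "unanswered": 0.0,
    "yes_same_room": 0.0,
    "yes_adapt": 94.1,          # correct response
    "no_one_disease": 0.0,
    "no_different_site": 5.9,
    "do_not_know": 0.0,
}
POST = {
    "unanswered": 0.0,
    "yes_same_room": 8.3,
    "yes_adapt": 41.7,
    "no_one_disease": 16.7,
    "no_different_site": 33.3,
    "do_not_know": 0.0,
}


def response_shifts(pre, post):
    """Change in percentage points (post minus pre) for every option."""
    return {option: round(post[option] - pre[option], 1) for option in pre}


def gaining_distractors(pre, post, correct):
    """Incorrect options that gained students, largest gain first."""
    shifts = response_shifts(pre, post)
    gains = [(opt, delta) for opt, delta in shifts.items()
             if opt != correct and delta > 0]
    return sorted(gains, key=lambda item: item[1], reverse=True)


for option, delta in gaining_distractors(PRE, POST, correct="yes_adapt"):
    print(f"{option}: +{delta} percentage points")
```

Here the largest gain falls on the tissue-site distractor, which is the option students justified with “tissue tropism” in their written explanations.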

Goal 4: To Investigate Possible Effects of Background Variables Such as Gender, Ethnicity, GPA, Age, and Previous Education on Student Learning

RQ 4. What Are the Effects of Background Variables Such as Gender, Ethnicity, and Prior Learning? Students come to HPI courses from a wide variety of backgrounds. Supplemental Material B shows the diversity of students in our courses in terms of gender, race, educational background, test scores, and success in college. The HPI-CI served as a tool to look at the effects of these variables and gather evidence that might guide our curriculum reform. For example, using t tests and regression analysis, we found that HPI-CI total scores (for all courses) were significantly lower for females than for males on both the presurveys (Female = 35.27 ± 17.44; Male = 42.56 ± 19.28) and the postsurveys (Female = 49.06 ± 14.89; Male = 54.44 ± 16.22). This difference surprised us. However, further examination showed that the differences in scores between the genders might be linked to other background variables. Indeed, 75.8% of the males in our sample were majoring in the College of Chemical and Life Sciences (CLFS), whereas only 55% of the females were CLFS majors. When we compared scores by gender only within the cohort of CLFS majors, for example, we found no significant differences in performance on the pre- and postsurveys (postsurvey: Female = 52.94 ± 14.61; Male = 55.66 ± 15.28).
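To make the narrowing of the gender gap within the CLFS cohort concrete, the reported means and standard deviations can be turned into a standardized mean difference. This is a descriptive measure substituted here for illustration; it is not the t test or regression analysis the team reports. Because group sizes by gender are not given, the sketch assumes equal group sizes when pooling the standard deviations, and the function name `standardized_difference` is hypothetical.

```python
import math


def standardized_difference(mean_a, sd_a, mean_b, sd_b):
    """Standardized mean difference (Cohen's d style), b minus a.

    Assumption: equal group sizes, so the pooled SD is the root mean
    square of the two reported standard deviations.
    """
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2.0)
    return (mean_b - mean_a) / pooled_sd


# Reported values (female first, male second)
d_pre_all = standardized_difference(35.27, 17.44, 42.56, 19.28)     # all students, presurvey
d_post_all = standardized_difference(49.06, 14.89, 54.44, 16.22)    # all students, postsurvey
d_post_clfs = standardized_difference(52.94, 14.61, 55.66, 15.28)   # CLFS majors, postsurvey

print(f"all students, pre:  d = {d_pre_all:.2f}")
print(f"all students, post: d = {d_post_all:.2f}")
print(f"CLFS majors, post:  d = {d_post_clfs:.2f}")
```

Under these assumptions the standardized gap on the postsurvey is roughly half as large among CLFS majors as in the full sample, in line with the observation that the gender difference is entangled with choice of major.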

Once again the HPI-CI results stimulated a discussion and further investigations of student performance in our courses. We considered possible explanations for success of majors versus nonmajors. For example, nearly all majors had completed a sophomore Genetics course before taking the General Microbiology course. Review of the Genetics course content led us to assume that this course may have given CLFS majors an advantage. Whatever the reason for the differences between the performance of majors and nonmajors, these findings are consistent with our long-held view that students would be better served if we offered different versions of General Microbiology targeted to students' level of background, preparation, and interest. The findings explained here, based on the HPI-CI, allowed us to give evidence-based support for implementing long-discussed changes in our curriculum and proposing a new version of General Microbiology targeted to Biology majors.

Goal 5: To Compare Outcomes from Different Versions of a Course—For Example, from the Same Course as Offered at Two Different Institutions

RQ 5. Is the HPI-CI a Good Tool to Foster Cross-Institution Conversations on Student Learning? We have established a partnership with MC, one of the largest community colleges in our region with an annual enrollment of approximately 25,000 students (Maryland Association of Community Colleges, 2010). We are investigating student learning of HPI concepts in the MC General Microbiology (GM) course as one of our HPI courses. MC GM students completed the HPI-CI in the spring and fall of 2009. Comparison of UM GM student performance with that of MC GM students revealed that, for some of the questions, the percentage of correct answers was higher for MC students than for UM students, while for other questions the reverse was true. This finding led to discussions of learning goals, teaching practices, and student populations at the two institutions. We have also compared the concept coverage goals for each of the courses through the curriculum matrix data (Table 4). It was clear that the instructors emphasized different concepts in their courses. Collaboration and agreement on learning goals between the MC and UM instructors are very important, because the MC GM course is accepted as a prerequisite to our advanced HPI courses. Supplemental Material B shows that at least 20% of the students in each of the UM HPI courses had started their higher education studies in a community college. Most of these students come from MC.

Discussion of the HPI-CI data was an avenue toward open communication about learning goals. One outcome of the discussion is a joint curriculum development project. We found a common challenge in engaging students in learning concepts of bacterial growth. As a result of this conversation, the instructors of GM at both MC and UM are cooperating to develop student activities that will meet the needs of both student populations. The HPI-CI will be used as one of the assessment measures to determine the impact of this joint effort on student learning of GM concepts and success in upper-level courses. In the future we plan to collaborate with more colleges and universities by sharing the HPI-CI. These collaborations will include a broad discussion about the concepts that are intended to be covered in their programs as compared with UM's program. Collaboration will also focus on evaluating the level of concept coverage in the different programs.

Goal 6: To Explore the Impact of Number and Combinations of HPI Courses Taken by the Student

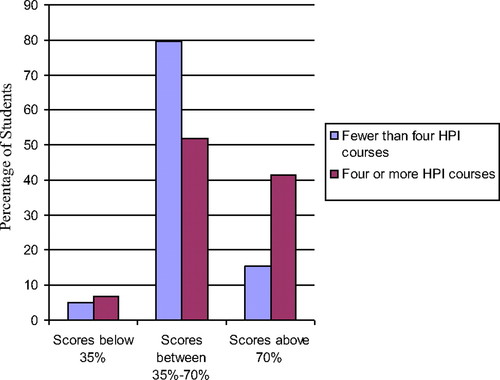

RQ 6. Can We Identify Combinations of HPI Courses That Lead to Better Success? The UM curriculum for a biological sciences degree with specialization in microbiology is anchored with General Microbiology as the prerequisite course and is followed by a variety of upper-level courses from which the students may choose. The sequence of upper-level courses is not mandated. We are using the HPI-CI data to identify the optimum path through the microbiology curriculum. HPI-CI data to date show that students who have completed four or more HPI courses have a better understanding of HPI concepts (Figure 1). Using the dataset from all students enrolled in all courses from Spring 2008 to Fall 2009, we grouped students into three categories based upon their HPI-CI postscores: Lower-scores (students who scored below 35% on the HPI-CI), Midscores (scores from 35 to 70%), and Higher-scores (scores above 70%). Encouragingly, we found that a higher percentage of the students who had completed four or more HPI courses (41.4%) fell within the Higher-scores group, whereas only 15.4% of the students who had completed fewer than four HPI courses achieved scores that placed them in that group.

Figure 1. Concept Inventory scores according to the number of HPI courses taken by the students. Based upon the Concept Inventory scores, students were divided into three groups: Lower-scores (students who scored below 35% on the Concept Inventory), Midscores (scores from 35 to 70%), and Higher-scores (scores above 70%). The blue columns represent students who had completed fewer than four HPI courses. The red columns represent students who had completed four or more HPI courses.
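The grouping behind Figure 1 can be illustrated with a minimal Python sketch. It is not the analysis code used in this study. The function names (`score_band`, `band_percentages`) and the toy records are hypothetical and are included only to show how postscores could be binned at the 35% and 70% cutoffs and tallied by the number of HPI courses completed. The sketch assumes that scores of exactly 35% and exactly 70% fall in the Midscores band, as the category definitions suggest.

```python
from collections import Counter

BANDS = ("Lower", "Mid", "Higher")


def score_band(score_pct):
    """Assign a postscore (in percent) to one of the three bands.

    Assumed cutoffs: below 35 is Lower, 35 to 70 inclusive is Mid,
    above 70 is Higher.
    """
    if score_pct < 35:
        return "Lower"
    if score_pct <= 70:
        return "Mid"
    return "Higher"


def band_percentages(records):
    """Compute the percentage of students in each band per course-count group.

    records: iterable of (hpi_courses_completed, post_score_pct) pairs.
    Returns a dict mapping group label to a dict of band percentages.
    """
    counts = {"fewer than four": Counter(), "four or more": Counter()}
    for courses_completed, score_pct in records:
        group = "four or more" if courses_completed >= 4 else "fewer than four"
        counts[group][score_band(score_pct)] += 1

    percentages = {}
    for group, tally in counts.items():
        total = sum(tally.values())
        percentages[group] = {
            band: (100.0 * tally[band] / total if total else 0.0)
            for band in BANDS
        }
    return percentages


# Hypothetical toy records (courses completed, postscore %), NOT study data.
toy_records = [
    (1, 20.0), (2, 50.0), (3, 75.0), (3, 40.0),
    (4, 80.0), (5, 72.0), (6, 35.0), (4, 30.0),
]

if __name__ == "__main__":
    for group, bands in band_percentages(toy_records).items():
        print(group, bands)
```

Keeping the cutoffs in a single function makes it straightforward to check how sensitive the group percentages are to the choice of band boundaries.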

As we move forward with curriculum design the HPI-CI will be useful to help us gauge course sequencing. We will follow students through their four years of undergraduate studies and analyze the impact of the course sequence on students' concept understanding. Based on our findings we will adjust the program curriculum and the recommended course sequence.

Goal 7: To Compare Learning Outcomes for Different Teaching Methods–For Example, One Class Has Exercises That the Other Class Lacks

RQ 7. Do Innovative Teaching Techniques Enhance Concept Learning? As we change our curriculum we are also changing our teaching style. We are working to implement active-learning activities that engage students in research-oriented learning (e.g., Campbell, 2002; Handelsman et al., 2007; Jurkowski et al., 2007; Prince et al., 2007; Ebert-May and Hodder, 2008). Because all faculty members in the Department of Cell Biology and Molecular Genetics who have research expertise in HPI are involved in this project, we have a unique opportunity to infuse our HPI courses with current research problems and scientific approaches. We are planning to use the HPI-CI to assess student progress on specific learning goals targeted by the activities that we develop. We will look at student pre- and postscores and student performance on particular questions that target the learning goals of each activity.

SUMMARY AND IMPLICATIONS

As a faculty teaching community working with large populations of students in a set of nine interlinked courses, we developed the HPI-CI to be used as a pre- and postmeasure. We developed the HPI-CI to assess student learning with the goal of establishing evidence to drive our curriculum reform. The HPI-CI development process involved iterative review of questions with detailed analysis of student responses and explanations for responses.

During the development phase we began to see the value of the HPI-CI in a broader way. With our overall goals of engaging students in deep learning of HPI concepts and developing a learning progression between our courses, the HPI-CI process was informing us in ways we had not expected. We used the HPI-CI qualitative and quantitative data to address seven research goals, and a variety of questions about student learning in HPI courses (Table 2).

From analysis of pre- and postscores (Table 3) in our introductory General Microbiology course, we found that students made significant gains in understanding HPI concepts, independent of course instructor. This level of understanding was maintained: students' postscores from General Microbiology were similar to their prescores in the advanced courses.

The overall student gain in understanding, as indicated by comparing presurvey scores from General Microbiology (the prerequisite course) with postsurvey scores after completion of Immunology (the course generally taken last in the sequence), was significant (from ≈30% average score to ≈60% average score, p < 0.001). Although it is typical with concept inventories that average scores on the postsurvey do not reach 100%, the 60% average score motivated us to probe into students' responses for each question. By mapping concepts to course learning goals in a curriculum matrix, we analyzed student performance course by course and question by question. We confronted our expectations with data on student performance. We found that it was very important not to interpret the Concept Inventory results in isolation. We looked at possible confounding variables such as gender and major. For example, student gains in understanding of HPI concepts in General Microbiology were correlated with prior completion of the UM sophomore-level Genetics course. This finding spurred us to discuss a view held by those of us who teach General Microbiology: Students with a more sophisticated understanding of genetics are better prepared to learn in General Microbiology. This view has implications for our curriculum design, and the HPI-CI data have prompted us to revisit the prerequisite courses for General Microbiology.

The HPI-CI was also useful to anchor discussions related to teaching across institutions. Using the HPI-CI as an objective tool we found an avenue to delve into discussions of learning goals and teaching approaches as we compared performance of students at UM and at MC. The discussion led us to a common understanding of student needs and, as a result, stimulated a cross-institution curriculum design project.

Wenger (1998) describes the value of communities in addressing a particularly challenging endeavor. The “endeavor” serves as the catalyst for group work, and the solution comes from the interplay among members of a community working collaboratively toward a joint solution or “meaning making.” Our endeavor is student learning, and as it turned out, the best catalyst to get us talking was the HPI-CI results. As a teaching community we found that the HPI-CI anchored and deepened discussions of student learning. Reading students' responses allowed us to see the gap between our understanding and students' understanding (Anderson and Schonborn, 2008; Schonborn and Anderson, 2008). Confronting our expectations of student learning (Table 4) with student responses challenged us to think and converse in a reflective manner. Working together during this process compounded the value of the work, as each member brought individual perspectives and arguments to it. The use of the HPI-CI as a broad program assessment tool and the approach we used to discuss our findings should be transferable to other groups interested in communal work on curriculum reform.

ACKNOWLEDGMENTS

Special thanks to Gustavo C. Cerqueria for support and guidance related to data management. This research was supported in part by a grant to the University of Maryland from the Howard Hughes Medical Institute through the Undergraduate Science Education Program, and by a grant from the National Science Foundation, Division of Undergraduate Education, DUE0837515 CCLI-type 1 (Exploratory). This work has been approved by the Institutional Review Board as IRB 060140.