Examining the Role of Leadership in an Undergraduate Biology Institutional Reform Initiative

Abstract

Undergraduate science, technology, engineering, and mathematics (STEM) education reform continues to be a national priority. We used a descriptive case study method to explore the leadership issues that arose during implementation of a reform initiative in undergraduate biology at a research-intensive university. The data were drawn from transcripts of meetings held over the first 2 years of the reform process. Two literature-based models of change were used as lenses through which to view the data. We find that articulating clear outcomes, developing a shared vision across stakeholders of how to achieve those outcomes, providing appropriate reward systems, and ensuring that faculty have ample opportunity to influence the initiative all appear to increase the success of reform by easing the burden of the initiative on faculty. We assessed the two literature-based models and present an extended model of change that moves from change in STEM instructional strategies to STEM organizational change strategies. These lessons may be transferable to other institutions engaging in education reform.

INTRODUCTION

The national focus on science, technology, engineering, and mathematics (STEM) continues to be a strong driver in both education and research, exemplified by President Obama’s announcement at the 2015 White House Science Fair that foundations, schools, and businesses would contribute more than $240 million in new funding to provide STEM opportunities for students and to help early-career scientists stay in research (White House Office of the Press Secretary, 2015). At the postsecondary level, national reports and initiatives across disciplines continue the clarion call for systemic improvement in STEM education, driven by the need for scientific literacy among the general population, a workforce that can innovate in STEM fields, and increased numbers of K–12 teachers with strong discipline-specific pedagogical preparation (National Research Council [NRC], 2010, 2012a; President’s Council of Advisors on Science and Technology, 2012; Association of American Universities, 2013). Despite high levels of funding and publicity for STEM education, systemic reform across the disciplines at the undergraduate level has been slow (Dancy and Henderson, 2008; Fairweather, 2008; Austin, 2011; Marbach-Ad et al., 2014). Breadth of coverage continues to dominate depth of coverage in many introductory, gateway science courses (Daempfle, 2006; Momsen et al., 2010; Alberts, 2012; Luckie et al., 2012), student attrition in STEM fields continues at a high rate (Chen, 2013; King, 2015), and a gap between men and women and between majority and minority students persists in in-class participation, overall course grades, and degree attainment (Madsen et al., 2013; Eddy et al., 2014; Gayles and Ampaw, 2014; Brown et al., 2016), although some conflicting evidence shows that the gender gap is closing (Ceci et al., 2014; Miller and Wai, 2015).

Much of the funding and research aimed at improving student outcomes in postsecondary STEM education has targeted the classroom level, particularly the shift from instructor-centered, lecture-based instruction to evidence-based pedagogies that include active student participation and frequent feedback (Fairweather, 2008). Indeed, myriad strategies (providing curricular materials, instituting reward structures, and implementing evaluation policies, for example) have been used to encourage faculty to adopt teaching practices that are congruent with how students learn (Henderson et al., 2011), and implementing active-learning pedagogies can produce significant gains in student outcomes (Hake, 1998; Pascarella and Terenzini, 2005; Kuh et al., 2011; Freeman et al., 2014). Regardless of the strategy, however, changing pedagogy alone does not fully address large-scale issues of persistence, gender-based and race-based achievement gaps, and the need for deep content coverage in introductory courses. Systemic reform that can outlast any one compelling faculty member or group requires attention to factors beyond pedagogy, such as instructor beliefs, alignment of curriculum within and across disciplines, and departmental culture (Fisher et al., 2003; Austin, 2011; Hora, 2014; Kezar et al., 2015). The study of change strategies specifically in reform of undergraduate STEM education is a relatively new field that offers promise in thinking about how multidimensional approaches can improve teaching and learning in STEM, drawing on knowledge about how people learn, the realities of existing faculty reward systems, and our inherent resistance to change (Dancy and Henderson, 2008; Davis, 2014; Finelli et al., 2014). To extend this conversation, here we examine the multifaceted role of leadership in a reform initiative focused on improving teaching and learning in undergraduate biology at a research-intensive university.

Among all the factors that influence undergraduate STEM education, what is the value of focusing on leadership in particular? Comprehensive undergraduate education reforms challenge long-standing beliefs, require substantial time to implement, demand the attention of many stakeholders, and ideally result in both structural and cultural changes (Argyris, 1999). For example, the reform initiative in this study attempted to address curricular coherence, pedagogy, governance of service courses, and student support structures such as advising and career guidance, all across multiple biological disciplines and within the same time frame. For this kind of substantial intervention, Kezar (2014) cites collaborative leadership as a mechanism that supports change by helping many different individuals make sense of the change process. In other words, sharing the leadership of a change process among many stakeholders gives all of them the opportunity to assess what the change process means for them and their immediate colleagues. In this way, shared leadership gives others a reason to pay attention, because they can directly influence decision making. Here, we focus on describing the leadership issues that arose in implementation of an undergraduate biology transformation initiative.

The primary audience for this study is provosts, deans, and department chairs, because they are predominantly tasked with large-scale reform efforts and these positions are typically long-term, although the findings presented here offer insight for grassroots leaders as well. Further, this study is directed toward research-intensive institutions, because among all kinds of higher education institutions, they most acutely face a tension between research and teaching missions. Many universities are facing challenges such as increasing student enrollments, decreasing external funding, the rise of online education, and the adoption of missions traditionally outside the purview of higher education, such as entrepreneurship and social change (NRC, 2012b; Research Universities Futures Consortium, 2012). However, the predominant pressure on tenure-track faculty at research-intensive universities remains producing high-quality research and securing external funding; issues of undergraduate education are, at best, a secondary priority (Fairweather, 2002). Teaching undergraduate courses, for example, especially the large introductory courses that serve many different degree programs, does not easily fit into the career trajectory of specialized researchers and has led to a rise in teaching by non-tenure-track faculty (Kezar and Sam, 2010; Snyder and Dillow, 2015). Given the pressure on administrators and faculty at research-intensive universities to maintain research programs, we present insights into an initiative to reform undergraduate biology education as a way to provide guidance to administrators who are making changes in their own undergraduate programs.

Biology is a challenging field in which to study undergraduate education reform because of the breadth and depth of biology-related disciplines. At the university in this study, nine departments and programs, herein referred to as departments for simplicity, participate in undergraduate biology education, with additional programs devoted to graduate education and general courses for nonmajors. Further, concerted calls for biology education reform have proliferated because of the rapid rise of interdisciplinary fields such as genomics, proteomics, and bioinformatics (NRC, 2003, 2009; Association of American Medical Colleges and Howard Hughes Medical Institute, 2009; Wood, 2009; American Association for the Advancement of Science, 2011). Biology-related departments tend to provide a high level of service to students from across the university (e.g., psychology and engineering majors, and all students preparing for medical school or other health-related professional schools), which complicates curricular decisions, and traditional biology departments may need to collaborate with agriculture- and medicine-related departments and colleges. Getting these multiple competing groups moving in one direction presents a major organizational challenge.

We studied a reform process in undergraduate biology using a descriptive case study method and the following research question: What leadership issues arose in implementation of an undergraduate biology transformation initiative? We used an interpretive perspective that values the multiple voices present in an organization and longitudinal analysis that connects current events to a historical context, and we provide readers with enough descriptive data to be able to fully reflect on our interpretations (Tierney, 1987). Because every university has local issues and contextual realities that require attention, we do not attempt to provide an algorithm for other change agents to follow. Rather, we offer insights into the leadership of a reform process that may be transferable to other institutions, depending on local needs.

THEORETICAL FRAMEWORK

We used two different models of change in undergraduate STEM education for theory triangulation, that is, applying different perspectives to the same data set to identify whether different theories converge on the same set of findings, thereby increasing the validity of the results (Patton, 2014). We did not set out to test these two models explicitly but rather to gather data that aligned with the models and then use the models as lenses through which to view the reform effort. Both models originally targeted the factors and change strategies that encourage faculty members to adopt evidence-based instructional practices. The reform process discussed here includes reform of instructional practices in addition to other goals, but the two models were still appropriate because they readily apply to many issues of educational change.

The Henderson Model

The conceptual model developed by Henderson et al. (2011; herein referred to as the “Henderson model”) categorizes change strategies for reform of undergraduate STEM instructional practices along two dimensions. The first dimension describes whether the outcomes of the reform process are predetermined or emergent, and the second describes whether the target of the reform process is individual faculty or an environment. Crossing these two dimensions yields four categories of change strategies: disseminating curriculum and pedagogy, developing reflective teachers, enacting policy, and developing shared vision. Through their review of the literature from three typically separate fields (discipline-based education research, faculty development research, and higher education research), the authors identified developing shared vision as an effective change strategy, although it is relatively new to studies of STEM in higher education. The main tenet of developing shared vision is to bring together members of an institutional unit to identify a collective perspective that will lead to widespread adoption and sustainable change.

The Austin Model

A model for promoting reform in undergraduate science education developed by Austin (2011) (herein referred to as the “Austin model”) was used as a second framework through which to view the data. This model includes four institutional components that affect faculty members’ decisions about teaching: reward systems, work allocation, professional development, and leadership. Reward systems are the policies and structures that institutions use to communicate the relative value of different faculty activities, such as the importance of research versus teaching in promotion and tenure decisions; work allocation is the amount of time allocated to different faculty activities and how flexible faculty can be in choosing to use their time to improve their teaching; professional development can be department-, college-, or institution-level activities designed to support faculty adoption of evidence-based teaching practices; and leadership is the support provided by department chairs and administrators in creating cultures that allow for pedagogical innovation and reward faculty accordingly. An important feature of the Austin model is that each of the four components can act as a barrier to change or as a lever for change.

METHODS

Context

This research focuses on the leadership of an initiative to broadly improve teaching and learning in biology at a research-intensive university (RIU), a large 4-year public university with very high research activity (Carnegie Classification of Institutions of Higher Education [Indiana University Center for Postsecondary Research, n.d.]). RIU has high undergraduate enrollment that has steadily increased since 2000, in line with national statistics on overall undergraduate enrollment at 4-year institutions (Kena et al., 2015). The undergraduate enrollment in RIU’s Natural Science College (NSC), however, has increased by more than 50% since 2000, to ∼5000 students, vastly outpacing RIU’s overall undergraduate growth. In any given year, more than 70% of NSC students major in biology, with the remaining students split roughly evenly between mathematics and physical sciences. Annual enrollment in the first introductory biology course (Bio 101) doubled over the same period, from 1000 to 2000 students, with Bio 101 serving both biology majors and nonmajors from more than 100 different degree programs in a typical academic year. Commensurate administrative funding and tenure-track faculty lines did not keep pace with this increase in student demand.

NSC chairs and directors (referred to as chairs for simplicity) of biology-related departments proposed to the provost what became known as the Life Sciences Initiative (LSI), a long-term, multimillion-dollar umbrella program for improvements to teaching and learning in biology. The LSI was intended to reach all nine separate NSC departments that participate in biology education, one of which is a decentralized introductory biology program devoted to teaching the two-semester sequence of introductory lectures and laboratories. Two contextual factors are key in this study: nine separate departments are involved in biology education at RIU, and three of the six original proposal authors were succeeded by new chairs within the first year of the LSI. The provost’s office provisionally funded a portion of the requested budget for the first year of the project, with the stipulation that evidence of improved student learning would lead to stepwise funding increases in subsequent years. As with any proposal, the plan of action and outcomes of the project differed somewhat from those outlined in the proposal, both because funding was reduced relative to the original request and because the needs of the biology departments changed as the LSI progressed over the first few years. Throughout this paper, we refer to college-level administrators as “administrators” and to provost’s office–level administrators as “upper administration.”

The proposal sought to address three problems: 1) the large influx of students had led to a high number of uncoordinated introductory course sections; 2) biology degree programs did not include sufficient upper-level laboratory experiences; and 3) major curricular revision was needed in a particular degree program that, despite representing 40% of biology students overall, had no dedicated faculty, upper-level courses, or physical space. The proposal centered on actions that its authors thought would address these issues by moving toward a curriculum aligned both horizontally (across sections of a course) and vertically (throughout a degree program) for all students. Major recommendations included 1) appointing faculty “course committees” to identify and implement reforms of the two core introductory lecture courses and of second-tier biology courses (e.g., genetics, ecology, and evolution) and 2) soliciting proposals from departments for particular reforms they wanted to carry out that would lead to improved student learning. It was anticipated that several structural, pedagogical, and curricular changes would be required to achieve the goals set forth in the proposal. In addition to the course committees, the LSI implemented two leadership committees to oversee all LSI activities. The functions of these two committees are described in the next section.

Data Collection

To gather data on leadership during the implementation of the LSI, we collected direct observation and participant-observation data from related meetings in as many settings as possible that would shed light on the LSI over the first 2 years of the project. These data sources were selected because we could collect the data unobtrusively and they were the most relevant to the research question: What leadership issues arose in implementation of an undergraduate biology transformation initiative? Audio recordings of 42 meetings that occurred in 2013 and 2014 were collected, totaling 51 hours of data. The prolonged period of data gathering was an intentional technique to improve the credibility of the findings (Owens, 1982). Two types of triangulation (in addition to theory triangulation) support the validity of the results (Patton, 2014). For the purpose of data triangulation, audio from five different types of LSI-related leadership meetings was collected, mitigating the inherent bias in any single type of meeting or group of meeting participants. For the purpose of investigator triangulation, both authors either observed or were participant-observers in all except one of the meetings from which data were collected.

A numeric summary of the basic characteristics of these meetings is provided in Table 1. The meetings were of five types: 1) administrative strategist meetings, in which subsets of administrator and research faculty participants related to the LSI met to discuss day-to-day operations, planning, and evaluation; 2) chair and director meetings, composed of biology department chairs and NSC administrators and focused on allocating resources to different areas of the LSI through collective decision making; 3) curriculum leader meetings, composed of influential faculty members from each biology department (including current administrators, former administrators, and tenure-track and non-tenure-track faculty) and focused on providing guidance and feedback on the LSI; 4) departmental town hall meetings, held in each biology department as a specific mechanism to introduce the LSI to broader biology faculty communities; and 5) annual NSC faculty meetings, in which administrators provided updates about the progress of the LSI, among other things, to all faculty in the college. For the NSC faculty meetings, only the audio that involved discussion of the LSI was analyzed.

| Meeting type | Number of meetings | Average number of participants | Hours of audio data |
|---|---|---|---|
| Administrative strategists | 18 | 4 | 20.9 |
| Chairs and directors | 7 | 10 | 9.3 |
| Curriculum leaders | 11 | 10 | 15.6 |
| Departmental town hall | 4 | 16 | 5.4 |
| NSC faculty | 2 | >70 | 0.2 |
| Total | 42 | — | 51.4 |

The RIU institutional review board considered this work program evaluation; it was therefore classified as nonregulated research and not subject to review. It was nonetheless appropriate for external publication, because subject privacy is protected by obscuring identifying references.

Data Analysis

To examine the change strategies used by leaders in implementing the LSI, a complex, multifaceted phenomenon, we used a qualitative case study method (Yin, 2012). A case study was appropriate, because “the main research questions are ‘how’ or ‘why’ questions, we as researchers have little to no control over behavioral events, and the focus of study is a contemporary phenomenon” (Yin, 2014, p. 2). Further, a reform initiative like the LSI is an irregular event in the life of a university, so capturing as much detail as possible about the event was desirable. We used a descriptive case study method, because the purpose of the project was to identify what leadership issues arose during implementation of the LSI.

A transcription service was contracted to transcribe the audio recordings; this step marks the key division between the audio data collected and our interpretation of those data. We then reviewed the transcripts alongside the audio recordings to correct acronyms and speaker names, because we anticipated that the relationships among speakers would affect the meaning of the text. Dedoose (2016), a Web-based, mixed-methods program that supports real-time collaboration, was used to organize and document the transcripts, codes, and excerpts and to maintain chains of evidence (Yin, 2014). For example, as a reliability measure, every excerpt retains a unique identifier that allows it to be traced back to the source transcript and audio data in the event that broader context is needed for interpretation.

We created data analysis guidelines by examining the Henderson and Austin models and generating a list of the important features in each of the eight categories delineated in the articles (see the Supplemental Materials). The two frameworks primarily address changes in teaching practices, but the LSI targeted other types of change as well. As we coded the transcripts, we therefore recorded, for future reference, our interpretations of how the guidelines applied to noninstructional situations. The categorization criteria from the Henderson model (i.e., impacts individuals vs. environments and structures, targets prescribed vs. emergent outcomes) were especially helpful in this regard, because they are general constructs not specific to instruction.

To begin the data analysis, we selected a transcript, each coded it independently according to the guidelines, and met to normalize our coding process. The grain size for the excerpts was a conversation that contained a single idea or topic (Gee, 1986), regardless of the length of the conversation between participants. For example, a conversation at a departmental town hall meeting about the order in which certain first- and second-tier courses were chosen as the foci for course committees was coded as “enacting policy,” because the decision to start with particular courses was made by administrators without broader faculty input. A second example is the chair and director committee discussing the need to achieve broad representation from multiple departments on particular course committees, coded as “developing shared vision,” because the goal of broad representation was to garner as much attention and buy-in for the committee activities as possible. A third example is an administrator in a curriculum leader meeting informing the committee that a faculty member was changing positions in order to have more oversight of the reform process (a change the committee had discussed and agreed upon), coded as “work allocation and leadership,” because the official duties of the faculty member changed so that he or she would play a larger role in formal leadership of the LSI.

We used the constant comparative method (Lincoln and Guba, 1985), in that normalizing consisted of discussing why our code applications to the excerpts were or were not appropriate given both prior code applications and the features from the two frameworks. This is a structured approach to grounded theory, because we used a particular coding paradigm from the beginning of data analysis (Corbin and Strauss, 2015). To gain a broad understanding of the variation in the data, we coded one transcript of each of the five meeting types separately and then reconciled before coding the remainder of the transcripts in a random order. Because of the length and complexity of the transcripts, both authors continued to code every transcript, meeting to reconcile any points of disagreement. Therefore, as a validity measure, all excerpts and codes reflect agreement on the part of both authors.

Viewing the data through the lenses of the Henderson and Austin codes provided the basis for explanation building, the analytical technique we used to develop themes about the leadership issues that arose during implementation of the LSI. Explanation building is an iterative process in which an initial theme is proposed, tested against a subset of the data, revised accordingly, compared with a different subset of data, and so forth (Yin, 2014). Repeated behaviors, ways of thinking, and words and phrases were key indicators for identifying themes in the transcripts (Ryan and Bernard, 2003), and again, engaging multiple analysts was a tactic to increase the reliability of the themes (Miles et al., 2014). We stopped iterating once additional data no longer changed the emergent themes. Although we have presented these analytical steps as sequential, they were not always neatly contained, and preliminary analyses occurred even while the data were being collected (Rist, 1982).

Limitations

Text data are inherently limited in that they lose features of nonverbal communication that can be important for conveying meaning in conversation. However, both authors either observed or were participant-observers in all except one of the meetings from which data were collected, so we were oftentimes able to recall particular exchanges. Also, immediately before coding, we reviewed our meeting notes and the audio recordings, which conveyed some aspects of nonverbal communication such as tone, pacing, and volume.

There were four meetings that we would otherwise have coded but could not, because we either had no audio data or the audio was of too poor quality to transcribe. In addition, the LSI was discussed at some meetings to which we were not privy, such as regular departmental faculty meetings and meetings between administrators and upper administration. Even so, our data set represents the vast majority of formal activity regarding leadership of the LSI and provides a sufficient basis for analysis. To support the construct validity of this study (Owens, 1982), we also had two key colleagues read the penultimate draft of this case study report, reviewing it for any inconsistencies in our assumptions and interpretations. These colleagues were critical participants in multiple LSI committees but did not work on these specific analyses.

Acting in part as participant-observers in this research study presents some challenges for data analysis, given that we occasionally advocated for particular courses of action in the meetings (Becker, 1958). Because of this potential bias, we were especially restrictive in coding excerpts in which we were prominent participants. It is precisely because of our participant-observer roles that we had access to these meetings, which otherwise would have been inaccessible to researchers given the nature of the LSI; as such, these data provide up-close and in-depth coverage of the case.

ANALYSES

We summarize the numeric results of the coding scheme in the first of the following sections. These results are then used as a basis for understanding the themes that emerged from the data, discussed in the next seven sections. Collectively, these themes explain the leadership issues that arose in implementation of the LSI, a large college-wide reform initiative. We have included a substantial number of quotations so that readers have enough information to assess our interpretations, and we made minor edits to the quotations to facilitate reading (Boeije, 2009).

Summaries of the Henderson and Austin Models Code Applications

Table 2 summarizes how frequently the codes from the Henderson and Austin models were applied to excerpts of the transcripts. We used this summary as a starting point for understanding the corpus of data. For example, in all five meeting types, the enacting policy and developing shared vision categories, which focus on outcomes targeted at organizational structures and environments, dominated in comparison with the codes that focus on changing individuals. Issues of reward systems, work allocation, and professional development appeared roughly equally across meeting types. The predominance of leadership was expected, given that essentially all of the meetings from which the data were sourced centered on how the LSI would be implemented and sustained. To normalize the counts across meeting types, we divided the hours of audio coded for each meeting type by the number of excerpts marked with a particular code, which gives the average number of hours between occurrences of that code; lower values therefore indicate more frequent codes. For example, a code that appeared twice in the 20.9 h of administrative strategist meetings occurred on average once every 10.5 h.

| Code | Measure | AS | C&D | CL | DTH | NSC | Total |
|---|---|---|---|---|---|---|---|
| Disseminating curriculum and pedagogy | C | 2 | 2 | 5 | 3 | 0 | 12 |
| | F | 10.5 | 4.7 | 3.1 | 1.8 | — | 4.3 |
| Developing reflective teachers | C | 10 | 1 | 2 | 4 | 1 | 18 |
| | F | 2.1 | 9.3 | 7.8 | 1.4 | 0.2 | 2.9 |
| Enacting policy | C | 92 | 22 | 33 | 18 | 6 | 171 |
| | F | 0.2 | 0.4 | 0.5 | 0.3 | 0.0 | 0.3 |
| Developing shared vision | C | 63 | 32 | 56 | 7 | 5 | 163 |
| | F | 0.3 | 0.3 | 0.3 | 0.8 | 0.0 | 0.3 |
| Reward systems | C | 37 | 9 | 12 | 11 | 1 | 70 |
| | F | 0.6 | 1.0 | 1.3 | 0.5 | 0.2 | 0.7 |
| Work allocation | C | 33 | 16 | 13 | 11 | 1 | 74 |
| | F | 0.6 | 0.6 | 1.2 | 0.5 | 0.2 | 0.7 |
| Professional development | C | 26 | 10 | 15 | 9 | 1 | 61 |
| | F | 0.8 | 0.9 | 1.0 | 0.6 | 0.2 | 0.8 |
| Leadership | C | 146 | 40 | 65 | 13 | 11 | 275 |
| | F | 0.1 | 0.2 | 0.2 | 0.4 | 0.0 | 0.2 |

AS, administrative strategists; C&D, chairs and directors; CL, curriculum leaders; DTH, departmental town hall; NSC, NSC faculty. C, number of excerpts marked with the code; F, hours of audio per coded excerpt.
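The normalization behind the F rows of Table 2 amounts to dividing the hours of audio for a meeting type (Table 1) by the number of excerpts carrying a code. The Python sketch below reproduces that arithmetic under stated assumptions: the function names are hypothetical, and the use of decimal arithmetic with round-half-up is our assumption about how the published values were rounded (binary floating point would turn 20.9 / 2 into 10.4 rather than 10.5).

```python
from decimal import Decimal, ROUND_HALF_UP
from typing import Dict, Optional

# Hours of audio coded for each meeting type, copied from Table 1.
HOURS: Dict[str, Decimal] = {
    "AS": Decimal("20.9"),
    "C&D": Decimal("9.3"),
    "CL": Decimal("15.6"),
    "DTH": Decimal("5.4"),
    "NSC": Decimal("0.2"),
}
TOTAL_HOURS: Decimal = sum(HOURS.values(), Decimal(0))  # 51.4 h across all meetings


def hours_per_excerpt(hours: Decimal, count: int) -> Optional[Decimal]:
    """Average hours of audio per excerpt carrying a code (one F cell).

    Returns None when the code never appeared, which the table shows as a dash.
    Half-up rounding to one decimal place is an assumption about the authors'
    rounding; Decimal avoids binary floating-point error (20.9 / 2 -> 10.5).
    """
    if count == 0:
        return None
    return (hours / Decimal(count)).quantize(Decimal("0.1"), rounding=ROUND_HALF_UP)


def frequency_row(counts: Dict[str, int]) -> Dict[str, Optional[Decimal]]:
    """Turn one C row (counts per meeting type) into the matching F row."""
    row: Dict[str, Optional[Decimal]] = {
        meeting: hours_per_excerpt(HOURS[meeting], n) for meeting, n in counts.items()
    }
    # The Total column pools all 51.4 h against the summed count.
    row["Total"] = hours_per_excerpt(TOTAL_HOURS, sum(counts.values()))
    return row


if __name__ == "__main__":
    # C row for "Disseminating curriculum and pedagogy" from Table 2.
    disseminating = {"AS": 2, "C&D": 2, "CL": 5, "DTH": 3, "NSC": 0}
    for meeting, f in frequency_row(disseminating).items():
        print(meeting, "-" if f is None else f)
```

Run on the disseminating curriculum and pedagogy counts, the sketch yields 10.5, 4.7, 3.1, 1.8, a dash, and 4.3, matching the published F row; the other rows can be checked the same way.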

We allowed individual excerpts to be marked with multiple codes; Table 3 provides the number of excerpts in which each code was marked alongside each other code. As with Table 2, we constructed this summary as a way to begin to understand the overlapping nature and interdependence of particular codes, recognizing that the two models we used to code the data are not fully orthogonal. For example, developing shared vision was nearly always co-coded with leadership (155 of 163 excerpts), and enacting policy was co-coded with leadership in most cases (101 of 171 excerpts). In contrast, disseminating curriculum and pedagogy was never co-coded with leadership. These results suggest that leaders of the LSI did not intend to use disseminating curriculum and pedagogy as a primary lever for creating change, an idea also supported by quotations presented throughout the rest of the Analyses section.

Table 3. Number of excerpts co-coded with each pair of codes

| Code | Measure | Developing reflective teachers | Enacting policy | Developing shared vision | Reward systems | Work allocation | Professional development | Leadership |
|---|---|---|---|---|---|---|---|---|
| Disseminating curriculum and pedagogy | C | 1 | 2 | 0 | 0 | 2 | 12 | 0 |
| | F | 51 | 26 | — | — | 26 | 4 | — |
| Developing reflective teachers | C | | 7 | 5 | 5 | 4 | 11 | 9 |
| | F | | 7 | 10 | 10 | 13 | 5 | 6 |
| Enacting policy | C | | | 18 | 33 | 29 | 17 | 101 |
| | F | | | 3 | 2 | 2 | 3 | 1 |
| Developing shared vision | C | | | | 33 | 24 | 12 | 155 |
| | F | | | | 2 | 2 | 4 | <1 |
| Reward systems | C | | | | | 14 | 9 | 54 |
| | F | | | | | 4 | 6 | 1 |
| Work allocation | C | | | | | | 17 | 46 |
| | F | | | | | | 3 | 1 |
| Professional development | C | | | | | | | 20 |
| | F | | | | | | | 3 |

C, number of excerpts marked with both the row code and the column code; F, average hours of coded transcript (all meeting types combined) per co-coded excerpt. Cells below the diagonal are left blank because they would repeat entries shown above it.
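Because an excerpt could carry several codes, the co-coding counts in Table 3 amount to counting unordered pairs of codes per excerpt. The sketch below assumes each excerpt is represented as a set of code labels; the excerpts are hypothetical toy data, not the study's transcripts, and `co_occurrence` and `share_co_coded` are illustrative helper names rather than part of the study's method.

```python
from collections import Counter
from itertools import combinations
from typing import List, Set

# Hypothetical toy excerpts: each is the set of codes applied to one excerpt.
EXCERPTS: List[Set[str]] = [
    {"Enacting policy", "Leadership"},
    {"Developing shared vision", "Leadership"},
    {"Enacting policy", "Developing shared vision", "Leadership"},
    {"Disseminating curriculum and pedagogy", "Professional development"},
    {"Enacting policy", "Reward systems"},
]


def co_occurrence(excerpts: List[Set[str]]) -> Counter:
    """Count, for each unordered pair of codes, the excerpts carrying both."""
    pairs: Counter = Counter()
    for codes in excerpts:
        # Sorting makes each pair key independent of set iteration order.
        for a, b in combinations(sorted(codes), 2):
            pairs[(a, b)] += 1
    return pairs


def share_co_coded(excerpts: List[Set[str]], code: str, partner: str) -> float:
    """Fraction of excerpts carrying `code` that also carry `partner`."""
    with_code = [c for c in excerpts if code in c]
    if not with_code:
        return 0.0
    return sum(partner in c for c in with_code) / len(with_code)


pairs = co_occurrence(EXCERPTS)
print(pairs[("Enacting policy", "Leadership")])  # 2
print(share_co_coded(EXCERPTS, "Enacting policy", "Leadership"))
```

Applied to the study's own figures, the same share calculation gives 155/163 ≈ 0.95 for developing shared vision co-coded with leadership and 101/171 ≈ 0.59 for enacting policy.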

Enacting Policy and Developing Shared Vision Evolved to Become Equally Important Change Strategies

The Henderson model identified enacting policy as an ineffective method for changing instructional practices. However, when we broadened the research focus to leadership of an education reform initiative, we found evidence across all five types of meetings that both the enacting policy and developing shared vision change strategies were perceived as important for communicating LSI priorities and garnering faculty buy-in. A key feature of this finding is that the two change strategies seemed to need to be in balance, not merely both present. For example, a curriculum leader committee member speaking to an administrator commented on the development of a course committee that

I worry a little bit about being too prescriptive with these. I think you have the right people involved and you have to be careful about how much guidance you give. If you give too little you’re floundering, but there’s a certain amount of working at it by trial and error that I think serves everyone well. I would not aim for a very neat and clean process.

This curriculum leader committee member values the ability of the course committees to have some leeway and discretion in their reform processes but states that too little guidance on the part of the administration leaves the committee “floundering” without a clear objective. An LSI administrator shared the same sentiment that reform efforts have to be guided by some imposed “boundary conditions” yet without completely predetermined outcomes: “I envision [the administrative strategist committee] as arranging the boundary conditions so the solution turns out a certain way. We can arrange boundary conditions. We can’t make things happen in the box. Each department has to do that.”

The perceived importance of combining the enacting policy and developing shared vision strategies became apparent through its contrast with the initial approach to LSI implementation. Early on, administrators stressed a developing shared vision approach, in that they would not prescriptively impose behavior changes on the faculty or on the course committees appointed to investigate particular areas of the curriculum. For example, an administrator commented,

I don’t want to be prescriptive here but as we try to institutionalize improvement, the most important thing is to have as broad a conversation as possible so everybody feels like their point of view is being included and we come to decisions that everybody can live with.

Responsibility for determining the early outcomes of the LSI, then, rested not with the chairs or administrators but with the course committees and with the individual faculty and departments that would submit proposals for funding. The heavy emphasis on faculty autonomy to “shape the change that would be institutionalized” seemed to contribute to a fractured process at the beginning, for three reasons: there were few limits on what could be addressed as part of the reform effort; the LSI was not a primary priority for these faculty, all of whom balanced teaching and research responsibilities; and a large number of departments and committees were involved. A curriculum leader committee member commented,

In my mind, the thing that is the most important that is absent is a clear understanding of what the objectives are for the biology curriculum. So whether we’re talking about Genetics or Introductory Biology or Evolution, we don’t know yet what our curricular objectives are. Absent those, discussions about vertical integration and whether or not something is scalable or not are almost moot. We don’t know where we’re headed.

The effort to build faculty buy-in by asking them to largely determine their own outcomes eventually evolved into a process that incorporated more enacting policy in the form of accountability activities and “top-down” decision making yet still acknowledged the importance of allowing the local departmental cultures to influence the changes being made.

Administrators Intentionally Used Nonthreatening Questions and Positions to Reduce Barriers to Change

Administrators appeared to recognize early on that the LSI had the potential to provoke resistance among faculty, in part because a similar reform effort across RIU biology departments had been attempted approximately 7 years earlier and, in the eyes of many faculty, had failed. Indeed, a chair noted of the LSI, “This will be my fifth time reforming biology. So this is not a new thing. This has been going on for lots of years.” Because of this potential for resistance, administrators used an intentional leadership strategy of beginning the conversation about the LSI with questions they believed all faculty would value. For example, an administrator said,

If we come in and say “You should teach using cooperative learning,” people are going to say, “I don’t want to teach using cooperative learning and I’m not convinced it works.” But if you focus on disciplinary core ideas and science practices, it becomes a different conversation.

Similarly, another administrator noted,

Curriculum alignment [both horizontal and vertical] is probably a real good thing to focus on now because, in the short term, I think that’s where we can produce outcomes we can boast about. And it’ll have propagating effects. Faculty, some have a lot of discomfort with evaluating classroom practice. But curriculum alignment, I think, is an expectation that we can have, and that is hard to argue against.

These administrators and others seemed to believe that faculty would readily be open to moving toward better curricular alignment and to ensuring that first- and second-year courses focused on scientific practices and the core disciplinary ideas of biology.

A related choice intended to reduce faculty resistance to the LSI was to have the chair of each department that had helped author the proposal hold a town hall–style meeting to introduce the department’s faculty to the LSI. A single slide presentation was developed in collaboration with all the chairs and an NSC administrator, and each chair then used that same presentation in his or her departmental town hall meeting. The administrator said, “The Life Sciences Initiative is a proposal that came from the chairs. It actually came out of the departments. And that’s why the chair has been hosting the presentation—to physically embody the message that this was originally an initiative that came from the chairs.” Approaching faculty with nonthreatening questions and positions thus emerged as an intentional leadership strategy of the LSI to reduce resistance to the reform process and increase acceptance and buy-in.

Curriculum-Level Change Seemed Difficult to Facilitate without a Shared and Broad Vision for Biology Education at RIU

A major recommendation of the original LSI proposal was to vertically align the biology curriculum, in part by reforming first-tier introductory courses and second-tier courses, such as genetics, ecology, and evolution, that are heavily used by multiple degree programs. For each course, administrators formed a corresponding faculty course committee. With input from the chairs, each committee was empaneled with instructors of the given course plus additional faculty to achieve broad representation from most biology departments. The goal for each course committee was to develop a shared vision of how its course, or topic area more broadly, would best fit into the overall curriculum, and to make that vision appeal to all stakeholders involved.

The course committee structure was implemented because both chairs and administrators valued the resident expertise in each department and understood the importance of developing shared vision for garnering faculty support. An administrator said,

In order to build on what people are learning, it’s important to strive for some common goals in these courses … The way we want to do that is by getting people together to specify what the outcomes should be for students. We’re not trying to specify exactly what should happen in every class to accomplish that. We don’t want to be prescriptive about exactly what you have to do, but there should be some common set of goals we’re trying to accomplish.

As the course committees began their work, however, it became apparent that it was difficult to determine how one particular course should or should not change relative to the overall curriculum when the committees had little understanding of the overall curriculum itself. Without a basis for understanding the overall curriculum, the course committee discussions were largely limited to their particular course of interest. A chair noted,

I think that we’ve been focusing on individual classes and I think that collapses what the LSI could do. I’d like to see us focus on institutional issues. And instead of saying, “Well this class is doing really well and this class is easy and this class gets all the resources and then in this class, nothing happens,” let’s think about, institutionally, what do we want?

Similarly, in a curriculum leader meeting, a chair of one of the course committees said,

The idea of starting with the individual courses is like the tail wagging the dog … Within our committee what kept coming up is “Well I don’t know if students need to know this. It depends on what they’re going to do and it depends on what kind of major they are.” And we couldn’t even within our committee define it for biology majors.

Because it seemed evident that the course committees had “changed the nature of the initiative from looking at institutional problems to individual courses,” the chair and director committee initiated a process of defining the overall purpose of biology education at RIU. By chance, this time frame overlapped with that of accreditation and the need for individual departments to provide explicit statements of learning outcomes for each of their degree programs. Both of these processes were achieved through developing shared vision, in the former case by the chair and director committee and in the latter case by the respective departments. Integrating these new understandings into the overall culture of the college is an ongoing process. Still, new course committees have benefited from the shared understanding of biology education at RIU to which all new courses, activities, and revisions can be aligned.

Leaders Moved toward a Governance System for the Introductory Courses That Emphasizes Collective Oversight

The NSC introductory biology courses serve a variety of stakeholder degree programs, both within and outside biology. Biology degree programs are offered in the Natural Science and Agriculture Colleges, among others. Outside biology, students in engineering and nursing degree programs are primary stakeholders, again among others. Because the introductory biology courses must meet the needs of so many different types of students, a decentralized Introductory Biology Program (IBP) had for many years been devoted solely to running the introductory biology courses for all students.

IBP drew instructors for courses from various departments across campus; however, IBP was not formally connected to the departments. This lack of formal connection created problems both with ownership of the courses themselves and with faculty teaching reviews for tenure and promotion; for example, department chairs could not easily see evaluations for instructors from their own departments, because the evaluations were routed through IBP. To promote more department-level ownership of these courses, administrators proposed a plan to assign oversight of particular introductory courses to particular departments. However, assigning particular courses to particular departments did not remove the need for multiple stakeholders to have input into each course. A department chair commented, “The [administrator] wanted more ownership by faculty and departments. And so he actually thought that each course should be assigned to a department. He realized that that doesn’t work because what you lose, then, is that cohesion of biology.”

The chair and director and curriculum leader committees in collaboration with administrators developed a plan for a new kind of governance of the IBP that would have “a different kind of oversight.” IBP would remain in place as the administrator of introductory biology courses that serve multiple departments, but a board of department chairs would take on the responsibility of overseeing the program and would meet regularly to participate in decision making about IBP and the introductory courses. An administrator commented,

What we’ve come to is the idea that the chairs should serve as the Board of Directors that has governing and approval authority over what happens in those courses … We think it is very important that the departments themselves have ownership of this whole thing, that governance should not stand outside the departments.

In this way, the governance of the introductory courses moved toward a structure in which the chairs could maintain shared vision for the courses as well as share responsibility for assigning sections, reviewing instructors, evaluating historical data trends, and other tasks. An administrator summarized, “We have a number of courses that are offered by particular departments but serve majors outside of their department … Therefore they serve common needs and we should be discussing them collectively,” and later, that “the intention is for the chairs to see themselves as custodians, stakeholders, stewards of [IBP].” This reform in governance was an informal change in work allocation for the chairs.

The Category “Developing Reflective Leaders” Emerged as a Way to Document Chairs and Administrators Being Continuously Engaged and Cognizant of Contextual Factors

When considered together, the Henderson and Austin models are fairly comprehensive in their coverage of change strategies for institutional reform. Still, a few patterns emerged from the data that did not fit neatly within a single category. We called one of these patterns “developing reflective leaders,” conceived as an intersection of the developing reflective teachers and leadership change strategies from the Henderson and Austin models, respectively. Excerpts coded as “developing reflective leaders” emphasized the importance of leaders consistently reviewing data and reflecting on their degree programs while also considering important contextual factors that would affect reform. The contextual factors that leaders grappled with ranged from course issues, such as reassigning an instructor from one course to another, to departmental issues, such as orienting a new chair, to institutional issues, such as budgetary constraints.

The idea of leaders engaging in a continuous improvement cycle in which reflection, action, and evaluation are ongoing processes contrasts with a more typical treatment of reform initiatives as short-term efforts championed by a few people. That RIU’s accreditation process had a significant focus on showing evidence of established continuous improvement cycles is a contextual factor that appeared to contribute to this pattern in the data. One administrator said,

In my mind, the number one goal is to institutionalize a system where a focus on outcomes drives attention to continual improvement. Right? So we as a faculty are focusing on outcomes. We are talking about how to measure them. We are talking to our colleagues … If we can get that feedback loop in place, that’s a real accomplishment.

Similarly, a department chair suggested to the larger group of chairs in a chair and director meeting that “constant monitoring” of courses and degree programs by both faculty and administrators is essential because “a lot of these things continually erode. So we’re always in the position of fighting erosion … And that’s why I don’t like to see [the LSI] as something new. This is something we need to be always doing.”

Lack of Alignment between the Funding Structure and Intended Outcomes Appeared to Incentivize Small-Scale Changes at the Expense of Large-Scale Institutional Reform

The original LSI proposal requested a large lump sum that would fund the proposal priorities and would then be added to the appropriate budgets in perpetuity as recurring funding; however, upper administration granted funding with a different structure. Specifically, upper administration developed a plan for incremental funding that started small (∼10% of the original request) but could be increased annually to eventually provide up to half the entire requested amount each year. Leaders of the LSI would need to provide evidence of improved student learning for the funds to increase from year to year. An administrator said, “In a way it’s very clever that they’re trying to make sure we keep our eye closely on the ball. And there are no guarantees, so every year we’re motivated to try to do a very good job.”

Upper administration did not specify what evidence of student learning would be required for securing increases in funding, and there appeared to be a great deal of uncertainty (as described in Enacting Policy and Developing Shared Vision Evolved to Become Equally Important Change Strategies) in balancing the two change strategies of developing shared vision and enacting policy. The original proposal contained many distinct priorities, and determining which priorities to target first was challenging. For example, during a departmental town hall meeting, a faculty member requested an explanation of what the overall goal of “improving student learning” meant, to which a chair and director committee member replied,

A better [phrase] might be improving student outcomes. [Upper administration] didn’t tell us what that means which is good in a way because they are really asking us to define what that means. We get the latitude to say, “This is what we’re going for.” But once we make that statement, we need to then show we’re accomplishing it. So that’s what a lot of our discussion is about right now. What does that mean? We have the latitude to define those metrics so we want to take care in what we say to [upper administration] about what we’re trying to do and then come through on it.

It seemed that upper administration used this stepwise funding scheme to build in accountability for the investment in the LSI and so maximize the likelihood that the funds would be used effectively. However, this accountability measure also appeared to contribute to a potentially unintended focus on short-term fixes rather than long-term, sustainable, and systemic solutions. The funding structure required annual reporting on improvements in student learning outcomes, which led to an emphasis on small, uncomplicated efforts that involved minimal collaboration with or input from multiple stakeholders. In the context of this reward system, two issues arose that seemed to promote and incentivize small-scale reform at the expense of large-scale institutional change. We note that the Austin model discusses the change target of reward systems only in relation to individual faculty members; we considered reward systems more broadly, because the annual allotment of LSI funds from upper administration could be interpreted as a reward to the college.

The first issue was that competition increased among both departments and courses for allocations of the limited funding pool. Especially in the first year of the LSI, funding to departments was on a first-come, first-served basis without a great deal of feedback from the leadership committees or accountability for the proposal writers to track intended outcomes. One administrator specified, “The allocations are going to be based on strong proposals from the departments focused on improved student learning outcomes and effective evaluation of that.” This type of message appeared to contribute to a competitive rather than collaborative environment, as one chair on the chair and director committee noted,

That has changed the nature of the [LSI] from looking at institutional problems to individual courses competing with each other for resources. That then tends to pull things apart instead of making a cohesive whole and I don’t think the [LSI] should be at that fine level of granularity.

Building on the sentiment that competition should be avoided, while curricular and interdepartmental collaboration should be promoted, another chair on the chair and director committee said,

I’ve heard frustration from a couple chairs that, you know, “What exactly do we ask for?” We’ve asked for things and gotten some things and I mean I think it’s really working. We might not have the greatest outcomes, but I know this should be spread across our departments. [We] shouldn’t be competing with each other.

Although the curriculum leader committee later generated explicit expectations for funding requests so as to more objectively decide which proposals should be passed on to the chair and director committee for funding consideration, the competition for funding between departments and courses still seemed to promote small-scale changes at the expense of large-scale institutional reform. Ultimately, the funding structure was a point of contention in building collaboration between departments to work toward a more coherent curriculum, even though building a coherent curriculum was one of the central priorities of the LSI.

A second issue we observed to be affected by the funding and reporting structure was that specific courses were targeted for LSI efforts and funding not because changes to courses were necessarily expected to have the most impact on student outcomes but rather because courses are closed systems that are easily defined. A chair and director committee member said, “[An administrator], as you’ve heard, wants to get going on the next second-tier courses. We want to empanel those committees because we want to be able to say where we’re continuing to make progress with reforming this.” It seemed that changing courses provided immediate evidence to upper administration that committees were being formed, courses were being discussed, and progress was being made. However, as was noted by a concerned faculty member, “Getting the right people in the room in order to effect change … that hasn’t happened and won’t happen if we just continue to have these little course committees,” and a department chair similarly highlighted the tension between focusing on short- versus long-term outcomes:

There are these two issues that I would like us to take on board. You’ve got the [pedagogical] innovation piece that we’re talking about a lot. And then, you’ve got the stewardship—what’s going to happen next week? And if we don’t have those in mind as two different functions that we have to achieve, then, they can actually get in the way of one another. “We can’t innovate because, by God, I [as a department chair] have got to have somebody in that class next Monday.” Or, “Who cares who’s in that class? I’m thinking about innovation. And three years from now, I’m going to have outstanding pedagogical reform.” So, those things have to be somewhat separated.

Compared with reforming a single course, even one with multiple sections taught by different instructors, developing coherence across the curriculum and across departments was more difficult (as described in Curriculum-Level Change Seemed Difficult to Facilitate without a Shared and Broad Vision for Biology Education at RIU), and documenting related changes in student learning outcomes on the short annual time frame seemed essentially impossible. The constraints of the annual funding and reporting structure appeared to offer little incentive or reward for the LSI to target and implement long-term efforts or solutions. Rather, leaders addressed “low-hanging fruit” issues that needed immediate attention and would provide fast results for reporting.

The LSI Was Designed to Target Not Only Student Learning but Also Institutional Structure, Faculty and Staff Support, and Curricular Alignment

The message from upper administration that “year-by-year increments of funding [would be] contingent upon demonstrating that the investments [were] improving student learning” was relayed to all biology faculty who attended a departmental town hall meeting. Yet the LSI proposal contained objectives that were not directly tied to student learning, such as hiring advisors within biology departments to increase student access to major- and career-specific advising; hiring an internship coordinator to enable greater student access to research and career experiences; and increasing the number of biology faculty in order to decrease course section sizes and encourage more active learning without increasing faculty workload. Although these interventions could be indirectly linked to student learning and potentially to student persistence and graduation, they fell outside the initial conception of what would be considered acceptable evidence of improvement to present to upper administration. As the initiative progressed, then, we perceived that leaders recognized that evidence of educational improvement would need to go beyond student learning for the LSI to be successful. Data presented here were coded as relating to leadership, because NSC administrators had to iteratively negotiate with upper administration and show that the institutional commitment to the LSI needed to be broad. One administrator discussed the need to state the LSI outcomes so that they would be clear to all stakeholders and noted that those outcomes should go beyond student learning:

One of the first things we need to do is think about articulating the LSI outcomes … and showing how we would measure that, whether or not we’ve achieved those outcomes. They include things like making sure that students have access to the appropriate courses … if you don’t have access, you’re not going to learn anything.

A department chair also seemed to recognize the importance of outcomes that did not directly tie to student learning. For example, regarding improving student access to a course that was historically a bottleneck in the curriculum, the chair said,

To me, that’s a huge thing that is easily assessed, and it’s a totally different scale … than pedagogy in the classroom, but terribly important to students and student satisfaction … It looks like fixing an institutional problem … If they only multiply out the pedagogy that they’re currently doing so that the students aren’t having to wait until they’re seniors before they take this class … If they only did that, I think it would be a huge improvement institutionally.

In the same meeting, an administrator echoed the idea that LSI outcomes should not be limited to those only related directly to student learning:

This raises the important issue that came up before, that we need to distinguish learning outcomes from other outcomes, because the learning outcomes are important, but if we consider programmatic assessment and programmatic evaluation, one [outcome] would be to give students access to the course. So, I completely agree with [a department chair] that this will achieve an outcome that was part of the original Life Sciences Initiative proposal.

Further, other outcomes that had not been articulated in the original LSI proposal developed organically and appeared to be viewed as improvements toward building a coherent biology curriculum. For example, following a workshop devoted to garnering faculty and instructor input on one of the introductory biology courses, Bio 101, a department chair said,

To me the workshop itself is the outcome. The discussions that happened during the workshop made people feel a sense of community and feel … that they’re actually doing something that is bigger than just “my little part.” They were really good discussions.

Similarly, an administrator later said, “I think the goodwill is the biggest outcome, most positive outcome of what has happened in the [Bio 101] community.” Over the course of the implementation of the LSI, these types of outcomes were more explicitly articulated and justified. These and other similar outcomes were used in annual reports to upper administration, providing evidence of progress and success of the LSI and supporting the case for continued funding.

DISCUSSION

Our goal in this study was to document a process of institutional reform and particularly to describe what leadership issues arose in implementation of an undergraduate biology transformation initiative. We focused on the LSI, an initiative to improve teaching and learning at a research-intensive university, because the primary pressure on faculty at this type of institution is not on undergraduate education; rather, the primary criteria for achieving tenure and promotion are obtaining funding, producing high-quality research publications, and becoming recognized by one’s peers as a leader in the research field. We used two frameworks designed for describing change in instructional practice in undergraduate STEM courses, the Henderson and Austin models, as lenses through which to view leadership of the LSI. Our analyses reveal how particular change strategies contributed to the leadership and implementation of the LSI. Here, we also assess the efficacy of the Henderson and Austin models for examining a reform effort and offer an extended model that moves beyond change in STEM instructional strategies to STEM organizational change strategies. This analytical process may serve as a model for reform efforts at other institutions, helping them obtain timely feedback and improve their potential for success.

Change Strategies Evident in a Biology Reform Effort at a Research-Intensive University

A quantitative analysis identified which codes were most common in each meeting type (Table 2). Administrative strategist meetings, the highest level of leadership meeting, were predominantly focused on enacting policy, whereas chair and director meetings, the next-highest level, focused more on developing shared vision. Curriculum leader meetings, which included more teaching faculty and fewer chairs or administrators, had frequencies of developing shared vision and enacting policy excerpts similar to those of the chair and director meetings. Apart from leadership, the Austin model codes were less aligned with the data and appeared less often than the dominant Henderson codes (enacting policy and developing shared vision); the most common code overall, unsurprisingly, given that it was the focus of the targeted meeting groups, was leadership.

Several patterns emerged in examining the overlap between the codes from the Henderson and Austin models (Table 3). The leadership code was highly aligned with both enacting policy and developing shared vision. As would be expected, disseminating curriculum and pedagogy and developing reflective teachers were best aligned with professional development. Aside from leadership, enacting policy and developing shared vision were co-coded most frequently with reward systems and work allocation.
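The overlap patterns described above can be checked directly against the counts in Table 3. The sketch below transcribes the C rows of the upper triangle of Table 3 using abbreviations introduced here only for brevity (DCP, DRT, EP, DSV, RS, WA, PD, L), mirrors them into a symmetric lookup, and ranks each code's co-coding partners. The function names are hypothetical; this is an illustrative check, not part of the study's analysis.

```python
from typing import Dict, List, Sequence, Tuple

# Abbreviations (introduced here): disseminating curriculum and pedagogy,
# developing reflective teachers, enacting policy, developing shared vision,
# reward systems, work allocation, professional development, leadership.
CODES = ["DCP", "DRT", "EP", "DSV", "RS", "WA", "PD", "L"]

# C rows of Table 3: row i lists co-coding counts of CODES[i] with CODES[i+1:].
UPPER: List[List[int]] = [
    [1, 2, 0, 0, 2, 12, 0],  # DCP with DRT, EP, DSV, RS, WA, PD, L
    [7, 5, 5, 4, 11, 9],     # DRT with EP, DSV, RS, WA, PD, L
    [18, 33, 29, 17, 101],   # EP with DSV, RS, WA, PD, L
    [33, 24, 12, 155],       # DSV with RS, WA, PD, L
    [14, 9, 54],             # RS with WA, PD, L
    [17, 46],                # WA with PD, L
    [20],                    # PD with L
]


def symmetric_counts(
    codes: Sequence[str], upper: List[List[int]]
) -> Dict[Tuple[str, str], int]:
    """Mirror the upper triangle so (a, b) and (b, a) give the same count."""
    counts: Dict[Tuple[str, str], int] = {}
    for i, row in enumerate(upper):
        for offset, n in enumerate(row, start=1):
            a, b = codes[i], codes[i + offset]
            counts[(a, b)] = n
            counts[(b, a)] = n
    return counts


def ranked_partners(
    code: str,
    counts: Dict[Tuple[str, str], int],
    codes: Sequence[str],
    exclude: Sequence[str] = (),
) -> List[str]:
    """Other codes ordered from most to least often co-coded with `code`."""
    candidates = [c for c in codes if c != code and c not in exclude]
    # sorted() is stable even with reverse=True, so ties keep CODES order.
    return sorted(candidates, key=lambda c: counts[(code, c)], reverse=True)


COUNTS = symmetric_counts(CODES, UPPER)

for code in ["DCP", "DRT", "EP", "DSV"]:
    print(code, ranked_partners(code, COUNTS, CODES, exclude=("L",))[:2])
```

Setting leadership aside, professional development ranks first for both disseminating curriculum and pedagogy (12 excerpts) and developing reflective teachers (11), while reward systems (33) and then work allocation (29 and 24) rank first and second for both enacting policy and developing shared vision.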

A key finding from this work is that a large-scale reform initiative like the LSI, which targets change in a substantial group of faculty and their environment, appeared more successful when it both drew insight from the local faculty level through developing shared vision and brought a sense of direction from administration through enacting policy. It is not unusual for STEM faculty to believe that leadership of reform processes should be exclusively bottom-up or top-down (Kezar et al., 2015). Garnering buy-in for and encouraging faculty ownership of a reform initiative through developing shared vision alone can be effective when the change is relatively noncontroversial and the institution or culture can readily prioritize it (Kezar, 2014). Research-intensive institutions, however, do not readily prioritize undergraduate education. Although faculty told us a number of times throughout this study that undergraduate education is more valued at research-intensive institutions than it was a decade ago (Association of American Universities, 2013), the cultural and fiscal pressures on tenure-track faculty at these institutions to produce research results still render change in undergraduate education a secondary priority, however well-intentioned faculty may be (Hora, 2012). At the same time, relying on enacting policy alone is also risky, because most top-down organizational change efforts fail (Burnes, 2011).

We perceived two main challenges in the LSI: poor alignment between the funding structure and the intended outcomes, and an initial lack of clarity that, to improve student success, the LSI should target institutional structure, faculty and staff support, and curricular alignment as well as student learning outcomes. Once LSI participants had identified and reflected on these challenges, we found that the LSI progressed more smoothly. In summary, we find that easing the burden of an undergraduate education reform initiative on faculty through articulating clear outcomes, developing shared vision across stakeholders on how to achieve those outcomes, providing appropriate reward systems, and ensuring that faculty have ample opportunity to influence the initiative all appear to increase the success of reform. These lessons may be transferable to other institutions engaging in education reform.

Assessing the Efficacy of the Models Used to Examine a Biology Reform Effort

We used the Henderson and Austin models for change in faculty instructional practice as frameworks within which to explore our data by identifying change strategies, pertinent levers and barriers, and change targets. In other words, we did not set out to test the two models but rather to examine how our data aligned with them and whether the data provided a basis for extending either model. The models overlap in their documentation of change strategies, particularly regarding professional development, and we found one area within each model that was not well represented in the other. The Henderson model distinguishes strategies that target environments and structures from those that target individuals, whereas the Austin model primarily discusses the actions of individuals. The Austin model, in turn, highlights that each change strategy can act either as a lever for change or as a barrier to change. We find that layering this idea of levers and barriers onto the Henderson model makes the Henderson model even more effective at explaining change: depending on the context and the particular issue at hand, enacting policy, for example, can act as either a lever for or a barrier to change.

Interestingly, levers for change and barriers to change were important for day-to-day management of the LSI yet were not as useful for quantitatively coding the implementation efforts. For example, when a faculty member on a course committee expressed concern about whether the LSI would be more effective than previous reform efforts, administrators used that feedback both to build a clearer discourse presenting the LSI as more promising than prior reform efforts and to identify a venue for discussing the concern with faculty stakeholders. Although the initial concern could be viewed as a barrier to change, because faculty were apprehensive about the reform, the response to allay the concern and garner buy-in was viewed as a lever for change. Many other instances in the transcripts similarly paired barriers and levers. Usually, either a barrier to change was identified (e.g., lack of communication to broader LSI stakeholders) and an associated lever for change was then discussed (e.g., creation of an LSI website), or a lever for change was identified (e.g., a new proposed governance structure) and associated barriers to change became apparent (e.g., lack of standard procedures for coordinating among chairs). Because a single exchange so often contained both a barrier and a lever, counting these codes did not meaningfully characterize the efficacy of implementing the LSI; we therefore did not discuss them in the Analyses section.

An Extended Model That Moves from Change in STEM Instructional Strategies to STEM Organizational Change Strategies

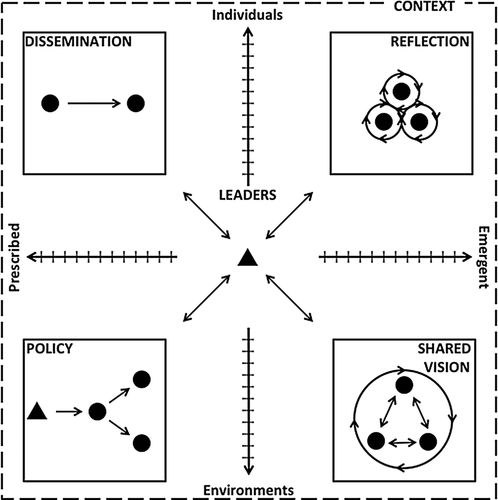

As we examined this case, we developed a model that moves from change in STEM instructional strategies to STEM organizational change strategies (Figure 1). We based this extended model on the Henderson model because it captures change strategies more explicitly, whereas the Austin model focuses on change targets.

FIGURE 1. Model of organizational change strategies for STEM reform efforts based on extending the original Henderson model to include leadership and context. Circles represent change members, and triangles represent change leaders, which could be top-down or bottom-up leaders. This model is generalizable, as change leaders could replace change members in any of the change strategies depending on the context of the change effort in question. (Source: The original model appeared in Henderson et al. [2011], published in the Journal of Research in Science Teaching by John Wiley & Sons, Inc., and the modified version is credited to Alexis Knaub and Western Michigan University’s Center for Research on Instructional Change in Postsecondary Education.)

The main extension we propose is the idea of leaders as a specific type of actor, distinguished from individuals involved in reform efforts who do not take on leadership roles. Leaders, who may also be referred to as “change agents” because they do not necessarily hold positions of formal authority (Kezar, 2014; Kezar and Lester, 2014), can contribute to any of the four change strategies that the Henderson model describes: a thought leader disseminating information to faculty in a seminar; a faculty learning community leader aiding reflection within the group; an official leader such as a president, provost, dean, or chair enacting policy; or a chair participating in developing shared vision.

Context was explicitly added to the model to highlight excerpts in which leaders appeared to recognize that a particular reform process would be limited or enhanced by a contextual factor. Indeed, Kezar (2014) emphasizes that having a sense of the historical, external, and organizational factors that affect reform processes is a key task for change agents, and Toma (2010) provides a succinct outline of specific areas in which universities can build their organizational capacity to better support reform initiatives. Further, initiatives may be more successful if change agents are aware of factors that influence university life more generally and that distinguish institutions of higher education from government agencies and private organizations. For example, universities stress the specialized knowledge of faculty; although administrators oversee budgets and infrastructure, faculty are considered autonomous. The reliance on disciplinary societies and the commitments that institutions make to individual faculty through tenure are other distinguishing features of higher education that should be considered as part of the context in reform processes.

We also modified aspects of the model to make it more general and inclusive of myriad reform efforts. For example, “disseminating curriculum and pedagogy” becomes more inclusive and generalizable as simply “disseminating.” Consider a new provost who brings a management model that was successful at her previous institution to her new institution, which uses a more traditional management structure. This dissemination does not directly affect pedagogy and curriculum but rather the administration of the institution. Similarly, we suggest that “developing reflective teachers” is more inclusive as simply “reflection.” Reflection also matters in higher education for staff involved in student affairs or advising, for instance. These actors do not directly affect curriculum and pedagogy, but their reflection on what they hear from students can improve their feedback and responsiveness to students.

Because local context is a crucial factor in any reform initiative, it is important to address why this research is relevant to other institutions of higher education. First, the analytical process of examining the implementation of an initiative is transferable and can be used to uncover leadership issues specific to reform efforts at other institutions. As a case in point, an RIU department chair stated, “Institutional change is critical and I think that we’ve had underlying problems that we didn’t even know about until we started this institutional change … I’m thrilled that I see us recognizing issues now that we just didn’t even know existed.” Second, the Henderson and Austin models were adequate for our work, but future work might evaluate our extended model and test whether it can apply to organizational change strategies more generally. Finally, the goal in any case study is not necessarily to generalize in a broad sense beyond the one case to a larger population but rather to understand the complexity of the case itself and identify ideas that are transferable to another setting (Anderson, 2010). Given the persistent national focus on STEM education reform, we anticipate that these results will be useful to research-intensive universities already engaged in reform efforts and those that will begin reforms in the future.

CONCLUSION

Typical reform efforts in undergraduate STEM education have focused on the classroom level, encouraging faculty to adopt evidence-based teaching practices by providing professional development opportunities, disseminating curricular innovations, and initiating new policies (Fairweather, 2008; Henderson et al., 2011). The research community in undergraduate STEM reform has, however, begun to recognize the myriad factors at play when engaging faculty, departments, and colleges in institutional reform (Henderson and Dancy, 2007; Brownell and Tanner, 2012; Kezar et al., 2015). This study of leadership in an undergraduate STEM reform initiative is situated in this niche and sheds light on how department chairs and administrators, especially those under the pressures of research-intensive institutions, can approach reform work. Although we focused on faculty in formal leadership positions, faculty without formal leadership positions can still be advocates for systemic change.

We have used a qualitative approach that acknowledges the deep integration of the institutional context and the object of study; indeed, context-specific research has been highlighted as a need in higher education (Menges, 2000). Because the particular characteristics, features, and idiosyncrasies of an institution must be integral to any reform effort, we have not attempted to write a prescriptive algorithm for others to follow. Rather, we offer insights from our own reform process that may serve as lessons for our faculty colleagues engaging in reform work.

ACKNOWLEDGMENTS

We thank James S. Fairweather for his mentorship and support; Charles Henderson, Andrea Beach, and Noah Finkelstein for granting permission to use their model; our anonymous colleagues for reviewing the paper; and Stephen R. Thomas for feedback on the visual model.