Characterizing Student Perceptions of and Buy-In toward Common Formative Assessment Techniques

Abstract

Formative assessments (FAs) can occur as preclass assignments, in-class activities, or postclass homework. FAs aim to promote student learning by accomplishing key objectives, including clarifying learning expectations, revealing student thinking to the instructor, providing feedback to the student that promotes learning, facilitating peer interactions, and activating student ownership of learning. While FAs have gained prominence within the education community, we have limited knowledge regarding student perceptions of these activities. We used a mixed-methods approach to determine whether students recognize and value the role of FAs in their learning and how students perceive course activities to align with five key FA objectives. To address these questions, we administered a midsemester survey in seven introductory biology course sections that were using multiple FA techniques. Overall, responses to both open-ended and closed-ended questions revealed that the majority of students held positive perceptions of FAs and perceived FAs to facilitate their learning in a variety of ways. Students consistently considered FA activities to have accomplished particular objectives, but there was greater variation among FAs in how students perceived the achievement of other objectives. We further discuss potential sources of student resistance and implications of these results for instructor practice.

INTRODUCTION

National reports calling for improvements to undergraduate science, technology, engineering, and mathematics (STEM) education have urged faculty to implement research-based instructional strategies (RBISs) in their courses (National Research Council, 1999, 2000), and nearly all the RBISs recommended by the Vision and Change report represented formative assessment (FA) techniques (American Association for the Advancement of Science, 2011). FAs have been broadly defined as assessments that are “intended to generate feedback on performance to improve and accelerate learning” (Sadler, 1998). Unlike summative assessments (e.g., exams, finals), FAs typically occur more frequently and involve lower stakes (Angelo and Cross, 1993; Handelsman et al., 2007). FAs are considered to be one of the most effective educational interventions (Black and Wiliam, 1998). When integrated throughout the learning cycle as preclass, in-class, or postclass activities, FAs provide structure to a course by giving students opportunities to demonstrate their knowledge and correct misunderstandings. This course structure has been associated with reduced failure rates and improved achievement for all students, with the largest gains for students from traditionally underrepresented groups (Freeman et al., 2011; Haak et al., 2011; Eddy and Hogan, 2014).

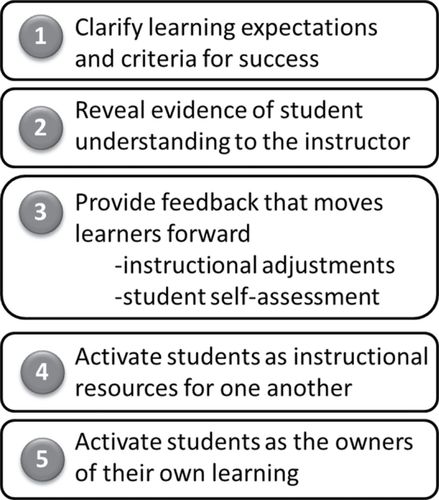

Through a literature review, Black and Wiliam (2009) identified five key ways that FAs support student learning (Figure 1). 1) FAs clarify learning intentions and criteria for success by helping students identify the material they must learn and glean clues about performance expectations (Wiggins and McTighe, 2005). 2) FAs help elicit evidence of student understanding for the instructor (Tanner and Allen, 2004). 3) FAs provide feedback that moves learners forward both by enabling instructors to modify their teaching to focus on challenging topics and by helping students to identify their own deficiencies and correct their misunderstandings (Nicol and Macfarlane-Dick, 2006). 4) FAs activate students as instructional resources for one another through peer discussion, which has the potential to help students improve their understandings (Smith et al., 2009; Tanner, 2009). 5) FAs activate students as owners of their own learning and help empower students to become self-regulated learners by providing tools for regular practice and self-reflection (Ertmer and Newby, 1996; Schraw et al., 2006).

FIGURE 1. The five objectives of FA. (Adapted from Black and Wiliam, 2009.)

While FAs have the potential to promote learning in STEM courses, many factors can influence their impact on student behaviors and outcomes. First, students vary in their individual learning orientations and study strategies (e.g., motivation, self-efficacy, metacognition, and resource management), and these characteristics correlate with assessment preferences (Birenbaum, 1997). In addition, student perceptions about learning environments and activities can affect how students approach learning and engage with course activities. For example, students with negative attitudes toward their learning environment or the appropriateness of course assessments favor surface approaches to learning, such as rote memorization, while positive course perceptions are associated with deeper approaches that emphasize conceptual understanding (Trigwell and Prosser, 1991; Lizzio et al., 2002; Struyven et al., 2005). Such deep approaches to learning also correlate with higher exam performance (Holschuh, 2000; Davidson, 2003; Elias, 2005). This prior work focusing on summative assessments suggests that student perceptions regarding the purpose and benefits of FAs could impact student interactions with FAs and the learning that results. If students understand and value how particular FA methods can facilitate their learning (i.e., if they “buy into” their use), then they may be more likely to put more effort into completing and using them in productive ways (Cavanagh et al., 2016). Conversely, if students view these methods as unhelpful or irrelevant to their learning, they may choose to engage with FAs in superficial ways that undermine learning (e.g., rushing through them or copying answers from the Internet).

Student perceptions of FA techniques can also influence instructors’ decisions to adopt and continue using these teaching methods. Instructors frequently cite student resistance as an important factor affecting their ability to implement transformed teaching practices (Ebert-May et al., 2011; Seidel and Tanner, 2013). Instructors fear that students will not complete preclass reading and other assignments, will refuse to participate during in-class activities, or will fail to work cooperatively in groups (Felder and Brent, 1996). Instructors also express concerns that students equate teaching with lecture and will therefore express dissatisfaction about student-centered approaches (Felder and Brent, 1996). In rare cases, students may exhibit extreme forms of resistance in response to reformed teaching, such as signing petitions and boycotting classes (Breslow, 2010). More commonly, faculty may experience resistance through declines in their teaching evaluation scores (e.g., Lake, 2001). Improved understanding about student attitudes toward FA methods and sources of student resistance could help address instructor concerns regarding the implementation of these techniques.

Studies examining course evaluations or other measures of overall course satisfaction after FA implementation have yielded mixed results. Some studies found positive student reactions (Huxham, 2005; Ernst and Colthorpe, 2007) or comparable satisfaction between courses using FA techniques and those using traditional lecture methods (Crouch and Mazur, 2001; Van Dijk and Jochems, 2002; Machemer and Crawford, 2007). Others determined that student course satisfaction declined after FA techniques were incorporated (Goodwin et al., 1991; Lake, 2001; Struyven et al., 2008). However, these studies using general course evaluation measures failed to link student attitudes to particular FA methods and neglected to probe student perceptions regarding the different dimensions in which FAs support learning.

Studies of student perceptions of particular FA methods have mainly focused on the use of clickers or peer instruction (a pedagogy associated with clickers; Mazur, 1996). Reviews of these methods found overall positive student attitudes about how they make class enjoyable and foster learning (Keough, 2012; Vickrey et al., 2015). Additionally, a survey of 384 instructors who use peer instruction revealed that the majority of faculty reported positive student reactions, some experienced mixed reviews, 5% experienced negative reactions, and 4% experienced initially negative responses that improved over time (Fagen, 2003). Only a few studies have examined undergraduate student perceptions of out-of-class assignments (e.g., preclass assignments and postclass homework). Two studies found that the majority of students perceived Just-in-Time Teaching (JiTT) assignments (Marrs and Novak, 2004) and homework assignments (Letterman, 2013) as useful for their learning. A different study found that preclass assignments in a flipped class were rated by students as less effective than postclass assignments in a nonflipped class (Jensen et al., 2015). Importantly, none of these studies on in-class or out-of-class FA methods addressed how student perceptions align with the five FA objectives identified by Black and Wiliam (2009). Whereas previous studies focused primarily on whether or not students viewed FAs positively, this study is the first to consider how FAs are being used as assessments from a student perspective.

In the present study, we sought to characterize student perceptions of FAs by directly surveying students about FAs that occur before, during, and after class. In particular, we aimed to understand why students think FAs are being used in a course and how students perceive FAs as influencing their own learning and the instructor’s teaching. We also wanted to determine the degree to which students perceived commonly used FA methods to have achieved the five FA objectives. In collecting this information, we further sought to gauge levels of student buy-in for different FA activities and to identify potential sources of student resistance.

METHODS

Study Context and Survey Administration

This study was conducted during the 2014–2015 academic year in seven sections of two sequential introductory biology courses that serve as the gateway for a diverse array of life sciences majors at the University of Nebraska–Lincoln (UNL). These courses were chosen for this study for several reasons. First, we wanted to focus on introductory courses due to their prioritization in national educational efforts and their role as entry points to STEM majors (Seymour, 2000). Second, these courses have the potential to establish course norms and shape student experiences in later courses. Finally, these courses had recently been revamped as part of a larger campus-wide movement to improve STEM education, and the instructors were eager to gain insights into how students were reacting to the pedagogical changes.

Nine instructors were involved in teaching these seven sections, including three sections that were team taught. All of the sections used three or four different types of FAs, including at least one in-class and one out-of-class method. We interviewed all instructors briefly at the beginning of the semester and more thoroughly at the end of the semester about how each FA was implemented in the course. Instructors were given no instructions by the researchers on which FAs to use or how to implement them. Most of the instructors had some level of educational training through participation in professional development workshops on teaching (e.g., Pfund et al., 2009).

Students enrolled in these course sections completed a midsemester survey that occurred roughly half to three-quarters of the way through the semester. This timing was chosen to give students sufficient time to experience how the FAs were being used and to avoid overlapping with end-of-term course evaluations. Students took the survey outside class time and received a small (<2%) amount of course credit (either required or extra credit) for survey completion. Deidentified survey responses were shared with the course instructors. We collected a total of 1123 student surveys from consenting students, representing a 76% participation rate. Participating students were 62% female and 38% male, with 40% first years, 36% sophomores, 17% juniors, 6% seniors, and 1% postbaccalaureate or graduate students.

FA Methods

This study examined a variety of FA methods that occurred before, during, or after class. Table 1 shows the number of sections that used each FA type and the total number of students who provided survey responses.

| FA timing | FA type | Abbreviation | Course sections | Student responses |
|---|---|---|---|---|
| Preclass | Just-in-Time Teaching assignments | JiTT | 2 | 247 |
| Preclass | Online textbook-associated program preclass assignments | OTP-pre | 4 | 470 |
| In-class | Clicker questions | CQ | 6 | 646 |
| In-class | In-class activities | ICA | 3 | 235 |
| Postclass | Online textbook-associated program postclass assignments | OTP-post | 3 | 256 |
| Postclass | Homework assignments or homework quizzes | HW/Q | 5 | 446 |

Preclass FAs were assignments that were completed outside class and were due before the class session in which the corresponding material would be covered. JiTT assignments were preclass exercises in which students answered three to four questions that were typically open ended and focused on a particular topic (Marrs and Novak, 2004). These assignments also included a question designed to allow students to communicate areas of confusion to the instructor (e.g., a “muddiest point” question; Angelo and Cross, 1993). Online textbook-program assignments that occurred before class (OTP-pre) involved students completing a predefined set of electronic learning activities, including video tutorials and closed-ended questions, related to the particular textbook chapter that would be covered during the following week.

In-class FAs were activities that occurred during face-to-face class meetings. Clicker questions (CQ) involved the use of electronic audience-response systems that enabled students to submit individual answers to closed-ended questions. Peer instruction, a pedagogy often used with clicker devices, involves posing a question that students answer individually, followed by peer discussion, a second vote, and a wrap-up instructor explanation (Mazur, 1996; Crouch and Mazur, 2001). Most instructors followed this sequence, while two skipped the individual vote before peer discussion. A second in-class FA involved some type of in-class activity (ICA) in which students worked in groups to complete a task or set of questions either electronically or on paper (e.g., worksheet).

Postclass FAs were assignments that were completed outside class and covered topics that had already been discussed during class. OTP-post assignments were very similar to OTP-pre assignments, except that they were due after the given material had been covered in class. Another type of postclass FA was homework assignments or online homework quizzes (HW/Q). The format of homework varied among sections, with some using only closed-ended questions, and others including a mixture of closed-ended and open-ended questions. Some instructors wrote their own questions, while others used a question bank.

Most instructors provided students with some degree of rationale for the different FA types, except OTP-post assignments (Table 2). For certain FA types (e.g., JiTT and clickers), instructors explicitly mentioned that the FA results provided information they could use to alter their teaching. For other FA types (e.g., OTP-pre assignments, in-class activities, and homework), the instructors focused on the benefits to students, such as to help students become familiar with concepts before class, learn from their peers, complete practice problems, or self-assess. Instructors varied in the depth and timing of the rationale they provided: some instructors mentioned rationale only briefly, while others had more extensive discussions, including presenting students with data supporting the benefits of that FA for learning. Rationale was often provided on the first day of class, and some instructors revisited this discussion later in the course.

| FA timing | FA type | Rationale provided to students | FA used to alter teaching |
|---|---|---|---|
| Preclass | JiTT | 2/2 – discussed benefits for students and the instructor. | 2/2 – devoted class time to discussing JiTT answers and addressing problem areas. |
| Preclass | OTP-pre | 4/4 – discussed benefits for students but not for the instructor. | 1/4 – used results to identify areas in which students were struggling or proficient and adjusted lectures accordingly. |
| In-class | CQ | 5/6 – discussed benefits for students; instructors in four of these sections also mentioned benefits for the instructor as part of the rationale. | 6/6 – gave immediate feedback based on student responses; instructors in three of these sections occasionally revisited topics in later lectures. |
| In-class | ICA | 2/3 – discussed benefits for students but not for the instructor. | 2/3 – gave immediate feedback based on responses; the instructor in one of these sections used ICAs to shape the content of lectures. |
| Postclass | OTP-post | 0/3 | 0/3 |
| Postclass | HW/Q | 4/5 – discussed benefits for students but not for the instructor. | 3/5 – occasionally revisited student problem areas. |

Instructors also varied in the extent to which they used the FA results to alter their teaching (Table 2). For in-class FAs, instructors typically made immediate adjustments on the basis of student understanding, though some occasionally revisited difficult topics in subsequent lectures. For out-of-class FAs, instructors varied in whether they used the FAs to shape their upcoming lectures. For both sections using JiTT, the instructors devoted class time to covering topics that the assignment revealed as problem areas for students, particularly from the “muddiest point” question. The other out-of-class FAs were used by some instructors to alter their teaching practices, but these changes were only made on an occasional basis (i.e., not after every assignment).

Survey Format

The survey consisted of individual blocks containing open-ended and closed-ended questions pertaining to each FA type used in a course. To minimize survey fatigue, each student was randomly given blocks pertaining to only two of the FAs used in that section. Open-ended questions were used to provide insights into students’ most salient thoughts about particular FA methods, while closed-ended items directly probed student perceptions about specific FA characteristics. Each block began with three open-ended questions addressing why students thought the FA was being used in the course, how it influenced their learning, and how it influenced the instructor’s teaching. Two closed-ended Likert items measured the degree to which students perceived the FA to benefit students or the instructor. Seven closed-ended items addressed student perceptions regarding FA alignment with each of the five FA objectives. Most of these items used a five-point Likert scale (strongly disagree, disagree, neither agree nor disagree, agree, strongly agree), while one item used a different scale to capture the frequency of peer discussion (never, rarely, sometimes, often, always). Only students who engaged in peer discussion of an FA at least rarely were subsequently asked whether peer discussions helped their learning. Finally, students were asked an open-ended question regarding how the FA could be changed to improve student learning. Each survey item was customized by including the name of the FA type. For example, in the item “[These FAs] help me identify what material I am expected to learn in this course,” the bracketed portion was replaced with the FA name (e.g., “Clicker questions” or “JiTT assignments”). Instructors were consulted to ensure that the FA names used in the survey would be familiar to the students. The question order within each block followed the order described in this section and presented in the Results section (see the Supplemental Material).
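The random assignment of survey blocks described above can be illustrated with a short sketch. This is not the survey platform's actual logic, which the text does not describe; the function name `assign_blocks`, the example list of FA types, and the student identifiers are hypothetical, and the only detail taken from the text is that each student received blocks for two of the FAs used in that section.

```python
import random


def assign_blocks(fa_types, n_blocks=2, rng=None):
    """Pick n_blocks distinct FA types at random for one student.

    fa_types: the FA types used in the student's course section.
    rng: optional random.Random instance, useful for reproducibility.
    """
    if rng is None:
        rng = random.Random()
    # random.sample draws without replacement, so a student never
    # receives the same FA block twice.
    return rng.sample(list(fa_types), n_blocks)


# Hypothetical section that used four FA types.
section_fas = ["JiTT", "CQ", "ICA", "HW/Q"]
rng = random.Random(0)  # fixed seed so the illustration is repeatable
assignments = {
    student_id: assign_blocks(section_fas, rng=rng)
    for student_id in range(5)  # hypothetical student identifiers
}
```

Sampling without replacement keeps the two blocks distinct, and drawing independently for each student spreads responses across all FA types used in a section.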

Data Analyses

We adopted a descriptive approach to data analysis that sought to characterize the range of different student responses across a broad sample of FA types and the level of alignment with FA objectives. The small number of courses using each FA type restricted our ability to make inferences regarding specific differences between FA types.

Responses to each of the four open-ended questions were coded using the following process. First, we read through a subset of student responses (at least 25% of total responses) from each course and generated a list of all the unique response types (i.e., initial coding). We then grouped these different responses into categories of similar responses (i.e., focused coding) and used this list to create a coding rubric (Saldaña, 2009). During the coding process, student responses were first separated into their distinct ideas, which were each coded separately. Therefore, each student response could receive more than one code, depending on how many separate ideas were present. All three authors were involved in developing and finalizing the coding rubrics. A separate coding rubric was created for each question, but some codes were present across multiple rubrics. Complete coding rubrics, including code definitions and example student responses, can be found in the Supplemental Material. Codes were also grouped according to overarching themes for each question (e.g., positive, negative).

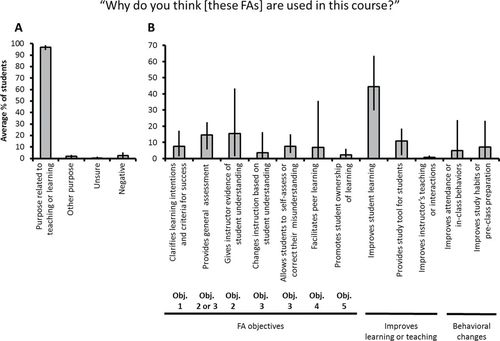

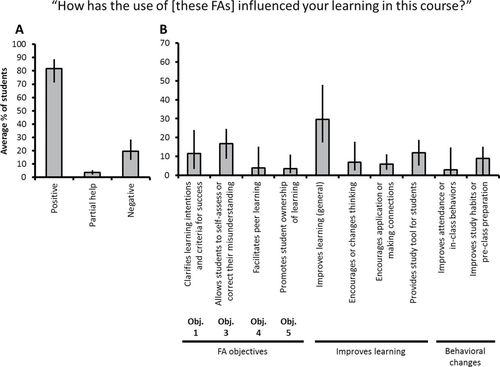

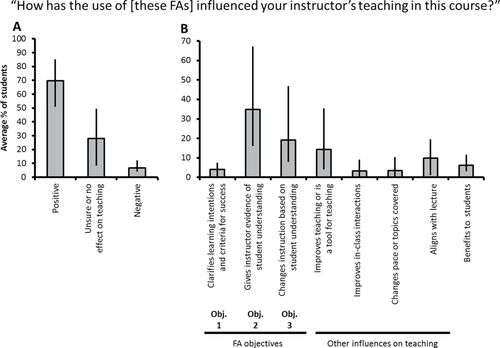

The coding process involved first establishing interrater reliability by having two authors (K.R.B. and T.L.B.) iteratively cocode small sets of responses. Disagreements were discussed and resolved between each set until an interrater reliability of kappa greater than 0.8 was achieved (Landis and Koch, 1977). Additional responses were then cocoded until 10% of total responses had been cocoded for each question, and kappa was verified to still be greater than 0.8. Finally, one author (K.R.B.) finished coding the remaining responses. For each of the four open-ended questions, the percentages of student responses including a given category were averaged across all course sections using each FA type. The graphs from open-ended questions show the average of these FA type averages, with error bars showing the range of FA type averages (Figures 2, 3, 4, and 7 later in this article). A small number (2%) of off-topic responses that did not address the questions were omitted from the graphs. In some cases, related codes were collapsed into aggregate codes to streamline data presentation.
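For readers unfamiliar with the kappa statistic, the following minimal sketch shows how Cohen's kappa can be computed for two raters. It is an illustration only: it assumes each coded idea receives exactly one code from each rater, which simplifies the multi-code scheme described above, and the function name and example codes are hypothetical rather than drawn from the study data.

```python
from collections import Counter


def cohens_kappa(codes_a, codes_b):
    """Cohen's kappa for two raters who each assign one code per item."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("Both raters must code the same nonempty set of items.")
    n = len(codes_a)
    # Observed agreement: fraction of items given the same code by both raters.
    observed = sum(a == b for a, b in zip(codes_a, codes_b)) / n
    # Chance agreement: based on how often each rater used each code.
    freq_a = Counter(codes_a)
    freq_b = Counter(codes_b)
    expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical code throughout
    return (observed - expected) / (1 - expected)


# Hypothetical codes for ten idea units; the raters disagree on the last one.
rater_a = ["pos", "pos", "neg", "pos", "neg", "neg", "pos", "pos", "neg", "pos"]
rater_b = ["pos", "pos", "neg", "pos", "neg", "neg", "pos", "pos", "neg", "neg"]
kappa = cohens_kappa(rater_a, rater_b)
```

In this example the raters agree on 9 of 10 items (observed agreement 0.9) and chance agreement is 0.5, so kappa is approximately 0.8.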

FIGURE 2. Open-ended student responses about why FAs are used in a course. The x-axis shows response categories for the open-ended question shown in the figure title. (A) Frequencies of overall response themes and (B) the response categories comprising the “purpose related to teaching or learning” theme. Bars show the average percent of students whose response included each theme or category. Error bars represent the range of averages for each FA type.

For closed-ended items, we calculated the percent of students choosing agree/strongly agree or disagree/strongly disagree for each course section and averaged these rates across all course sections using each FA type. We used the following adjectives to describe average agreement rates (i.e., students selecting agree/strongly agree) on closed-ended items: 40–49% = low, 50–59% = fair, 60–69% = moderate, 70–79% = high, ≥ 80% = very high.
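The calculation described above can be sketched as follows. This is an illustrative reading, not the authors' analysis script: the function names and the example responses are hypothetical, and treating each adjective band as a half-open interval (e.g., 50 up to but not including 60) is an assumption made so that non-integer averages map to a single adjective.

```python
from statistics import mean

AGREE = {"agree", "strongly agree"}


def agreement_rate(responses):
    """Percent of one section's responses that are agree or strongly agree."""
    return 100 * sum(r in AGREE for r in responses) / len(responses)


def average_agreement(sections):
    """Average the per-section agreement rates for one FA type."""
    return mean(agreement_rate(section) for section in sections)


def describe(rate):
    """Map an average agreement rate (percent) to the adjective used in the text."""
    if rate >= 80:
        return "very high"
    if rate >= 70:
        return "high"
    if rate >= 60:
        return "moderate"
    if rate >= 50:
        return "fair"
    if rate >= 40:
        return "low"
    return None  # no adjective is defined in the text below 40%


# Hypothetical responses from two sections using the same FA type.
sections = [
    ["agree", "strongly agree", "disagree", "neither agree nor disagree"],
    ["agree", "agree", "agree", "disagree"],
]
avg = average_agreement(sections)  # section rates of 50% and 75%
label = describe(avg)
```

Averaging section-level rates, rather than pooling all students, gives each course section equal weight regardless of enrollment.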

RESULTS

Student Perceptions of the Purposes of FAs

The first open-ended question addressed student perceptions about the purposes of FAs. For all six FA types, nearly all students (with averages for the different FA types ranging from 94 to 99%) provided answers related to teaching or learning, while few students (0–3%) listed a purpose that was unrelated to teaching or learning (e.g., to boost grades; Figure 2A). Many of the purposes related to teaching or learning were aligned with the five FA objectives (see Figure 2B for average category frequencies). For example, one category of responses included statements that FAs were used to clarify learning intentions and criteria for success (objective [obj.] 1; e.g., to help students see what they were expected to know or to give examples of what test questions would look like). In addition, some students indicated that FAs were used to assess student understanding. While some of these comments did not specify whether the assessment information was intended for the instructor or the student, other comments indicated that the assessment was used specifically by the instructor to gather evidence of student understanding (obj. 2), that this evidence led the instructor to adjust subsequent teaching practices (obj. 3; e.g., by spending more time on a topic that students had failed to master), or that the FAs provided feedback that enabled students to self-assess or correct their misunderstandings (obj. 3). Additional student responses aligned with the FA objectives included comments about how the FA facilitated peer learning (obj. 4) and promoted student ownership of learning (obj. 5; e.g., helped students teach themselves or construct answers in their own words). Other student responses fell into an aggregated category capturing additional ways that FAs improved student learning, such as by encouraging thinking or knowledge application. Students also reported pragmatic purposes for FAs, including that the FAs provided study tools, improved in-class behaviors (e.g., motivated class attendance or helped students pay attention), and supported valuable out-of-class study habits (e.g., encouraged preclass preparation or prevented procrastination and cramming).

Very few student responses to this question were categorized as negative (1–5%; Figure 2A). These comments included general statements of dissatisfaction and specific complaints about how the FA was implemented. Collectively, these data indicate that students perceived a variety of theory-based and practical purposes behind FA implementation.

Student Perceptions of the Influence of FAs on Learning in a Given Course

The second open-ended question focused on how students perceived FAs to positively or negatively influence their learning in a given course. For all six FA types, a majority of students (71–89%) indicated at least one positive way in which FAs facilitated their learning (Figure 3A). These positive student responses encompassed many different ways that the FAs improved their learning, again including responses aligned to the five FA objectives (see Figure 3B for average category frequencies). Namely, these responses indicated that the FAs helped students understand learning intentions and criteria for success (obj. 1), provided feedback for students by allowing them to self-assess or correct their misunderstandings (obj. 3), facilitated peer discussions to improve learning (obj. 4), and helped students take ownership of their learning (obj. 5). Students also specified additional ways that FAs improved their learning. While some provided general statements (e.g., helped improve understanding or provided information), others gave more specific ways that FAs improved their learning, such as by encouraging critical thinking, changing how students thought about the material, and helping students apply concepts or understand connections among concepts. Finally, similar to the previous open-ended question about the purpose of FAs, students also mentioned that the FAs provided a study tool or motivated behavioral changes in students (e.g., improved attendance, in-class behaviors, or out-of-class study habits).

FIGURE 3. Open-ended student responses about how FAs influence their learning. The x-axis shows response categories for the open-ended question shown in the figure title. (A) Frequencies of overall themes of responses and (B) the response categories comprising the “positive” theme. Bars show the average percent of students whose response included each theme or category. Error bars represent the range of averages for each FA type.

While the majority of students cited positive ways that FAs influenced their learning, some indicated that the FA was only partially helpful (2–5%) or gave negative comments (13–29%; Figure 3A). Most negative comments (8–18% of all students) were of a general nature (e.g., unhelpful to student learning) or were not directly related to learning (e.g., detrimental to grades). Some students (3–9%) gave specific reasons why FAs were unhelpful to learning, including complaints about question content, group members, feedback mechanisms, FA timing, FA frequency, or the amount of time taken away from lecturing. Finally, a small number of students (< 2% for all FA types) said that the FA actually hindered their learning (e.g., confused them or caused stress that prevented them from focusing on learning). On the whole, while the majority of students perceived positive ways that the FAs influenced their learning, a small subset of students expressed negative perceptions.

Student Perceptions of the Influence of FAs on Teaching in a Given Course

The third open-ended question asked students about how the use of an FA influenced the instructor’s teaching in a given course. Unlike the responses to the previous two open-ended questions, the average percentage of students responding with positive comments varied considerably among FA types (Figure 4A). For JiTT, clicker questions, in-class activities, and homework, the majority of students (70–85%) perceived positive influences on the instructor’s teaching; however, fewer students gave positive comments for OTP-pre and OTP-post assignments (56 and 51%, respectively). Similar to the questions about FA purpose and influence on learning, some positive student comments aligned with the five FA objectives (see Figure 4B for average category frequencies). These included statements indicating that the instructor used the FA to clarify learning intentions and criteria for success (obj. 1), that the FA provided the instructor with evidence of student understanding (obj. 2), and that the instructor used this information to alter instructional practices (obj. 3). Other positive comments included general improvements to instruction (e.g., improved instructor’s explanations or served as an instructional tool), improvements to in-class interactions, changes in the topics covered or pace of lecture based on reasons other than student understanding (e.g., the instructor could rely on the FA to cover certain material), and alignment between the instructor’s lecture and the FA (e.g., topics were similar between lecture and the FA or the instructor incorporated discussion of the out-of-class FAs into lecture). Finally, some positive comments did not focus on the instructor’s teaching but instead indicated ways in which the FA benefited students.

FIGURE 4. Open-ended student responses about how FAs influence teaching. The x-axis shows response categories for the open-ended question shown in the figure title. (A) Frequencies of overall themes of responses and (B) the response categories comprising the “positive” theme. Bars show the average percent of students whose response included each theme or category. Error bars represent the range of averages for each FA type.

The remaining student responses were categorized under neutral or negative themes (Figure 4A). For OTP-pre and OTP-post assignments, many students (44 and 50%, respectively) indicated that they were either unsure or that the FA did not influence the instructor’s teaching, while this type of response was mentioned less frequently for the other FA types (8–32%). Negative comments were relatively uncommon for all FA types (4–12%). These negative comments included complaints that the FA hindered instruction, because it took time away from lecturing (0–6% of all students), complaints that the instructor failed to modify instruction on the basis of FA results (<1–4%), and other complaints about the FA or instructor implementation (3–5%; e.g., FA grading, FA completion time, clarity of instructor explanations). Taken together, the responses to this question indicate that student perceptions about how FAs influenced an instructor’s teaching practices varied among FA types, with a substantial number expressing uncertainty for certain activities.

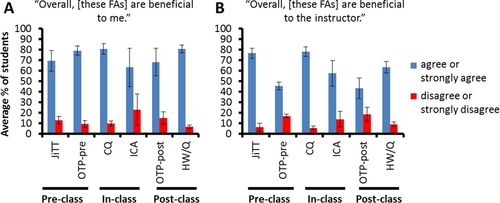

Student Perceptions of Overall Benefit of FA Methods.

To address the overall perceived benefits of FA methods, students were asked two separate closed-ended questions on whether a particular FA was beneficial to them or to the instructor. While the perceived benefit could have been interpreted by students in a variety of different ways (e.g., beneficial for learning, grades, or career), these questions were intended to capture an overall sense of perceived FA value without making assumptions regarding the value system that students use to judge course activities. On average, a high to very high percentage of students agreed that preclass FAs, clicker questions, and homework assignments were beneficial to them (70–81%), and a moderate percentage of students agreed that in-class activities and OTP-post assignments were beneficial (63–68%; Figure 5A). Student perceptions about the benefit of FAs to the instructor varied more widely among FA types. Agreement rates were high for JiTT and clicker questions (77–78%), moderate for homework (63%), fair for in-class activities (58%), and low for OTP-pre and OTP-post assignments (43–46%; Figure 5B).

FIGURE 5. Student perceptions of the overall benefit of six different FA types. (A) Responses to the item about the perceived benefit to the student and (B) responses to the item about the perceived benefit to the instructor. Average rates of agreement and disagreement across each section using that FA type are shown as blue or red bars, respectively. The neutral response (“neither agree nor disagree”) is omitted. Error bars represent SEs.

In addition to variation among FA types, there was also variation among instructors using the same FA type, reflected in the SE bars (Figure 5). For example, among the six instructors using clicker questions, the agreement rates ranged from 64 to 94% for the question about overall benefit to the student and from 67 to 95% for the question about overall benefit to the instructor.
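As a minimal sketch of how a section-averaged agreement rate and its SE could be computed, the following assumes that the SE is the sample standard deviation of the section-level rates divided by the square root of the number of sections; the text does not state the exact formula used. The function name `mean_and_se` and the example rates are hypothetical and are not data from this study.

```python
from math import sqrt
from statistics import mean, stdev


def mean_and_se(section_rates):
    """Return (mean, standard error) for section-level agreement rates in percent.

    Assumption: SE = sample SD of the section rates / sqrt(number of sections).
    """
    n = len(section_rates)
    if n < 2:
        raise ValueError("need at least two sections to estimate an SE")
    return mean(section_rates), stdev(section_rates) / sqrt(n)


# Hypothetical agreement rates (%) for four sections; NOT data from this study.
example_rates = [60.0, 70.0, 80.0, 90.0]
avg, se = mean_and_se(example_rates)
print(f"{avg:.1f}% ± {se:.1f}")
```

Under this assumption, a wider spread of section-level rates for a given FA type yields a larger SE bar, which is how variation among instructors would appear in a figure of this kind.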

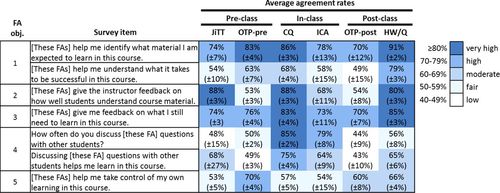

Student Perceptions of FA Alignment with the Five FA Objectives.

While the open-ended questions enabled students to articulate their most prominent conceptions, they did not directly probe student perceptions regarding the alignment of each FA with the five FA objectives. Thus, the survey included seven closed-ended items intended to address student perceptions about whether the FA techniques achieved each of the five FA objectives (Figure 6). Agreement rates for the item about learning intentions (obj. 1) were high to very high across FA types (70–91%). Agreement with the related item about criteria for success (obj. 1) was high for homework (79%) but low to moderate for the other FA types (49–68%). On the third item, which addressed providing feedback to the instructor (obj. 2), there was great variation in agreement rates, with very high rates for JiTT, clicker questions, and homework (80–88%); moderate rates for in-class activities (68%); and fair rates for OTP-pre and OTP-post assignments (53–54%). Conversely, for all FA types, students reported high to very high agreement with the item about the FA providing feedback to students (obj. 3; 70–85%). In response to the item about frequency of peer discussion (obj. 4), a high to very high percent of students indicated that they discussed in-class FAs at least sometimes (79–85%), while only a low to fair percent of students reported discussing preclass or postclass assignments (44–56%). On the related item about helpfulness of peer discussion (obj. 4), posed only to students who reported discussing FA activities, a moderate to high percent (64–75%) viewed discussion of JiTT, clicker questions, in-class activities, and homework as helpful, while a low percent found discussion of OTP-pre and OTP-post assignments helpful (43–49%). The final item, regarding the degree to which an FA helped students take control of their learning (obj. 5), showed fair to moderate agreement across most FA types (53–66%), with OTP-pre assignments receiving high agreement (70%). 
Thus, while many students perceived FA activities to be meeting the key objectives, there was variation in how well the different objectives were met by the various FAs. Furthermore, as with the questions about overall benefit, agreement rates varied among instructors using the same FA type, which is reflected in the SEs (Figure 6).

FIGURE 6. Heat map showing student perceptions of how various FA types are aligned with the five FA objectives. The first column indicates the FA objective to which each survey item aligned. Percentages represent the average percent of students (± SE) across each section who agreed with the survey item. Darker boxes indicate higher agreement rates (see key). For most items, the agreement rates shown reflect combined “agree” and “strongly agree” rates, but for the item about how often students discuss FA questions, responses for “sometimes,” “often,” and “always” were combined. Students only answered the item about the helpfulness of discussion if they indicated that they discuss FA questions at least rarely for the previous question.

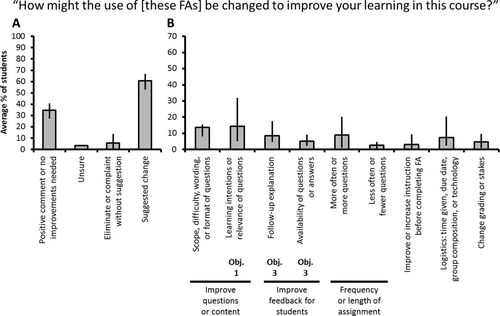

Student Perceptions about How to Improve FAs.

To provide a better understanding of potential sources of resistance, students were asked an open-ended question on how the FAs might be changed to improve learning. Some students gave positive comments or stated that no improvements were needed (27–41%; Figure 7A). A small number of students expressed uncertainty (<4% for all FA types), while some indicated that the FA should be eliminated or complained without offering a suggestion (1–14%). Finally, most students suggested at least one way to change FAs (53–67%).

FIGURE 7. Open-ended student responses about how FAs could be improved. The x-axis shows all response categories for the open-ended question shown in the figure title. (A) Frequencies of overall themes of responses and (B) the response categories comprising the “suggested change” theme. Bars show the average percent of students whose response included each theme or category. Error bars represent the range of averages for each FA type.

Suggested improvements spanned a broad range of different categories (see Figure 7B for average category frequencies). Some suggestions involved improvements to FA content, including the scope, difficulty, wording, or format of questions, while other improvements related to the degree to which the questions aligned with course expectations (obj. 1; e.g., questions were unrelated to material covered in class or exam questions). Students also mentioned two types of modifications that could improve FA-related feedback to students (obj. 3). First, students stated that better follow-up explanations were needed to help students understand why answers were right or wrong (i.e., either from instructor explanation, through rubrics, or via other instructional materials). Second, students wanted increased access to FA questions and answers (e.g., posting in-class questions online after class or making out-of-class questions and answers viewable after completion). Other suggested changes included adjustments to the frequency or length of FA assignments, with more students recommending an increased frequency or number of questions, especially for JiTT and clicker questions. Another type of suggested change was to increase the amount or quality of instruction before FA completion. Finally, some suggested changes related to logistical issues (e.g., completion time, due date, composition of groups, technological issues) or grading issues (most of which focused on reducing the stakes or strictness of FA grading). Overall, while students generally perceived the value of FA activities, many students identified mechanisms for improvement, some of which are consistent with the ways that FAs are intended to support learning.

Sources of Student Resistance

To better understand student perspectives, we identified a small subset of putative “resisters” for each FA type, defined as students who disagreed or strongly disagreed that the FA benefited them (Figure 5A, red bars). We then analyzed the frequencies of comments these students made in response to the open-ended question regarding how to improve the FAs. Some resisters either stated that the FA should be eliminated or gave a complaint without an additional suggestion (5–45% of resisters), while most resisters recommended a specific change (70–81% of resisters; Supplemental Figure S1A). The most common suggested improvements made by resisters varied among FA types (Supplemental Figure S1B). For JiTT, the most common suggestions of resisters related to the scope, difficulty, wording, or format of the questions or content (23%); the need for better follow-up explanation (17%); or logistical timing issues (17%). Resisters of OTP-pre assignments most commonly called for improving how FA questions related to course expectations (30%) or indicated that they needed more instruction before completing these assignments (19%). The most common resister suggestion for clicker questions related to grading (38%), usually advocating for fewer total points or points awarded based on participation rather than correctness. For in-class activities, the most common suggestion made by resisters pertained to activity logistics (41%), such as completion time or group composition. With respect to OTP-post assignments, resisters largely suggested that the questions should better clarify learning intentions or relate to course content (50%). Finally, resisters of homework often made suggestions related to the availability of questions or answers (18%) or improvements to the scope, difficulty, wording, or format of questions (18%).
While overall resistance toward FAs was relatively low, these responses suggest that resistant students often have specific criticisms for certain FA activities.

DISCUSSION

In this study, we used a descriptive, mixed-methods approach in several sections of introductory biology to gain insights into how students viewed commonly used FA techniques. This research was designed to capitalize on the strengths of different question formats for addressing our overarching research questions. Open-ended questions were used to capture salient ideas and to enable students to provide a level of breadth and detail in their responses. However, these open-ended questions had a limited ability to diagnose or quantify student perceptions regarding the overall benefit of FA activities or the alignment of FA techniques with specific FA objectives, so we also asked closed-ended questions targeting these specific points. These complementary approaches were intended to capture a broad picture of student perceptions regarding the purpose and use of different FAs.

Open-ended student responses revealed many important insights into student perceptions of FAs. First, the vast majority of the students recognized that the FAs served important purposes associated with teaching and learning, suggesting that students are able to recognize how FAs are intended to function (Figure 2A). Second, the breadth of student responses across open-ended questions related to FA purposes and influences indicated that student perceptions were highly diverse (Figures 2–4). This diversity of student responses suggests that student perceptions include a wide range of ideas that cannot be reduced to a singular, consensus opinion. Thus, instructors and other stakeholders should resist oversimplifying student perceptions or applying uniform assumptions across different students. Third, students provided a variety of responses aligned with the five FA objectives, supporting the idea that these objectives encompass many of the ways in which students view FAs to be operating. Finally, students mentioned other meaningful perceptions that provide additional insights into how students view FAs as supportive of learning. In particular, students cited FAs as promoting certain cognitive processes, such as applying concepts or thinking differently about course topics. Students also recognized that FAs can be used to motivate students to attend and participate during class and to help students develop productive study habits. Thus, while many student perceptions were aligned to the Black and Wiliam (2009) framework, students also saw additional purposes and influences of FAs within the course context.

As a complement to open-ended questions, we used closed-ended questions to gauge the extent to which student perceptions aligned with the five FA objectives (Figure 6). Students showed consistent agreement for the objectives of clarifying learning intentions (obj. 1) and providing feedback that moves learners forward (obj. 3). However, alignment with other objectives was more varied among the different FA types. In particular, OTP assignments were perceived by fewer students as providing feedback for the instructor, suggesting that these assignments were not meeting this important FA objective. In addition, all out-of-class assignments promoted less peer discussion than in-class assignments. Finally, agreement with the item addressing student ownership of learning was modest for most FA types. These results highlight important opportunities for instructors to optimize their use of FAs by working to achieve the different FA objectives. Instructors can consider using information from FAs to shape their instruction (Angelo and Cross, 1993; Keeley, 2015). Because discussing class material with others outside class is associated with higher academic success, instructors might also find ways to promote peer interactions for out-of-class assignments (Benford and Gess-Newsome, 2006). Finally, instructors can help students realize how FAs provide a means to take ownership of their course success by having students consider ways in which FAs support learning and achievement (Nicol and Macfarlane-Dick, 2006).

While all of the FAs studied have the potential to achieve each of the five FA objectives, the variation seen in student responses indicates that activity characteristics and implementation decisions likely affected student perceptions (Figures 5 and 6). Variation across the FA types implies that certain FAs have particular affordances or limitations that can be recognized by students, even when implemented by multiple instructors. Variation among instructors using the same FA type suggests that course-level instructional decisions can also influence student perceptions. These findings agree with previous results showing that instructors implement activities in different ways and that these differences can establish course norms that affect student behaviors and engagement with instructional activities (Turpen and Finkelstein, 2009; Dancy and Henderson, 2010). Furthermore, student perceptions are also likely influenced by activity framing and the extent to which an instructor explained why and how particular activities were being used (Seidel and Tanner, 2013). Understanding the complex relationships between FA implementation and student perceptions remains an important area for investigation. By uncovering the different dimensions in which students consider FAs to influence teaching and learning, our current work lays a foundation for future studies into how specific implementation decisions affect student perceptions.

Building on our mixed-methods approach, we also sought to look across different questions to synthesize a broader understanding of student perceptions of FA activities. Before this study, there had been little research addressing student perceptions of several of these methods. Overall, student responses to both open-ended and closed-ended questions indicated that student perceptions of the various FA types were largely positive and that resistance toward their use was low. Students largely agreed with the closed-ended question regarding whether the FA benefited the student (Figure 5A), and many students perceived that FAs helped them identify what they were expected to learn and gave them feedback on what they still needed to learn (Figure 6). Similarly, in response to the open-ended question about how FAs influence learning in the course, the majority of students indicated a positive way that the FAs have influenced their learning and identified specific mechanisms for how FAs facilitate their learning (Figure 3). This level of student buy-in agrees with other work reporting positive student perceptions of in-class FAs (Keough, 2012; Vickrey et al., 2015) and addresses gaps in the literature about perceptions of out-of-class FAs.

Another overarching trend pertained specifically to how students perceived the FAs to influence instructor teaching practices. To some degree, students were not well positioned to gauge how the instructor used FA results, because the instructor could have made these adjustments outside class time. This limitation was potentially reflected in students being unsure or reporting no effect of the FA on the instructor’s teaching (Figure 4A). However, these responses were largely driven by the OTP activities, which instructors reported using only minimally to alter their teaching (Table 2). Conversely, positive student perceptions for this same question were particularly pronounced for JiTT and clickers, which instructors reported explicitly using to make instructional changes (Table 2). These response patterns were also reflected in closed-ended questions, in response to which students reported the OTP assignments as being less beneficial for the instructor (Figure 5B) and providing less feedback to the instructor on how well students understand course materials (Figure 6), while agreement rates for JiTT and clickers were considerably higher for these same questions. These results suggest that, while the way that the instructor uses FA results may be beyond normal student purview, FA follow-up and messaging can lead students to perceive that FA-related information is being used to make instructional adjustments. Thus, in addition to using FA results to alter their teaching practices, instructors may also want to consider how being explicit about their use of FA results shapes student thinking regarding the purposes and functions of an FA activity.

The positive perceptions revealed by this study are important, because they suggest that FAs have the potential for high student buy-in. Indeed, few students expressed complaints related to factors that have been previously cited to cause resistance toward active-learning techniques. For example, very few students expressed that they would learn better if the instructor just lectured. This result contrasts with earlier findings of such opinions among students (Qualters, 2001; Fox-Cardamone and Rue, 2003; Yadav et al., 2011) and with fears expressed by instructors when considering adopting reformed teaching practices (Felder and Brent, 1996). Instructors may also have concerns that students will reject group work and refuse to complete preclass assignments (Felder and Brent, 1996). However, few students complained about working with peers or about the lack of instruction before preclass assignments. Across all FA types, students were more likely to offer tractable changes than to call for eliminating an FA. These changes included improving alignment with the five FA objectives, particularly with respect to writing questions that better reflect the instructor’s learning intentions (obj. 1) and improving feedback mechanisms for students (obj. 3; e.g., by providing follow-up explanations or access to questions and answers). Other suggestions included general improvements to FA questions (e.g., scope, difficulty, wording, or format) and logistical issues (e.g., timing, group construction, or technology issues). Overall, our data suggest that the students were open to the use of FAs and would rather see them improved than removed.

Faculty perceptions of student resistance may be related to a tendency to focus on the loudest negative voices, especially if satisfied students are less likely than resisters to make their opinions readily known. However, when students are polled more widely, these negative voices may prove to be in the minority. This underscores the importance of maintaining communication between students and instructors. Formal or informal surveys of the entire class can help gauge the prevalence of student resistance (Seidel and Tanner, 2013). These surveys also provide an opportunity for instructors to communicate the purposes of FAs and encourage student metacognition (Tanner, 2012) while gaining valuable feedback from students on whether FAs are achieving certain objectives. Furthermore, by addressing student responses, instructors can potentially help increase student buy-in and lead students to use FAs in a manner that better supports learning (Goodwin et al., 1991; Keeney-Kennicutt et al., 2008).

While student resistance toward FAs may not have been widespread, it remains important to understand the most negative student voices. To gain insight into potential sources of student resistance, we specifically examined a group of resisters who disagreed that the FA was beneficial to them. For many FA types, the most common complaints among resisters were related to the FA questions or content. These responses collectively suggest that instructors could minimize resistance by ensuring close alignment in the scope and difficulty of course learning goals, FA activities, lecture content, and exam questions (Wiggins and McTighe, 2005). For in-class FAs, other common issues cited by resisters pertained to grading or logistics (e.g., how groups are constructed or having enough time to complete activities). Other work has shown that student perceptions of unfairness in grading procedures and other course policies are strongly associated with resistance (Chory-Assad, 2002; Chory-Assad and Paulsel, 2004). Thus, an instructor may be able to address particular types of resistance by tuning the scoring and implementation of an activity so that these aspects do not become barriers to student engagement, while maintaining adequate incentives and desired activity dynamics.

Overall, our data provide evidence that students in introductory biology courses can buy into a wide variety of FA types. Previous research supports the notion that such positive student perceptions can lead students to engage in deeper rather than surface approaches to learning (Trigwell and Prosser, 1991; Lizzio et al., 2002; Struyven et al., 2005). However, the present study cannot draw any conclusions regarding the learning gains resulting from the particular FA activities. These perceptions also reflect a particular instructor sample and local student demographics, and it is possible that a random sample of biology courses from across the country would yield different results. Furthermore, we are limited in our ability to determine the extent to which FA perceptions were formed by students on their own or were influenced by how the instructor framed the activity (Seidel and Tanner, 2013). Finally, the questions used here were designed to provide an initial portrait of student perceptions. While certain patterns appeared consistently across different questions, further efforts are needed to understand the extent to which these questions capture student perceptions and represent the dimensions proposed by Black and Wiliam’s (2009) framework. Despite these limitations, this study provides specific recommendations that instructors can use to inform FA implementation and create environments conducive to student learning. This study also provides one of the largest and most comprehensive data sets that leaders of professional development workshops can cite to ease instructor fears, help them anticipate potential sources of student resistance, and encourage them to recognize students as important partners in educational transformation.

FOOTNOTES

1 One instructor contributed to two of the team-taught sections. For one of these sections, this instructor had a minor role before survey administration, while in the other section this instructor had a predominant role before survey administration. For this reason, these were treated as separate sections. For the third team-taught section, two different instructors alternated across consecutive weeks.

ACKNOWLEDGMENTS

This material is based on work supported by the National Science Foundation WIDER Award (DUE-1347814). This work was completed in conjunction with broader efforts by the UNL ARISE project team, which included Lance Pérez, Ruth Heaton, Leilani Arthurs, Doug Golick, Kevin Lee, Marilyne Stains, Matt Patton, Trisha Vickrey, Hee-Jeong Kim, Ruth Lionberger, Jeremy van Hof, Beverly Russell, Sydney Brown, Tareq Daher, Eyde Olson, Brian Wilson, Jim Hammerman, and Amy Spiegel. We are grateful to Mary Durham, Joanna Hubbard, Marilyne Stains, and the UNL Discipline-Based Education Research community for critical research discussions and feedback on the manuscript. We also thank the instructors and students who participated in the study. This research was classified as exempt from institutional review board review (protocol 14314).