Improvements from a Flipped Classroom May Simply Be the Fruits of Active Learning

Abstract

The “flipped classroom” is a learning model in which content attainment is shifted forward to outside of class, then followed by instructor-facilitated concept application activities in class. Current studies on the flipped model are limited. Our goal was to provide quantitative and controlled data about the effectiveness of this model. Using a quasi-experimental design, we compared an active nonflipped classroom with an active flipped classroom, both using the 5-E learning cycle, in an effort to vary only the role of the instructor and control for as many of the other potentially influential variables as possible. Results showed that both low-level and deep conceptual learning were equivalent between the conditions. Attitudinal data revealed equal student satisfaction with the course. Interestingly, both treatments ranked their contact time with the instructor as more influential to their learning than what they did at home. We conclude that the flipped classroom does not result in higher learning gains or better attitudes compared with the nonflipped classroom when both utilize an active-learning, constructivist approach and propose that learning gains in either condition are most likely a result of the active-learning style of instruction rather than the order in which the instructor participated in the learning process.

INTRODUCTION

Technological advances have impacted almost every facet of modern culture; education is no different. As new technologies become available, they are often embraced in educational innovation in an attempt to enhance traditional instruction. The “flipped classroom” is one of the most recently emerged and popular technology-infused learning models. This is a learning model in which content attainment is shifted forward to outside of class in an online format and then followed by teacher-facilitated concept application activities in class. This model has gained such popularity across the country that there is now a Flipped Learning Network with more than 12,000 member educators that supports educators wanting to implement a flipped strategy in their classrooms. It is especially popular among K–12 educators, 40% of whom reported a desire to try the flipped model this coming academic year in the Speak Up online survey (Project Tomorrow, 2013). Even in higher education, the flipped classroom is gaining significant ground, being implemented on college campuses across the country.

Hamdan and others offer a definition: “In the Flipped Learning model, teachers shift direct learning out of the large group learning space and move it into the individual learning space, with the help of one of several technologies” (Hamdan et al., 2013, p. 4). Many researchers have put forth variations on the definition of “flipped,” but the main idea is to shift content attainment to before class through instructional videos, recorded lectures, and other remotely accessed instructional materials. Instructors then spend in-class time applying the material through complex problem solving, deeper conceptual coverage, and peer interaction (Strayer, 2012; Tucker, 2012; Gajjar, 2013; Sarawagi, 2013). Sarawagi (2013) suggests that the model is defined by facilitating low-level learning (terms, definitions, and basic content) outside class and high-level (application-based) learning within class.

According to the constructivist, inquiry-based learning cycle model (Heiss et al., 1950; Bybee, 1993; Lawson, 2002), teaching consists of two phases: a phase in which students are gaining conceptual understanding (hereafter referred to as the content attainment phase) and a phase in which students learn to apply and/or evaluate those concepts in novel situations in order to broaden their conceptual understanding beyond the context in which they learned it (hereafter referred to as the concept application phase). In a traditional teaching model, the instructor facilitates content attainment through various means in a classroom setting. Students are then given the responsibility of applying the concepts, generally in the form of homework assignments. In a flipped model, the roles are reversed, with students being responsible for attaining the content before coming to class, at which time the instructor facilitates the application process. It appears that the main difference between these models is the role of the instructor: to facilitate content attainment or concept application. Does it matter where you place instructor and student responsibilities?

Owing to the relatively recent emergence of this model, no research has yet determined the differential effect of instructor facilitation in the two phases of learning. Constructivist theory would suggest that instructor facilitation is equally important in both phases. Vygotsky also put forth the idea that an instructor (or a more capable peer) can provide scaffolding by which students can perform above their current level of development, thus facilitating their learning (Vygotsky, 1978). Research has also examined the complete presence or absence of an instructor by comparing entirely online courses with face-to-face courses. Results are mixed and confounded by the many different variables involved, that is, active versus passive approaches, inclusion of blended models, and differences in instructional material and learning time (see Means et al., 2010, for a meta-analysis). From this, it seems that an instructor can play an important role; however, this literature does not establish a theoretical rationale for the differential importance of the instructor in each phase of learning.

Studies testing the effectiveness of the flipped model currently consist of either case studies, in which practitioners describe the implementation of a flipped model in their own classroom and report primarily affective data (e.g., Lage et al., 2000; Bergstrom, 2009; Fulton, 2012; Johnson, 2012), or comparison studies, in which the flipped model is compared directly with traditional didactic methods (e.g., Strayer, 2012; Tucker, 2012; Tune et al., 2013). Case studies are informative but may offer limited value, in that they are often not generalizable, they often do not target any causal explanation, and they are seldom compared with a control. Comparative studies can offer more generalizable insight; researchers are finding positive trends in a flipped environment over a traditional environment (Bergstrom, 2011; Strayer, 2012; Tucker, 2012; Tune et al., 2013). However, current studies on the flipped classroom are limited because so many potential causative mechanisms change between treatments (e.g., shifting to active learning, including additional technology, using additional teaching materials, implementing peer instruction) that it is difficult, if not impossible, to disaggregate them.

For example, Bergstrom (2011) compared a passive lecture model with an approach that used online content delivery. However, many of the variables changed between conditions, so it was impossible to parse out the effects and pinpoint a specific causal factor. Bergstrom did, however, find more positive opinions from students utilizing online content, although exam scores showed no differences. In 2012, Strayer ran a statistics course in both lecture-based and active flipped formats. Student attitudes and impressions showed slight improvement in the flipped condition; no quantitative learning gains were measured. Tucker (2012) suggests that, in high school settings, the flipped model leads to better relationships between students and the instructor, greater student engagement, and higher motivation; however, no quantifiable data were presented. Tune et al. (2013) gathered data on graduate students in a flipped physiology course and found marked, quantified improvement in conceptual understanding. However, many variables differed between conditions (including active learning, course materials, and instructional lessons), making it impossible to attribute the success of the model to any one causal factor. McLaughlin et al. (2014) flipped an introductory pharmaceutics course at a pharmacy school and found that student attitudes and self-reported learning were greater in the flipped model. However, no quantitative learning gains were measured, and the flipped model was compared with a lecture-based model. In their report on flipped learning, Hamdan et al. (2013) observed, “Quantitative and rigorous qualitative research on Flipped Learning is limited” (p. 6).

The goal of this study was to take a first step toward providing such quantitative and controlled data about the effectiveness of the flipped model. Specifically, we aimed to compare a flipped model with a nonflipped model while varying only the role of the instructor, thus controlling for as many of the other potentially influential variables as possible, especially the influence of active learning. A 5-E learning cycle model (Bybee, 1993) was used in both treatments. In the nonflipped model, the instructor’s responsibility lay within the content attainment process, while the responsibility of concept application was delegated to the student as homework assignments, quizzes, and other assessments (albeit often utilizing peer interactions). Alternatively, in the flipped model, the student was given responsibility for content attainment before class, and the instructor was then able to facilitate concept application through complex problem solving and group work on items that would traditionally have been homework assignments. It would seem logical that instructor and peer facilitation are equally important in both phases; however, classroom time constraints necessitate the delegation of one of the phases to students. If it matters where instructor and peer facilitation takes place, we predicted that we would see a clear difference between a nonflipped learning cycle and a flipped learning cycle model. Because this comparison had not previously been made, we could not make a research-driven prediction about which condition would be superior.

METHODS

Ethics Statement

The institutional review board for human subjects at J.L.J.’s institution approved this research and granted permission for human subjects use in this study; written consent was obtained from all participants.

Subjects

This study was run at a large (approx. 35,000 students), private, doctorate-granting university in the western United States. The university is highly selective, with an average incoming freshman ACT score of 28 and grade point average of 3.82, which suggests that students are generally high achieving and motivated. It is a private religious institution with a highly homogeneous population both religiously and culturally. Students in this study were nonmajors enrolled in a general education biology course. They ranged from freshmen to seniors and came from majors across all non–life sciences disciplines. The classes had ∼60 students each. The course met three times per week for 50-min periods.

Data were included in the analysis only for students who completed the course with a passing grade (60% and above); this excluded students who stopped attending the course midway through the semester and returned only to take the final exam. Both sections had an equal fail rate of ∼8%. Thus, 53 students in the nonflipped condition and 55 students in the flipped condition were included in the final analysis.

Study Design

A comparative quasi-experimental design was used. Two sections of the course were used in a test-control situation. Significant effort was put forth to ensure as much group equivalence as possible, that is, the same instructor taught both sections, the sections were taught back to back at the same time of day in the same classroom, the same textbook and course materials were used, and the same two teaching assistants were present in both sections. Additionally, group equivalence was tested using several pretests (see Measures of Group Equivalence).

The nature of our hypothesis, that instructor facilitation is the main causal factor of improvements in student learning in the flipped model, necessitated that all other factors be tightly controlled. Thus, the nonflipped and flipped sections were exposed to the same active-learning instructional materials, just via different platforms, as described in Flipped Condition Setup and Nonflipped Condition Setup below. Both sections were taught using Bybee’s (1993) 5-E learning cycle to accomplish both the content attainment and concept application phases of learning. The 5-E learning cycle consists of five instructional phases:

Engage serves to interest the students in the material and engage them in the process of learning; in this course, it usually took the form of simply introducing students to a puzzling phenomenon.

Explore allows the students to explore the content and construct their own understanding before introducing any terminology; in this course, students actively engaged with materials to discover patterns, make hypotheses, and build conceptual understanding.

Explain is the phase at which the instructor introduces terminology that students can link to their own constructions to facilitate concept building; in this course, this was accomplished through minilectures provided in person or remotely.

Elaborate forces the students to apply their new conceptual understanding to novel situations in order to broaden the domain and strengthen the framework of these concepts; in this course, students were asked to solve higher-order problems using what they had learned in the previous phases.

Evaluate can take the form of both formative and/or summative assessments that test students’ understanding of the concepts they have just learned; in this course, evaluation took the form of clicker or online quizzes (formative) and unit exams (summative).

The first three phases (engage, explore, and explain) are used to facilitate content attainment. The elaborate phase is the one in which students apply the concepts they have constructed through the content attainment stage, that is, the concept application phase.

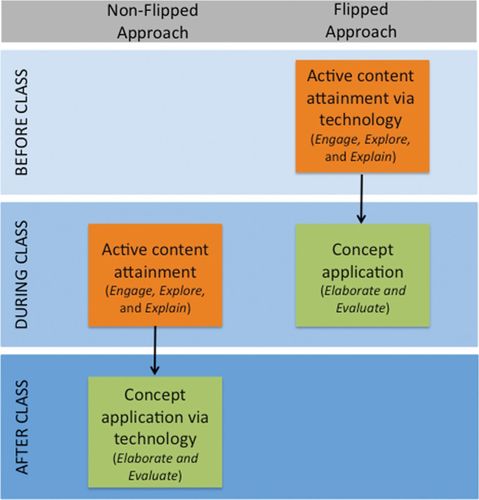

The nonflipped condition was taught such that engage, explore, and explain took place during the 50-min class period, and elaborate and evaluate took place online as a homework assignment. The flipped condition was taught using the same materials, but with the variation being that the engage, explore, and explain procedures were performed by students online as preclass homework assignments, leaving the elaborate and evaluate steps to be performed during the 50-min class period (see Figure 1).

Figure 1. Study design. This represents the activities that would occur surrounding one class period.
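The phase split in Figure 1 can be written down as a small lookup table, which makes explicit that both conditions cover the full 5-E cycle and differ only in which phases happen in class. This is a minimal Python sketch for illustration; the names `SCHEDULE`, `in_class_phases`, and `covers_full_cycle` are hypothetical, and it simplifies the design by placing evaluate where Figure 1 puts it, ignoring that some quizzes were swapped between class and the CMS.

```python
# Hypothetical encoding of the Figure 1 design (illustration only).
# Simplification: "evaluate" is placed where Figure 1 puts it, although some
# quizzes in the study were swapped between class (iClicker) and the CMS.
PHASES = ("engage", "explore", "explain", "elaborate", "evaluate")
CONTENT_ATTAINMENT = frozenset({"engage", "explore", "explain"})

SCHEDULE = {
    "nonflipped": {
        "in_class": ("engage", "explore", "explain"),
        "outside_class": ("elaborate", "evaluate"),
    },
    "flipped": {
        "in_class": ("elaborate", "evaluate"),
        "outside_class": ("engage", "explore", "explain"),
    },
}


def in_class_phases(condition: str) -> frozenset:
    """Phases facilitated by the instructor during the 50-min class period."""
    return frozenset(SCHEDULE[condition]["in_class"])


def covers_full_cycle(condition: str) -> bool:
    """True if in-class and outside-class phases together form one complete 5-E cycle."""
    plan = SCHEDULE[condition]
    return sorted(plan["in_class"] + plan["outside_class"]) == sorted(PHASES)
```

For example, `in_class_phases("nonflipped")` returns exactly the content-attainment phases, and the two conditions' in-class sets are disjoint, which is the single variable the design manipulates.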

Nonflipped Condition Setup.

In this condition, students were first introduced to the material during in-class instruction. A typical class period began with the exploration of a novel biological phenomenon (engage and explore). Students worked in groups of three or four using a prepared student guide to discover patterns, put forth hypotheses, and analyze data. These group sessions were facilitated by both the instructor and teaching assistants; thus, students had direct access to individualized feedback. Brief whole-class discussions were interspersed between the group work to clarify concepts and introduce terms. Terms were usually written on the board or included directly in the student guide. Students were encouraged to ask questions and offer comments throughout the class period. The instructor acted mostly as a guide to their learning, rather than as the authority figure in the classroom.

A homework assignment was assigned directly following each in-class session (elaborate). The assignments were posted on the university’s course-management system (CMS), which is formatted similarly to Canvas (by Instructure). The assignments encouraged students to apply their newly acquired knowledge by presenting them with novel situations and problems to solve. Students entered their responses directly into the CMS, and teaching assistants then graded them for accuracy. Students also received feedback on most problems through preset text entered by the instructor. In the class period following the due date, the instructor also answered questions and worked through any particularly difficult problems that students requested.

To assess students’ understanding of the material (evaluate), we administered periodic quizzes either via the CMS directly following the homework assignment or by iClicker in the class period directly following the assignment’s due date. The quizzes consisted of four apply, analyze, or evaluate questions with multiple-choice answers. If the quizzes were taken in class, students were allowed to discuss answers as groups, but they were required to answer individually. The instructor would then review the questions and correct answers with the class as a whole. If the quizzes were taken at home, feedback appeared directly after the quiz via the CMS.

Flipped Condition Setup.

In this condition, students were first introduced to the material online during the preclass homework assignment. This assignment was identical to the in-class activity administered in the nonflipped condition, except that it was completed online and alone. However, it still followed the format of the learning cycle, encouraging students to explore the phenomenon and discover patterns, offer explanations, and analyze data (engage and explore). For explorations that required materials, students watched short video clips that demonstrated what the other students did as an activity in class. To progress through the assignment (and to receive credit), students had to input responses in the CMS to each of the questions posed before moving to the next question. Feedback was immediately given to each question through preset text embedded in the assignment. Teaching assistants then graded responses, not for accuracy but for effort and completion. The assignments could be resubmitted unlimited times. As a consequence, students reported that they would often go back and revise their answers based on feedback offered throughout the assignment.

Students were then asked to apply the concepts to novel situations during the in-class instructional period (elaborate). At the start of class, the instructor reviewed any questions or particularly hard problems from the preclass assignment. Then, the students worked in groups to solve novel problems and apply what they had learned. Group work was facilitated by the instructor and teaching assistants; thus, students had direct access to individualized feedback. Brief whole-class discussions were interspersed among the group work to clarify concepts and offer feedback. Students were encouraged to ask questions and to offer comments throughout the class period. Again, the instructor acted mostly as a guide to their learning, rather than as the authority figure in the classroom.

For assessment of students’ understanding, the same quizzes administered in the nonflipped condition were administered in the flipped condition (evaluate). Quizzes followed application either as a homework assignment on the CMS or in class using iClicker. Quizzes administered in class in the nonflipped condition were administered online in the flipped condition and vice versa. Again, in-class quizzes were taken collaboratively but answered individually. Feedback was given in the same manner.

Students learning under both conditions took identical unit exams. Students learning under both conditions were also administered a comprehensive final exam at the end of the semester. Thus, neither section was exposed to more content material, more application practice, or more assessment than the other. A sample class activity, homework assignment, and quiz for each condition are included in the Supplemental Material.

Additional Control.

As an additional control, scores from this study were compared with scores from the previous semester, when the course was taught using the 5-E learning cycle but with less structure, that is, it was not always strictly divided into engage, explore, and explain in class and elaborate and evaluate at home. This method was the original method the instructor used to teach the course for several years and is identified here as the original group (n = 94). It closely resembled the nonflipped condition but was less time intensive for students, in that there were fewer homework assignments and less rigidity in when and where the phases occurred. The explore and explain phases were still conducted in class and were nearly identical to the activities in the current study. However, fewer elaborative exercises were required of students, with only 13 assignments given throughout the semester (in comparison with 39 assignments in the nonflipped and flipped conditions of the current study). These same 13 assignments, with minor editing, were incorporated into the current study, and an additional 26 assignments were created. In addition, students in the original method took short vocabulary quizzes to ensure that they were keeping up with the material. Unit exams in the original method were nearly identical but included 25 additional questions. Sixty-three questions from the original final exam were used in the current semester (22 low-level, 17 high-level, and 24 LCTSR items). Table 1 highlights differences between the original course, the nonflipped course, and the flipped course. While several distinct changes were made from the original method of teaching to accommodate the current study, the goal of this comparison was simply to assess whether the extra time and effort put in by the instructor to enhance the course, and the extra effort and time on task required of students by the additional homework assignments, were beneficial to learning.

Table 1. Course design of the original, nonflipped, and flipped conditions

| Course design | Original | Nonflipped | Flipped |
|---|---|---|---|
| Sample size (n) | 94 | 53 | 55 |
| Method | 5-E learning cycle | 5-E learning cycle | 5-E learning cycle |
| Portion in class | Engage, explore, and explain | Engage, explore, and explain | Elaborate and evaluate |
| Number of assignments that students complete outside class | 13 | 39 | 39 |
| Final exam: shared low-level items | 22 | 40 | 40 |
| Final exam: shared high-level items | 17 | 40 | 40 |
| Final exam: LCTSR items | 24 | 24 | 24 |
| Final exam: nonshared additional items | 51 | | |
| Quizzes | 26 | 26 | 26 |
| Unit exams | 3 | 3 | 3 |
| Length of unit exams | 100 questions | 75 questions | 75 questions |
| Additional elements | Vocabulary quizzes, unit exam study guides (no study guide for the final) | | |

Measures of Group Equivalence

Both students’ prior knowledge of biology and students’ scientific reasoning skills were assessed at the start of the semester to determine group equivalence (and to be used as covariates if groups appeared to be nonequivalent). Students’ prior biology knowledge was assessed using the Biology Knowledge Assessment (BKA), an instrument designed by the researchers to assess basic biology understanding. Reliability of the instrument was low (Spearman-Brown coefficient = 0.51); it was therefore only used to establish a baseline level to assess group equivalence. It was not used in a pretest/posttest design to determine student learning. Students’ scientific reasoning ability was measured using Lawson’s Classroom Test of Scientific Reasoning (LCTSR; Lawson, 1978, version 2000). The LCTSR is a content-independent test of basic formal reasoning skills, including correlational, combinatorial, probabilistic, proportional, and hypothetico-deductive reasoning as well as the ability to identify and control variables. The LCTSR has been shown to be highly correlated with performance in science classes (Johnson and Lawson, 1998). Validity and reliability have been well established (see Lawson et al., 2000). Thus, it was used both to assess group equivalence and to determine student gains in a pretest/posttest design.
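For readers unfamiliar with the statistic, the Spearman-Brown coefficient steps a split-half correlation r up to an estimate of full-test reliability, r_SB = 2r / (1 + r). The text does not say how the BKA items were split, so the odd/even split below is an assumption, and all function names are hypothetical. Inverting the formula, r = r_SB / (2 − r_SB), shows that the reported coefficient of 0.51 corresponds to a half-test correlation of about 0.34.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def spearman_brown(r_half):
    """Step a half-test correlation up to an estimate of full-test reliability."""
    return 2 * r_half / (1 + r_half)


def half_from_full(r_full):
    """Inverse of spearman_brown: half-test correlation implied by a full-test coefficient."""
    return r_full / (2 - r_full)


def split_half_reliability(item_scores):
    """item_scores: one list of 0/1 item scores per student.

    Assumption (not stated in the study): items are split into odd- and
    even-numbered halves before correlating the half totals.
    """
    odd_totals = [sum(row[0::2]) for row in item_scores]
    even_totals = [sum(row[1::2]) for row in item_scores]
    return spearman_brown(pearson_r(odd_totals, even_totals))
```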

Measures of Effect

Unit Exams.

To assess incremental learning throughout the semester, we administered three identical unit exams in both the flipped and nonflipped conditions (the original condition received an additional 25 questions on each unit exam). Exams consisted of 75 multiple-choice questions written at the “apply” level or above of Bloom’s taxonomy (as assessed by four independent raters; Bloom, 1984). Each question was worth 2 points, making each exam worth 150 points. Together, the three exams (450 points) made up just over one-quarter of a student’s grade in the course (out of 1624 points). Exams were administered at the university testing center. Student scores were calculated as total percent correct.
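The point arithmetic in this paragraph can be checked directly. The short Python sketch below uses only the numbers stated above; the constant names and the `percent_correct` helper are illustrative, not part of the study's materials.

```python
# Numbers taken from the text; names are illustrative.
TOTAL_COURSE_POINTS = 1624
POINTS_PER_QUESTION = 2
QUESTIONS_PER_UNIT_EXAM = 75
N_UNIT_EXAMS = 3

points_per_exam = POINTS_PER_QUESTION * QUESTIONS_PER_UNIT_EXAM  # 150
unit_exam_points = N_UNIT_EXAMS * points_per_exam  # 450
unit_exam_share = unit_exam_points / TOTAL_COURSE_POINTS  # about 0.277


def percent_correct(n_correct: int, n_items: int = QUESTIONS_PER_UNIT_EXAM) -> float:
    """Unit exam score reported as total percent correct."""
    return 100 * n_correct / n_items


print(f"{unit_exam_share:.1%} of course points")  # 27.7% of course points
```

A student answering 60 of the 75 items correctly would therefore be recorded as `percent_correct(60)`, i.e., 80%.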

Homework Assignments.

Students in both conditions completed a homework assignment corresponding to each day of class. Each assignment was worth 10 points (some were given in two parts worth 5 points each), and 39 assignments were given throughout the semester. Homework assignments made up 390 of the 1624 total course points, approximately one-quarter of a student’s grade. Two homework scores were analyzed. First, a homework accuracy score was computed by averaging each student’s raw scores across the assignments and then averaging these student scores across the section; this served as a measure of students’ understanding of the material and effort. Second, a homework attempt score was computed as the percentage of assignments each student attempted, regardless of accuracy, averaged across the section; this served as a measure of students’ engagement outside class.
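The two homework measures can be sketched as follows. This Python example is a minimal illustration under stated assumptions: each assignment is scored out of 10 points (two-part assignments combined), `None` marks an assignment that was not attempted, and an unattempted assignment counts as 0 toward accuracy. The study does not specify how missing work entered the accuracy score, so that last choice in particular is an assumption, and the helper names are hypothetical.

```python
from statistics import mean

MAX_POINTS = 10  # each assignment was worth 10 points (two 5-point parts combined)


def accuracy_score(scores):
    """Mean percent of points earned across assignments for one student.

    scores: points earned per assignment, or None if not attempted.
    Assumption: unattempted assignments count as 0 points.
    """
    return 100 * mean((s if s is not None else 0) / MAX_POINTS for s in scores)


def attempt_score(scores):
    """Percent of assignments the student attempted, regardless of accuracy."""
    return 100 * sum(s is not None for s in scores) / len(scores)


def section_mean(students, score_fn):
    """Average a per-student score across a section."""
    return mean(score_fn(scores) for scores in students)


section = [
    [10, 8, None, 6],  # hypothetical student A
    [10, 10, 10, 10],  # hypothetical student B
]
print(section_mean(section, accuracy_score), section_mean(section, attempt_score))
```

Note that an attempted assignment scored 0 still counts toward the attempt score, which is what separates engagement from accuracy in this sketch.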

Final Exam Scores.

An identical comprehensive final exam was administered to both the flipped and nonflipped conditions in the university testing center (the original condition received some of the same final exam questions; see Table 1). The exam consisted of 104 multiple-choice questions, including the 24-question LCTSR (to assess reasoning gains), 40 low-level items (“remember” and “understand” of Bloom’s taxonomy), and 40 high-level items (“apply” and above of Bloom’s taxonomy). Again, all items were classified according to Bloom’s taxonomy by four independent raters. An additional 20 questions that were not related to this study were included in the final and were excluded from the analyses. The final exam was worth 300 points, or ∼18% of the final grade. For each student, the percent correct was calculated for the LCTSR, low-level, and high-level items and for overall performance on the full exam. A change in LCTSR was calculated by subtracting pre-LCTSR scores from post-LCTSR scores.
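A minimal Python sketch of the per-component scoring and the LCTSR change score follows. The item IDs and the dictionary layout are hypothetical. Consistent with the text, the sketch assumes the 20 unrelated questions are left out of every component and that the overall score is computed over the remaining study items; it also takes the LCTSR change on the raw 24-point scale, which is one reading of “subtracting pre-LCTSR scores from post-LCTSR scores.”

```python
LCTSR_ITEMS = 24


def score_final(responses, components):
    """Percent correct per component plus an overall percent across study items.

    responses: dict mapping item id -> 1 (correct) or 0 (incorrect)
    components: dict mapping component name -> list of item ids
    Items that appear in no component (e.g., unrelated questions) are ignored.
    """
    scores = {}
    study_items = []
    for name, items in components.items():
        scores[name] = 100 * sum(responses[i] for i in items) / len(items)
        study_items.extend(items)
    scores["overall"] = 100 * sum(responses[i] for i in study_items) / len(study_items)
    return scores


def lctsr_change(pre_raw, post_raw):
    """Post minus pre LCTSR score on the raw 0-24 scale."""
    for score in (pre_raw, post_raw):
        if not 0 <= score <= LCTSR_ITEMS:
            raise ValueError(f"LCTSR score out of range: {score}")
    return post_raw - pre_raw
```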

All comparisons between sections were done using independent-samples t tests or univariate analysis of variance (ANOVA) in the SPSS statistical package (2012, version 21). Where appropriate, findings were confirmed using univariate analysis of covariance (ANCOVA) using the LCTSR and BKA scores as covariates.
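With only two sections, an independent-samples t test (pooled variance) and a one-way ANOVA are equivalent: the t statistic squared equals F, and the degrees of freedom line up as t(df) and F(1, df). That is why, for 53 + 55 = 108 students, the two-section ANOVAs have 1 and 106 degrees of freedom. The Python sketch below illustrates this with hypothetical scores; it computes test statistics only (p values would need a t or F distribution, such as those in SciPy) and does not reproduce the ANCOVA.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(a, b):
    """Independent-samples t statistic (pooled variance) and its degrees of freedom."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    t = (mean(b) - mean(a)) / sqrt(pooled_var * (1 / na + 1 / nb))
    return t, na + nb - 2


def one_way_anova(*groups):
    """One-way ANOVA F statistic with (between, within) degrees of freedom."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    group_means = [mean(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n_total
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n_total - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, (df_between, df_within)


# Hypothetical percent scores for two small sections.
section_a = [70, 75, 80, 85]
section_b = [72, 80, 88, 96]
t, df = pooled_t(section_a, section_b)
f, dfs = one_way_anova(section_a, section_b)
print(t ** 2, f, df, dfs)  # t squared and F agree; df = 6 and dfs = (1, 6)
```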

Measures of Affect

Student attitudes toward the course were gathered at the end of the semester. Students were given self-report surveys on a 6-point Likert scale, from 1 (strongly disagree) to 6 (strongly agree), with no neutral option. The survey asked seven questions regarding course purpose, followed by a free-response item asking students to elaborate on their reasons if they considered the course atypical of a college-level biology course. Students were then asked to rank the importance of the activities (in class versus at home) to their learning. Table 2 outlines the questions asked. An additional two free-response questions asked students to comment on what they liked most about the class and on what could be improved. Responses were digitized, averaged, and compared between sections; larger numbers indicate stronger agreement with the statement. Rankings of the activities were compared between sections to look for trends in the perceived usefulness of instructor facilitation. Free-response answers were categorized into common responses, and frequencies were tallied.

Table 2. End-of-semester attitude survey

| Survey item |
|---|
| 1. The overall structure of this course was helpful to my learning. |
| 2. The activities I did at home were helpful to my learning. |
| 3. The activities we did in class were helpful to my learning. |
| 4. The technology-facilitated activities (e.g., learning suite exams, online videos, etc.) were helpful to my learning. |
| 5. The course seemed well-organized with each activity having a clear purpose. |
| 6. This class was designed to make me think and discover principles on my own before being taught them by the instructor. |
| 7. This class was typical of what I would have expected of a college-level biology course. |
| 8. If not, why not? Please explain. (Free response) |
| 9. If you were to rank the activities according to their overall contribution to your learning, how would you rank them? (Place a 1 and a 2 in the blanks below.) |
| _____Activities done in class with instructor and peer collaboration |
| _____Activities done at home on my own time |
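The survey summary described in this section amounts to averaging each Likert item within a section and tallying which activity type students ranked first. Below is a minimal Python sketch; the item keys, the `"in_class"`/`"at_home"` labels, and the data layout are hypothetical. It rejects ratings outside the 1 to 6 range used by the survey.

```python
from collections import Counter
from statistics import mean

SCALE = range(1, 7)  # 6-point scale: 1 = strongly disagree ... 6 = strongly agree


def likert_means(responses):
    """Mean rating per item; responses is a list of dicts mapping item -> rating."""
    items = responses[0].keys()
    for r in responses:
        for item in items:
            if r[item] not in SCALE:
                raise ValueError(f"rating {r[item]!r} for {item} is outside 1-6")
    return {item: mean(r[item] for r in responses) for item in items}


def first_rank_shares(first_choices):
    """Share of students ranking each activity type first (e.g., 'in_class', 'at_home')."""
    counts = Counter(first_choices)
    total = sum(counts.values())
    return {activity: n / total for activity, n in counts.items()}
```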

RESULTS

Group Equivalence

Scores on the BKA were compared using an independent-samples t test to evaluate the equivalence of the sections on prior biology knowledge upon entering the course (not all students completed the pretests; nonflipped n = 44, flipped n = 46). The BKA was scored as a raw percentage correct. The difference between sections did not reach significance, suggesting that sections were equally matched in prior biology knowledge (nonflipped M = 41.4%, flipped M = 46.0%; t(88) = 1.80, p = 0.08).

Scores on the LCTSR were compared using an independent-samples t test to evaluate the equivalence of sections on reasoning ability upon entering the course (not all students completed the pretests; nonflipped n = 45, flipped n = 47). The LCTSR is scored out of 24 points. The difference was not significant, suggesting that sections were equally matched in reasoning ability (nonflipped M = 18.2, flipped M = 19.5; t(90) = 1.58, p = 0.12).

Neither test detected a significant difference between the groups in prior knowledge or scientific reasoning skills. However, because both prior knowledge and scientific reasoning ability could potentially affect student performance on high-level exams, analyses were also run with these measures as covariates to confirm the findings of the analyses without covariates.

As an added control, a third group, taught with the original method, was included in the analysis. The LCTSR was also administered to this group at the beginning and end of the semester. Pre-LCTSR scores were compared among the original group and both treatment groups using ANOVA. The three groups did not differ significantly (original M = 17.7, nonflipped M = 18.2, flipped M = 19.5; F(2, 167) = 2.78, p = 0.07). The BKA was not administered in the original condition.
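The degrees of freedom in F(2, 167) encode how many scores entered the three-group ANOVA: df_between = k − 1 and df_within = N − k, so N = 170. The Python sketch below (hypothetical helper names) makes that bookkeeping explicit. It also shows that two groups of 45 and 47 give df_within = 90, matching t(90) above. If the treatment groups contributed those same pretest scores here, the original group would have contributed 78 of its 94 students; this is an inference from the reported degrees of freedom, not a number stated in the study.

```python
def anova_df(group_sizes):
    """(between, within) degrees of freedom for a one-way ANOVA."""
    k = len(group_sizes)
    return k - 1, sum(group_sizes) - k


def implied_total_n(df_between, df_within):
    """Total N implied by reported ANOVA degrees of freedom: N = df_within + k."""
    k = df_between + 1
    return df_within + k


total_n = implied_total_n(2, 167)  # 170 scores entered the three-group comparison
original_n = total_n - 45 - 47  # 78, assuming the same 45 + 47 as the t test
assert anova_df([original_n, 45, 47]) == (2, 167)
```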

Unit Exams

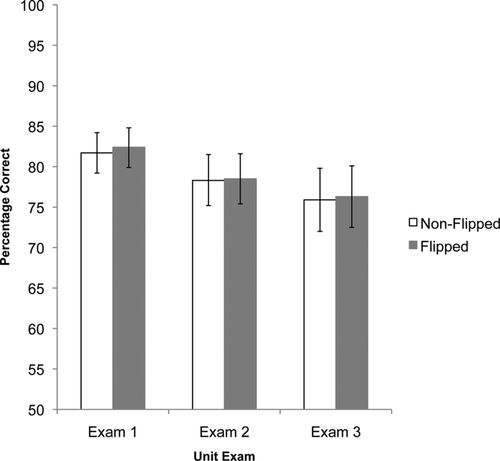

Unit exam scores were compiled for all students in each condition and scored as a raw percentage correct. An ANOVA compared the nonflipped and flipped conditions on each unit exam. For all three unit exams, student scores did not differ significantly (see Figure 2 and Table 3). An ANCOVA using students’ LCTSR and BKA scores as covariates confirmed these results (p = 0.34, 0.09, and 0.58 for exams 1, 2, and 3, respectively).

Figure 2. Unit exam scores. None of the differences is significant. Error bars represent 95% confidence intervals.

Table 3. Unit exam scores by condition

| Exam | Nonflipped M (SD) (%) | Flipped M (SD) (%) | F(1, 106) | p value |
|---|---|---|---|---|
| 1 | 81.7 (0.08) | 82.4 (0.10) | 0.15 | 0.70 |
| 2 | 78.3 (0.11) | 78.5 (0.12) | <0.01 | 0.95 |
| 3 | 75.9 (0.11) | 76.3 (0.17) | 0.03 | 0.87 |

Homework Assignments

As a brief reminder, both the nonflipped and flipped sections completed homework assignments accompanying each in-class session. In the nonflipped section, homework assignments followed in-class sessions and encompassed the elaborate portion of the learning cycle. In the flipped section, homework assignments preceded in-class sessions and encompassed the engage, explore, and explain portions of the learning cycle.

Accuracy.

Average homework accuracy scores for each condition are shown in Table 4. Because the data did not meet the assumption of equal variances, a nonparametric Mann-Whitney U-test was used to compare the nonflipped (control) and flipped sections. Students in the flipped condition achieved greater accuracy on the homework than those in the nonflipped condition (U = 710, z = −4.59, p < 0.001). However, students in the flipped condition were generally graded on completion of all the problems (engage, explore, and explain) rather than on accuracy, whereas students in the nonflipped condition were graded on both completion and accuracy (elaborate). Therefore, slightly higher scores in the flipped condition are expected.

| Score | Nonflipped M (SD) (%) | Flipped M (SD) (%) | Mann-Whitney U | z-score | p value |
|---|---|---|---|---|---|
| Homework accuracy | 82.1 (0.10) | 89.8 (0.07) | 710 | −4.59 | <0.001 |
| Homework attempt | 92.7 (0.09) | 95.7 (0.07) | 1185 | −1.71 | 0.09 |
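
The Mann-Whitney U-test ranks all scores from both conditions together and asks whether one condition’s ranks run systematically higher. The sketch below computes U using average ranks for ties and converts it to a z-score with the usual large-sample normal approximation. It is a minimal illustration with hypothetical function names, and it omits the tie correction to the variance that statistical packages typically apply. As a consistency check on Table 4, assuming group sizes of 53 and 55 (108 students in total, matching the F(1, 106) degrees of freedom for the exams; the split between conditions is our assumption), the reported U = 710 converts to z ≈ −4.59.

```python
import math


def average_ranks(values):
    """Rank values 1..n, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # convert 0-based positions to 1-based ranks
        for pos in range(start, end + 1):
            ranks[order[pos]] = mean_rank
        start = end + 1
    return ranks


def mann_whitney_u(x, y):
    """U for sample x: count of (x, y) pairs with x > y, ties counting 1/2."""
    ranks = average_ranks(list(x) + list(y))
    rank_sum_x = sum(ranks[: len(x)])
    return rank_sum_x - len(x) * (len(x) + 1) / 2


def u_to_z(u, n1, n2):
    """Large-sample normal approximation for U (no tie correction)."""
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    return (u - mean_u) / sd_u


def two_sided_p(z):
    """Two-sided p-value for a standard normal z."""
    return math.erfc(abs(z) / math.sqrt(2))


z = u_to_z(710, 53, 55)  # group sizes are assumed, see lead-in
print(f"z = {z:.2f}, p = {two_sided_p(z):.1e}")
```

Applied to the attempts row (U = 1185) with the same assumed sizes, this uncorrected approximation gives about −1.67 rather than the reported −1.71. A tie correction, which shrinks the standard deviation of U when many students share identical percentages, would move the value in that direction and may account for the small gap.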

Attempts.

A Mann-Whitney U-test was conducted to compare the percentage of homework assignments attempted between conditions. Results show that there was no significant difference between conditions (Mnonflipped = 92.7%, Mflipped = 95.7%; see Table 4).

Final Exam

Total Score.

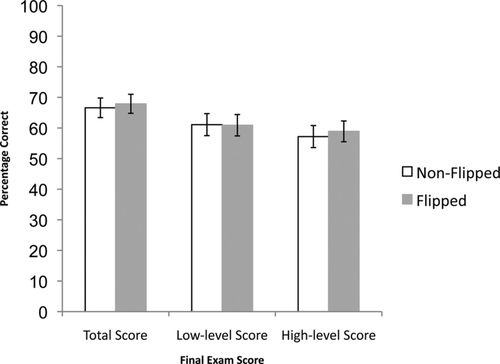

The overall total score on the final exam was evaluated using ANOVA between the nonflipped and flipped conditions. Results indicated that students learning under both conditions performed equally (Mnonflipped = 66.6%, Mflipped = 67.9%; see Figure 3 and Table 5). ANCOVA using pre-LCTSR and BKA as covariates confirmed these results (p = 0.66).

Figure 3. Final exam scores. Flipped and nonflipped treatment conditions are represented. None of the comparisons are significant. Error bars represent 95% confidence intervals.

| Final exam component | Nonflipped M (SD) (%) | Flipped M (SD) (%) | F(1, 106) | p value |
|---|---|---|---|---|
| Total | 66.6 (11.3) | 67.9 (11.8) | 0.37 | 0.55 |
| High-level | 57.2 (12.0) | 58.9 (14.0) | 0.50 | 0.48 |
| Low-level | 61.1 (13.8) | 60.9 (12.5) | <0.01 | 0.93 |

LCTSR.

The average change in LCTSR scores from pretest to posttest was compared between conditions. Results of ANOVA show that the average change in reasoning was equal between conditions (MΔnonflipped = 2.07, MΔflipped = 1.57, F(1, 90) = 0.86, p = 0.36). To assess whether this change in reasoning was significant within each condition, we conducted a paired-samples t test comparing pre-LCTSR scores to post-LCTSR scores. The results indicated that the change in LCTSR scores in both conditions was significant (MΔnonflipped = 2.07, t(44) = 5.60, p < 0.001; MΔflipped = 1.57, t(46) = 4.11, p < 0.001). As an added control, the average change in LCTSR scores was compared between both conditions and the original teaching method using ANOVA. The average change in LCTSR in the original group was 2.8 and was statistically equivalent to both conditions in the present study (F(2, 167) = 2.49, p = 0.09).
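
The within-condition test above pairs each student’s pre- and post-LCTSR scores and tests whether the mean change differs from zero: t is the mean change divided by its standard error, with df = n − 1. The sketch below shows that computation; the function name `paired_t` is hypothetical. Reading the reported degrees of freedom this way, t(44) and t(46) imply 45 and 47 students with both scores, 92 in total, which agrees with the F(1, 90) for the between-condition comparison (92 − 2 = 90).

```python
import math
from statistics import fmean, stdev


def paired_t(pre, post):
    """Paired-samples t test on post - pre; returns (mean change, t, df)."""
    if len(pre) != len(post):
        raise ValueError("pre and post must contain the same students")
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    mean_change = fmean(diffs)
    std_error = stdev(diffs) / math.sqrt(n)  # sample SD of the changes / sqrt(n)
    return mean_change, mean_change / std_error, n - 1


# Hypothetical pre/post reasoning scores for four students (not study data)
change, t, df = paired_t([10, 12, 14, 16], [12, 13, 17, 18])
print(f"mean change = {change:.2f}, t({df}) = {t:.2f}")  # mean change = 2.00, t(3) = 4.90
```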

High-Level Items.

An ANOVA was conducted to evaluate whether the condition affected student performance on high-level Bloom’s items (i.e., “apply” and above). Results indicated that students learning under both conditions performed equally (Mnonflipped = 57.2%, Mflipped = 58.9%; see Figure 3 and Table 5). ANCOVA using pre-LCTSR and BKA as covariates confirmed these results (p = 0.86). As an additional control, conditions in the current study were compared with conditions in the original group. On the 17 high-level items that all three conditions shared, scores were equivalent (Moriginal = 55.4%, Mnonflipped = 55.6%, Mflipped = 57.4%, F(2, 199) = 0.33, p = 0.72).

Low-Level Items.

An ANOVA was conducted to evaluate whether the condition affected student performance on low-level Bloom’s items (i.e., “remember” and “understand”). Results indicated that students learning under both conditions performed equally (Mnonflipped = 61.1%, Mflipped = 60.9%; see Figure 3 and Table 5). ANCOVA using pre-LCTSR and BKA as covariates confirmed these results (p = 0.40). As an additional control, conditions in the current study were compared with those of the original group. On the 22 low-level items shared between all three conditions, results of an ANOVA indicated a significant difference between them (F(2, 199) = 3.35, p = 0.04). Pairwise comparisons show that scores were equivalent between the nonflipped and flipped conditions (Mnonflipped = 61.7%, Mflipped = 63.0%, p = 0.87); however, the flipped condition outperformed the original condition (Moriginal = 57.8%, p = 0.05).

Attitudes

The attitudes survey was answered on a 6-point Likert scale, and scores were analyzed using a Mann-Whitney U-test. Results are displayed in Table 6. Only two of the attitudes differed significantly between conditions. Students in the flipped condition had a more negative attitude toward the technology-facilitated activities (i.e., online tutorials, videos, and homework assignments), with an average rank of 43.03 compared with 55.35 in the nonflipped (control) condition (z = −2.25, p = 0.03; Table 2, item 4). However, students in the flipped condition agreed more strongly that the course was well organized and that each activity had a clear purpose for their learning, with an average rank of 55.32 compared with 42.28 in the nonflipped condition (z = −2.39, p = 0.02; Table 2, item 5). There was no difference between conditions in student perceptions of the overall structure of the course (item 1), usefulness of the at-home and in-class activities (items 2 and 3), constructivist quality of the course (item 6), or perception of it being typical of a college course (item 7).

| Item / condition | Strongly disagree | Disagree | Somewhat disagree | Somewhat agree | Agree | Strongly agree |
|---|---|---|---|---|---|---|
| The overall structure of this course was helpful to my learning. | | | | | | |
| Nonflipped | 0% | 7.5% | 15.1% | 26.4% | 28.3% | 11.3% |
| Flipped | 0% | 3.5% | 7.0% | 28.1% | 38.6% | 10.5% |
| The activities I did at home were helpful to my learning. | | | | | | |
| Nonflipped | 0% | 9.4% | 13.2% | 41.5% | 15.1% | 9.4% |
| Flipped | 0% | 5.3% | 15.8% | 31.6% | 28.1% | 7.0% |
| The activities we did in class were helpful to my learning. | | | | | | |
| Nonflipped | 1.9% | 5.7% | 11.3% | 28.3% | 30.2% | 11.3% |
| Flipped | 0% | 3.5% | 5.3% | 22.8% | 40.4% | 15.8% |
| \*The technology-facilitated activities were helpful to my learning. | | | | | | |
| Nonflipped | 0% | 3.8% | 11.3% | 30.2% | 30.2% | 13.2% |
| Flipped | 3.5% | 14.0% | 8.8% | 31.6% | 28.1% | 1.8% |
| \*The course seemed well-organized with each activity having a clear purpose. | | | | | | |
| Nonflipped | 7.5% | 5.7% | 13.2% | 35.8% | 17.0% | 9.4% |
| Flipped | 0% | 1.8% | 8.8% | 33.3% | 31.6% | 12.3% |
| This class was designed to make me think and discover principles on my own before being taught them by the instructor. | | | | | | |
| Nonflipped | 0% | 3.8% | 7.5% | 22.6% | 39.6% | 15.1% |
| Flipped | 0% | 0% | 3.5% | 19.3% | 42.1% | 22.8% |
| The class was typical of what I would have expected of a college-level biology course. | | | | | | |
| Nonflipped | 5.7% | 22.6% | 7.5% | 24.5% | 13.2% | 15.1% |
| Flipped | 5.3% | 10.5% | 17.5% | 21.1% | 24.6% | 8.8% |

\*Item differed significantly between conditions (Mann-Whitney U-test, p < 0.05).

At the end of the survey (see Table 2, item 9), students in both conditions were asked to rank the helpfulness of the assignments and activities they did in class versus the ones they did at home. Interestingly, of those who responded to the survey (nnonflipped = 47; nflipped = 50), most students in both conditions ranked the in-class activities as more influential to their learning than the assignments done at home (66.0% in the nonflipped condition, 76.0% in the flipped condition). A cross-tabulation analysis indicated that these percentages did not differ significantly (χ² = 1.19, p = 0.28). Both groups perceived their time with the instructor as more influential for learning, regardless of whether they were participating in content attainment or concept application.
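
The cross-tabulation above is a 2 × 2 Pearson chi-square test (condition × which setting was ranked more helpful). The sketch below recomputes it under two assumptions of ours: the cell counts are reconstructed from the reported percentages (66.0% of 47 ≈ 31 and 76.0% of 50 = 38), and each respondent chose one setting or the other. The function name is hypothetical; with 1 degree of freedom the p-value can be obtained from the standard library’s `math.erfc`.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square (no continuity correction) and p-value for a 2x2 table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # With 1 degree of freedom, chi2 is the square of a standard normal,
    # so P(X >= chi2) = erfc(sqrt(chi2 / 2))
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Rows: nonflipped, flipped; columns: in class ranked higher, at home ranked higher.
# Counts are reconstructed from the reported percentages (an assumption).
counts = [[31, 16], [38, 12]]
chi2, p = chi_square_2x2(counts)
print(f"chi2 = {chi2:.2f}, p = {p:.2f}")  # chi2 = 1.19, p = 0.28
```

These reconstructed counts reproduce the reported χ² = 1.19 and p = 0.28. With Yates’s continuity correction the statistic would be about 0.75, so the reported value appears to be the uncorrected one.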

Students were asked three free-response questions. Fifty-one students responded to the question concerning what made the class atypical of college classes (Table 2, item 8). In the nonflipped condition, the most common responses included that it was “more like high school,” implying that the activities seemed juvenile (27.3%), and that lecturing was noticeably absent (22.7%). A third, less-common response was that the course was easier than expected (13.6%). In the flipped condition, the most common response was that lecturing was noticeably absent (34.5%). No other response was frequent enough to be classified.

Students were also asked to list what they most enjoyed about the course; 95 students offered a response. In the nonflipped condition, the most common response was that they enjoyed the active-learning, hands-on activities format (43.5%). Other somewhat common responses included group work (15.2%) and comments pertaining to a specific lesson or content covered in the class (15.2%). In the flipped condition, the overwhelming response was the active-learning, hands-on activities format (59.2%). The second most common response was that they loved the group work (14.3%).

Finally, students were asked to offer an improvement for the course; 93 students offered a response. In the nonflipped condition, responses varied widely (34.8% fell into the “other” category, meaning they were not repeated by others), but two stood out as common responses: students wanted more lecture (21.7%), and they found the homework assignments confusing, too long, or unclear (17.4%). In the flipped condition, the two most frequent responses were that the technology system used (the CMS) was a hindrance to their enjoyment of the class (27.7%) and that the assignments were confusing, too long, or unclear (23.4%). Other less common responses included the desire for more lecture (12.8%) and that the exams were difficult (10.6%).

DISCUSSION

This study shows that the flipped classroom does not result in higher learning gains or better attitudes than the nonflipped classroom when both utilize an active-learning, constructivist approach. Students performed equally well on unit exams and on both low-level and high-level items on a comprehensive final exam. In addition, student attitudes toward the class and gains in scientific reasoning ability were equal in both conditions. Finally, neither condition outperformed the original condition (less structure and less homework) on high-level conceptual understanding or reasoning gains. It should be mentioned that these data have been gathered within a specific context and from a narrow demographic. Thus, we recommend and encourage others to implement this same research design with varying student bodies in a variety of academic settings (e.g., small vs. large class sizes, lower vs. upper division, majors vs. nonmajors, different learning outcomes) to better define the degree and extent of transferability of these results.

On the basis of these data, we propose that any learning gains seen in either condition are more likely a result of the active-learning style of instruction than of the order in which the instructor participated in the learning process, that is, whether the instructor was facilitating initial content attainment as seen in the nonflipped model or facilitating concept application as seen in the flipped model. Active learning is a more effective means of instruction than a traditional, didactic approach (Andrews et al., 2011; Freeman et al., 2014). In an influential study of more than 6000 physics students across multiple high schools and universities, Hake (1998) found that students taught using active strategies learned twice as much as students taught using a direct instruction approach. This trend has been documented in a variety of science disciplines (e.g., Shaffer and McDermott, 1992; Jensen and Finley, 1996; Wright, 1996; Ebert-May et al., 1997; Crouch and Mazur, 2001; Knight and Wood, 2005). Michael (2006) reviewed multiple active-learning techniques and concluded that active learning is now a well-supported pedagogical strategy to improve student learning. Most recently, a meta-analysis of 225 studies compared active learning with traditional lecture (Freeman et al., 2014). Its results confirm that active learning is superior to traditional lecture-based teaching: exam scores increased by about 6%, and students in traditional lecture courses were 1.5 times more likely to fail than students in active-learning courses. These results hold across all STEM disciplines. In fact, active learning is such a successful strategy that it has been recommended in several seminal publications, including How People Learn, published by the National Research Council (2000), and Scientific Teaching, endorsed by HHMI and the National Science Foundation’s Summer Institutes (Handelsman et al., 2007).

Because both treatments in the current study were taught using active-learning strategies, specifically the 5-E learning cycle, if active learning is the most influential factor in student learning, we would not expect to see substantial differences in student learning. Results in the current study support this prediction. Several researchers recently reported that the flipped classroom facilitated learning more than the control with which they compared it (e.g., Strayer, 2012; Tune et al., 2013; McLaughlin et al., 2014). It is difficult to determine the causal factor in each of these successes, because the flipped model is being compared with a passive lecture model of instruction. Thus, it is possible that the flip is simply being used to facilitate the shift from more passive, traditionalist teaching to active-learning approaches. Our current results support this idea. When using an active-learning approach to instruction, flipping the classroom does not add any benefit to student learning. Students learning under both conditions performed equally well on unit exams, low-level recall of facts on the final exam, and high-level application problems on the final exam in addition to experiencing equal gains in scientific reasoning.

It is interesting that adding structure and application activities to the current active-learning model also did not increase benefits. In semesters before this study, an active-learning, 5-E learning cycle approach was used wherein engage, explore, and explain were generally done during in-class time, whereas elaborate and evaluate were most often exclusive to homework assignments. However, these homework assignments took place every third or fourth class period rather than directly following each day of instruction. In addition, parts of the concept application activities were often done together as a class. Thus, the course structure was considerably more flexible and placed fewer temporal demands on students. When final exam performance under this original approach was compared with that under the highly structured approach used in the current study, a significant difference was found only in students’ ability to recall factual information. While the flipped classroom approach did produce higher scores on low-level recall items than the original format of the class, the difference was small (57.8% vs. 63.0%). The flipped approach did not, however, produce higher scores than the nonflipped approach in the current study. This could simply be due to the more frequent review of content demanded by the structured approach, as would be suggested by the spacing effect (Ebbinghaus, 1885).

In the current study, attitudes toward the course did differ somewhat between treatments. First, students in the flipped classroom had a more negative opinion of the use of technology. While some of this sentiment may arise from the current platform used by the university, technology acting as a barrier to student learning and/or satisfaction is not new in the literature. Gender-specific difficulty with technology has been documented (Bray, 2007; Kuriyan, 2012). Those of differing socioeconomic status may have varying levels of familiarity with technology that can hinder their comfort level with a flipped classroom (Ching et al., 2005; Child Trends, 2012; Kassam et al., 2013). Many other factors may affect a student’s ability to succeed in a technology-enhanced environment. The cost to the student caused by technological impediments should certainly be weighed against the benefits of an approach that may or may not increase student learning over current active-learning approaches.

It does appear from student attitudinal responses that students in the flipped classroom had a better impression of assignments and activities being purposeful for their learning. This may indicate that the flipped model, which requires students to complete homework assignments in preparation for class instead of as a follow-up to class, gives students a greater sense of purpose in their at-home activities. It did not, however, increase the completion rate of these activities (see Table 4).

It is interesting that students in both sections perceived the homework assignments to be difficult and less beneficial to their learning than the activities done in class, regardless of whether the homework comprised content attainment or concept application. The majority of students in both sections ranked the in-class activities as most beneficial to their learning. Keep in mind that the activities done in class by the nonflipped section are equivalent to the at-home activities completed by the flipped section, and vice versa. The most plausible conclusion is that the presence of the instructor and/or peer interaction had a greater influence on students’ perceptions of learning than the activities themselves. This may be due to the adaptive feedback, that is, direct interaction with the instructor and/or teaching assistants, available in a face-to-face format, as opposed to the preset feedback available through the CMS. This finding argues against the hypothesis that instructor support matters more in one phase of learning than in the other. Rather, it supports the idea that instructor support matters, regardless of whether it is used to present the concepts initially or to help students apply them. The next step to confirm this hypothesis would be to compare either of the approaches in the current study with a strictly online course in which no instructor interaction is ever experienced.

Finally, in confirmation of the highly active quality of both the flipped and nonflipped conditions in the present study, students overwhelmingly recognized this quality in both courses and the distinct lack of lecture, as evidenced by their comments in the attitudes survey. Students in both conditions, but even more so in the flipped condition, listed the highly interactive nature of the course as the characteristic they liked the most (nonflipped: 43.5%; flipped: 59.2%). Disappointingly, however, a common improvement suggestion in both sections was to include more lectures (nonflipped: 21.7%; flipped: 12.8%). This supports a trend in resistance to inquiry-based learning documented by other researchers (e.g., Prince and Felder, 2007; Doyle, 2008).

CONCLUSIONS

A cost-effectiveness analysis is a valid way to analyze educational innovations to determine whether the effort is worth the benefit to students (cf. Bleichrodt and Quiggin, 1999). What is the “cost” of flipping a classroom? For the current study, the cost was in time and money. To produce a flipped classroom required the digitization of active, exploratory lessons for every class period. This required access to audiovisual equipment and many hours of recording and editing. In addition, the maintenance of the online system was a labor-intensive process requiring the full cooperation of an IT department that could provide student support around the clock. It also required the instructor to design application activities beyond what was currently being done in the classroom, a process that all educators know is extremely time intensive. Granted, some of these costs are large only at the outset, after which they diminish or disappear once curricular materials are created. However, maintenance and technology support are ongoing costs.

The cost to students should also be considered. In a flipped classroom, students are required to put in effort to learn the materials on their own before coming to class. Certainly, this is not a cost we would expect to be absent in a normal classroom. However, due to the variation in student learning abilities and styles, in a class in which students are required to initially learn the information on their own, wide variation in how much time and effort this process takes would be expected. In addition, a flipped classroom requires that students have access to technology, whether this is provided by the university (a substantial cost to the institution) or by the student (a substantial cost to the student). In addition to the actual cost of computers and Internet access, the cost of the knowledge to effectively navigate the technology was an issue encountered in the current study. Many of the student issues with the technology involved a lack of knowledge on the students’ part in using certain file types, Web browsers, and the CMS.

Socioeconomic status plays a large role in accessibility and prior exposure to technology both in the home and in the educational setting (Ching et al., 2005). This gap in access, termed the “digital divide,” is largely driven by socioeconomic status (Kassam et al., 2013). The Child Trends survey (2012) indicated that only 57% of U.S. children ages 3–17 had access to the Internet in their homes (85% had access to a computer). This suggests that as many as 43% of our incoming freshmen may not have had Internet access at home. In homes with incomes less than $15,000, the number of children with access to the Internet drops to 17%. Even in the highest income bracket, only 63% of children regularly used the Internet. Clearly, this digital divide may influence students’ overall comfort level with, and likely their achievement in, a flipped learning environment.

If the benefits of the flipped classroom far exceeded those of the current active-learning model of instruction, they would certainly outweigh the costs of implementation. However, such data are not yet available. The current study has taken a step toward providing the data necessary to conduct this cost-effectiveness analysis. Results show that, when all other variables are held constant, the differences between the flipped model and a nonflipped active-learning model are not statistically significant (see Figures 2 and 3). The current study does not support the hypothesis that the instructor’s role in content attainment versus concept application makes a meaningful difference in student learning.
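
The cost-effectiveness comparison described above is often summarized as an incremental cost-effectiveness ratio (ICER): the additional cost of an innovation divided by the additional effect it produces. We include it only as an illustrative formalization; the study does not compute one, and the symbols C (cost, e.g., per course offering) and E (effect, e.g., mean final exam score) are our own labels.

$$
\mathrm{ICER} = \frac{C_{\text{flipped}} - C_{\text{nonflipped}}}{E_{\text{flipped}} - E_{\text{nonflipped}}}
$$

In the present study the numerator is positive (recording, editing, CMS maintenance, and IT support), whereas the denominator, for example 67.9% − 66.6% = 1.3 percentage points on the final exam total, was not statistically significant. Its confidence interval therefore includes zero, so the ratio does not yield a stable estimate of added learning per unit of cost.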

This study certainly does not discount the value of the flipped approach. If active learning is not currently being used or is being used very rarely, the flipped classroom may be a viable way to facilitate the use of such approaches, if the costs of implementation are not too great. As the research indicates, using active learning in the flipped approach can increase student learning as well as satisfaction over traditional, non–active learning approaches (e.g., Bergstrom, 2011; Strayer, 2012; Tucker, 2012; Tune et al., 2013).

The results of this study are a preliminary attempt to address a major issue involved with the implementation of a new and exciting educational innovation, that is, the causal mechanisms involved in the flipped learning approach. Specifically, this study aimed to address the role of the instructor while controlling for the effects of active learning. Because active learning is a well-documented improvement over the traditional lecture approach (Freeman et al., 2014), in order to test the effectiveness of a flipped model that necessarily involves active learning, it is imperative that it be compared with a control model that uses active learning. In doing this comparison, the current study suggests that the flipped approach offers no additional benefits to student learning over a nonflipped, active-learning approach. This is the first step in our ongoing research to parse out the causal mechanisms involved in this new methodology. Our future research will involve testing these same causal mechanisms across a wide variety of classrooms and student bodies to confirm the universality of our findings and to shed further light on a new educational innovation.

ACKNOWLEDGMENTS

Funding from an internal Mentoring Environment Grant supported this project. We thank the many graduate and undergraduate students who assisted in the development of curriculum for this project: Amy Buxton, Stephanie Cox, Ted Piorczynski, Jordan Hatch, Ephraim Taylor, Andrew Schmutz, Dallas Ralph, and James Dalgleish.