Testing CREATE at Community Colleges: An Examination of Faculty Perspectives and Diverse Student Gains

Abstract

CREATE (Consider, Read, Elucidate the hypotheses, Analyze and interpret the data, and Think of the next Experiment) is an innovative pedagogy for teaching science through the intensive analysis of scientific literature. Initiated at the City College of New York, a minority-serving institution, and regionally expanded in the New York/New Jersey/Pennsylvania area, this methodology has had multiple positive impacts on faculty and students in science, technology, engineering, and mathematics courses. To determine whether the CREATE strategy is effective at the community college (2-yr) level, we prepared 2-yr faculty to use CREATE methodologies and investigated CREATE implementation at community colleges in seven regions of the United States. We used outside evaluation combined with pre/postcourse assessments of students to test related hypotheses: 1) workshop-trained 2-yr faculty teach effectively with the CREATE strategy in their first attempt, and 2) 2-yr students in CREATE courses make cognitive and affective gains during their CREATE quarter or semester. Community college students demonstrated positive shifts in experimental design and critical-thinking ability concurrent with gains in attitudes/self-rated learning and maturation of epistemological beliefs about science.

While 81.4 percent of students entering community college for the first time say they eventually want to transfer and earn at least a bachelor’s degree, only 11.6 percent of them do so within six years. A central problem is that two-year colleges are asked to educate those students with the greatest needs, using the least funds, and in increasingly separate and unequal institutions. Our higher education system, like the larger society, is growing more and more unequal. We need radical innovations.

Century Foundation, 2013

INTRODUCTION

Community colleges play a key role in the American education system (Fletcher and Carter, 2010; Labov, 2012). They serve a wide-ranging student population that includes veterans, high school graduates not yet academically prepared for college-level course work, and older adults seeking new skills for technically demanding jobs. Many K–12 teachers begin their training at the community college (2-yr) level (Townsend and Ignash, 2003; Barnett and San Felice, 2005), and close to 50% of such teachers currently receive some training at 2-yr institutions (National Science Board, 2006).

While many 2-yr students aim to transfer to 4-yr colleges, others go directly into the workforce. Employers consistently state that job candidates need to think creatively, apply existing knowledge to new situations, solve problems, and work effectively in diverse teams (Binkley et al., 2011; Association of American Colleges and Universities, 2014). The skill set 2-yr graduates will need for career success is thus distinct from the skill set associated with traditional teaching and learning in science, technology, engineering, and mathematics (STEM) courses. Innovative and affordable pedagogical approaches that succeed at 2-yr institutions will have a disproportionate effect on multiple subsets of students currently underrepresented in STEM and in the workforce.

CREATE (Consider, Read, Elucidate hypotheses, Analyze and interpret the data, Think of the next Experiment) is an innovative strategy for teaching and learning in STEM disciplines (Hoskins et al., 2007). CREATE courses do not follow a textbook-prescribed curriculum; rather, faculty redesign their existing courses around primary or other scientific literature using CREATE strategies (Table 1; Hoskins and Stevens, 2009). A CREATE learning environment challenges students to read closely, think independently, and analyze scientific ideas with confidence. Large amounts of science content are reviewed. Late in the semester, email communication with paper authors further facilitates students’ understanding of “research life.” This approach both demystifies journal articles and humanizes scientists, helping to dispel negative preconceptions that may forestall students’ persistence in science studies or serious consideration of research careers. In previous work, CREATE courses produced cognitive and affective gains in students at a diverse group of 4-yr colleges/universities (Hoskins et al., 2007, 2011; Gottesman and Hoskins, 2013; Stevens and Hoskins, 2014).

| Pedagogical tool | Students’ activities | Sample assignment |
|---|---|---|
| Concept mapping | • Explicitly relate old and new knowledge • Build metacognitive skills | • Concept map the first paragraph of an assigned article • Concept map a set of terms provided by instructor, connecting textbook reading and assigned article |
| Cartooning | • Learn to visualize how data were generated in the lab or collected in the field • Create a context for the data analysis | • Illustrate how the study outlined in a particular article was carried out in the lab or field |
| Annotating figures; transforming tables | • Engage closely with data by triangulating between figures, tables, methods, and results | • Add labels to figures or charts in an assigned article, based on information provided in caption, narrative, or (if present) methods section |
| Analyzing data using templates | • Determine the organization/logic of each experiment • Interpret results critically; evaluate the purpose and need for controls • Specifically define and interpret control vs. experimental conditions | • Paraphrase title of each figure/table • Define purpose of each substudy for which data are presented in a figure or table |
| Grant panel activity | • Practice creativity and synthetic thinking • Hone critical skills of analysis • Develop argumentation and communication skills through deliberation of proposed experiments • Recognize the dynamic nature of scientific progress | • Design two distinct follow-up experiments or research studies • Conduct a grant panel review of student follow-up experiments: students work in small groups, tasks include first defining criteria for judging proposed experiment, then reaching consensus on which ones should be “funded” |
| Email surveys of paper authors | • Gain insight into the people behind the papers • Recognize that scientists have diverse life histories • Change negative preconceptions of scientists and research careers | • Annotate email responses, noting what was most surprising and/or intriguing • Compare/contrast responses of different authors • Write a reflection focused on personal reactions to the authors’ responses |

We reasoned that our tested adaptations of CREATE for first-year college students (Gottesman and Hoskins, 2013) might be effectively applied to community college students. Like many 2-yr students nationally, a substantial fraction of students at City College of New York (CCNY), the minority-serving institution (MSI) where the CREATE strategy was developed, are the first in their families to attend college and face similar challenges (e.g., financial, academic preparation). Herein, we report a study of CREATE implementation at seven community colleges throughout the United States. We tested two related hypotheses: 1) 2-yr faculty trained in a 4.5-d intensive CREATE workshop will teach effectively with the CREATE strategy in their first attempt, and 2) 2-yr students will make cognitive and affective gains paralleling those we have seen previously at 4-yr institutions. We found that 2-yr faculty taught effectively using CREATE pedagogy in their initial attempt, and implementers reported that their students derived substantial benefits from the CREATE tool kit. Furthermore, students in these courses demonstrated diverse cognitive and affective gains.

METHODS

Study Participants

Community College Faculty.

We recruited community college faculty participants through meeting presentations (e.g., League for Innovation and National Association of Biology Teachers [NABT]), workshops (International Society for the Scholarship of Teaching and Learning [ISSoTL] 2010), visits to campuses, and direct mailings to 2-yr deans and/or department chairs. Faculty submitted written applications, and those selected spent 4.5 d in residence at Hobart and William Smith Colleges (Geneva, NY). Thirty-three community college faculty from 12 states participated in the June 2012 and 2013 CREATE faculty workshops.

For the implementation phase of the study, we invited all 2-yr participants to apply (via a written application) to be one of 10 “faculty implementers.” Approximately 40% of the 2-yr participants applied, generating a self-selected pool. In choosing implementers, we strove for geographic diversity and a mix of urban and rural community colleges. The principal investigators (PIs) guaranteed that participation would be anonymous and that individual institutions would not be identified in our publications. Some faculty stated that without such an assurance, they could not participate. In turn, implementers agreed to redesign a course and teach using the CREATE strategy in the postworkshop academic year. We present the outcomes from seven of the original 10 implementations; the other three were excluded for the following reasons. One faculty member withdrew from the study. On another campus, due to curricular restrictions, the CREATE course was structured as independent study. This is an interesting model but not directly comparable with the seven discussed herein. On a third campus, the PIs determined from transcripts of the conference calls with the implementer that CREATE tools were not applied consistently enough to make the course “a CREATE course.” The seven faculty implementers (all women, including one from a minority group currently underrepresented in STEM) each had taught for five or more years before the CREATE implementation (Hurley, 2014). Implementers received a stipend to compensate for time spent in course development, processing institutional review board (IRB) approval, conference calls, and administering student surveys.

Community College Students.

Six 2-yr CREATE implementers taught biology courses and one taught a psychology course (Table 2). We compared outcomes combined from all courses from 2-yr institutions (defined as “pool”) with outcomes from students in biology courses (defined as “Bio pool”). At the beginning of the term, faculty members described the study, offering students the option of participating anonymously or declining. Participation/nonparticipation had no bearing on student grades, and no participation points were awarded. Nonparticipants read course-related material during the time participating students took the anonymous surveys, which were administered during the first (pre) and final (post) weeks of the course. Participants invented unique “secret code numbers” to use on all their surveys, allowing the pairing of pre- and postcourse surveys from individual students for statistical analysis, without compromising anonymity (Hoskins et al., 2007). Most students reported their major/nonmajor status in response to a Student Assessment of Their Learning Gains (SALG) prompt. We thus defined subsets of “majors” and “nonmajors” within our data sets on each survey. Not all students were present for pre and post versions of every survey, and thus n differs among surveys. All courses included a mix of majors and nonmajors. Overall, of the total number of students studied, 62% were female and 30% were from minority groups currently underrepresented in STEM.
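Pairing anonymous pre- and postcourse responses by secret code can be sketched as follows. This is a minimal illustration under stated assumptions, not the study's actual procedure: each response is assumed to be a (code, score) tuple, the function names `index_by_code` and `pair_surveys` are hypothetical, and discarding any code that appears twice on the same survey is our own choice. Codes found on only one of the two surveys are dropped, which is one reason n differs among instruments.

```python
from typing import Dict, List, Tuple

Record = Tuple[str, float]  # (self-chosen secret code, score on one instrument)


def index_by_code(records: List[Record]) -> Dict[str, float]:
    """Map each code to its score, discarding codes that appear more than once."""
    scores: Dict[str, float] = {}
    duplicates = set()
    for code, score in records:
        key = code.strip()
        if key in scores:
            duplicates.add(key)
        scores[key] = score
    # An ambiguous code cannot be attributed to a single student, so drop it.
    for key in duplicates:
        del scores[key]
    return scores


def pair_surveys(pre: List[Record], post: List[Record]) -> Dict[str, Tuple[float, float]]:
    """Keep only students whose code appears on both the pre- and postcourse survey."""
    pre_scores, post_scores = index_by_code(pre), index_by_code(post)
    shared = sorted(pre_scores.keys() & post_scores.keys())
    return {code: (pre_scores[code], post_scores[code]) for code in shared}


# Hypothetical codes and scores
pre = [("k9x2", 3.0), ("m4q7", 4.5), ("z1z1", 2.0)]
post = [("k9x2", 4.0), ("m4q7", 4.5), ("p8r3", 5.0)]
print(pair_surveys(pre, post))  # {'k9x2': (3.0, 4.0), 'm4q7': (4.5, 4.5)}
```

Only the paired dictionary is passed to later statistics, so a student who skipped either survey never contributes an unmatched score.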

| College | Students (n) | % Women | % Minority | Majors (n) | Course | Instruction time | CTT | EDAT | SAAB survey | SALG survey | OE survey |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Community College 1 | 35 | 60 | 31 | Undecided 7; Chem 5; Premed 12; Biology 8; Math 3 | Principles of Biology II | 80 min (twice/week), 50 min (once/week), 170 min (lab/week) | √ | √ | √ | √ | √ |
| Community College 2 | 33 | 57 | 45 | Undecided 10; Biology 9; Business 2; Pol Sci 2; Arts 4; Language 2; Sociology 3; Health Sci 1 | Marine Biology (3 credits) | 80 min (twice/week) | √ | √ | √ | √ | √ |
| Community College 3 | 16 | 75 | 6 | Undecided 7; Nursing 2; Environment 1; Gen Studies 2; Biology 4 | General Biology (5 credits) | 170 min (twice/week, including lab) | √ | √ | √ | √ | √ |
| Community College 4 | 6 | 67 | 17 | Mol Bio 3; Marine Bio 1; Chem Eng 1; Hist/Path 1 | Molecular Biology (4 credits) | 120 min (three times/week) | √ | √ | √ | √ | √ |
| Community College 5 | 11 | 54 | 18 | Undecided 1; Gen Ed 3; Arts 3; Hum Serv 2; English 1; Kinesiology 1 | Intro Biology (3 credits) | 75 min (twice/week) | √ | √ | –ᵇ | √ | √ |
| Community College 6 | 15 | 73 | 20 | Psych 4; Pol Sci 2; Premed 3; Nursing 3; Arts 1; Biology 2 | Introduction to General Psychology (3 credits) | 180 min (one evening/week) | √ | √ | –ᵇ | √ | √ |
| Community College 7 | 7 | 57 | 57 | Education 3; Hosp Mgmt 1; Soc Work 1; Radiology 1; None 1 | Exploring Biology (3 credits) | 175 min (twice/week) | √ | –ᶜ | √ | –ᶜ | √ |
| Combined | 123 | 62 | 30 | | | | | | | | |

CREATE Faculty Development

Workshops.

The multiday June 2012 and 2013 workshops included faculty from 4-yr as well as 2-yr institutions (n = 24 faculty per workshop). Workshop sessions focused on the rationale for developing the CREATE strategy, alignment of CREATE tools with pedagogy literature, and examples of how to use CREATE in a variety of classroom situations (see Table 1). The workshops were designed to model a “CREATE course” experience, with faculty participants acting as “students,” working in small groups and completing assignments like those used by the PIs in CREATE courses. Some participants “student taught” a mock class, thereby practicing a CREATE activity designed for a future course. Each also developed a “CREATE roadmap” teaching plan. In their roadmaps, many 2-yr workshop participants adapted CREATE strategies for first-year college students, similar to the adaptations described in Gottesman and Hoskins (2013). Examples can be found at www.teachcreate.org.

Course Implementation.

The PIs (K.L.K. and S.G.H.) supported implementers in several ways. Precourse, the PIs reviewed syllabi and course design, providing advice on pace, assignments, and data collection, and worked with each implementer to obtain local IRB approval. During implementation, PIs provided direct support through periodic conference calls (30–45 min; PIs and single implementers); the first occurred early in the CREATE implementation, the second in mid- to late term. These discussions allowed PIs to capture details of faculty experiences and to provide formative feedback. Implementers were encouraged to contact PIs for advice on issues as they arose. Postcourse, PIs held a third conference call with small groups (PIs plus two or three implementers), allowing 2-yr faculty to compare experiences and exchange ideas for future CREATE courses.

Data Collection and Analysis

Faculty Experiences.

We used outside evaluation coupled with faculty self-reporting to examine how faculty responded to the CREATE implementation (Figure 1). As in our recent study testing CREATE in a range of 4-yr colleges/universities (Stevens and Hoskins, 2014), Marlene Hurley, PhD, served as the outside evaluator (OE). Dr. Hurley used a modified Weiss Observation Protocol for Science Programs (Weiss et al., 1998) for her three observations of implementer teaching on each campus and interviewed each implementer after the third observation (see the Supplemental Material for details).

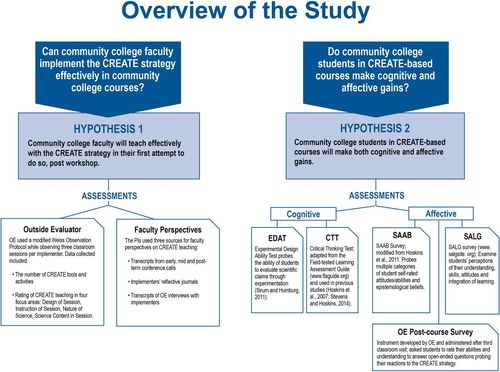

Figure 1. Overview of the study. Assessments (hypothesis 2): EDAT, Experimental Design Ability Test; CTT, Critical-Thinking Test; SAAB, Survey of Student Attitudes, Abilities, and Beliefs; SALG, Student Assessment of Their Learning Gains.

The PIs independently analyzed faculty reactions to CREATE teaching using 1) transcripts of conference calls, 2) faculty members’ reflective journals, and 3) the OE’s transcribed interview responses. We used approaches developed previously for analysis of postcourse student interview data (Hoskins et al., 2007). We transcribed the initial conference calls and used a constant comparison approach to examine themes that recurred in discussions with additional faculty. Subsequent call transcripts were analyzed to track the extent to which they aligned with or differed from the themes defined by the initial cohort (Glaser and Strauss, 1967; Ary et al., 2002). We used a similar approach with the implementer interview data compiled independently by the OE.

Student Cognitive Tests and Affective Surveys

Experimental Design Ability Test (EDAT).

The EDAT tool probes the ability of students to evaluate scientific claims through experimentation (Sirum and Humburg, 2011). Two independent scorers graded all EDAT responses using a standard rubric; scorers did not know if student responses were from pre- or postcourse tests. Initial scores were compared and discussed to determine the degree of concordance. Once scorers achieved a high level of agreement (Pearson’s r > 0.8; Best and Kahn, 2006), scores were averaged for each student response.
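The agreement check and averaging step can be sketched as follows. This is a hypothetical illustration, not the scorers' actual workflow: the function names and scores are invented, and only the r > 0.8 threshold comes from the text.

```python
import math
from typing import List


def pearson_r(x: List[float], y: List[float]) -> float:
    """Pearson correlation between two scorers' ratings of the same responses."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when a scorer gives every response the same score")
    return sxy / math.sqrt(sxx * syy)


def averaged_scores(x: List[float], y: List[float], threshold: float = 0.8) -> List[float]:
    """Average the two scorers' ratings, but only once agreement exceeds the threshold."""
    r = pearson_r(x, y)
    if r <= threshold:
        raise ValueError(f"r = {r:.2f}; discuss the rubric and rescore before averaging")
    return [(a + b) / 2 for a, b in zip(x, y)]


scorer_a = [2, 4, 5, 7, 3]  # hypothetical EDAT scores, scored blind to pre/post status
scorer_b = [3, 4, 6, 7, 2]
print(round(pearson_r(scorer_a, scorer_b), 3))  # 0.915
print(averaged_scores(scorer_a, scorer_b))      # [2.5, 4.0, 5.5, 7.0, 2.5]
```

Pearson's r measures whether two scorers rank responses consistently, not whether they assign identical scores, so a constant offset between scorers would not lower r. This is why discussion of the initial scores remains useful alongside the correlation.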

Critical-Thinking Test (CTT).

This tool was adapted from the Field-tested Learning Assessment Guide (FLAG; www.flaguide.org) and was used in previous studies (Hoskins et al., 2007; Stevens and Hoskins, 2014). The four-question survey was designed to probe students’ ability to evaluate data, draw conclusions, and justify them. Three questions are derived from the “General Science/Conceptual Diagnostic Test/Fault Finding and Fixing/Interpreting and Misinterpreting Data” section of the FLAG website, and a fourth question was designed in a previous CREATE study to focus on biological data analysis. Each problem presents data and proposes a conclusion. Respondents are asked to write a short response stating whether they agree with the proposed conclusion and, based on the data presented, stating why or why not. Students’ logical and illogical justifications were scored by two independent scorers who were blind to whether a survey was pre- or postcourse, using a rubric developed in previous work (Hoskins et al., 2007). As with the EDAT, concordance between scorers was determined and reconciled for an initial set of responses. Scores were then averaged for each respondent.

Survey of Student Attitudes, Abilities, and Beliefs (SAAB).

This tool probes multiple categories of student self-rated attitudes or abilities and epistemological beliefs. The instrument includes three summary Likert-style statements directed at students’ sense of their ability to read/understand journal articles, their understanding of the research process, and the degree to which they believed reading literature had influenced their understanding of the research process. The SAAB was developed previously through factor analysis of data obtained at a 4-yr institution (Hoskins et al., 2011). We used Cronbach’s alpha to examine interitem reliability of statements within each scale and present outcomes for categories in which Cronbach’s alpha was 0.7 or above.
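The interitem reliability criterion can be illustrated by computing Cronbach's alpha for one category, α = k/(k − 1) × (1 − Σ item variances / variance of summed scores), where k is the number of statements. The ratings and function name below are hypothetical; only the 0.7 cutoff comes from the text.

```python
from statistics import variance
from typing import List


def cronbach_alpha(responses: List[List[float]]) -> float:
    """Cronbach's alpha for one scale; responses[i][j] is respondent i's rating of item j."""
    k = len(responses[0]) if responses else 0
    if k < 2 or len(responses) < 2 or any(len(row) != k for row in responses):
        raise ValueError("need at least two respondents and a rectangular table of two or more items")
    # Sample variance of each item across respondents
    item_vars = [variance([float(row[j]) for row in responses]) for j in range(k)]
    # Sample variance of each respondent's summed scale score
    total_var = variance([float(sum(row)) for row in responses])
    if total_var == 0:
        raise ValueError("alpha is undefined when every respondent has the same total")
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical 5-point Likert ratings: four respondents x three statements in one category
ratings = [[4, 5, 4], [2, 3, 3], [5, 5, 4], [3, 3, 2]]
alpha = cronbach_alpha(ratings)
print(round(alpha, 3), alpha >= 0.7)  # 0.927 True
```

Because item and total variances are both sample variances, the denominator choice cancels in the ratio; a category would be reported only when alpha meets the 0.7 threshold.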

SALG.

This survey is a modifiable online instrument available free of charge to the science education community at www.salgsite.org/instructor. We designed a SALG survey to probe diverse aspects of 2-yr CREATE courses. Implementers administered the survey pre/postcourse. For analysis, we pooled responses to statements within individual categories, calculated means and standard deviations in Excel, and carried out Wilcoxon signed-rank tests and effect-size (ES) calculations (Maher et al., 2013).
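A Wilcoxon signed-rank test on paired pre/post responses, with an accompanying effect size, can be sketched in plain Python as follows. This is an illustration under assumptions, not the study's own calculation: it uses the large-sample normal approximation with a tie correction and no continuity correction, which is rough for small samples, and it computes the effect size as r = z/√n, one common choice for this test that we have not confirmed matches the ES values reported here. The function names and scores are hypothetical.

```python
import math
from typing import List, Tuple


def wilcoxon_signed_rank(pre: List[float], post: List[float]) -> Tuple[float, float, float, int]:
    """Return (W+, z, two-sided p, n) for paired pre/post scores.

    Normal approximation with a tie correction and no continuity correction;
    zero differences are discarded before ranking.
    """
    if len(pre) != len(post):
        raise ValueError("pre and post must be paired, equal-length lists")
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    # Rank |difference| from smallest to largest; tied values share their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for m in range(i, j + 1):
            ranks[order[m]] = (i + j) / 2 + 1  # ranks are 1-based
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)  # sum of ranks of gains
    mean_w = n * (n + 1) / 4
    var_w = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean_w) / math.sqrt(var_w)
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))
    return w_plus, z, p_two_sided, n


def effect_size_r(z: float, n: int) -> float:
    """Assumed effect size r = z / sqrt(n), with n the number of nonzero pairs."""
    return z / math.sqrt(n)


pre = [3, 4, 2, 5, 3, 4, 2, 3]   # hypothetical category scores, precourse
post = [4, 6, 3, 5, 5, 3, 4, 4]  # the same students, postcourse
w, z, p, n = wilcoxon_signed_rank(pre, post)
print(w, round(z, 2), round(p, 3), round(effect_size_r(z, n), 2))  # 25.5 1.99 0.046 0.75
```

A rank-based test is a reasonable choice for Likert-type data, which need not be normally distributed; for samples as small as some single-campus courses, exact critical values would be preferable to the normal approximation shown here.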

OE Student Survey.

The OE administered a survey that included 10 Likert-type statements and three open-ended queries asking students to reflect on their views about CREATE as a teaching method and how CREATE classrooms compared with others. Responses from the seven campuses were scored independently by two individuals (initial concordance greater than 81%), and the scores were reconciled with the assistance of a third scorer.
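Assuming the reported concordance is simple percent agreement, the statistic and the list of responses forwarded to a third scorer can be sketched as follows. The code labels, function names, and responses are hypothetical; the actual coding scheme for the open-ended queries is not given here. Unlike Cohen's kappa, percent agreement does not correct for agreement expected by chance.

```python
from typing import List, Sequence


def percent_agreement(a: Sequence[str], b: Sequence[str]) -> float:
    """Share of responses (in %) to which two scorers assigned the same code."""
    if len(a) != len(b) or not a:
        raise ValueError("scorers must code the same, nonempty set of responses")
    matches = sum(x == y for x, y in zip(a, b))
    return 100 * matches / len(a)


def to_reconcile(a: Sequence[str], b: Sequence[str]) -> List[int]:
    """Indices of responses the two scorers coded differently (e.g., for a third scorer)."""
    return [i for i, (x, y) in enumerate(zip(a, b)) if x != y]


# Hypothetical codes for ten open-ended responses
scorer_1 = ["pos", "neu", "pos", "neg", "pos", "pos", "neu", "pos", "neg", "pos"]
scorer_2 = ["pos", "pos", "pos", "neg", "pos", "pos", "neu", "pos", "neg", "pos"]
print(percent_agreement(scorer_1, scorer_2))  # 90.0
print(to_reconcile(scorer_1, scorer_2))       # [1]
```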

On completion of the CREATE term, faculty sent all course surveys and their own reflective journals to the PIs for analysis. Data from the seven campuses were scored and pooled. In some cases, fewer than seven campuses are represented in the data from a given instrument, as not all surveys were administered properly in every case. For example, on campuses where students did not use secret self-identifying codes on both pre- and postcourse surveys, those surveys were omitted from the analyses.

RESULTS

We examined two issues (Figure 1): whether 2-yr faculty implementers taught effectively with the CREATE strategy, postworkshop (test of hypothesis 1); and the degree to which students in 2-yr CREATE courses underwent cognitive and/or affective changes (test of hypothesis 2).

Results from Testing Hypothesis (1): Community College Faculty Teach Effectively with the CREATE Strategy in Their First Attempt to Do So, Postworkshop

We used two sources to evaluate how 2-yr faculty taught with the CREATE strategy in their first attempt: 1) OE observation of three class sessions per implementation and 2) faculty self-reporting of experiences captured by conference calls and reflective journals.

The OE evaluated four focus areas in each teaching observation: design of session, instruction of session, nature of science, and science content of session (Table 3; see the Supplemental Material for full protocol; Hurley, 2014). Overall, the means of the means for the 2-yr faculty cohort for each focus area were high (greater than 3.0 on a 5-point scale), indicating that the majority (60% or higher) of the observed class sessions reflected CREATE pedagogy. The OE reported that implementers used a broad range of CREATE tools and activities (Supplemental Table S1 and Supplemental Material).
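The “means of the means” summary can be sketched as follows, assuming each implementer's observed sessions were first averaged within a focus area and those per-implementer means were then averaged across the cohort. The ratings and campus labels are hypothetical, not the OE's data.

```python
from statistics import mean
from typing import Dict, List


def mean_of_means(ratings: Dict[str, List[float]]) -> float:
    """Average each implementer's observation ratings, then average those means.

    Each implementer counts equally, however many sessions were observed.
    """
    if not ratings or any(not obs for obs in ratings.values()):
        raise ValueError("every implementer needs at least one rated observation")
    return mean(mean(obs) for obs in ratings.values())


# Hypothetical 1-5 ratings for one focus area, three observed sessions per campus
design_ratings = {"CC1": [3, 4, 4], "CC2": [3, 3, 4], "CC3": [4, 4, 5]}
cohort_mean = mean_of_means(design_ratings)
print(round(cohort_mean, 2), cohort_mean > 3.0)  # 3.78 True
```

Averaging per-implementer means, rather than pooling all sessions, keeps any single implementer from dominating the cohort summary if observation counts differ.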


We analyzed OE interview transcripts to determine faculty reactions to the use of CREATE pedagogy. There was general consensus among the implementers that the use of CREATE promoted students’ inquiry and analysis: “It is the critical thinking version of active learning about the process of science,” noted one faculty member, whereas another stated, “In this course, they actively think about things, analyze data and critically evaluate” (Table 4). Responses to questions about the “difficulties/challenges” of teaching with CREATE were more varied. Three main issues emerged among the responses: 1) concern about content coverage, 2) finding appropriate articles, and 3) student preparation for class activities (Tables 4 and 5). Faculty reported a range of “favorite aspects” of CREATE; cartooning (sketching) and small-group discussions were chosen by several faculty. Two faculty implementers voiced contrasting views in reference to the grant panel component, with one identifying it as the “most” favorite and the other as the “least” (Table 5). All seven faculty implementers commented that teaching with the CREATE strategy had shifted their own views about teaching and learning. One implementer stated, “I have developed an appreciation for how little they understand. I’m asking them things that show their lack of understanding.” A different faculty member reflected, “It has brought me back to the core of the scientific method and teaching science. I realize that teaching needs more time for understanding.” A third implementer stated, “I’m OK that there is some content they won’t get, and I’m feeling OK about not having to teach so much stuff and knowing there is a tradeoff and they can always look stuff up,” thus providing some context about the “depth/breadth” concern.

| What were the benefits of using CREATE in your course? |
|---|
| • “CREATE makes them think more … It is a critical thinking version of active learning about processes of science” (CC1). |
| • “It exposes them to the process of doing science and gives them life skills” (CC2). |
| • “Students have to think” (CC3). |
| • “In this course, they actively think about things, analyze data and critically evaluate” (CC4). |
| • “Interactions,” “good attendance” (CC5). |
| • “[Students] develop questioning skills” (CC6). |
| • “CREATE helped them become better thinkers” (CC7). |
| What were the difficulties or challenges encountered when using CREATE? |
| • “It's really hard to find papers” (CC1). |
| • “I also have to cover content. Nonetheless, I have had to cut back on content. The tools were helpful, but I have had to make changes. I now grade homework because some students won't do it if it isn't graded” (CC2). |
| • “The students are at different levels” (CC3). |
| • “This course is lecture and lab integrated with a ‘to do’ lab list that didn't all get covered. Also finding a collection of papers is hard (if all are from the same lab)” (CC4). |
| • “Getting students to do their homework has been challenging, but checking it at the beginning of the class has helped greatly. Selecting articles too scary for students (i.e., containing math and too scientific) has been a problem” (CC5). |
| • “Students lack interest in the style of teaching” (CC6). |
| • “Implementing it fully. Full CREATE is not necessary for nonmajors. They read about five popular press articles before the article. I would like to use just bits and pieces” (CC7). |
| What about your own knowledge and beliefs? Have they changed as a result of using CREATE? |
| • “I have developed an appreciation for how little they understand. I’m asking them things that show their lack of understanding” (CC1). |
| • “It has brought me back to the core of the scientific method and teaching science. I realize that teaching needs more time for understanding—science teaches us about what is measurable” (CC2). |
| • “I am totally learning as I go; I think a lot about pedagogy and biology” (CC3). |
| • “CREATE has given me more confidence, especially with other courses using secondary literature. My critical thinking is sharper; I’m OK that there is some content they won't get, and I’m feeling OK about not having to teach so much stuff and knowing there is a tradeoff and they can always look stuff up” (CC4). |
| • “Before CREATE I was using small groups and discussion-based science. I never had the view that lecture-based teaching was the only way to go. CREATE has provided more guidance and a modern style of teaching” (CC5). |
| • “CREATE has validated my dreams of how an experiential classroom would look” (CC6). |
| • “Yes, something from the workshop changed my thinking—students who become critical thinkers need to produce something” (CC7). |

| What are your favorite aspects? | Your least favorite? |
|---|---|
| Cartooningᵃ | Finding good articlesᵃ |
| Small-group discussionᵃ | Tradeoff between “depth” and “breadth” |
| Grant panels | Grant panels (time needed) |
| Concept mapping | Paraphrasing sentences |
| Emails with scientists | Dealing with lack of preparation/independence |
| Faculty/student interactions; establishing rapport | |
| Transforming data (converting a table/chart) | |

Community College Faculty Perspectives on Teaching with CREATE

Conference Calls.

The initial conversations with 2-yr CREATE faculty focused on course pace and student expectations. Most implementers reported that they were not moving through their course syllabi as rapidly as they had planned. Many were concerned about whether they were “covering enough content.” Yet most implementers expressed that students seemed to be “going deeper and learning more.” Regarding a CREATE lab course, one implementer commented, “I think it’s going well. The students are responding pretty positively … they just needed a reason to learn the stuff. This approach to wanting to learn stuff and know more about stuff … is one of the benefits … I would never expect a bunch of sophomores to be able to stand up at the board and talk about ‘endogenous gene repression’ and feel comfortable with it. But they are.” The other theme emerging from these discussions focused on the challenges of shifting student attitudes and expectations. One implementer stated, “They [students] don’t think they can learn unless told by a teacher.” Some faculty reported that their students were confused by the notion that the course would focus on scientific literature, with their textbook used mainly as “reference material,” and that they would not be taught by the lecture/PowerPoint approaches to which they had been accustomed. On two of the seven campuses, some individual students strongly resisted CREATE. A few students objected to group activities, asserting that it was the teacher’s job to provide the information, and the student’s job to receive it. Such resistance in response to teaching innovations, reflecting naïve epistemological views about the nature of knowledge, is not unusual (Dembo and Seli, 2004; Seidel and Tanner, 2013). Interestingly, faculty felt that nonmajors overall were less daunted by the changes in pedagogical approach than were the majors.

As implementations progressed, faculty reported greater confidence with CREATE: “I can see how it has such a positive effect on the way they’re thinking critically.… It’s a real difference.” Some reflected that, in previous semesters, their students would say nothing in class, but that now “arguments break out.” Faculty also noted that students brought information from other courses to bear on their discussions in the CREATE course. Implementers described the “tangents” they took in class as decreasing their overall coverage of topics, yet reported that such discussions were nonetheless valuable. Several commented that cartooning distinguished students who comprehended experimental methods from those who did not. Some faculty observed that, as students were becoming more independent, classroom sessions became student driven. During the third, postimplementation conference call, faculty indicated that the CREATE strategy promoted student engagement and fostered broader participation in class discussions. Some observed the dynamic and unpredictable nature of CREATE classes to be a challenge compared with a lecture format, but one worth mastering.

Overall, faculty perceived that a large majority of their students reacted positively to the CREATE course, a perception mirrored by student opinion data and complemented by evidence of students’ cognitive/affective gains (discussed below). At two campuses, individual students remained disaffected throughout the entire term. One implementer was made aware that a student had anonymously notified the administration of their dissatisfaction. In contrast, a faculty member on a different campus reported that “my student reviews were better than they have ever been” for a nonmajors course and noted that persistence rate was unusually good during the CREATE semester, with only 10% of the class dropping, compared with greater than 80% in previous semesters.

Reflective Journals.

Faculty journals provided context for issues described in the conference calls. Faculty described feeling excited/nervous before the course began, often pleasantly surprised in early weeks at student reaction (despite occasional individual student resistance), and concerned over the pace of the course. For example, one implementer described her decision to change her approach to what was usually a long lecture on biochemistry: “After seeing them all work together yesterday I couldn’t see standing in front of them and talking for 3 h.” As the term progressed, faculty described feeling more confident about “loosening the reins” and trusting the students to participate in their own learning. Some noted that their students were surprised that their portfolio work was not graded daily or that everything they did for class (e.g., a group concept map) was not awarded points. In this vein, some students did not follow through with assignments. One implementer wrote, “It’s really difficult to teach this way when students don’t come to class prepared.” Some reported that they had spent too much class time doing “group homework” that should have been done in advance. Faculty commented on the challenge of designing CREATE curricula for nonmajors courses with minimal prerequisites: “I had to give myself leeway … to keep it challenging enough for the top students, do-able for the struggling students, and interesting enough for everyone. I picked progressively [more] complex papers.… What I discovered [was that] students had a larger capacity to explore, think creatively and critically, and create new ideas than I thought was possible. After a while I figured out when to just ‘get out of the way’ of their learning.”

Many faculty referenced the impact of the CREATE tools: “For pre-lab, students cartooned the experiment. It’s by far the fewest questions I’ve had to answer during lab. They knew what they were doing.” The contrast between student engagement in a CREATE class and in a lecture class was evident to this implementer: “They did such a good job [today] thinking and struggling and fighting their way through. But when we finished with the membrane paper and I pulled up a PPt presentation to go over a few more things, there was an audible sigh (which at first I thought was hatred for PPt) until someone said ‘thank goodness’; their brains hurt and they were happy to sit back and let me do the thinking.” Other faculty commented that “they [students] strengthened their opinions through discussing the evidence.” Broader class participation was frequently mentioned and appreciated for its importance. One implementer recorded in week 2: “Today [student] talked! He was an active participant in the class discussion. He really put a lot of effort into his ‘Babies recognize faces’ [a paper we recommend for introducing CREATE tools]. It was a total breakthrough and I feel like it wouldn’t have happened without the CREATE method.”

Results from Testing Hypothesis (2): Community College Students in CREATE-Based Courses Taught by First-Time Implementers Will Make Cognitive and Affective Gains

Cognitive Tests.

We found significant gains on the EDAT, with ESs of 0.29 and 0.34 for the overall pool and the Bio pool, respectively (Table 6). We also compared outcomes of nonmajors versus majors. Interestingly, on average, nonmajors as a group gained a full point on the nonlinear 10-point EDAT scale (pre average: 3.4; post: 4.5), while majors did not change significantly during the term (pre: 3.6; post: 3.4). This finding suggests that 2-yr CREATE courses strongly affect nonmajors’ understanding of experimental approaches, as well as their ability to design experiments (Table 6). Two of the six individual campuses showed significant EDAT gains (Supplemental Table S2 and Supplemental Material).


We found small but significant gains on the CTT. Pooled groups made fewer illogical claims on posttests, with stronger effects in the Bio pool (ES = 0.24) than in the overall pool (all respondents, ES = 0.12; Table 6). Subsets of biology majors and nonmajors both made fewer illogical claims postcourse. ESs were comparable in the two groups (0.25 and 0.24), but the effect was significant at p < 0.05 only for majors. No group made gains in the number of logical statements used, although on one individual campus such gains were made (Supplemental Table S3 and Supplemental Material). Four of the six individual campuses from which CTT data were obtained reported significant decreases in use of illogical justifications (Supplemental Table S3 and Supplemental Material).

While individual campus scores must be interpreted with caution due to small sample sizes, it is notable that campus 5, which showed no significant gain on CTT, showed large gains on EDAT, with ES = 0.95. Overall, each of the 2-yr biology courses showed individual gains on the EDAT or the CTT, or both (Supplemental Tables S2 and S3). Neither the EDAT nor the CTT was designed around content areas addressed in the individual courses from 2-yr institutions; improvements on these tests are therefore assumed to reflect gains made by students during the semester that could be applied in a broader context. To our knowledge, this is the first report of transferable skills attained in courses from 2-yr institutions focused on the use of primary literature.

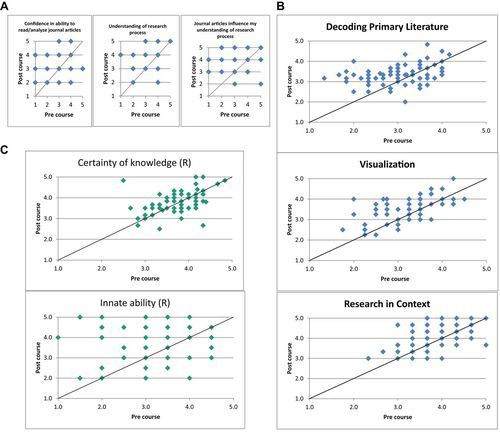

SAAB Survey.

Community college students’ views differed significantly post- versus precourse in the three SAAB summary categories, in three of the four attitude/ability categories, and in four of the seven epistemological categories (Figure 2, A–C, and Table 7). Students’ responses indicated that they perceived significant gains in the ability to read and understand primary literature (p < 0.0001, ES = 0.72) and in their understanding of the research process (p < 0.0001, ES = 1.0) and that reading scientific literature had informed their understanding of the research process (p < 0.0001, ES = 0.59; Figure 2A and Table 7). In individual attitude/ability categories, ES was largest for decoding primary literature (0.68; Figure 2B and Table 7, pooled n = 73). Interestingly, more significant pre/postcourse changes were made by the nonmajors cohort (n = 39) than by majors (n = 25; Table 8). Both groups made significant gains in the decoding primary literature and research in context categories, with moderate ESs (0.45–0.73). Nonmajors also changed significantly on visualization (ES = 0.65) and showed a small but significant change in the interpreting data category (ES = 0.23; Table 8). While these findings must be interpreted with caution due to the small sample size for majors, it is notable that, overall, the gains seen for nonmajors exceed those made by majors in SAAB self-rated attitude/ability categories.
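The ESs above can be approximately recovered from the means and SDs in Table 7. This section does not state the ES formula (it is presumably given in the Methods), so the sketch below assumes a Cohen's d in which the pre/post difference is divided by the root mean square of the pre- and postcourse SDs; the function name `cohens_d_prepost` is hypothetical. Because the tabulated means are rounded to one decimal place, recomputed values for some rows (e.g., interpreting data) can differ from the published ESs.

```python
import math


def cohens_d_prepost(pre_mean, pre_sd, post_mean, post_sd):
    """Standardized pre/post difference (Cohen's d).

    Assumption: pooled SD = sqrt((sd_pre**2 + sd_post**2) / 2). This is one
    common convention, not necessarily the one used in the study.
    """
    pooled_sd = math.sqrt((pre_sd ** 2 + post_sd ** 2) / 2)
    return (post_mean - pre_mean) / pooled_sd


# Rounded values as published in Table 7 (pooled SAAB cohort).
rows = {
    "Decoding literature": (3.0, 0.65, 3.4, 0.51),    # tabulated ES = 0.68
    "Visualization": (3.2, 0.67, 3.5, 0.62),          # tabulated ES = 0.46
    "Ability is innate (R)": (3.2, 0.82, 3.6, 0.82),  # tabulated ES = 0.49
}

for name, (m0, s0, m1, s1) in rows.items():
    # Prints 0.68, 0.46, and 0.49 for these three rows.
    print(f"{name}: d = {cohens_d_prepost(m0, s0, m1, s1):.2f}")
```

For these three rows the recomputed values match the table, which is consistent with (but does not prove) the assumed formula.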

Figure 2. (A) SAAB summary statements. The SAAB survey contained two summary Likert-style questions probing students’ self-rated ability to read/understand journal articles (1 = zero confidence; 2 = slight confidence; 3 = confident; 4 = quite confident; 5 = extremely confident) and their understanding of how scientific research is done (1 = no understanding; 2 = slight understanding; 3 = some understanding; 4 = understand it well; 5 = understand it very well). A third statement links the first two by asking the extent to which analyzing journal articles might have influenced students’ understanding of research (1 = no influence; 2 = very little influence; 3 = some influence; 4 = a lot of influence; 5 = major influence). Five 2-yr campuses represented, n = 73; all significant gains at p < 0.0001 (Wilcoxon signed-rank test; http://vassarstats.net/wilcoxon.html); ES = 0.72 for read/analyze journal articles; ES = 1.0 for understanding of research process; ES = 0.59 for journal articles influence my understanding of research process. (B) Community college student gains on self-rated abilities and attitudes. Each student response is represented as a dot with coordinates pre (x-axis) and post (y-axis). The reference line (y = x) indicates where responses would fall for students who answered the postcourse survey identically to the precourse survey. Responses above the line thus represent students who self-rated as having made postcourse gains, while responses below the line represent students who felt their skills diminished during the semester. The number of dots is smaller than the number of students because many responses are superimposed. n = 73, pooled; 1 = I strongly disagree; 2 = I disagree; 3 = I am neutral; 4 = I agree; 5 = I strongly agree. (C) Example of outcomes in SAAB epistemological categories. Scoring as in B, except that illustrated statements were reverse scored (R).
Responses above the y = x line represent more mature views (that knowledge can change and that ability is not innate); responses below the line represent less mature views. Statements in the “knowledge is certain” category did not change significantly during the CREATE semesters or quarter; students’ postcourse responses are quite similar to their precourse responses. Such stability in epistemological beliefs is not unusual. Statements in the “innate ability” category changed significantly during the CREATE quarter or semester, with postcourse students significantly more likely to disagree with an “ability is innate” statement. This finding suggests a strong impact of 2-yr CREATE courses on aspects of students’ epistemological beliefs about science. See the text for discussion and Tables 7 and 8 for additional data.
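The reading rules for the Figure 2B and 2C scatterplots can be sketched in a few lines. The example below is a hypothetical illustration: the function names and response pairs are invented, not study data. It assumes 1–5 Likert scoring, reverse-scores (R) items as 6 − x so that a point above y = x always indicates the more mature view, classifies each student's (pre, post) pair relative to the reference line, and counts distinct coordinates to show why superimposed responses leave fewer visible dots than students.

```python
from collections import Counter


def reverse_score(x, scale_max=5):
    """Reverse-score a 1..scale_max Likert response (e.g., 5 -> 1, 2 -> 4)."""
    return scale_max + 1 - x


def summarize_pairs(pairs, reverse=False):
    """Classify (pre, post) pairs relative to the y = x reference line.

    Returns a Counter with the number of responses above, on, and below the
    line, plus the number of distinct (pre, post) points visible as dots.
    """
    if reverse:
        pairs = [(reverse_score(pre), reverse_score(post)) for pre, post in pairs]
    counts = Counter()
    for pre, post in pairs:
        if post > pre:
            counts["above"] += 1  # self-rated gain, or more mature view
        elif post < pre:
            counts["below"] += 1  # self-rated loss, or less mature view
        else:
            counts["on"] += 1     # identical pre and post responses
    counts["distinct_dots"] = len(set(pairs))  # superimposed points collapse
    return counts


# Hypothetical raw responses to a reverse-scored "ability is innate" statement:
# moving from agree (4) to disagree (2) lands above the line after reversal.
raw = [(4, 2), (4, 2), (3, 3), (2, 4), (5, 4)]
print(summarize_pairs(raw, reverse=True))
```

Here five hypothetical students produce only four visible dots, because two of them gave identical pre/post answers.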

Table 7. SAAB category outcomes, pooled 2-yr cohort (R = reverse scored)

| SAAB category | n | Pre average (SD) | Post average (SD) | Wilcoxon p | ES |
|---|---|---|---|---|---|
| Decoding literature | 73 | 3.0 (0.65) | 3.4 (0.51) | <0.0001 | 0.68 |
| Visualization | 73 | 3.2 (0.67) | 3.5 (0.62) | <0.0001 | 0.46 |
| Interpreting data | 73 | 3.5 (0.89) | 3.6 (0.85) | 0.27 | 0.17 |
| Research in context | 73 | 3.9 (0.61) | 4.1 (0.63) | 0.0006 | 0.30 |
| Knowledge is certain (R) | 73 | 3.8 (0.48) | 3.8 (0.52) | 0.34 | 0 |
| Ability is innate (R) | 73 | 3.2 (0.82) | 3.6 (0.82) | 0.0065 | 0.49 |
| Science is creative | 73 | 4.1 (0.64) | 4.4 (0.62) | 0.0013 | 0.49 |
| Sense of scientists | 72 | 3.0 (0.87) | 3.6 (0.76) | 0.0001 | 0.72 |
| Sense of motives | 72 | 3.5 (0.79) | 3.9 (0.82) | 0.0006 | 0.49 |
| Outcomes known in advance (R) | 73 | 3.6 (1.10) | 3.7 (0.96) | 0.77 | 0.10 |
| Collaboration | 73 | 4.4 (0.92) | 4.4 (0.64) | 1.0 | 0 |

Table 8. SAAB category outcomes for majors (n = 25) and nonmajors (n = 39) (R = reverse scored)

| Category | Majors pre (SD) | Majors post (SD) | Majors Wilcoxon p | Majors ES | Nonmajors pre (SD) | Nonmajors post (SD) | Nonmajors Wilcoxon p | Nonmajors ES |
|---|---|---|---|---|---|---|---|---|
| Decoding primary literature | 3.0 (0.74) | 3.4 (0.56) | 0.044 | 0.51 | 3.0 (0.62) | 3.4 (0.52) | 0.001 | 0.73 |
| Interpreting data | 3.6 (0.79) | 3.7 (0.82) | 0.285 | 0.12 | 3.5 (0.93) | 3.7 (0.90) | 0.041 | 0.23 |
| Visualization | 3.3 (0.66) | 3.5 (0.60) | 0.254 | 0.32 | 3.1 (0.66) | 3.5 (0.67) | <0.0001 | 0.65 |
| Research in context | 3.9 (0.72) | 4.3 (0.63) | 0.004 | 0.61 | 3.9 (0.54) | 4.1 (0.60) | 0.026 | 0.45 |
| Certainty of knowledge (R) | 3.8 (0.45) | 3.8 (0.54) | 0.795 | 0.00 | 3.8 (0.50) | 3.9 (0.47) | 0.101 | 0.21 |
| Innate ability (R) | 3.1 (1.03) | 3.5 (0.91) | 0.162 | 0.42 | 3.3 (0.69) | 3.7 (0.76) | 0.009 | 0.57 |
| Creativity | 4.2 (0.72) | 4.6 (0.58) | 0.072 | 0.49 | 4.1 (0.60) | 4.4 (0.68) | 0.008 | 0.56 |
| Sense of scientists | 3.2 (0.87) | 3.7 (0.69) | 0.024 | 0.70 | 2.8 (0.82) | 3.5 (0.88) | 0.001 | 0.84 |
| Sense of motives | 3.6 (0.83) | 3.9 (0.90) | 0.142 | 0.35 | 3.5 (0.79) | 4.1 (0.74) | 0.002 | 0.81 |
| Known outcomes (R) | 3.4 (1.36) | 3.4 (1.00) | 0.912 | 0.00 | 3.7 (0.94) | 3.9 (0.80) | 0.332 | 0.22 |
| Collaboration | 4.6 (0.58) | 4.3 (0.63) | 0.150 | 0.25 | 4.4 (0.54) | 4.6 (0.55) | 0.050 | 0.33 |

A similar situation holds for the epistemological categories, in which both majors and nonmajors gain significantly in sense of scientists, but only nonmajors show significant gain in creativity, collaboration, sense of motives, and innate ability; the latter a reverse-scored statement in which the more epistemologically mature response is to disagree more post- than precourse (Figure 2C and Table 8). For nonmajors, ESs on sense of scientists and sense of motives are quite high (exceeding 0.8), arguing that this single course strongly affected 2-yr students’ views both of “who scientists are” and “why they do what they do” (Table 8). This finding is important, as it suggests that a single semester/quarter CREATE course can deeply alter students’ views of research/researchers.

As above, results must be interpreted with caution given small sample sizes, particularly in the majors group. For the creativity category, for example, ESs were comparable for majors (0.49) and nonmajors (0.56), though only the nonmajors showed significance at p < 0.05 by Wilcoxon signed-rank test. While ceiling effects may account for some of the lack of change in majors (e.g., most majors agreed precourse that science was creative and collaborative), overall, our findings from the comparison argue that CREATE courses have powerful effects on general-education science students as well as on STEM-interested students.
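Because the SAAB and SALG comparisons rest on the Wilcoxon signed-rank test (the study cites the VassarStats web calculator), a minimal pure-Python sketch of the test may help show why comparable ESs can reach significance in one cohort but not another. This is a hypothetical illustration, not a reimplementation of the VassarStats calculator: it assumes Wilcoxon's convention of discarding zero differences, gives tied |differences| their average rank, and uses the large-sample normal approximation with a tie correction, so exact small-sample p values may differ. Under this approximation z grows roughly with the square root of the number of nonzero pairs, so the same standardized shift tends to yield a smaller p value in a larger cohort. The data below are invented.

```python
import math


def wilcoxon_signed_rank(pre, post):
    """Two-sided Wilcoxon signed-rank test for paired scores (normal approx.).

    Zero differences are dropped; tied |differences| receive average ranks.
    Returns (w_plus, n_used, z, p_two_sided).
    """
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    n = len(diffs)
    if n == 0:
        raise ValueError("all differences are zero")
    # Rank absolute differences, averaging ranks within tie groups.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0  # sum of (t^3 - t) over tie groups, for the variance correction
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return w_plus, n, z, p


# Hypothetical pre/post Likert ratings for five students (one unchanged).
pre = [3, 3, 2, 4, 3]
post = [4, 4, 3, 4, 5]
print(wilcoxon_signed_rank(pre, post))
```

Readers working in SciPy could compare such results against `scipy.stats.wilcoxon`, keeping in mind that its options for zero differences and exact versus approximate p values affect the output.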

SALG Survey.

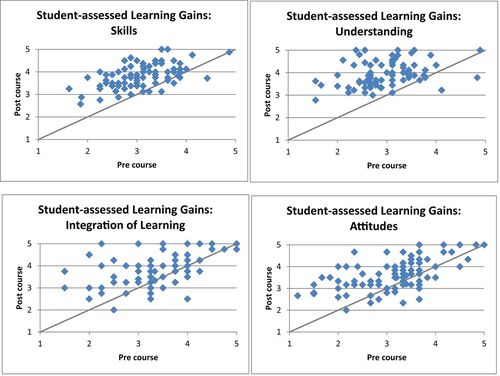

In each SALG category (understanding, skills, attitudes, integration of learning), pre/post differences were highly significant by Wilcoxon signed-rank test. ESs were 0.4 (attitudes), 0.7 (integration of learning), 1.2 (skills), and 1.5 (understanding; see Figure 3 and Table 9). These data suggest that students perceived the largest impact of their CREATE courses to have been on aspects of their understanding and skills, with a lesser but still substantial impact on their attitudes and integration of learning. Moderate ESs were seen on statements about using a critical approach in daily life or thinking about “How I know what I know” when studying, and a small ES on the statement regarding students’ thinking about whether they fully understood what they read (Table 9). These observations complement data from the OE report (Hurley, 2014). Community college students self-reported “improved understanding” as one of the major benefits of their CREATE course (Supplemental Table S4 and Supplemental Material), and 2-yr implementers perceived that their students developed deeper understanding when taught using CREATE strategies.

Figure 3. Pooled SALG results from six of the seven 2-yr campuses. Campuses 1–6 (Table 2); n = 85 students. Dots above the line represent students whose postcourse scores exceeded their precourse scores, and conversely; dots on the y = x line represent students who did not change in a particular category. In each broad category, substantial self-assessed gains with large ESs were seen postcourse compared with precourse. See the text for discussion and Tables 9 and 10 for individual statements and quantitation.


Within individual categories, significant change was seen on most substatements (Table 9). For the understanding category, gains were seen in almost every statement, with ESs moderate to large. Students also reported large gains on self-rated ability to analyze figures, to move from figures “back” to determining the hypothesis or question addressed by a figure’s data, and in their understanding of the nature of science. Moderate ESs were seen on statements about researchers as people and researchers’ motivations.

In the skills category, students rated themselves as having made gains in their ability to critically read/evaluate both primary and secondary/tertiary scientific literature, to recognize a sound argument, to cope with the jargon of scientific writing, and to design experiments (all large ES, statements 2.2–2.6; Table 9). Smaller gains (moderate ES) were reported for recognizing patterns in data, developing a logical argument, and working effectively with others.

With regard to confidence, students made significant gains with moderate ESs on three statements concerning their confidence in their critical analytical ability, reading ability, and experimental design ability, and gains with small ES on a statement about ability to decode data. No change was seen in statements about enthusiasm for science careers or interest in taking additional courses in the subject. The latter finding may relate to the fact that many students in the implementers’ courses were non-STEM majors and/or general education students likely taking a single science course to fulfill a requirement. In the integration of learning category, students made significant gains on all four substatements, with the largest ES (0.67) on the statement addressing whether they typically connected key ideas learned in class with other knowledge (Table 9, statement 4.1).

We also examined SALG outcomes based on the reporting of major versus nonmajor status on the SALG survey (Table 10). Notably, both nonmajors and majors made significant changes in all categories, with ESs larger for nonmajors in each category. For majors, ESs ranged from a low of 0.37 (attitudes) to a high of 1.34 (understanding); nonmajors in the same categories had ES = 0.70 and 1.68, respectively. These findings complement those outlined above for the SAAB survey and suggest that CREATE courses build confidence and self-rated understanding in both majors and nonmajors but have a particularly pronounced effect on nonmajor students. Such findings mesh with 2-yr CREATE faculty comments regarding their students’ gains in understanding.

Table 10. SALG category outcomes by reported major/nonmajor status

| Category / group | Pre (SD) | Post (SD) | Wilcoxon p | ES |
|---|---|---|---|---|
| Understanding | | | | |
| Majors | 3.0 (0.50) | 3.9 (0.71) | <0.001 | 1.34 |
| Nonmajors | 2.9 (0.70) | 3.9 (0.53) | <0.001 | 1.68 |
| All | 3.0 (0.63) | 3.9 (0.61) | <0.001 | 1.52 |
| Skills | | | | |
| Majors | 3.1 (0.68) | 3.7 (0.66) | <0.001 | 0.91 |
| Nonmajors | 3.0 (0.62) | 3.8 (0.54) | <0.001 | 1.37 |
| All | 3.1 (0.64) | 3.8 (0.60) | <0.001 | 1.16 |
| Attitudes | | | | |
| Majors | 3.4 (0.76) | 3.7 (0.80) | <0.001 | 0.37 |
| Nonmajors | 3.0 (0.83) | 3.6 (0.76) | <0.001 | 0.70 |
| All | 3.2 (0.82) | 3.6 (0.77) | <0.001 | 0.56 |
| Integration of learning | | | | |
| Majors | 3.4 (0.74) | 3.8 (0.78) | 0.004 | 0.46 |
| Nonmajors | 3.3 (0.74) | 3.9 (0.71) | <0.001 | 0.85 |
| All | 3.3 (0.74) | 3.8 (0.74) | <0.001 | 0.69 |

OE Student Survey Outcomes.

We evaluated student responses to the OE survey to determine how 2-yr students rated their overall experiences in CREATE courses. In response to the OE prompt “What aspects of C.R.E.A.T.E. have you liked best?,” students mentioned “concept mapping” and “cartooning,” two key CREATE tools, with highest frequency, and also noted CREATE’s effect on their understanding (Supplemental Table S4 and Supplemental Material). Students were most positive about group activities, cartooning, and the general CREATE approach (Table S4). These findings were complemented by analysis of student open-ended responses to the OE survey question: “How do you feel overall about the C.R.E.A.T.E. method and your science learning in this course, compared with other ways of teaching you have experienced?” On all campuses, the majority of comments (range: 57–83% on individual campuses) expressed preference for CREATE over other methods of instruction (Table 11). Overall, 70% of comments compared CREATE favorably with other methods of instruction, and the ratio of students preferring CREATE to those preferring other methods (typically “lecture”) was 5:1. On six campuses, fewer than 20% of students expressed preference for other teaching methods; on a single campus, 28% of students expressed such a preference. Some students declared themselves neutral on the issue (from 0 to 22% on individual campuses). Our findings argue that first-time 2-yr CREATE teachers taught courses that were perceived by a substantial majority of students to be more beneficial than other teaching styles they had experienced.

Table 11. Student responses to the OE survey question “How do you feel overall about the CREATE method and your science learning in this course, compared with other ways of teaching you have experienced?”

| Campus | Total responses | Positive (“I liked CREATE better than other teaching styles”) | Negative (“I like other ways better than CREATE”) | Neutral (no preference between CREATE and traditional teaching) |
|---|---|---|---|---|
| 1 | 32 | 62% | 16% | 22% |
| 2 | 29 | 76% | 7% | 17% |
| 3 | 14 | 57% | 28% | 14% |
| 4 | 6 | 67% | 17% | 17% |
| 5 | 14 | 71% | 7% | 21% |
| 6 | 11 | 82% | 9% | 9% |
| 7 | 6 | 83% | 17% | 0% |
| All | 112 | 70% | 13% | 17% |
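The “All” row of Table 11 and the 5:1 preference ratio can be cross-checked from the per-campus rows. The sketch below assumes that each published percentage was rounded from an integer count of comments, so that multiplying by the campus total and rounding recovers the count; these inferred counts are not reported in the source, and the names `TABLE_11` and `infer_counts` are illustrative. The inferred counts sum correctly for every campus, and pooling them reproduces the 70%, 13%, and 17% figures and a positive-to-negative ratio of about 5:1.

```python
# Per-campus data from Table 11: total responses and rounded percentages
# (positive, negative, neutral).
TABLE_11 = {
    1: (32, (62, 16, 22)),
    2: (29, (76, 7, 17)),
    3: (14, (57, 28, 14)),
    4: (6, (67, 17, 17)),
    5: (14, (71, 7, 21)),
    6: (11, (82, 9, 9)),
    7: (6, (83, 17, 0)),
}


def infer_counts(table):
    """Recover integer response counts from rounded percentages.

    Assumption: each published percentage equals round(100 * count / total),
    so round(total * pct / 100) recovers the count. Each campus's inferred
    counts are checked against its reported total.
    """
    counts = {}
    for campus, (total, pcts) in table.items():
        c = tuple(round(total * p / 100) for p in pcts)
        if sum(c) != total:
            raise ValueError(f"campus {campus}: counts {c} do not sum to {total}")
        counts[campus] = c
    return counts


counts = infer_counts(TABLE_11)
pooled = [sum(c[k] for c in counts.values()) for k in range(3)]
n_all = sum(pooled)
positive, negative, _ = pooled
print(n_all, pooled)                                # 112 [78, 15, 19]
print([round(100 * x / n_all, 1) for x in pooled])  # [69.6, 13.4, 17.0]
print(round(positive / negative, 1))                # 5.2
```

Recovering counts first, rather than averaging the rounded campus percentages weighted by campus size (which gives about 69.5% positive), avoids compounding rounding error.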

DISCUSSION

We have addressed novel questions about the efficacy of the CREATE strategy at the community college level, extending previous research focused on 4-yr institutions (Stevens and Hoskins, 2014). Specifically, we tested: 1) whether workshop-trained 2-yr faculty could transform and teach a CREATE-based course at their home institution, and 2) whether 2-yr students of such faculty would make cognitive and affective gains.

Hypothesis 1: Workshop-Trained Community College Faculty Will Teach Effectively with the CREATE Strategy in Their First Attempt to Do So, Postworkshop

We determined through OE evaluation that 2-yr faculty implementers were sufficiently prepared by an intensive 4.5-d workshop to use CREATE in their first attempt postworkshop. This finding was an important outcome given recent concerns about the efficacy of the workshop model for faculty development (Henderson and Dancy, 2007, 2011; Henderson et al., 2010, 2011; D’Avanzo, 2013). The OE evaluation did reveal variation among the seven implementers in their execution of the CREATE pedagogy. The transcripts of conference calls and reflective journals provided a deeper context for these observations. Many of the implementers, charged with “covering” a prescribed series of topics in introductory courses, struggled with the pace of CREATE. Even implementers who stated that they could see their students learning more and participating more actively and articulately expressed concerns over whether they had “covered enough.” We have argued elsewhere (Hoskins and Stevens, 2009) that the pace of information growth in the sciences makes “full content coverage” an impossible dream. Nonetheless, this issue remains a stressor and highlights the challenges of enacting Vision and Change recommendations: “Encourage all biologists to move beyond the ‘depth versus breadth’ debate. Less really is more” (American Association for the Advancement of Science, 2011, p. xv).

We learned that 2-yr faculty experienced more curricular constraints and restrictions than implementers from previous workshops who tested CREATE at 4-yr colleges/universities. One 2-yr faculty member applied CREATE in “course 2” of a three-course introductory biology sequence. This situation proved challenging for the implementer, as the student-centered CREATE format contrasted sharply with the traditional style of the other two courses. On some campuses, course “learning objectives” were not developed by course faculty but mandated by a state review board. The narrowness or breadth of such objectives (e.g., 12–15 major topics in a single term) influenced how CREATE could be implemented. Some implementers reported a wide range in the readiness of their first-year students for college-level work, or noted their students’ sporadic attendance as an issue.

The seven institutions of this study provided a small window into the diversity of community colleges, in terms of student body, institutional resources, curricular structures, and physical spaces. On some campuses, IRBs were absent, or IRB processes were ill defined, increasing the obstacles facing 2-yr faculty wishing to participate in an education study. Unexpected nonsupportive reactions from administrators were a factor for certain implementers. Some 2-yr faculty required dean or chair approval for any curricular changes; deans and chairs did not always agree. In other cases, we saw strong administrative support for the implementer.

Recent studies have reported positive gains made by 2-yr science faculty participating in professional development workshops (e.g., STAR; Gregg et al., 2013) and programs that train 2-yr faculty in the use of course-based research experiences for introductory science courses (Wolkow et al., 2014). Overall, our findings lend further support for calls to expand professional development opportunities for community college faculty. Furthermore, we hope that the experiences of the 2-yr implementers will encourage other 2-yr faculty to consider investing in the CREATE pedagogy.

CREATE-Based Community College Courses

Cognitive Tests.

Students’ experimental design ability has been little studied to date at the community college level and is a challenging issue for students at 4-yr colleges (Dasgupta et al., 2014). In previous CREATE courses at a 4-yr MSI, both first-year and upper-level students made significant gains as measured by the EDAT (Gottesman and Hoskins, 2013), as did the pooled 2-yr cohort in the present study. We hypothesize that gains in experimental design ability relate to the multiple experimental design and grant panel activities that characterize CREATE courses, as repetition and practice support learning (reviewed in National Research Council [NRC], 2000; Pellegrino and Hilton, 2012). Our finding that large gains were made by nonmajors was a surprise. We speculate that nonmajor students, who may be unexcited about their required science course, are pleasantly surprised to encounter open-ended assignments that encourage creativity and have no single “correct answer.” That the nonmajors undergo larger epistemological shifts than do the majors suggests that nonmajors are more likely than majors to rethink their precourse beliefs.

While “critical thinking” appears in many colleges’ mission statements and is considered an essential skill for graduates (Flores et al., 2012), a universally agreed-upon definition of the term is elusive. Our critical-thinking assessment, derived from the field-tested learning assessments developed as part of a National Science Foundation (NSF)-supported National Institute for Science Education project in 1996–2001 (www.flaguide.org), asks students to read charts or graphs with accompanying narrative, evaluate the data presented and conclusion stated, decide whether or not they agree, and write brief responses that draw conclusions and explain, with reference to the data, why these conclusions were reached. In this sense, we are assessing critical thinking as defined by Glaser (1941, pp. 5–6):

Critical thinking calls for a persistent effort to examine any belief or supposed form of knowledge in the light of the evidence that supports it and the further conclusions to which it tends. It also generally requires ability to recognize problems, to find workable means for meeting those problems, to gather and marshal pertinent information, to recognize unstated assumptions and values, to comprehend and use language with accuracy, clarity, and discrimination, to interpret data, to appraise evidence and evaluate arguments, to recognize the existence (or non-existence) of logical relationships between propositions, to draw warranted conclusions and generalizations, to put to test the conclusions and generalizations at which one arrives, to reconstruct one’s patterns of beliefs on the basis of wider experience, and to render accurate judgments about specific things and qualities in everyday life.

In an article describing available tools for programmatic assessment of critical thinking at community colleges, Bers notes: “Taylor (2004) presents a simpler definition of critical thinking used at community colleges: ‘Critical thinking is the kind of thinking that professionals in the discipline use when doing the work of the discipline’” (Bers, 2005, p. 2). In previous work using the CTT, we have documented critical-thinking gains in upper-level students at an MSI in courses taught by one of the PIs (Hoskins et al., 2007) and at R1, large public, and small private colleges/universities (Stevens and Hoskins, 2014) in courses taught by 4-yr faculty trained in previous extended CREATE workshops.

We found overall small but significant gains in critical-thinking ability in the pooled 2-yr cohort studied, with different degrees of change on different campuses. Students were more likely to decrease their use of illogical justifications than to increase their use of logical ones (Table 6, Supplemental Table S3, and Supplemental Material), and while ESs were comparable in majors and nonmajors, gains were significant by paired t test only in majors. Much of CREATE pedagogy focuses on “decoding” data presented in charts, graphs, and tables in scientific literature, and we speculate that students’ increased abilities in this area underlie their modest gains. Overall, less frequent use of “illogical” statements also suggests that students developed a better sense of how to construct a reasonable argument during the CREATE term.

Critical-thinking changes as related to specific curricular interventions have not been widely probed at the 2-yr level. However, some aspects of student thinking have received attention at 2-yr institutions or in cohorts of nonmajors at 4-yr institutions. For example, nonmajor 2-yr students’ reasoning ability improved more in inquiry-based than in traditionally taught courses, and improved reasoning skills led in turn to higher overall achievement (Johnson and Lawson, 1998). In a nonmajors biology course at a 4-yr college, “community-based inquiry” teaching, but not traditional instruction, resulted in gains on the California Critical Thinking Skills Test (Quitadamo et al., 2008).

We did not directly address learning of course-related information, for example, by using a biology or psychology concept inventory during the CREATE term. Nonetheless, we suggest that both the EDAT and the CTT ask students to transfer their understanding of how to analyze course-related data, and how to design experiments, to new, non–course-related contexts. Transferable thinking skills are traditionally considered both difficult to teach (Schwartz et al., 2005) and key to deep learning (NRC, 2000; Pellegrino and Hilton, 2012). Successful transfer of something learned in one context to a new context depends on factors including students’ practice, “learning with understanding,” and “learn[ing] how to extract underlying themes and principles from their learning exercises” (NRC, 2000, p. 237). Gains in cognitive measures suggest that students have gained transferable analytical skills; this perception was reflected in students’ self-report in this area as well.

Two recent reports on “21st century skills” (Finegold and Notabartolo, 2010; Binkley et al., 2011) list critical thinking, problem solving, creativity, and decision-making as “key skills.” Such skills are arguably developed through applying the CREATE tool kit in an iterative way. The Heart of Student Success report on community college educational practices (Center for Community College Student Engagement [CCCSE], 2010) expresses concern that too many courses challenge students simply to memorize rather than to engage in new experiences that could facilitate deep learning. Open-book testing in CREATE courses, together with the emphasis on close reading, data analysis, grant panels, and discussion of authors’ responses to student email survey questions (Hoskins and Stevens, 2009), is largely new in science classrooms at 2-yr institutions. We suggest that the diverse activities, feedback, and iterative nature of CREATE may underlie the gains documented above and contribute to 2-yr students’ development of key thinking skills that could transfer to future course work.

Affective Surveys.

The SAAB and SALG instruments allowed us to probe multiple affective changes associated with student experiences in 2-yr CREATE courses. Two major categories emerged from our analysis: gains in student self-rated understanding (e.g., knowledge of CREATE tool kit, processing skills involved in reading scientific publications and analyzing data, integration of knowledge) and shifts reflecting students’ self-described “beliefs and attitudes” (e.g., epistemological beliefs, metacognition, self-efficacy).

We acknowledge the complexities associated with self-report surveys. Several studies highlight the challenges of using student self-report data, particularly for estimates of academic ability or performance. Students may overestimate their skills (Dunning et al., 2003), reasoning ability (Lawson et al., 2007), performance on standardized tests (Cole and Gorga, 2010), and knowledge of biology concepts (Zeigler and Montplaisir, 2014). As noted by Lopatto (2007), when mentors and students independently rate students’ skills, estimates may or may not be well aligned (Falchikov and Boud, 1989; Kardash, 2000). Still, self-report assessments arguably provide valuable information not accessible by other means. Lopatto further comments, regarding a study of student reactions to undergraduate research experiences, that “[w]ithin the undergraduate research experience, however, there are learning and experience goals that may be most directly measured by student report. Estimates of personal development, including tolerance for obstacles, readiness for more research, and self-confidence, are best made by the person who has direct access to these estimates” (Lopatto, 2007, p. 305). We feel that a similar argument applies to students experiencing a new teaching method for the first time. Moreover, our study combines cognitive evaluations with affective surveys to allow triangulation of outcomes (Oliver-Hoyo and Allen, 2006).

Gains in Student Understanding of Processing Skills and CREATE Tool Kit.

We are encouraged to find that community college CREATE students report substantial positive shifts in self-rated ability to decode primary literature, visualize experiments, and understand the context in which research is done, per responses on the SAAB survey (Figure 2A and Table 8). While both majors and nonmajors cohorts make significant gains, the nonmajors show larger shifts than the majors in self-rated data interpretation and visualization abilities (Table 9). Similar outcomes emerged from the SALG survey: 2-yr nonmajors shift more than majors in the skills category (Tables 9 and 10). Collectively, our findings suggest that teaching with CREATE has a strong impact on diverse 2-yr student populations. Our affective findings parallel the work of Gormally and colleagues (2012), in which some cohorts of general education students made larger gains on a cognitive assessment (Test of Science Literacy Skills) than did majors.

Some studies indicate that self-reported gains correlate poorly with actual gains (e.g., self-reported vs. actual critical-thinking abilities; Bowman, 2010) but reasonably well with measures of motivation or satisfaction (Bowman, 2014). In this vein, we cannot (and do not) claim that students who rate themselves higher postcourse in the decoding primary literature category are actually better at this task. A separate assessment is needed to make this determination. Bowman argues that self-reported gains should be considered affective measures, which is the spirit in which we are using them in this study (Bowman, 2011).

At 4-yr institutions, CREATE courses have been shown to provide students with transferable skills and to increase their confidence in tackling scientific jargon, deconstructing a scientific study, and independently interpreting data (see CTT and postcourse interview data in Hoskins et al., 2007). In the present study, 2-yr students’ positive shifts in perceived abilities complemented gains in the critical analysis of data (CTT) and experimental design (EDAT). Arguably, the cognitive activities fostered by CREATE pedagogy are those commonly used by scientists in their research efforts. Thus, we see CREATE as a means by which faculty can facilitate the development of both skills and attitudes of critical importance to STEM careers.

Positive Shifts in Student Beliefs and Attitudes.

Students’ achievement may be strongly influenced by their preconceived ideas and attitudes about science (Oliver and Simpson, 1998; Pintrich, 2004). In particular, epistemological beliefs about the nature of knowledge are known to influence cognitive development (Perry, 1970; Baxter Magolda, 1992). Our SAAB data show that nonmajors made significant gains in five of seven epistemological categories, whereas majors made significant gains in only one (Table 8). A shift in epistemological beliefs suggests that students confronted challenges to their established understanding of science during their CREATE course. Changes in epistemological beliefs may influence students’ approaches to learning (Schommer, 1994). For example, if students believe that “ability is innate” and define themselves precourse as “C students,” they may be unwilling to make the effort that could earn them a higher grade. Students’ levels of engagement may also be affected by the maturity of their epistemological beliefs (Hofer, 2001). The epistemological maturation observed in 2-yr CREATE students thus has the potential to support students’ motivation and learning in future courses.

While no comparison groups were examined in this study, we have previously compared CREATE student cohorts with students in courses not taught with CREATE pedagogy at CCNY. We found that CREATE students in a “scientific thinking” course meeting 2.5 h/wk made a number of significant gains in SAAB categories, including epistemological ones. In contrast, non-CREATE students in a physiology course with lab meeting 5 h/wk made no significant shifts in any of the categories addressed by the SAAB (Gottesman and Hoskins, 2013). This finding supports our sense that students’ responses on such surveys reflect the impact of learning with the CREATE strategy rather than gains that would occur simply as a consequence of spending a semester in a science course.

Self-efficacy is a critical component of student learning in STEM courses (Baldwin et al., 1999; Trujillo and Tanner, 2014). Self-efficacy refers to the perceived level of confidence one has for performing a specific task or action within a defined domain (Bandura, 1997; Zimmerman, 2000; Pajares, 2005). Factors that contribute to self-efficacy are typically organized into four categories: mastery experiences, vicarious experiences, social persuasion, and physiological states (reviewed in Usher and Pajares, 2008). The mastery experience category aligns with our examination of student attitudes using the SALG survey. Community college students make significant gains with moderate to large ESs in the attitudes category (e.g., statement 3.3: “I am confident that I can ‘decode’ data presented in graphs, tables or charts”; Table 10). It is notable that a single course can substantially shift 2-yr students’ confidence both in their scientific-thinking ability and in their ability to apply CREATE tools successfully.
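To make the notion of “moderate to large ESs” concrete, the sketch below computes one common effect-size measure for paired pre/postcourse ratings and labels it with Cohen’s conventional benchmarks (0.2 small, 0.5 medium, 0.8 large). This section does not state which effect-size formula the study used, so the choice of the paired-difference variant (often written d_z) is an assumption, and the function names and sample ratings are hypothetical illustrations rather than study data.

```python
from statistics import mean, stdev


def cohens_dz(pre, post):
    """Paired effect size (assumed variant): mean of the per-student
    post-minus-pre differences divided by their sample standard deviation."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length lists with at least two students")
    diffs = [b - a for a, b in zip(pre, post)]
    return mean(diffs) / stdev(diffs)


def label(d):
    """Map |d| onto Cohen's conventional benchmarks."""
    d = abs(d)
    if d >= 0.8:
        return "large"
    if d >= 0.5:
        return "moderate"
    if d >= 0.2:
        return "small"
    return "negligible"


# Hypothetical Likert-scale ratings (1-5) for one survey statement,
# e.g., confidence in decoding graphs, from five students.
pre = [2, 3, 2, 3, 2]
post = [3, 4, 4, 3, 4]
d = cohens_dz(pre, post)
print(f"d_z = {d:.2f} ({label(d)})")
```

With these invented ratings the mean gain is 1.2 points and d_z is about 1.43, which would count as a large effect; other variants (e.g., dividing by the pooled pre/post standard deviation) give different values for the same data.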

Self-efficacy can influence persistence in college (Pajares, 2005), self-regulated learning behaviors (Vogt, 2008), and career aspirations (Rittmayer and Beier, 2008). Understanding how to improve or expand the self-efficacy of students in STEM courses is thus a worthy goal, especially at the 2-yr level. Recently, Amelink and colleagues (2015) reported gains in self-efficacy achieved by 2-yr college students participating in an 8-wk summer research experience at the University of California–Berkeley. We suggest that CREATE courses offer a complementary method for developing the self-efficacy of 2-yr students in STEM courses.

Finally, we note that the SALG survey also captured metacognitive changes in 2-yr students (Tables 8 and 9 and Supplemental Figure S1). Such changes suggest that CREATE courses promote a reflective approach to learning. Given the relationships among epistemological beliefs, attitudes, and behaviors (Rittmayer and Beier, 2008; Graham et al., 2013; Dweck et al., 2014), we are encouraged by the alignment of affective outcomes with cognitive learning gains. For example, faculty reported that their students understood more. Moreover, students perceived that they had gained understanding (e.g., ability to decode data, read charts/graphs, and design experiments; SALG/SAAB), and they demonstrated cognitive gains on closed-book assessments designed to challenge them to demonstrate such understanding. In a discussion of whether the Discipline-Based Education Research (DBER) community is “up to the challenge” of STEM education reform, Talanquer notes that, in addition to rethinking curricular design and instructional methods, core transformation of postsecondary STEM education also necessitates “the enrichments and transformation of the knowledge, beliefs, attitudes and behaviors of students, instructors and administrators” (Talanquer, 2014, p. 816). The ability of 2-yr faculty to transform their teaching with the CREATE strategy in a single term, producing diverse benefits for students, is a first step in this direction.

CONCLUSIONS

The Center for Community College Student Engagement has argued that all 2-yr faculty should have access to professional development to improve teaching effectiveness at the 2-yr level: “Research abounds about what works in teaching and learning. Instructors, however, must be given the opportunities necessary to learn more about effective teaching strategies and to apply those strategies in their day-to-day work” (CCCSE, 2010, p. 16). We found that CREATE workshop–trained 2-yr faculty can effectively implement the CREATE strategy and transform their teaching practices in their first attempt. Community college faculty implementers taught CREATE courses that evoked cognitive gains paralleling those observed previously in student cohorts from 4-yr institutions, strongly affected students’ sense of their abilities and science understanding, and increased students’ understanding of scientists and the research enterprise.

We recognize that potential resistance from students or institutions (Singer et al., 2012; Seidel and Tanner, 2013) can discourage faculty from changing how they teach. In response, we note that the majority of 2-yr students responded positively to the use of CREATE, with a 5:1 preference for CREATE over other teaching styles (Table 11). Faculty implementers voiced strong support for CREATE pedagogy in their first-person accounts of their experiences. We hope that these findings will encourage other 2-yr faculty to implement CREATE pedagogy in a variety of STEM courses.

Community college students face multiple challenges, including, in many cases, family responsibilities and full- or part-time jobs in addition to course work. As a population, these students are highly diverse, and if they completed STEM degrees, they could do a great deal to improve the representation of currently underrepresented minority students in STEM careers. Yet these students are less likely than those at 4-yr schools to remain in STEM majors and to graduate in a timely manner (Labov, 2012). Our findings suggest that CREATE courses leave 2-yr students with improved scientific-thinking and experimental design skills, greater confidence in their ability to read, analyze, and understand scientific literature, and a better understanding of scientists and “the research life.” Students who have a clearer sense of what scientists do and how they do it may be more likely to seek research experiences that can lead to STEM careers (in the case of majors) and to vote insightfully on issues of science as it influences public policy. As such, a CREATE course offers a low-cost way to transform community college students’ sense of science.

Accessing Materials

CREATE curricular resources (e.g., roadmaps) produced from the CREATE faculty development workshops can be found at the website teachcreate.org.

ACKNOWLEDGMENTS

This work was funded by DUE 1021443 “Extending CREATE Demographically and Geographically, to Test its Efficacy on Diverse Populations of Learners at 2 Year and 4 Year Institutions.” This project was approved by the CCNY IRB (protocol H-0633). We thank Samuel Deluccia, Melissa Haggerty, Courtney Franceschi, and Jamila Hoque for their efforts in supporting the faculty development workshops, and the events and conferences staff at Hobart and William Smith Colleges. We thank all faculty members who participated in the workshops, especially those who implemented CREATE, and the students in the courses we assessed. We thank the NSF TUES/IUSE program for support. We thank Leslie Stevens and Heather Bock for manuscript feedback. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the NSF.