Increasing the Use of Student-Centered Pedagogies from Moderate to High Improves Student Learning and Attitudes about Biology

Abstract

Student-centered strategies are being incorporated into undergraduate classrooms in response to a call for reform. We tested whether teaching in an extensively student-centered manner (many active-learning pedagogies, consistent formative assessment, cooperative groups; the Extensive section) was more effective than teaching in a moderately student-centered manner (fewer active-learning pedagogies, less formative assessment, without groups; the Moderate section) in a large-enrollment course. One instructor taught both sections of Biology 101 during the same quarter, covering the same material. Students in the Extensive section had significantly higher mean scores on course exams. They also scored significantly higher on a content postassessment when accounting for preassessment score and student demographics. Item response theory analysis supported these results. Students in the Extensive section had greater changes in postinstruction abilities compared with students in the Moderate section. Finally, students in the Extensive section exhibited a statistically greater expert shift in their views about biology and learning biology. We suggest our results are explained by the greater number of active-learning pedagogies experienced by students in cooperative groups, the consistent use of formative assessment, and the frequent use of explicit metacognition in the Extensive section.

INTRODUCTION

Over the past 30 yr, there has been a call to make undergraduate science classes more engaging and student centered (e.g., National Science Foundation [NSF], 1996; National Research Council [NRC], 1999, 2003; Stokstad, 2001; Wood, 2009). Recently, the report Vision and Change in Undergraduate Biology Education: A Call to Action (American Association for the Advancement of Science [AAAS], 2011) highlighted the need for more student-centered learning in undergraduate biology classes. The authors of the report recommend using pedagogies that engage students as active participants in a variety of class activities beyond lecture, facilitating student work in cooperative groups, and incorporating ongoing assessment of student conceptual understanding to provide feedback to both students and instructors. This call for reform is being heard by an increasing number of higher education faculty members who are implementing active-learning strategies and incorporating formative assessment into their undergraduate classes. Currently, a spectrum of instructional practices can be found at many institutions, ranging from purely traditional lecture to wholly student centered (Smith et al., 2014; Wieman and Gilbert, 2014).

Active-learning pedagogies are intended to move classrooms toward more student-centered learning by engaging students in knowledge construction. This approach contrasts with traditional lecture, which focuses on disseminating instructor knowledge and relies on passive student listening. A variety of active-learning pedagogies have been described in recent publications, ranging from quick, easily implemented strategies such as think–pair–share and minute papers to more complex strategies such as problem-based learning in organized groups (e.g., Allen and Tanner, 2005; Handelsman et al., 2007; Ebert-May and Hodder, 2008; AAAS, 2011; Miller and Tanner, 2015). There is substantial evidence that active-learning pedagogies are much more effective than lecture. According to a meta-analysis by Freeman et al. (2014), classes that incorporate active-learning strategies show significantly greater gains in student performance and significantly lower failure rates than classes relying on traditional lecture. The effect of active-learning pedagogies was so pronounced that Freeman and his colleagues suggested moving on to second-generation studies that compare active-learning techniques to determine which practices are most effective, how best to implement them, and how much active learning is needed to produce positive results. Furthermore, many of these pedagogies rely on interactions between students, and there is mounting evidence that structuring classes so that students work in small cooperative or collaborative groups increases student achievement. In a meta-analysis, Springer et al. (1999) found that students who work in small groups have higher learning gains and better attitudes toward learning science, and that these results applied to all types of students.

The importance of assessing students during instruction to provide feedback to both students and instructor about students’ conceptual understanding is also gaining attention. Higher education faculty often use summative assessments (e.g., exams, major projects) that assess student understanding at the end of instruction, when the class is moving on to the next topic (Wood, 2009). This type of assessment generally does not allow instructors to respond to gaps in student knowledge. However, the use of formative assessments that are administered on a regular basis during instruction to provide timely feedback about what students know and understand is a critical component of student-centered instruction (Handelsman et al., 2007; Wood, 2009; AAAS, 2011). There are many ways to formatively assess students (e.g., Angelo and Cross, 1993; Huba and Freed, 2000), but one common component is that the assessment allows an instructor to modify instruction in response to students’ misunderstandings or confusion. Thus, not only does formative assessment provide information about what students know, but it also promotes learning. In their seminal paper, Black and Wiliam (1998) concluded that regular formative assessment increases student learning gains and is perhaps the most important pedagogical intervention that can occur in a classroom. In his review of innovative practices in undergraduate biology education, Wood (2009) includes formative assessment as one of the most promising practices that leads to increased learning gains in biology classes.

In biology classrooms, the contexts in which student-centered strategies are implemented extend from moderate changes to lecture-based courses to wholesale changes in course sequences. For example, Knight and Wood (2005) found that adding cooperative problem solving and frequent in-class assessment to an upper-level majors’ lecture course yielded higher learning gains compared with student achievement in a class with only traditional lecture. In a large-lecture format, Freeman et al. (2007) found that a highly structured learning environment in which students worked in groups to answer exam-type questions led to increased achievement, especially for students who traditionally struggle with biology. Ebert-May et al. (1997) found that students working in cooperative groups to engage in material presented via a learning cycle had increased self-efficacy and process skills compared with students in a traditional lecture course. Finally, Udovic et al. (2002) restructured a three-course sequence of nonmajors courses into a workshop format in which each lesson was driven by a focus on conceptual understanding, scientific inquiry, and science in context. They found that students in the workshop sections had deeper conceptual understanding compared with students in more traditional courses.

However, there are challenges to implementing active-learning pedagogies and embedding formative assessment into higher education classrooms. Undergraduate science, technology, engineering, and mathematics (STEM) courses vary widely, from large-lecture general education courses to small, lab-based courses for targeted audiences, and it is difficult for instructors to know which pedagogies suit which contexts. Variables such as class size and arrangement, as well as student motivation and engagement, potentially influence a strategy’s outcomes. Implementing new pedagogies can also be daunting for faculty, especially those trained in the sciences without exposure to active-learning strategies or formative assessment. Even when these strategies are used, maximizing student gains requires a student-centered pedagogical approach that few higher education faculty have had the opportunity to learn and practice. Sunal et al. (2001) and Wright and Sunal (2004) describe this lack of pedagogical knowledge on the part of science discipline faculty as a type of “instructional barrier.”

If faculty are going to spend resources and time to transform their classes, it is important to know how much active learning needs to be incorporated into a class to increase learning gains. Some studies have shown that even moderate changes can lead to improved results. Knight and Wood (2005) found that partially changing a lecture-based class to a more student-centered context led to increased learning gains, even though lecture still accounted for 60–70% of class time. More recently, Eddy and Hogan (2014) found that a moderate course structure, which included preclass guided-reading questions and some in-class activities completed in informal groups, yielded higher exam scores compared with low course structure, especially for African-American students and first-generation students. If moderate changes can lead to increased learning, is it worthwhile to invest additional time to create and teach a highly student-centered course? Is using many active-learning strategies more effective than using fewer active-learning strategies, or is there a limit to the number of strategies that are effective?

To begin to address these questions, we compared learning gains and attitudes toward learning biology in two sections of Biology 101. The two sections were taught during the same quarter, by the same instructor (G.L.C.), and the same content was covered in each. However, they were taught in different ways. In the Extensive section, the class was structured to support many group-based, active-learning strategies with consistent formative assessment. In the Moderate section, the class incorporated some active-learning strategies but was more lecture-based and had less formative assessment.

We asked two research questions:

Do students gain more content knowledge in a class that uses an Extensive amount and variety of active-learning pedagogies coupled with formative assessment compared with a class that uses a Moderate amount and variety of active-learning pedagogies and less formative assessment?

Do students exhibit increased sophistication in their views about biology in a class that uses an Extensive amount and variety of active-learning pedagogies coupled with formative assessment compared with a class that uses a Moderate amount and variety of active-learning pedagogies and less formative assessment?

METHODS

Extensive and Moderate Course Structures

At Western Washington University, Introduction to Biology (Biol 101) is taught as a large-enrollment course for nonmajors. Until recently, it was taught using traditional lecture and historically had one of the highest student failure rates of introductory courses in our college. However, while the size of the class has not changed, the pedagogy has changed substantially over the past 7 yr. This transformation happened in two major phases.

In 2006–2010, we began teaching the course using the Moderate course structure. We implemented an interactive lecture approach using active-learning pedagogies such as reflective pauses (e.g., Rowe, 1976; Ruhl et al., 1987), real-time writing, and think–pair–share (e.g., Lyman, 1981). These pedagogies allowed us to slow down curriculum delivery, increase monitoring of student understanding, and address confusions that were brought to our attention. Students were also given reading guides to focus their attention on the important concepts in the reading (the reading guides were not graded), which helped them better prepare for lecture. Thus, we incorporated some easily implemented active-learning strategies into the course, along with some formative assessment. However, even with these changes to the course structure, we did not think students were mastering the material to the extent that they could, although we had little direct evidence beyond exam scores, and exams were not the same from year to year.

We began using the Extensive course structure in 2010. We “flipped” the course, which included adding more active-learning pedagogies and changing the overall course structure to include permanent working groups with assigned seating, activity-based classes, and online prelectures to support those strategies. We drew upon team-based learning (Michaelson et al., 2003) and collaborative learning (Bosworth and Hamilton, 1994) pedagogies to inform the redesign. We also embedded more formative assessment to monitor student understanding.

With the Extensive course structure, Biol 101 students spent 2–4 h out of class per module topic (1–2 wk) learning basic content through online lectures, reading assignments, reading and watching guides, reporting areas of confusion to a discussion board, and writing a content summary. Class time was used to quiz students on this preparation, first individually and then as a group using Immediate Feedback Assessment Technique scratch cards (Epstein Educational Enterprises, www.epsteineducation.com/home/about). Following assessment, we provided just-in-time lectures (e.g., Novak et al., 1999) covering the most difficult concepts, as identified from students’ discussion board posts. Students then worked in instructor-created groups of four to six students to complete carefully designed worksheet activities, timed for when students were ready to move to a higher level of content understanding. Worksheet activities took ∼30 min to complete, depending on the activity, and there were two to three activities per course module (Table 1). Once groups finished the in-class worksheet activities, students revised their initial content summaries to include all of the new and corrected information they had constructed. This process occurred for each of the six modules in the course (Table 1). Finally, students were tested individually on their understanding of the concepts on three course exams.

| Module | Activities |
|---|---|
| Cell membranes | 1. Properties of water: Students demonstrate their understanding of polarity and apply it to the classification of organic polymers. |
| | 2. Osmosis: Questions address preconceptions about equilibrium. Students then apply their knowledge to a case study of hyponatremia. |
| Energy transfers | 1. Photosynthesis: Students diagram the reactants and products of photosynthesis. Questions ask students to connect this topic to the big picture and to membrane transport and properties of water. |
| | 2. Cellular respiration: Students diagram the reactants and products of respiration. Questions address misconceptions about oxygen and ask students to connect this topic to the big picture and to membrane transport. |
| | 3. Nutrition: Students explain the process of atherosclerosis leading to heart attack and explain how diet influences the variables involved in atherosclerosis. |
| Cell growth and division | 1. Mitosis and meiosis: Questions address preconceptions about independent assortment and allow students to diagram the differences between mitosis and meiosis using the cell cycle as a framework. |
| | 2. Cancer: Students apply their knowledge of the cell cycle to cancer. |
| Genetics | 1. Genetics: Students work through genetics problem sets and do a blood-typing simulation online. |
| | 2. Protein synthesis: Students learn about cystic fibrosis and apply what they learned about proteins in module 1. |
| Evolution | 1. Evolution: Students learn about Central European Blackcap subpopulations and answer questions to determine whether the two populations have become different species. |
| | 2. Human evolution: Students explore the protein lactase and how the lactase gene is distributed throughout the world. Questions encourage students to make connections between genetics and evolution. |
| | 3. Artificial selection: Groups choose an agricultural method to research and share with the class (jigsaw). |
| Ecology | 1. Population ecology: Questions address preconceptions about how populations behave over time. Students diagram and interpret data from the Isle Royale wolf and moose populations. |
| | 2. Ecosystem ecology: Questions lead students to explain the process of eutrophication and climate change in terms of nutrient pools (sinks and sources) on Earth. |

Because students spent much of their class time engaged in group work, the groups were intentionally designed by the instructor before the start of the quarter, and students remained with their groups for the duration of the class (10 wk). Students were assigned to groups to maximize diversity of science background (determined by major or declared interest in a major), year in school, and gender, as some researchers advise (Slavin, 1990; Flannery, 1994; Gerlach, 1994; Colbeck et al., 2000). We attempted to minimize racial diversity within groups by not isolating single minority students, as advised by Rosser (1998).

The worksheet activities were developed and revised to address what we know about how people learn (NRC, 2000). They followed the basic tenets of constructivism, in which students are presumed to construct understanding based on their prior understanding of a concept and to incorporate new ideas into an existing conceptual framework, rather than passively assimilate knowledge as it is passed to them (Piaget, 1978; Vygotsky, 1978). To develop and refine the activities, we relied on our experience writing a reformed biology curriculum called Life Science and Everyday Thinking (LSET; Donovan et al., 2014), and we used some activities modified from LSET. LSET is a semester-long curriculum targeted to preservice elementary teachers and modeled after the established physics curriculum Physics and Everyday Thinking (Goldberg et al., 2005). Each chapter begins with a formative assessment to expose student prior ideas about the main concepts of the chapter. The rest of the chapter is a series of activities (laboratory activities, thought experiments, and exercises using paper and computer models) carefully designed to address common ideas and to guide students to construct knowledge in a sequential manner. Finally, students explicitly reconsider ideas they held before the activity and document any changes in their thinking. A full description of LSET and different aspects of its development can be found in two articles by Donovan and colleagues (2013, 2015).

The LSET activities that could be successfully modified for Biol 101 were those that did not require long-term development (e.g., multiple activities building up to cellular respiration in plants) or extensive lab equipment (e.g., measuring fats, carbohydrates, and proteins in different foods). Activities that relied on interpreting data from outside sources and drawing conclusions based on real-life scenarios were most easily adapted for our purposes. For example, the activity that presented data on the distribution of lactose intolerance in humans as a means to understand human evolution could be used in Biol 101 with very little modification (see the activity worksheet in the Supplemental Material). Also, not all of the Biol 101 activities were from modified LSET activities; we developed several for topics that we had traditionally covered in previous course offerings but that were not part of LSET (e.g., moose and wolves on Isle Royale as examples of population and community interactions). Altogether, the activities currently used in Biol 101 represent a set of coherent, student-centered learning experiences that attend to student preconceptions and facilitate construction of understanding.

After using the Extensive course structure for 4 yr, we were curious whether it supported student learning more effectively than the Moderate course structure we had initially used. Was the work worth it? We also wondered whether the Extensive structure changed students’ attitudes about learning biology compared with the Moderate structure. We designed a quasi-experimental study to compare the two course structures.

Study Design

Two sections of Biology 101 were offered in winter 2014 at our regional comprehensive university. One section, scheduled from 8:00 to 9:20 am, was taught using the Moderate course structure and the other, scheduled from 12:00 to 1:20 pm, was taught using the Extensive course structure. Both sections met twice per week on the same days over a 10-wk quarter. The same experienced instructor (G.L.C.) taught both sections, and the same content was covered in each. Both sections were taught in large lecture halls with fixed seating, although the two rooms were located in different buildings on campus. Students from both sections matriculated into the same laboratory sections, which were taught by the same graduate teaching assistants. Students in the Moderate section were required to buy a textbook, while a textbook was only recommended for students in the Extensive section (students in the Extensive section had access to instructor-produced videos that they watched before coming to class). Ninety-six textbooks were purchased through our bookstore by students in the Moderate section (enrollment of 172), and none were purchased by students in the Extensive section (enrollment of 182). We did not collect information on how many books were purchased through nonuniversity merchants.

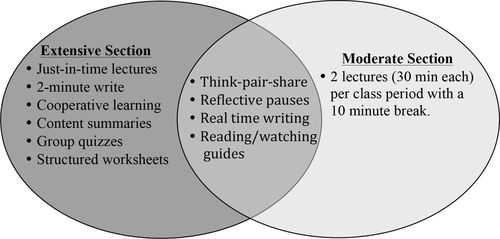

The Extensive section was taught in the highly structured manner described in detail earlier. The Moderate section was taught using the Moderate course structure described earlier, with the addition of didactic explanations of the worksheet activities used in the Extensive section to ensure that the same content was covered in both sections. See Figure 1 for a comparison of the pedagogies used in the two sections.

Figure 1. Comparison of the different pedagogies used in the Extensive section and the Moderate section of Biology 101 during Winter 2014.

Class Observations

To document what both the instructor and the students were doing in each section, we observed the classes using the Classroom Observation Protocol for Undergraduate STEM (COPUS; Smith et al., 2013). Using the COPUS, observers record what is happening in a class in 2-min intervals using predetermined categories of behaviors developed to reflect common behaviors in STEM classes. For our study, two observers completed the COPUS training together, which included reviewing the protocol, watching and scoring online segments of classes together, and comparing and discussing scores (the training procedure can be found at www.cwsei.ubc.ca/resources/COPUS.htm). The observers then attended both the Moderate and Extensive sections together to determine interrater reliability, which was calculated using Cohen’s kappa (0.91 for the Moderate section and 0.85 for the Extensive section). For subsequent observations by a single observer, both sections of the class were observed by the same person on the same day. Observations were scheduled to capture the range of activities in both sections, and five observations were completed for each section.
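Cohen’s kappa corrects raw percent agreement for the agreement expected by chance, $\kappa = (p_o - p_e)/(1 - p_e)$. The sketch below illustrates the statistic only; it is not the procedure used in this study (which used SPSS). It assumes each observer’s COPUS record has been flattened into one present/absent decision per (2-min interval, code) pair, a pooling choice the text does not specify, and the rater data are hypothetical.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater1: Sequence[Hashable], rater2: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters who labeled the same items."""
    if len(rater1) != len(rater2) or not rater1:
        raise ValueError("raters must label the same, non-empty set of items")
    n = len(rater1)
    # Observed agreement: share of items given the same label by both raters.
    p_o = sum(x == y for x, y in zip(rater1, rater2)) / n
    # Chance agreement: product of the two raters' marginal label proportions.
    c1, c2 = Counter(rater1), Counter(rater2)
    p_e = sum(c1[label] * c2[label] for label in c1.keys() | c2.keys()) / (n * n)
    return (p_o - p_e) / (1 - p_e)  # undefined if p_e == 1 (both raters constant)


# Hypothetical decisions: 1 = code marked in that interval, 0 = not marked.
observer_a = [1, 1, 1, 0, 0, 0, 1, 0, 1, 1]
observer_b = [1, 1, 0, 0, 0, 0, 1, 0, 1, 1]
print(round(cohens_kappa(observer_a, observer_b), 2))  # 0.8
```

Here the observers agree on 9 of 10 decisions ($p_o = 0.9$), but half of that agreement is expected by chance ($p_e = 0.5$), so kappa is 0.8. The same statistic is available as `sklearn.metrics.cohen_kappa_score`.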

To determine the occurrence of behaviors present in each class during our observations, we calculated the percent of 2-min time periods in which each behavior occurred, following the recommendations of Lund et al. (2015). For example, if lecturing occurred during 35 of the 80 2-min time periods, we recorded 44% of the 2-min time periods as having lecture. This analysis is different from the calculations normally performed for COPUS data (in which the number of times a behavior is coded for is expressed as a percentage of the total number of codes for all behaviors during the class; Smith et al., 2013) but allowed us to compare student and instructor behaviors in terms of the time available in the class rather than in terms of total number of behavior codes recorded during a class observation.
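The difference between the two summaries can be made concrete. In this minimal sketch, each 2-min interval is represented as the set of COPUS codes marked in it. The observation is hypothetical: 80 intervals with lecturing (`Lec`) in 35 of them, chosen only to reproduce the arithmetic of the example above, plus a student-listening code (`L`) in every interval.

```python
from typing import Dict, List, Set


def percent_of_intervals(intervals: List[Set[str]]) -> Dict[str, float]:
    """Percent of 2-min intervals in which each code was marked at least once
    (the time-based summary used in this study)."""
    n = len(intervals)
    codes = set().union(*intervals)
    return {code: 100 * sum(code in iv for iv in intervals) / n for code in sorted(codes)}


def percent_of_codes(intervals: List[Set[str]]) -> Dict[str, float]:
    """Conventional summary as described above: each code's share of all codes recorded."""
    counts: Dict[str, int] = {}
    for iv in intervals:
        for code in iv:
            counts[code] = counts.get(code, 0) + 1
    total = sum(counts.values())
    return {code: 100 * c / total for code, c in sorted(counts.items())}


# Hypothetical observation: lecturing (Lec) in 35 of 80 intervals,
# group work (WG) in the other 45, students listening (L) in every interval.
observation = [{"Lec", "L"}] * 35 + [{"WG", "L"}] * 45
print(percent_of_intervals(observation)["Lec"])  # 43.75
print(percent_of_codes(observation)["Lec"])      # 21.875
```

The same observation yields about 44% by the time-based measure but only about 22% as a share of all codes, because the codes recorded alongside lecturing dilute its share in the conventional calculation.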

Assessment of Content Knowledge

Gains in content knowledge were assessed in two ways. First, students in both sections took three multiple-choice exams during the quarter. The exams were identical for the two sections, and the third exam was not cumulative. We calculated each student’s mean score across the three exams and compared these mean scores between sections using a Student’s t test. We also calculated the odds of passing an exam (i.e., scoring at least 60%) in each section by dividing the average number of students who passed the three exams by the average number of students who failed them, and expressed the difference between the Extensive and Moderate sections as the ratio of these odds (the odds ratio).
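Both exam comparisons reduce to short calculations. The sketch below computes a pooled-variance (Student’s) two-sample t statistic and an odds ratio built from per-exam pass and fail counts, as described above. All scores and counts are hypothetical, and the t statistic is shown without a p-value to keep the example dependency-free.

```python
import math
import statistics
from typing import Sequence, Tuple


def students_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Two-sample Student's t statistic (pooled, equal-variance) and its df."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * statistics.variance(a)
                  + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    se = math.sqrt(pooled_var * (1 / na + 1 / nb))
    return (statistics.mean(a) - statistics.mean(b)) / se, na + nb - 2


def odds_of_passing(passed_per_exam: Sequence[int], failed_per_exam: Sequence[int]) -> float:
    """Odds of passing: mean number passing / mean number failing across exams."""
    return statistics.mean(passed_per_exam) / statistics.mean(failed_per_exam)


# Hypothetical per-student mean exam scores (%), far smaller than the real sections.
extensive_means = [82, 84, 86, 88, 90]
moderate_means = [80, 82, 84, 86, 88]
t, df = students_t(extensive_means, moderate_means)
print(round(t, 3), df)  # 1.0 8

# Hypothetical numbers passing and failing each of the three exams.
odds_ext = odds_of_passing([150, 160, 155], [30, 20, 25])  # 155 / 25
odds_mod = odds_of_passing([130, 135, 140], [40, 35, 30])  # 135 / 35
odds_ratio = odds_ext / odds_mod
print(round(odds_ratio, 2))  # 1.61
```

An odds ratio above 1 means higher odds of passing in the Extensive section. With real data, `scipy.stats.ttest_ind(a, b)` (whose default `equal_var=True` gives Student’s test) also returns the p-value.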

Students were also given a comprehensive content assessment, which was administered as a preassessment on the first day of class and as a postassessment on the penultimate day of class. Because no research-validated concept inventory currently exists that is suitable for a broad biology course, we gathered 28 items from published concept inventories (Klymkowsky and Garvin-Doxas, 2008; D’Avanzo et al., 2010; Nadelson and Southerland, 2010; Fischer et al., 2011) and wrote 12 more questions ourselves so that the assessment covered the full range of topics in our class (Supplemental Material). The content assessments were administered in class using Scantron. To account for potential differences in student demographics between the two sections, we used multiple linear regression to compare the postassessment scores for the students in the different sections, following the suggestions of Theobald and Freeman (2014). We used the following model:

To obtain a more detailed understanding of student performance on the pre- and postassessments, we also conducted an item response theory (IRT) analysis of the data. In IRT, unlike in classical test theory, it is possible to estimate both the properties of items on an assessment and the latent traits (often called “abilities” for knowledge-based tests) of people taking the assessment from a single data set. This analysis can be done because IRT provides mathematical models that let one calculate the probability of a student producing a correct answer to an item as a function of that item’s properties and the ability of the student. The probability of answering correctly as a function of ability is given by a logistic curve, with the exact equation for the curve depending on which parameters are used in the model. Typical item properties include difficulty (a measure of the ability required to have a given probability of answering the item correctly), discrimination (a measure of the power of the item to distinguish between low- and high-ability students), and guessing (the probability of a very-low-ability student answering the item correctly). Models can be unidimensional, meaning that a single ability score is measured for each individual, or multidimensional, meaning that multiple abilities are measured for each individual. And finally, models can be dichotomous (all answers are either right or wrong) or polytomous (items are scored on a point scale; Lord and Novick, 1968). For the majority of the present analysis, we used a unidimensional, dichotomous, three-parameter model, but for some items we found a unidimensional, polytomous, two-parameter model was more appropriate.
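The three-parameter logistic (3PL) curve described above can be written as $P(\theta) = c + (1 - c)/(1 + e^{-a(\theta - b)})$, with discrimination $a$, difficulty $b$, and guessing $c$. The sketch below evaluates it together with one common two-parameter polytomous model, Samejima’s graded response model. The text does not name the polytomous model used, so that choice is an assumption, as is omitting the 1.7 scaling constant that some software applies. Parameter values are hypothetical.

```python
import math
from typing import List, Sequence


def p_3pl(theta: float, a: float, b: float, c: float) -> float:
    """3PL item response function: probability of a correct answer at ability theta.
    a = discrimination, b = difficulty, c = guessing (lower asymptote)."""
    return c + (1 - c) / (1 + math.exp(-a * (theta - b)))


def p_graded(theta: float, a: float, thresholds: Sequence[float]) -> List[float]:
    """Graded response model (assumed): probabilities of scores 0..m for one item
    with discrimination a and increasing thresholds b_1 < ... < b_m."""
    # cumulative[k] = P(score >= k); P(score >= 0) = 1 and P(score >= m + 1) = 0.
    cumulative = [1.0] + [1 / (1 + math.exp(-a * (theta - bk))) for bk in thresholds] + [0.0]
    return [cumulative[k] - cumulative[k + 1] for k in range(len(thresholds) + 1)]


# At theta == b, a 3PL item is answered correctly with probability c + (1 - c) / 2.
print(round(p_3pl(0.5, a=1.2, b=0.5, c=0.2), 3))  # 0.6
# A very-low-ability student is correct with probability close to c.
print(round(p_3pl(-6.0, a=1.2, b=0.5, c=0.2), 3))  # 0.2
# A combined 0-2 item: probabilities of 0, 1, or 2 correct at theta = 0.
print([round(p, 3) for p in p_graded(0.0, a=1.0, thresholds=[-1.0, 1.0])])  # [0.269, 0.462, 0.269]
```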

While IRT is more powerful than classical test theory, certain requirements must be met for it to be used appropriately. Because a probabilistic estimation procedure is used to find both person and item parameters, a large sample size is necessary to generate results with acceptable levels of statistical error. The large number of students who took the assessment (n = 316 altogether from both sections) allowed us to meet this requirement. The procedure also assumes that the distribution of abilities in the assessed population is approximately normal, so it can be unreliable if the true distribution is far from normal. Most importantly, the items must be “locally independent” of each other, a requirement that classical test theory does not impose. This means that correlations between student responses on different items must be sufficiently explained by the student abilities and the item parameters alone and not by any other factors (e.g., demographic variables or the topics of the items). Because IRT analyses yield information that cannot be obtained through classical analyses, they are increasingly used in discipline-based education research. We refer the reader to Wallace and Bailey (2010) and Wang and Bao (2010) for further information about IRT.

We attempted several analyses of the data to determine whether our content assessment was well suited to a unidimensional IRT analysis. Five of the 40 items on the assessment did not satisfy the local independence criterion and were excluded from the final IRT analyses (yet were retained for the classical analyses, which do not have this criterion). Other items were internally dependent within pairs, but independent of all other items, so for the analysis, we treated each pair as a single combined item scored on a 0–2 scale (0 being neither item correct, 1 being a single item correct, and 2 being both items correct). In the end, 29 items from the original assessment were left unchanged, and six other items were combined into three pairs. See the Supplemental Material for a list of these items. For the unchanged items, the unidimensional three-parameter logistic model was used for analysis, and for the combined items, the unidimensional polytomous model was used for analysis.
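The recoding described above (drop the locally dependent items, collapse each dependent pair into one item scored 0–2) is simple bookkeeping. A minimal sketch for one student’s responses; the function and the item labels are hypothetical.

```python
from typing import Dict, Iterable, Tuple


def recode_for_irt(responses: Dict[str, int],
                   pairs: Iterable[Tuple[str, str]],
                   excluded: Iterable[str] = ()) -> Dict[str, int]:
    """Drop excluded items and replace each dependent pair with one item scored 0-2."""
    excluded = set(excluded)
    pairs = list(pairs)
    paired = {item for pair in pairs for item in pair}
    recoded = {item: score for item, score in responses.items()
               if item not in excluded and item not in paired}
    for first, second in pairs:
        # 0 = neither correct, 1 = exactly one correct, 2 = both correct
        recoded[f"{first}+{second}"] = responses[first] + responses[second]
    return recoded


student = {"Q1": 1, "Q2": 0, "Q3": 1, "Q4": 1, "Q5": 0}
print(recode_for_irt(student, pairs=[("Q3", "Q4")], excluded=["Q5"]))
# {'Q1': 1, 'Q2': 0, 'Q3+Q4': 2}
```

Applied to the counts in the text, 40 items minus 5 excluded and 6 paired items leaves 29 unchanged items plus 3 combined items, so 32 scored items enter the IRT model.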

Once we had chosen appropriate IRT models for the analysis, we used the commercial software package IRTPRO to execute the calculations. Because both the item parameters and the student abilities were unknown, we used the expected a posteriori method to estimate both sets of values from our data set. We first used the postassessment data to estimate the item parameters and then used these parameter values to estimate students’ pre- and postinstruction abilities.
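With the item parameters held fixed, an expected a posteriori (EAP) ability estimate is the mean of the posterior distribution of θ, which can be approximated on a grid of quadrature points. The sketch below assumes dichotomous 3PL items only, a standard normal prior, and an evenly spaced grid from −4 to 4. It illustrates the idea and is not IRTPRO’s implementation; item parameters and responses are hypothetical. As in the study, the same item parameters score both the pre- and postassessment responses.

```python
import math
from typing import Sequence, Tuple

Params = Tuple[float, float, float]  # (a, b, c) for one 3PL item


def p_3pl(theta: float, a: float, b: float, c: float) -> float:
    return c + (1 - c) / (1 + math.exp(-a * (theta - b)))


def eap_theta(responses: Sequence[int], params: Sequence[Params],
              n_points: int = 81, lo: float = -4.0, hi: float = 4.0) -> float:
    """EAP ability estimate for one student's 0/1 responses, item parameters held fixed."""
    step = (hi - lo) / (n_points - 1)
    numerator = denominator = 0.0
    for k in range(n_points):
        theta = lo + k * step
        weight = math.exp(-0.5 * theta * theta)  # unnormalized N(0, 1) prior
        for x, (a, b, c) in zip(responses, params):
            p = p_3pl(theta, a, b, c)
            weight *= p if x == 1 else 1 - p      # likelihood of this response
        numerator += theta * weight
        denominator += weight
    return numerator / denominator               # posterior mean of theta


# Hypothetical item parameters (as if estimated from postassessment data).
items = [(1.0, -1.0, 0.2), (1.3, 0.0, 0.2), (0.9, 1.0, 0.25)]
theta_pre = eap_theta([1, 0, 0], items)
theta_post = eap_theta([1, 1, 0], items)
print(theta_post > theta_pre)  # True
```

Because every grid point carries prior weight, EAP estimates stay finite even for all-correct or all-wrong response patterns, where maximum-likelihood estimates diverge.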

Because abilities can in principle range from –∞ to +∞, there is no normalized gain calculation used in IRT analysis; instead, the gain for each individual is simply $\Delta\theta = \theta_{\text{post}} - \theta_{\text{pre}}$, where $\theta$ is student ability. We calculated $\Delta\theta$ for each student to visually examine the distributions of ability gains of students in each section. We also performed a multiple linear regression using the same model as above, but substituted postinstruction ability ($\theta_{\text{post}}$) for postassessment score and preinstruction ability ($\theta_{\text{pre}}$) for preassessment score.
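Once pre- and postinstruction abilities are estimated, the gain is a per-student subtraction and the regression is ordinary least squares. The sketch below fits $\theta_{\text{post}}$ on an intercept, a section indicator (1 = Extensive, an assumed coding), and $\theta_{\text{pre}}$. The demographic covariates of the authors’ model are not reproduced here; they would enter as extra columns of `X`. The data are hypothetical and constructed to follow an exact linear rule so the fitted coefficients can be checked.

```python
from typing import List, Sequence


def ols(X: Sequence[Sequence[float]], y: Sequence[float]) -> List[float]:
    """Least-squares coefficients from the normal equations (X'X) beta = X'y,
    solved by Gauss-Jordan elimination with partial pivoting."""
    k = len(X[0])
    # Augmented matrix [X'X | X'y]
    A = [[sum(row[i] * row[j] for row in X) for j in range(k)]
         + [sum(row[i] * yi for row, yi in zip(X, y))] for i in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [ar - factor * ac for ar, ac in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


# Hypothetical students: (section, theta_pre), with section 1 = Extensive.
students = [(0, -1.0), (0, 0.0), (0, 1.0), (1, -1.0), (1, 0.5), (1, 1.0)]
theta_pre = [pre for _, pre in students]
theta_post = [0.1 + 0.3 * s + 0.6 * pre for s, pre in students]  # exact rule, no noise

# Delta theta for each student, e.g., for plotting gain distributions by section.
gains = [post - pre for post, pre in zip(theta_post, theta_pre)]

X = [[1.0, s, pre] for s, pre in students]  # intercept, section, theta_pre
intercept, section_effect, pre_slope = ols(X, theta_post)
print([round(v, 3) for v in (intercept, section_effect, pre_slope)])  # [0.1, 0.3, 0.6]
```

This sketch returns coefficients only. For inference, R’s `lm()` (the study used RStudio) or `statsmodels.formula.api.ols` also reports standard errors and p-values for the section coefficient.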

Assessment of Attitudes about Science and Learning Science

We used the Views about Sciences Survey Form B12 (VASS—Biology; available at www.halloun.net; Halloun and Hestenes, 1996) to assess the students’ attitudes about science and learning science. The VASS classifies students into four distinct profiles: expert, high transitional, low transitional, and folk. Students with an expert profile are predominantly scientific realists and critical learners. Students with a folk profile are naïve realists and passive learners (Halloun and Hestenes, 1998). The profiles are based on the following classification system:

Expert: 19 items or more with expert views (out of the 30 total items on the VASS)

High transitional: 15–18 items with expert views

Low transitional: 11–14 items with expert views and an equal or smaller number of items with folk views

Folk: 11–14 items with expert views but a larger number of items with folk views, or 10 items or fewer with expert views
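The classification rules above translate directly into a function of two counts. A minimal sketch, assuming each student’s responses have already been scored as the number of items showing expert views and the number showing folk views (how individual items are scored is not shown).

```python
def vass_profile(n_expert: int, n_folk: int, n_items: int = 30) -> str:
    """VASS profile from the number of items answered with expert and with folk views."""
    if n_expert < 0 or n_folk < 0 or n_expert + n_folk > n_items:
        raise ValueError("counts must be non-negative and sum to at most n_items")
    if n_expert >= 19:
        return "expert"
    if n_expert >= 15:
        return "high transitional"
    if n_expert >= 11:
        # 11-14 expert views: the folk count decides between the two lower profiles.
        return "low transitional" if n_folk <= n_expert else "folk"
    return "folk"


print(vass_profile(12, 12))  # low transitional
print(vass_profile(12, 13))  # folk
```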

The VASS was administered online through the course management system used at our university. Students took the VASS during the first few days of the class and again during the last few days. To compare students in the two sections at the beginning of the class, we compared their initial VASS profiles using a Student’s t test, following the recommendations of Lovelace and Brickman (2013). At the end of the class, we calculated an “expert shift” (i.e., movement toward the expert view from pretest to posttest) for each student. A shift of one profile group in the direction of the expert view (e.g., from folk to low transitional) was scored as 1, a shift across two profile groups (e.g., from folk to high transitional) as 2, and so forth; shifts away from the expert view received correspondingly negative values. Mean expert shifts for each section were also compared using a Student’s t test.
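The expert-shift scoring and the pooled-variance Student's t test can be sketched as below. Ranking the profiles as integers makes the shift a simple difference; the negative values for movement away from the expert view follow from the mean shift of −0.02 reported for the Moderate section. The function names are hypothetical, and the authors' analysis was run in R, not with this code.

```python
import math
import statistics

# Profiles ordered from least to most expert-like
RANK = {"folk": 0, "low transitional": 1, "high transitional": 2, "expert": 3}

def expert_shift(pre_profile, post_profile):
    """Profile groups moved toward the expert view (negative = away from it)."""
    return RANK[post_profile] - RANK[pre_profile]

def student_t_from_summary(m1, s1, n1, m2, s2, n2):
    """Two-sample Student's t (equal variances assumed) from means, SDs, and ns."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df

def student_t(x, y):
    """Same test computed from raw per-student scores."""
    return student_t_from_summary(statistics.mean(x), statistics.stdev(x), len(x),
                                  statistics.mean(y), statistics.stdev(y), len(y))
```

As a rough check, `student_t_from_summary(0.40, 1.10, 128, -0.02, 0.88, 115)` gives t ≈ 3.26 with 241 df, close to the reported t = 3.23. This assumes the VASS subset sizes in Table 3 (128 and 115) were used; the small discrepancy is plausibly due to rounding of the reported means and SDs.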

When the students in the Extensive section took the VASS at the end of the quarter, they were asked two additional questions through the course management system. These questions were not analyzed with the VASS data but instead provided information about the students’ attitudes concerning the different pedagogies and the workload associated with the Extensive section.

We used RStudio (version 0.98.1049) for all statistical analyses, except the IRT analyses as described above and the calculation of Cohen’s kappa for interrater reliability, for which we used SPSS (IBM, version 22). Our study was completed with approval from our university’s human subjects review committee (IRB FWA00001207).

RESULTS

Student Demographics and Participation

The two sections were similar in student demographics and participation. The majority of students in both sections were sophomores, although the proportion of freshmen in the Moderate section was twice that in the Extensive section (16 and 8%, respectively; Table 2). Women accounted for ∼60% of the students in both sections, which reflects the gender balance on our campus. Students in both sections had taken an average of one year of high school biology; more than 90% of students in both sections had taken either one or two years of high school biology, with the second year being Advanced Placement biology. In addition, the number of previous college science classes was similar in both sections, with most students having taken just one other science course.

| Student demographic | Extensive section | Moderate section |
|---|---|---|
| % Freshmen | 8 | 16 |
| % Sophomores | 68 | 61 |
| % Juniors | 18 | 16 |
| % Seniors | 6 | 6 |
| % Women | 59 | 60 |
| Number of years of high school biology (mean ± SD) | 1.0 ± 0.3 | 1.0 ± 0.4 |
| Number of science courses taken in college (mean ± SD) | 1.2 ± 1.1 | 1.0 ± 1.1 |

The percentage of students consenting to participate in the study was very high (∼95% in both sections; Table 3), but not all consenting students completed every component of the study. For each component, we analyzed data only from the subset of students who completed all parts of that component (e.g., all three course exams, or both the pre- and post-VASS). The number of students (n) therefore differed among components, because the subsets overlapped without being identical (e.g., the students who took all three course exams were not exactly the same students who completed both the pre- and post-VASS). To determine whether these subsets differed academically within a section, we calculated the mean final grade (on a grade-point scale) for each subset (Table 3) and compared the subsets using analysis of variance. Mean final grades did not differ among subsets in either the Moderate section (F = 0.650, p = 0.52) or the Extensive section (F = 0.537, p = 0.58).
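The comparison of mean final grades among subsets is a one-way analysis of variance. A minimal sketch of the F statistic is shown below; note that, like a standard one-way ANOVA, it treats each subset as an independent group. The group values in the usage example are made up for illustration.

```python
import statistics

def one_way_anova_f(groups):
    """F statistic and (between, within) degrees of freedom for a one-way ANOVA."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n
    # Variation of group means around the grand mean
    ss_between = sum(len(g) * (statistics.mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual values around their own group mean
    ss_within = sum(sum((x - statistics.mean(g)) ** 2 for x in g) for g in groups)
    f = (ss_between / (k - 1)) / (ss_within / (n - k))
    return f, (k - 1, n - k)

# Hypothetical grade points for three subsets
print(one_way_anova_f([[1, 2, 3], [2, 3, 4], [3, 4, 5]]))
```

An F near 1 (as with the reported 0.650 and 0.537) indicates that differences among subset means are no larger than expected from within-subset variation.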

| Subset of students who consented to participate and… | Extensive section: number of students (% of total enrollment) | Extensive section: mean final grade ± SD | Moderate section: number of students (% of total enrollment) | Moderate section: mean final grade ± SD |
|---|---|---|---|---|
| Completed all three course exams | 174 (96) | 2.9 ± 0.9 | 163 (95) | 2.8 ± 1.0 |
| Completed the pre- and postinstruction content assessments | 158 (87) | 2.9 ± 0.8 | 142 (84) | 2.9 ± 1.0 |
| Completed the pre- and post-VASS surveys | 128 (71) | 3.0 ± 0.8 | 115 (67) | 3.0 ± 0.9 |

Classroom Observations

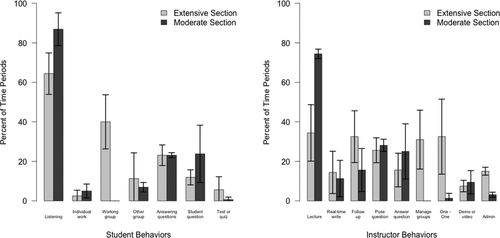

There were differences in what the students did in class in each section. The main difference was that students in the Extensive section worked in their assigned work groups on class activities, while students in the Moderate section did not have groups (Figure 2). Students in the Moderate section listened to the instructor and/or classmates during more of the 2-min time periods, compared with students in the Extensive section. However, students in both sections spent a substantial amount of time answering questions posed by the instructor and asking their own questions during whole-class time (time not working in groups for the Extensive section).

Figure 2. Percent of 2-min time periods spent at different activities by the students and the instructor in the Extensive and Moderate sections. The COPUS was used for classroom observations. The bars represent the means ± SD of five observations.

The instructor lectured during twice as many of the 2-min time periods in the Moderate section compared with the Extensive section (Figure 2). In the Extensive section, the instructor managed group work and worked one-on-one with students, which did not occur in the Moderate section. However, the instructor spent time posing and answering questions, using real-time writing during her lecture, and following up on student in-class work in both sections.

Assessments of Content Knowledge

Students in the Extensive section had significantly higher mean exam scores (grand mean of 72.0%) than students in the Moderate section (grand mean of 63.6%; t = 6.57, p < 0.001). In addition, the odds of passing an exam were 2.78 times higher for students in the Extensive section than for students in the Moderate section, who served as the reference group (odds ratio of 1.00) and were equally likely to pass or fail exams.
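To make the odds ratio concrete: if students in the Moderate section were equally likely to pass or fail (pass probability 0.5, odds 1.00), an odds ratio of 2.78 corresponds to a pass probability of 2.78/3.78 ≈ 0.74 in the Extensive section. The sketch below shows this arithmetic; it assumes a single pass probability per section, whereas the text does not specify the model used to estimate the odds ratio.

```python
def odds(p):
    """Odds corresponding to a probability p (0 < p < 1)."""
    return p / (1.0 - p)

def prob_from_odds(o):
    """Probability corresponding to odds o."""
    return o / (1.0 + o)

def odds_ratio(p_group, p_reference):
    """Odds of the event in one group relative to a reference group."""
    return odds(p_group) / odds(p_reference)

p_moderate = 0.5                                  # equally likely to pass or fail
p_extensive = prob_from_odds(2.78 * odds(p_moderate))
print(round(p_extensive, 3))
```

Note that an odds ratio of 2.78 does not mean Extensive students were 2.78 times as likely to pass; in probability terms the difference here is roughly 0.74 versus 0.50.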

Students in the Extensive and Moderate sections performed equally on the content preassessment, correctly answering 44.3 ± 10.7% and 42.9 ± 10.4% of the questions, respectively (results are represented by mean ± SD, unless otherwise noted). Mean postassessment scores were 58.5 ± 12.1% of the questions correct for students in the Extensive section compared with 54.6 ± 12.2% for students in the Moderate section. The multiple regression model explained significantly more variance in postassessment score than an intercept-only model (F = 29.83, p < 0.001). Using the multiple regression model, we found that students enrolled in the Extensive section had significantly higher postassessment scores than students in the Moderate section (Table 4). The regression coefficient of 2.51 for section indicates that students in the Extensive section scored ∼2.5 percentage points higher on the postassessment than students in the Moderate section, all other factors being held equal. Preassessment score and the number of other science classes taken at the university also significantly affected postassessment score, whereas the number of high school biology classes taken by a student and the number of years the student had been in university did not.
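The model in Table 4 uses reference-level (dummy) coding for the categorical predictors: one indicator column per non-reference level, so each coefficient is a difference from the reference group (Moderate section, no high school biology, freshman). A minimal ordinary least squares sketch with NumPy is shown below. It is illustrative only; the authors fitted their model in R, and the variable encodings (e.g., year coded 1 to 4) are assumptions.

```python
import numpy as np

def design_matrix(pre, extensive, hs_bio, n_sci, year):
    """Intercept plus predictors, with reference-level dummies.

    pre: preassessment score (%); extensive: 1 = Extensive, 0 = Moderate
    hs_bio: years of high school biology, 0/1/2 (reference: 0)
    n_sci: number of other science courses; year: 1-4 (reference: 1, freshman)
    """
    pre = np.asarray(pre, dtype=float)
    hs_bio = np.asarray(hs_bio)
    year = np.asarray(year)
    cols = [
        np.ones_like(pre),                      # beta0: intercept
        pre,                                    # beta1: preassessment score
        np.asarray(extensive, dtype=float),     # beta2: section
        (hs_bio == 1).astype(float),            # beta3: one year of HS biology
        (hs_bio == 2).astype(float),            # beta3: two years (AP biology)
        np.asarray(n_sci, dtype=float),         # beta4: other science courses
        (year == 2).astype(float),              # beta5: sophomore
        (year == 3).astype(float),              # beta5: junior
        (year == 4).astype(float),              # beta5: senior
    ]
    return np.column_stack(cols)

def fit_ols(X, y):
    """OLS coefficient estimates and their standard errors."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    n, p = X.shape
    sigma2 = resid @ resid / (n - p)            # residual variance
    cov = sigma2 * np.linalg.inv(X.T @ X)
    return beta, np.sqrt(np.diag(cov))
```

Because two students who differ only in section have design-matrix rows that differ only in the section column, their predicted scores differ by exactly the section coefficient, which is why the estimate of 2.51 reads as a difference of ∼2.5 percentage points "all other factors being held equal."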

| Regression coefficient | Estimate ± SE | p Value |
|---|---|---|
| Model intercept (β0) | 28.1 ± 3.6 | <0.001 |
| Preassessment score (β1) | 0.67 ± 0.06 | <0.001 |
| Section (reference level: Moderate) (β2) | 2.51 ± 1.23 | 0.045 |
| High school biology (reference level: None) (β3) | | |
| One year | −3.93 ± 2.38 | 0.10 |
| Two years (AP biology) | −2.98 ± 3.27 | 0.36 |
| Number of other science courses (β4) | 1.39 ± 0.64 | 0.031 |
| Year in university (reference level: Freshman) (β5) | | |
| Sophomore | 0.39 ± 1.92 | 0.84 |
| Junior | −2.25 ± 2.37 | 0.28 |
| Senior | 1.19 ± 3.43 | 0.73 |

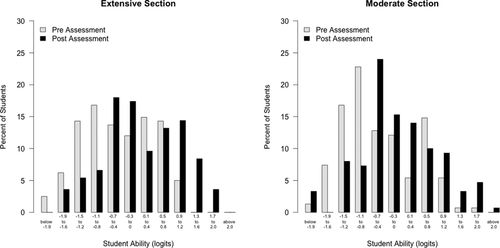

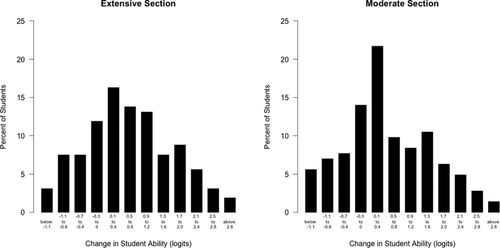

The IRT analyses of student response data supported the classical test theory analyses of the content assessment. We found that the mean preinstruction ability (the term “ability” is often used in IRT analyses to describe the performance of students on knowledge-based assessments) of students in the Extensive section was −0.49 ± 0.83 compared with −0.58 ± 0.83 for students in the Moderate section. Mean postinstruction abilities were 0.10 ± 0.92 and −0.11 ± 0.94 for students in the Extensive and Moderate sections, respectively. The distributions of pre- and postinstruction abilities show that the two sections were similar in preinstruction ability but that their postinstruction distributions differed more (Figure 3). Postinstruction, most students in the Extensive section were approximately evenly distributed throughout the range −0.7 < θ < 1.2, with fewer than 30% of students outside this range. In contrast, the distribution of postinstruction abilities in the Moderate section peaks sharply in the range −0.7 < θ < −0.4, with relatively fewer students in the higher range of abilities (0.5 < θ < 1.6). The histograms of individual ability gains (Figure 4) show how the distributions of gains differed between sections. A greater fraction of students in the Extensive section had changes in ability in the range 0.5 < Δθ < 2.0 compared with students in the Moderate section, whereas in the Moderate section small positive gains (0 < Δθ < 0.5) were more common. Negative gains and extremely large positive gains (Δθ > 2.0) were uncommon in both groups.

Figure 3. Student ability on the content assessment at the beginning of the class (preassessment) and the end of class (postassessment) of students in the Extensive section compared with students in the Moderate section. The content assessment was administered in class by Scantron. IRT was used to determine student ability. Logits are units of measurement that are used to report relative differences in abilities with respect to item difficulty. They occur at equal intervals.

Figure 4. Change in student ability, as measured by content assessment, of students in the Extensive section compared with students in the Moderate section. The content assessment was administered in class by Scantron. IRT was used to determine student ability. Logits are units of measurement that are used to report relative differences in abilities with respect to item difficulty. They occur at equal intervals.

The multiple regression model using the IRT data explained significantly more variance in postinstruction ability than an intercept-only model (F = 5.21, p < 0.001). We found that preinstruction ability, the number of other science courses taken at the university, and year in school (for juniors) significantly affected postinstruction ability, whereas section and the number of high school biology courses did not (Table 5). The regression coefficient of 0.18 for the section variable indicates that students in the Extensive section had higher postinstruction abilities than students in the Moderate section, all other factors being held equal, but this effect was not large enough to be statistically significant given the power of our analysis. The different results of the classical test theory and IRT analyses may be a statistical consequence of the use of IRT. As described earlier, a key advantage of IRT is that it allows us to simultaneously estimate properties of both people and items within a single data set. However, this advantage comes at a cost: a degree of statistical uncertainty is introduced into these estimates that is not present in classical test theory analyses. As a result, the use of IRT weakens the statistical power of the analysis. When the effect size one is trying to measure is near the threshold of what a classical test theory analysis could resolve given the study’s statistical power, the IRT analysis may be unable to show that the effect is statistically significant, even when it is real (a type II error). Taken together, these analyses suggest that students in the Extensive section had greater gains in the content knowledge probed by the assessment instrument than those in the Moderate section but that the true effect of the section variable may be smaller than the classical test theory analysis alone suggests.
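The power argument can be illustrated with the ratio of each section coefficient to its standard error. A normal (Wald) approximation gives two-sided p ≈ 0.041 for 2.51 ± 1.23 (Table 4) and p ≈ 0.10 for 0.18 ± 0.11 (Table 5), close to the reported 0.045 and 0.11; the reported values presumably come from t distributions with the models' residual degrees of freedom. The same arithmetic shows that, with a standard error of 0.11, a section coefficient would need to exceed roughly 1.96 × 0.11 ≈ 0.22 logits to reach p < 0.05. The helper names are hypothetical.

```python
import math

def approx_two_sided_p(estimate, se):
    """Two-sided p value from a normal approximation to estimate / SE."""
    z = abs(estimate / se)
    return math.erfc(z / math.sqrt(2))  # equals 2 * (1 - Phi(z))

def min_significant_effect(se, z_crit=1.96):
    """Smallest |estimate| reaching two-sided p < 0.05 for a given SE."""
    return z_crit * se

print(approx_two_sided_p(2.51, 1.23))   # classical test theory model
print(approx_two_sided_p(0.18, 0.11))   # IRT model
print(min_significant_effect(0.11))
```

This is only a back-of-the-envelope check under the normal approximation, not a formal power analysis.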

| Regression coefficient | Estimate ± SE | p Value |
|---|---|---|
| Model intercept (β0) | 0.22 ± 0.27 | 0.41 |
| Preinstruction ability, θpre (β1) | 0.31 ± 0.07 | <0.001 |
| Section (reference level: Moderate) (β2) | 0.18 ± 0.11 | 0.11 |
| High school biology (reference level: None) (β3) | | |
| One year | −0.04 ± 0.21 | 0.84 |
| Two years (AP biology) | −0.02 ± 0.29 | 0.96 |
| Number of other science courses (β4) | 0.16 ± 0.06 | 0.006 |
| Year in university (reference level: Freshman) (β5) | | |
| Sophomore | −0.29 ± 0.18 | 0.09 |
| Junior | −0.51 ± 0.22 | 0.02 |
| Senior | −0.03 ± 0.31 | 0.91 |

Assessment of Attitudes about Science and Learning Science

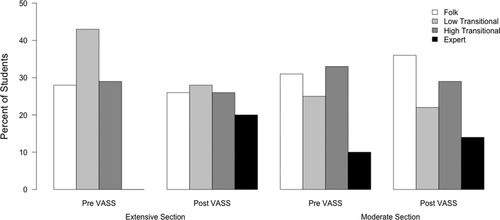

Students in both sections had statistically similar profiles when they took the VASS at the beginning of the class (t = 1.89, p = 0.06, Student’s t test), although the Extensive section had no students classified as “experts,” whereas 10% of the students in the Moderate section were classified as such (Figure 5). By the end of the class, however, fully 20% of the students in the Extensive section were classified as experts, and the percentage of students classified as low transitional dropped from 43% to 28%. There was very little change in the profile of the Moderate section. Students in the Extensive section had a significantly greater mean expert shift (0.40 ± 1.10) than students in the Moderate section (−0.02 ± 0.88; t = 3.23, p = 0.001).

Figure 5. VASS profiles of students in the Extensive section compared with students in the Moderate section. The VASS was administered online at the beginning (pre) and end (post) of the course.

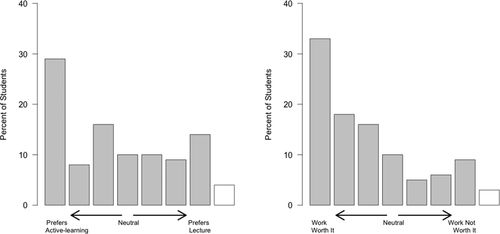

Students in the Extensive section generally had positive attitudes toward the student-centered pedagogies used in the class. Fifty-three percent of students reported that they preferred active-learning pedagogies to lecture, while 33% reported that they preferred lecture (the remaining students either liked both pedagogies equally or did not like either pedagogy; Figure 6). Also, 67% of the students in the Extensive section thought that the work they did outside class to prepare for the in-class activities was worth the time they put into it, while only 19% thought that the work was not worth their time (Figure 6).

Figure 6. Attitudes of students in the Extensive section about the active-learning strategies used in the class and the workload associated with them. The open bars at the right of each graph reflect the percent of students who answered “neither” for each question.

DISCUSSION

We investigated whether students in a highly structured, student-centered class (Extensive section) would have increased content knowledge and more sophisticated views about learning biology compared with students in a class with moderate use of student-centered strategies and a less structured context (Moderate section). Indeed, we found that students in the Extensive section had significantly higher exam scores and higher scores on the content postassessment (Table 4 and Figure 3), even though both the content and the depth of material were the same in the two sections. We also found that, at the end of the class, the students in the Extensive section had more expert attitudes toward learning biology compared with students in the Moderate section (Figure 5).

Why Did Students in the Extensive Section Gain More Content Knowledge Compared with Students in the Moderate Section?

In contrast to students in the Moderate section, students in the Extensive section consistently encountered content in a manner that promoted active, cooperative engagement with the material. For example, the instructor led the students in the Moderate section didactically through the worksheet activities, while students in the Extensive section completed the worksheet activities with their group members. The worksheet activities were carefully designed so that they first elicited preconceptions and then guided students through a logical progression of ideas, requiring students to make predictions, analyze data, and explain their reasoning (see the Supplemental Material for an example). By recording their preconceptions and then actively engaging in the worksheet activities, students in the Extensive section were better able to organize the content into a conceptual framework that built upon their prior ideas. Both the elicitation of prior knowledge and the construction of understanding are critical components of learning (NRC, 2000).

Active engagement with the worksheet activities was facilitated in the Extensive section by students working in assigned groups for the entire quarter, and grades on the worksheet activities were based on group performance. While studies have shown that incorporating group work in undergraduate science classes is beneficial (Springer et al., 1999; Johnson et al., 1998; Gaudet et al., 2010), implementing group work is complex (Gillespie et al., 2006; Borrego et al., 2013), especially group formation. Our presumption was that successful engagement with the worksheet activities would require a range of academic abilities and perspectives, so we intentionally formed groups based on criteria such as background in science, year in school, race, and gender. Using these student demographics helped us create diverse groups, with the expectation that this would maximize student achievement (Slavin, 1990; Flannery, 1994; Gerlach, 1994; Colbeck et al., 2000). We do not know whether the worksheet activities would be as effective if the incentive were shifted from the group to the individual or whether our worksheet activities would be as helpful to students if they performed them outside class as homework. There is recent evidence that what matters most is having students actively engage with activities that deepen conceptual understanding, whether they perform them in or out of class (Jensen et al., 2014). Our data are consistent with this evidence, since the students in the Moderate section encountered the worksheet activities but were guided through them by the instructor and did not work through them on their own.

Lecture was used in both sections, although it was used less in the Extensive section compared with the Moderate section (Figure 2). The different reasons for lecturing in the two sections were probably more important than the total time spent lecturing. In the Moderate section, in-class lecture was the primary mode of instruction, and the majority of the course content was delivered using this pedagogy. In the Extensive section, lecture was used primarily in response to student questions and confusions. During their review of the basic material at home, students posted questions and comments on a discussion page, which allowed the instructor to tailor lecture material to address the content about which students were least confident. This strategy is an example of just-in-time teaching (e.g., Novak et al., 1999), which can increase cognitive gains of students in large-enrollment biology classes (Marrs and Novak, 2004).

Another pedagogical difference between the two sections was the increased amount of formative assessment used in the Extensive section (Figure 1). Students in this section encountered low-stakes assessments several times during a module, and these assessments gave students regular feedback about their content knowledge. Our use of quizzes at the beginning of each module is consistent with evidence that frequent quizzes after learning material improve content understanding and retention (Karpicke and Roediger, 2008; Klionsky, 2008). Another technique we used, 2-min writes, has been linked to increased student learning gains in social science courses (Stead, 2005). There is other evidence that systematic formative assessment can be used effectively by instructors to improve student learning in undergraduate biology classes. Ebert-May et al. (2003) describe using formative assessment in a research-based manner to determine student understandings about the flow of energy and matter in ecosystems, and then using that information to refine instructional practice to address student misconceptions. Similarly, Maskiewicz et al. (2012) found that using active-learning activities targeted specifically to address students’ misconceptions, which were identified by diagnostic questions, led to improved student mastery of that content compared with instruction that did not incorporate active learning. There is also growing understanding of what instructors must do to help students assess their own understanding of course material. Strategies include setting clear standards for mastery of content, providing students with high-quality feedback about the current state of their learning, and giving them opportunities to “close the gap” between their current state and mastery (Sadler, 1998; Nicol and Macfarlane-Dick, 2006).

There was also explicit use of metacognition in the Extensive section, which is related to student self-assessment of understanding and has been identified as important for student learning (NRC, 2000; Schraw, 2002). Students in the Extensive section prepared content summaries at the beginning of each module and then revised them when the module was finished. This revision allowed students to reflect on how their understanding of the content had changed. There is increasing focus on incorporating metacognition into undergraduate biology classes (D’Avanzo, 2003; Tanner, 2012), and recent studies support the use of metacognition as a learning tool. Crowe et al. (2008) found that teaching students how to “Bloom” practice exam questions before answering them (i.e., determining the cognitive level of the different questions) gave students insight into the types of questions they were having the most difficulty with, which in turn gave them insight into their mastery of the course material. Similarly, incorporating writing assignments with metacognitive components (postexam corrections and peer-reviewed writing assignments) into a large-lecture biology class yielded better critical-thinking abilities and student learning (Mynlieff et al., 2014). In an undergraduate chemistry course, Sandi-Urena et al. (2011) found that students participating in class activities designed to improve metacognitive skills had increased awareness about metacognition and improved ability to solve higher-level chemistry problems.

How Did Students’ Attitudes toward Learning Biology Change in the Extensive Section Compared with the Moderate Section?

We found a significant shift in students’ views about learning science toward a more expert view in the Extensive section but not in the Moderate section (Figure 5). This shift suggests that the student-centered pedagogies we used in the Extensive section not only facilitated learning content but also positively influenced views about learning biology compared with the pedagogies used in the Moderate section. There is conflicting evidence about changes in students’ epistemological beliefs about science and learning science during instruction. For example, Semsar et al. (2011) found that nonmajors became more novice in their views about learning biology after instruction, while no change was observed in students enrolled in majors’ courses. In contrast, Ding and Mollohan (2015) found that biology majors became more novice in their views about biology in lecture-based biology courses, while nonmajors became more expert, although the shift for nonmajors was slight. Shifts toward more novice-like views have also been observed in other disciplines such as chemistry (Barbera et al., 2008) and physics (Adams et al., 2006). In our study, we observed a shift toward a more expert-like view in our nonmajors, but only in the section using extensive student-centered strategies. Using an active-learning approach, Finkelstein and Pollock (2005) observed a positive shift in physics students (both majors and nonmajors were enrolled in the course), although only among high-achieving students. These discrepant findings point to a need for further research in this area.

Student response to the student-centered pedagogies in the Extensive section was generally very positive. The majority of students reported that they preferred an activity-based pedagogy as opposed to traditional lecture and that the work required outside class for the Extensive section was worthwhile (Figure 6). This response is similar to that found by Armbruster et al. (2009) when they reformed their introductory biology course from lecture based to student centered. However, one-third of the students in the Extensive section of our study reported that they preferred lecture. Students often react unfavorably to pedagogies different from those to which they are accustomed (Seidel and Tanner, 2013). For example, when an undergraduate physics course was changed from a traditional lecture to a collaborative group-centered structure at the Massachusetts Institute of Technology, the students were so unhappy with the change that they circulated a petition objecting to the course structure and asked that a lecture section be reinstated (Breslow, 2010). Student dissatisfaction continued despite evidence of improved learning with the group-centered pedagogies (Dori and Belcher, 2005). In our study, we would argue that the increased learning gains and more expert views about learning science in students in the Extensive section far outweigh any negative perceptions held by the students.

Potential Limitations of the Study

Although we were able to control for many of the variables between the two sections (instructor, content, lab experience, time of year), we had to teach the two sections at different times of day, and it is possible that this is the cause of the differences we found in our study. However, we do not think that this is the case for the following reasons. First, students in the two sections performed equally on both the content and VASS preassessments, and our results suggest that differences in demographics between the two sections (i.e., number of freshmen in each section; Table 2) did not significantly affect postassessment scores (Table 4). Second, although students took the same exams at different times of day, we had a rigorous testing procedure that made it difficult for them to share an exam with a student in the other section. Finally, we have evidence that there was no difference between student achievement on exams in the two sections in previous quarters. The quarter before our study, we used the same exams for both sections (although different exams from those used in this study) and the same testing procedure for sections offered at the same times as those in our study. We found no differences in performance between students in the morning and noon sections. Thus, we think that the differences we observed between students in the two sections of our study were a function of pedagogical differences and not time of day.

CONCLUSIONS

We reformed our course in stages over several years, and unburdening the curriculum was an essential part of the process. Paring content down to specific learning targets meant that students in both the Moderate and Extensive sections had less breadth of content to process. In their seminal paper “Less Teaching, More Learning,” Luckie et al. (2012) found that less breadth meant significant learning gains for students in their introductory biology lab. Support for unburdening the curriculum also comes from Knight and Wood (2005) and Schwartz et al. (2008). We went a step further in the Extensive section by flipping the classroom, moving a manageable amount of basic lecture content out of the class to free up in-class time for active-learning strategies such as worksheet activities. There is recent evidence that flipping the classroom can lead to increased content knowledge in an undergraduate biology class (Gross et al., 2015), although there is also evidence that active-learning strategies can be equally effective if they are used outside the classroom (Jensen et al., 2014). There was no flipped component to our Moderate section, although the unburdening of the curriculum allowed time for some student-centered strategies and for didactic explanations of the same worksheet activities that students in the Extensive section completed in groups.

Overall, we conclude that the work required to shift from a Moderate course structure to an Extensive course structure was worth it. We found that using several active-learning strategies coupled with consistent formative assessment led to better student outcomes than using fewer active-learning strategies in a more teacher-centered classroom. Students in the Extensive section performed better on course exams and the content assessment and had more expert views about learning biology. However, we do not want to discourage faculty members from starting with active learning in a teacher-centered context. Implementing even a few active-learning strategies in a lecture-based course can lead to increased learning gains (e.g., Knight and Wood, 2005). In addition, we think that our success in the Extensive section would not have been possible if we had implemented the pedagogies all at once. The course that we currently teach is the product of multiple revisions and refinements over several years of offering the course, and the leap to creating a wholly student-centered course came only after practicing and developing active-learning strategies for a lecture-based class. It is also possible that there is a maximum amount of learning that can be achieved solely by increasing the number of active-learning strategies used in a class. Put another way, at some point instructor resources may be better allocated to improving aspects of a class other than the number of pedagogical strategies used. We think that future research to explore this possible ceiling effect will be important for helping instructors reform their teaching practices.

ACKNOWLEDGMENTS

We thank Lilane Dethier and Sarah Fraser for help with data collection and analysis, Stephanie Carlson for in-class facilitation help, and two anonymous reviewers for their thoughtful comments. Our work was partially funded by Western Washington University Research and Sponsored Programs.